How to improve the performance of your API

George Mulbah

As a backend developer, you're constantly vigilant about your API's performance. If your API is lagging or performing poorly, it can impact the user experience significantly. In today's article, we will delve into API performance and explore strategies to enhance it. As the saying goes, "No matter how good the system is, there is always room for improvement."

We will explore five strategies to improve your API performance:

Caching

Load Balancing

Asynchronous Processing

Pagination

Connection Pooling

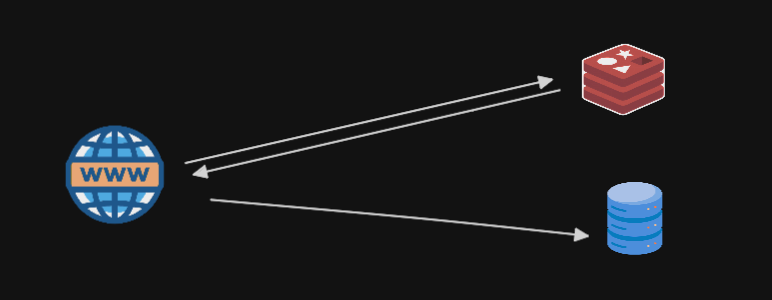

Let's Talk Caching:

The concept behind caching is straightforward: store frequently accessed data in a cache, enabling quicker access when requested. However, if the requested data is not found in the cache (a cache miss), it needs to be retrieved from the underlying database. While caching offers significant performance benefits, managing cache invalidation and determining an appropriate caching strategy present notable challenges.
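The hit-or-miss flow described above can be sketched roughly as follows. This is a minimal in-memory illustration, not a production cache: `fetch_user_from_db` and `CacheAside` are hypothetical names invented for this example, and the "database" is just a function that returns a dictionary.

```python
from typing import Callable, Dict


# Hypothetical stand-in for a slow database lookup (an assumption for this sketch).
def fetch_user_from_db(user_id: int) -> dict:
    return {"id": user_id, "name": f"user-{user_id}"}


class CacheAside:
    """Look in the cache first; fall back to the loader on a cache miss."""

    def __init__(self, loader: Callable[[int], dict]) -> None:
        self._loader = loader
        self._store: Dict[int, dict] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: int) -> dict:
        if key in self._store:
            # Cache hit: serve the stored value without touching the database.
            self.hits += 1
            return self._store[key]
        # Cache miss: load from the underlying source and remember the result.
        self.misses += 1
        value = self._loader(key)
        self._store[key] = value
        return value


cache = CacheAside(fetch_user_from_db)
cache.get(1)  # miss: loaded from the "database" and stored
cache.get(1)  # hit: served from memory
```

In a real API the dictionary would usually be replaced by a dedicated cache store, but the control flow stays the same.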

Cache Invalidation:

One of the primary challenges in caching is ensuring that cached data remains consistent with the source of truth (usually the database). Cache invalidation is the process of removing or updating cached data when the underlying data changes. Implementing effective cache invalidation mechanisms is crucial to prevent serving stale or outdated data to clients. Several strategies can be employed for cache invalidation, including:

Time-based Invalidation: Set an expiration time for cached data, after which it is considered stale and invalidated. This approach is suitable for data that doesn't change frequently and can be safely cached for a predetermined duration.

Event-based Invalidation: Utilize events or triggers to detect changes in the underlying data and invalidate corresponding cache entries. This approach ensures that cached data is promptly updated whenever changes occur, maintaining data consistency.
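The two invalidation approaches above could look something like this in practice. This is a sketch under stated assumptions: `TTLCache` is a hypothetical class written for this example, the injectable `clock` parameter exists only so that expiry can be demonstrated without waiting, and the "event" is simply a direct call to `invalidate` from whatever code path updates the data.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """In-memory cache with time-based expiry and explicit invalidation."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        # Record the moment at which this entry becomes stale.
        self._store[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Time-based invalidation: the entry has outlived its TTL.
            del self._store[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Event-based invalidation: call this when the source data changes.
        self._store.pop(key, None)


# A fake clock (an assumption for the demo) lets us move time forward by hand.
fake_now = [0.0]
products = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])

products.set("product:42", {"price": 10})
fake_now[0] = 61.0
expired = products.get("product:42")   # None, because the TTL has elapsed

products.set("product:42", {"price": 12})
products.invalidate("product:42")      # e.g. triggered by a price update
```

Time-based expiry bounds how stale an entry can get, while the explicit `invalidate` call removes it the moment a change is known.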

Choosing the Right Caching Strategy: Selecting an appropriate caching strategy depends on various factors, including the nature of the data, the frequency of updates, and performance requirements. Common caching strategies include:

Full-page Caching: Cache entire API responses or web pages to minimize server processing time and improve overall response times. This approach is suitable for static or relatively static content that doesn't change frequently.

Partial Caching: Cache specific parts of API responses or web pages that are computationally expensive or frequently accessed. By caching only relevant data or components, partial caching reduces overhead while still improving performance.

Distributed Caching: Utilize distributed caching solutions to store cached data across multiple servers or nodes, ensuring scalability and fault tolerance. Distributed caching improves performance by reducing the load on individual servers and facilitating horizontal scalability.

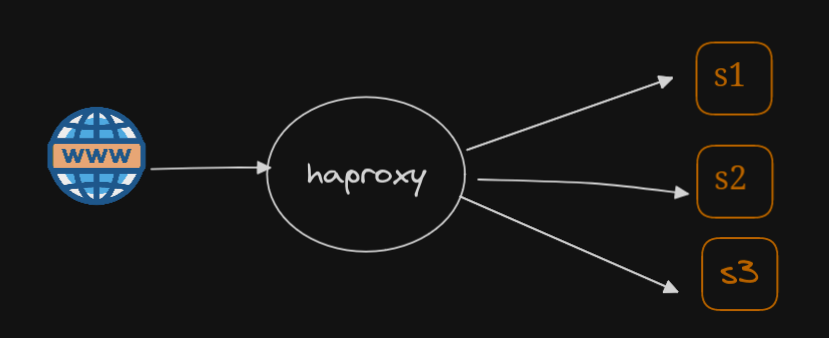

Scale Out and Load Balance if Needed

If one server instance isn't enough, you can scale your API out to multiple instances.

So what do I mean by this?

Scaling to multiple instances essentially involves replicating your API across several servers to distribute incoming requests effectively. However, to ensure efficient distribution of requests among these instances, you need a mechanism to manage the traffic flow. This is where a load balancer comes into play.

Load Balancer: A load balancer acts like a traffic officer, distributing incoming requests evenly across multiple server instances. By spreading the load across these instances, a load balancer helps optimize performance and ensures high availability of your application. It also improves the reliability of your infrastructure, because traffic can be routed away from an instance that fails.

However, it's important to note that load balancers work best in specific architectural scenarios. They are most effective when your application is stateless, meaning that each request can be handled independently without relying on the context of previous requests. Additionally, you rarely need to manage a load balancer yourself in serverless architectures, where the platform allocates resources and routes requests dynamically as needed.

Load balancers excel in environments where scaling horizontally—adding more instances to handle increased load—is straightforward. This typically involves adding more servers or containers to accommodate growing demand. Load balancers can seamlessly distribute incoming traffic across these dynamically scaled instances, ensuring optimal performance and reliability.
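To make the idea of distributing requests concrete, here is a minimal sketch of round-robin selection, one of the simplest ways a load balancer can spread traffic. `RoundRobinBalancer` and the backend addresses are hypothetical. Real load balancers also do health checks, connection handling, and more, none of which is shown here.

```python
from itertools import cycle
from typing import Iterator, List


class RoundRobinBalancer:
    """Hand out backends in a fixed rotation, one per incoming request."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends: Iterator[str] = cycle(backends)

    def next_backend(self) -> str:
        # Each call returns the next instance in the rotation.
        return next(self._backends)


# Hypothetical instance addresses, used only for illustration.
balancer = RoundRobinBalancer(["api-1:8000", "api-2:8000", "api-3:8000"])
assignments = [balancer.next_backend() for _ in range(6)]
```

Because any instance may receive any request, this only works cleanly when the API is stateless, as noted above.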

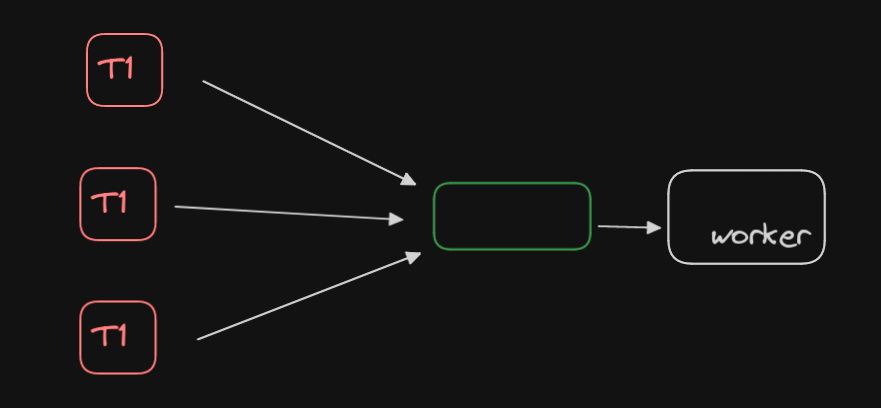

Asynchronous Processing

At times, finishing all the work for a request before responding isn't feasible. For instance, consider an online app-building engine like Expo, where a single build request can take far longer than a client should wait on an open connection. In such cases, it's often pragmatic to set the work aside and complete it later.

Enter async processing—a valuable technique that offers a workaround. Instead of attempting to address all requests immediately, async processing allows you to acknowledge clients' requests promptly while indicating that they are queued for processing. Subsequently, your system can methodically handle these requests one by one, ensuring that each receives due attention. Once processed, the results are communicated back to the client.

This approach grants your application server the opportunity to catch its breath, optimizing its performance by managing the workload more efficiently.
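The acknowledge-now, process-later flow can be sketched with nothing more than a queue and a background worker. This is an illustration under assumptions: `submit`, `worker`, `jobs`, and `results` are hypothetical names, the "work" is just squaring a number, and a real system would more likely use a dedicated message queue and persistent storage for results.

```python
import queue
import threading
import uuid
from typing import Dict

jobs: queue.Queue = queue.Queue()
results: Dict[str, dict] = {}


def submit(n: int) -> dict:
    """Accept a request immediately and queue the real work for later."""
    job_id = str(uuid.uuid4())
    results[job_id] = {"status": "queued"}
    jobs.put((job_id, n))
    # This is the prompt acknowledgement, similar in spirit to HTTP 202 Accepted.
    return {"job_id": job_id, "status": "queued"}


def worker() -> None:
    """Process queued jobs one by one in the background."""
    while True:
        job_id, n = jobs.get()
        results[job_id] = {"status": "done", "result": n * n}
        jobs.task_done()


threading.Thread(target=worker, daemon=True).start()

ack = submit(12)                 # returns right away with a job id
jobs.join()                      # for the demo only: wait until the queue drains
final = results[ack["job_id"]]   # what a client would see when it checks back
```

The client keeps the `job_id` from the acknowledgement and uses it to retrieve the result once processing has finished.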

However, it's essential to recognize that async processing isn't universally applicable. While it offers significant benefits in certain scenarios, it may not be suitable for every requirement or use case. Hence, it's crucial to evaluate the specific needs of your application before implementing async processing as a solution.

Pagination

If your API returns a large number of records, implementing pagination is crucial. Pagination involves limiting the number of records per request, which helps improve the response time of your API for consumers. By breaking down the data into manageable chunks, pagination ensures that the client receives data efficiently without overwhelming the server. Additionally, pagination allows for smoother user experiences by enabling users to navigate through large datasets more easily. When implementing pagination, consider factors such as the optimal page size and how to handle requests for specific pages efficiently to ensure optimal performance.
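As a rough illustration of page-based pagination, the sketch below slices an in-memory list and caps the page size. The `paginate` function, its parameter names, and the default limits are assumptions made for this example. In a real API the limit and offset would normally be pushed down into the database query instead of slicing a list.

```python
from typing import Any, Dict, Sequence


def paginate(items: Sequence[Any], page: int, page_size: int = 20,
             max_page_size: int = 100) -> Dict[str, Any]:
    """Return one page of items plus metadata the client can navigate with."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    # Clamp the page size so a client cannot request an unbounded response.
    page_size = max(1, min(page_size, max_page_size))
    total = len(items)
    total_pages = max(1, -(-total // page_size))  # ceiling division
    start = (page - 1) * page_size
    return {
        "items": list(items[start:start + page_size]),
        "page": page,
        "page_size": page_size,
        "total_items": total,
        "total_pages": total_pages,
        "has_next": page < total_pages,
    }


records = list(range(1, 96))  # 95 example records
first = paginate(records, page=1, page_size=20)
last = paginate(records, page=5, page_size=20)
```

Returning `total_pages` and `has_next` alongside the data lets the client decide whether to request another page without guessing.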

Connection Pooling

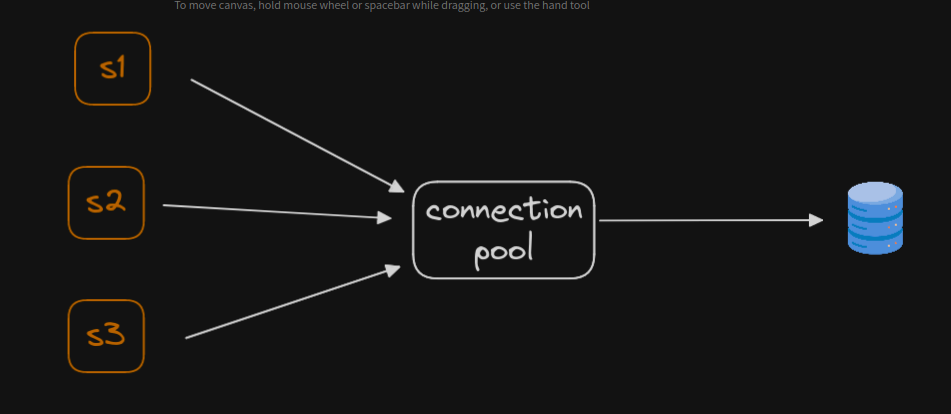

If you've worked with microservices, you're likely familiar with the challenge of ensuring each service can reliably connect to the database. Establishing a new database connection every time an API needs to fetch data is resource-intensive and can harm performance. That's where connection pooling comes into play.

Connection pooling involves setting up a pool of reusable database connections that can be shared across requests. By reusing existing connections rather than creating new ones for each request, you can significantly reduce the overhead associated with establishing connections.

In highly concurrent systems, the benefits of connection pooling become even more pronounced. Without pooling, the system would be bogged down by the overhead of constantly opening and closing connections for each incoming request. With pooling, resources are used more efficiently, leading to improved performance and scalability.

However, implementing connection pooling isn't without its complexities. Proper configuration and management are essential to ensure that the pool size is appropriate for the workload and that connections are efficiently utilized without being overburdened. Additionally, monitoring and adjusting the pool size dynamically based on traffic patterns can further optimize performance.
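A fixed-size pool of reusable connections might be sketched like this. The example uses Python's built-in `sqlite3` purely so that it runs anywhere. `ConnectionPool`, its `size` and `timeout` parameters, and the `created` counter are hypothetical choices for this illustration, and most database drivers and frameworks ship their own pooling that you would use instead.

```python
import queue
import sqlite3
from contextlib import contextmanager
from typing import Iterator


class ConnectionPool:
    """Open a fixed number of connections once and lend them out per request."""

    def __init__(self, database: str, size: int = 5) -> None:
        self._pool: queue.Queue = queue.Queue(maxsize=size)
        self.created = 0
        for _ in range(size):
            # check_same_thread=False allows a connection to be used by
            # whichever thread borrows it from the pool.
            self._pool.put(sqlite3.connect(database, check_same_thread=False))
            self.created += 1

    @contextmanager
    def connection(self, timeout: float = 5.0) -> Iterator[sqlite3.Connection]:
        # Blocks until a connection is free; raises queue.Empty on timeout.
        conn = self._pool.get(timeout=timeout)
        try:
            yield conn
        finally:
            # Return the connection to the pool instead of closing it.
            self._pool.put(conn)


pool = ConnectionPool(":memory:", size=2)
for _ in range(10):
    # Ten "requests" share the same two connections.
    with pool.connection() as conn:
        conn.execute("SELECT 1").fetchone()
```

The `size` argument is the knob discussed above: too small and requests wait for a free connection, too large and the database carries more open connections than it needs.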

In summary, while connection pooling may seem like a subtle aspect of database management, its impact on performance, especially in highly concurrent systems, can be significant. By intelligently managing database connections, you can ensure that your microservices or API modules operate efficiently and scale effectively to meet the demands of your application.

So, there you have it! These are the five fundamental methods to enhance your API performance. If you found this newsletter helpful, don't hesitate to subscribe and stay in the loop with all the latest developments and insights. Keep optimizing and building amazing APIs! Until I see you in another read, peace be with you.
