Neural Networks: A Step-by-Step Guide

Prakhar Kumar

Neural networks are the backbone of deep learning, enabling machines to learn from data and make predictions. This guide walks through neural networks step by step, explaining each component and closing with a worked example.

What is a Neural Network?

A neural network is a computational model inspired by the human brain's neural structure. It comprises interconnected layers of artificial neurons that process and transform data to perform tasks such as classification, regression, and pattern recognition.

Components of a Neural Network

1. Neurons (Nodes)

Neurons are the basic units of a neural network. Each neuron receives input signals, applies a transformation using weights and biases, and produces an output signal using an activation function.
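To make the description above concrete, here is a minimal sketch of a single neuron in plain Python. The input values, weights, and bias are made up for illustration, and the sigmoid is just one possible choice of activation function.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Illustrative values (assumptions, not from a real model)
inputs = [0.5, 0.1, 0.9]
weights = [0.4, -0.6, 0.2]
bias = 0.1

output = neuron_output(inputs, weights, bias)
print(output)
```

The neuron first computes z = 0.5*0.4 + 0.1*(-0.6) + 0.9*0.2 + 0.1 = 0.42 and then applies the sigmoid, so the output always lands between 0 and 1.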

2. Layers

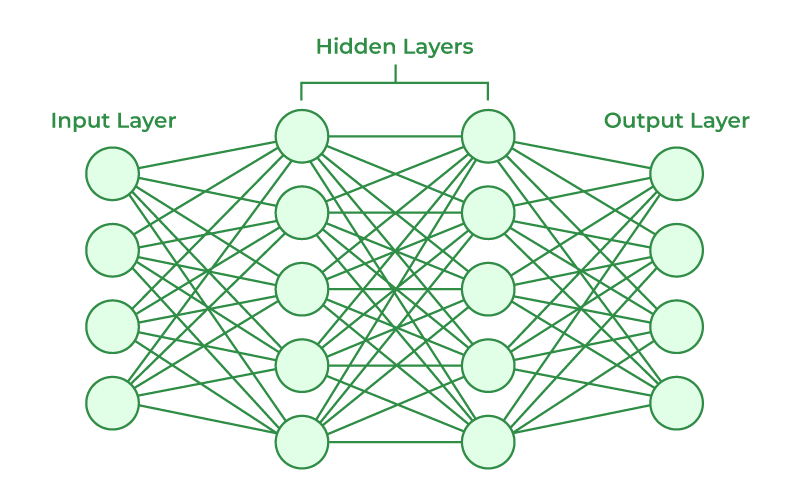

Neurons are organized into layers within a neural network:

Input Layer: Receives input data features.

Hidden Layers: Intermediate layers that process data, extract features, and learn representations.

Output Layer: Produces the final output (e.g., classification probabilities or regression predictions).

3. Weights and Biases

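Weights determine the strength of the connections between neurons: the larger a weight's magnitude, the more the corresponding input influences the neuron's output. A bias is a constant added to a neuron's weighted sum, which shifts the point at which the neuron activates. Both are learned during training.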

4. Activation Functions

Activation functions introduce non-linearity to neural networks, enabling them to learn complex relationships and make non-linear predictions. Without them, any stack of layers would collapse into a single linear transformation. Common activation functions include ReLU, Sigmoid, and Tanh.
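The three functions named above are short enough to write out directly. The sketch below uses only the Python standard library and prints each function at a few sample points chosen for illustration.

```python
import math

def relu(z):
    """Pass positive values through unchanged, clamp negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Map any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Map any real number into (-1, 1)."""
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), sigmoid(z), tanh(z))
```

ReLU is a common default for hidden layers, while Sigmoid suits a binary output because its result can be read as a probability.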

Building a Neural Network: Step-by-Step Explanation

Let's illustrate the process of building a simple feedforward neural network for binary classification using the MNIST dataset of handwritten digits. MNIST contains all ten digits, so the task is made binary by keeping only the images of 0 and 1.

Step 1: Preprocessing Data

Load the MNIST dataset and preprocess images into numerical arrays.

Normalize pixel values (0-255) to a range between 0 and 1.

Split data into training and testing sets.
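The preprocessing steps above can be sketched in plain Python on stand-in data. The helper names `normalize` and `train_test_split` are hypothetical, and the tiny 4-pixel "images" only stand in for real 28x28 MNIST digits. Keras ships MNIST already divided into training and testing sets, so the manual split here is purely illustrative.

```python
import random

def normalize(image):
    """Scale a flat list of 0-255 pixel values to the range 0-1."""
    return [pixel / 255.0 for pixel in image]

def train_test_split(samples, labels, test_fraction=0.2, seed=0):
    """Shuffle the data, then hold out a fraction of it for testing."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)
    n_test = int(len(indices) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def pick(idx):
        return [samples[i] for i in idx], [labels[i] for i in idx]

    return pick(train_idx), pick(test_idx)

# Stand-in data: ten tiny 4-pixel "images" with alternating 0/1 labels
images = [[0, 64, 128, 255] for _ in range(10)]
labels = [i % 2 for i in range(10)]

scaled = [normalize(img) for img in images]
(X_train, y_train), (X_test, y_test) = train_test_split(scaled, labels)
print(len(X_train), len(X_test))
```

Shuffling before splitting matters when the data is ordered by label, since otherwise the test set could end up containing only one class.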

Step 2: Define the Neural Network Architecture

Choose the number of layers and neurons per layer.

Define activation functions for hidden layers and output layer (e.g., ReLU for hidden layers and Sigmoid for binary classification).
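Choosing the number of layers and neurons also fixes how many weights and biases the network has to learn. As a rough sketch, the hypothetical helper below counts them for a fully connected network, using the layer sizes of the Keras example later in this guide (784 inputs, 128 hidden neurons, 1 output).

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection
        total += n_out         # one bias per neuron
    return total

# 28x28 images flattened to 784 inputs, one hidden layer of 128, one output neuron
architecture = [784, 128, 1]
print(count_parameters(architecture))
```

For this architecture the hidden layer contributes 784*128 + 128 = 100,480 parameters and the output layer 128 + 1 = 129, for 100,609 in total.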

Step 3: Initialize Weights and Biases

Initialize weights and biases randomly or using techniques like Xavier initialization.

Choose the training hyperparameters: learning rate, batch size, and optimization algorithm (e.g., stochastic gradient descent).
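As one possible reading of this step, the sketch below draws weights with the uniform variant of Xavier (Glorot) initialization, which samples from the range [-limit, limit] with limit = sqrt(6 / (n_in + n_out)). The function name `xavier_uniform`, the seed, and the hyperparameter values are assumptions made for illustration.

```python
import math
import random

def xavier_uniform(n_in, n_out, rng):
    """Return an n_out x n_in weight matrix drawn from U(-limit, limit)."""
    limit = math.sqrt(6.0 / (n_in + n_out))
    return [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]

rng = random.Random(42)               # fixed seed so the sketch is repeatable
weights = xavier_uniform(784, 128, rng)
biases = [0.0] * 128                  # biases are commonly started at zero

# Hyperparameters; these particular values are illustrative assumptions
learning_rate = 0.01
batch_size = 32

print(len(weights), len(weights[0]), len(biases))
```

Scaling the range by the layer's fan-in and fan-out keeps the size of the signals roughly stable from layer to layer at the start of training.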

Step 4: Forward Propagation

Perform forward propagation to compute outputs of each layer.

Apply activation functions to intermediate layer outputs.
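Forward propagation can be sketched as repeated application of one layer function. The network below is deliberately tiny (2 inputs, 2 hidden neurons, 1 output) and its weights are hand-picked for illustration, not learned.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of weights feeds one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

# Hand-picked parameters (assumptions): 2 inputs -> 2 hidden neurons -> 1 output
x = [1.0, 2.0]
W1, b1 = [[0.5, -1.0], [0.25, 0.25]], [0.0, 0.5]
W2, b2 = [[1.0, -1.0]], [0.0]

hidden = dense(x, W1, b1, relu)          # hidden layer with ReLU
output = dense(hidden, W2, b2, sigmoid)  # output layer with Sigmoid
print(hidden, output)
```

The first hidden neuron computes -1.5 before activation, so ReLU clamps it to 0, while the second computes 1.25 and passes through unchanged. The output neuron then turns the hidden values into a single probability.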

Step 5: Compute Loss

Use a suitable loss function (e.g., binary cross-entropy) to compute the difference between predicted and actual outputs.
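Binary cross-entropy for one example with label y and predicted probability p is -(y*log(p) + (1 - y)*log(1 - p)), averaged over the batch. A minimal sketch follows; the clipping constant `eps` is an assumption added to avoid taking log(0), and the sample predictions are made up.

```python
import math

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over a batch of labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so log(0) never occurs
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)

# Confident, mostly correct predictions versus poor ones (illustrative values)
good = binary_cross_entropy([1, 0, 1], [0.9, 0.1, 0.8])
bad = binary_cross_entropy([1, 0, 1], [0.2, 0.7, 0.4])
print(good, bad)
```

The loss is small when the predicted probabilities sit close to the true labels and grows quickly as confident predictions turn out to be wrong.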

Step 6: Backpropagation and Gradient Descent

Perform backpropagation to calculate gradients of the loss with respect to weights and biases.

Update weights and biases using gradient descent to minimize the loss.
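Full backpropagation applies the chain rule through every layer. As a simplified sketch, the code below performs one gradient descent update for a single sigmoid neuron trained with binary cross-entropy, where the gradients reduce to dL/dw_i = (p - y) * x_i and dL/db = p - y. The starting weights, the training example, and the learning rate are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, w, b):
    """Forward pass of a single sigmoid neuron."""
    return sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)

def loss(y, p):
    """Binary cross-entropy for one example."""
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def gradient_step(x, y, w, b, learning_rate):
    """One gradient descent update: move each parameter against its gradient."""
    error = predict(x, w, b) - y  # this is dL/dz for sigmoid + cross-entropy
    new_w = [wi - learning_rate * error * xi for wi, xi in zip(w, x)]
    new_b = b - learning_rate * error
    return new_w, new_b

x, y = [1.0, 2.0], 1
w, b = [0.0, 0.0], 0.0

before = loss(y, predict(x, w, b))
w, b = gradient_step(x, y, w, b, learning_rate=0.1)
after = loss(y, predict(x, w, b))
print(before, after)
```

After the update the loss on this example is lower than before, which is exactly what a step in the direction of the negative gradient is meant to achieve.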

Step 7: Training and Evaluation

Iterate through training data in batches, updating weights and biases after each batch.

Evaluate the model on the testing data, which it has not seen during training, to assess its performance (e.g., accuracy, precision, recall).
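The two ideas in this step, iterating in batches and scoring predictions, can be sketched independently of any framework. The helper names `batches` and `classification_metrics` are hypothetical, and the labels and predictions are made up for illustration.

```python
def batches(samples, labels, batch_size):
    """Yield consecutive (samples, labels) slices of at most batch_size items."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size], labels[start:start + batch_size]

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for 0/1 labels, treating 1 as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Made-up labels and predictions, purely for illustration
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

batch_sizes = [len(xb) for xb, _ in batches(list(range(8)), y_true, batch_size=3)]
metrics = classification_metrics(y_true, y_pred)
print(batch_sizes, metrics)
```

With eight samples and a batch size of three, the last batch is smaller than the others. Accuracy alone can mislead on imbalanced data, which is why precision and recall are usually reported alongside it.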

Example: Binary Classification of Handwritten Digits

Let's implement the above steps using Python and TensorFlow/Keras to build and train a neural network for classifying handwritten digits as 0 or 1 from the MNIST dataset.

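```python
import tensorflow as tf
from tensorflow.keras import layers, models

# Load MNIST and scale pixel values from 0-255 to 0-1
(X_train, y_train), (X_test, y_test) = tf.keras.datasets.mnist.load_data()
X_train, X_test = X_train / 255.0, X_test / 255.0

# Keep only the digits 0 and 1 so the task is binary
train_mask, test_mask = y_train < 2, y_test < 2
X_train, y_train = X_train[train_mask], y_train[train_mask]
X_test, y_test = X_test[test_mask], y_test[test_mask]

# Define the neural network architecture
model = models.Sequential([
    layers.Flatten(input_shape=(28, 28)),  # Input layer: flattens each 28x28 image into 784 values
    layers.Dense(128, activation='relu'),  # Hidden layer with ReLU activation
    layers.Dense(1, activation='sigmoid')  # Output layer with Sigmoid activation for binary classification
])

# Compile the model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Train the model, reporting loss and accuracy on the test set after each epoch
model.fit(X_train, y_train, epochs=10, batch_size=32, validation_data=(X_test, y_test))

# Evaluate the model
test_loss, test_accuracy = model.evaluate(X_test, y_test)
print('Test Accuracy:', test_accuracy)
```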

Conclusion

Neural networks are versatile and powerful tools in machine learning, capable of solving a wide range of tasks. By understanding their components, architecture, and the step-by-step process of building and training a neural network, you gain a solid foundation to explore more complex models and applications in deep learning.
