Building Integrations with the Oracle DB APEX & ORDS - Part 1

Jon Dixon

Jon Dixon

Introduction

There are many ways to integrate data between two systems. One way to start a lively conversation / fight between two developers is to ask them how they would move some data between system A and system B.

There are also many Integration Platform as a Service (iPaaS) solutions. These include Oracle Integration Cloud (OIC), Mulesoft, Jitterbit, and Dell Boomi. These solutions offer pre-built connectors, data mapping, orchestration, API management, multiple transportation protocols, error-handling capabilities, etc. These features do not, however, come without a significant cost. The cost relates to the monthly subscription fees and hiring highly paid engineers to build and maintain your integrations. Don't get me wrong, in many situations, utilizing one of these iPaaS solutions is the right thing to do, especially for larger companies with complex integrations.

Common Integration Patterns

To make my case for the RAD stack as an integration platform, I will examine three common integration patterns and see how APEX, ORDS, and the Oracle Database can be used to implement them. Each blog post in the series will cover one of the patterns listed below.

Fetching Data from Other Systems (this post)

Receiving Data from Other Systems

Sending Data to Other Systems

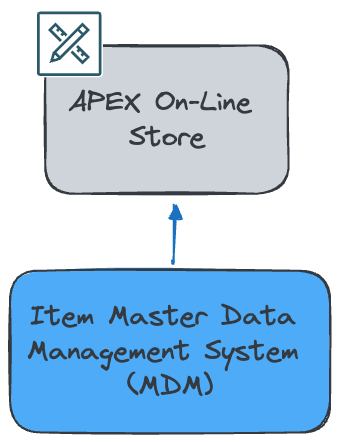

Use Case

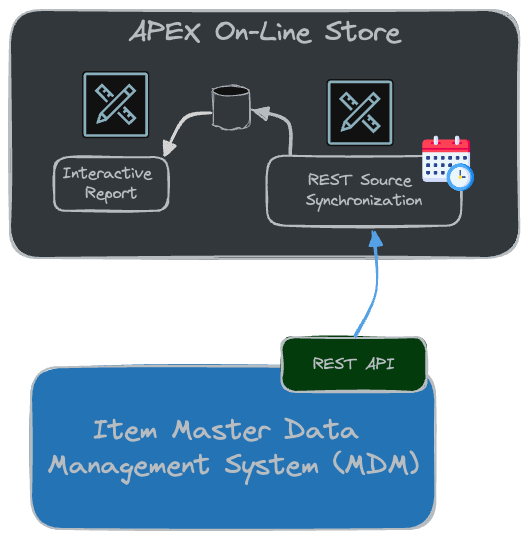

I designed this use case to illustrate these patterns and provide context throughout the series of blog posts. The use case centers around a Master Data Management (MDM) system that maintains an organization's inventory items. This system acts as the source of truth for the organization's inventory items. We also have an online store, which was developed in APEX. The online store must be able to access the latest information on items from the MDM system.

The MDM System:

Stores a total of 10,000 items.

Has REST Services Secured using OAuth2 Client Credentials.

Has a GET REST API to fetch Items, which returns JSON. The service supports pagination, allowing up to 500 items per page.

Fetching Data from Other Systems

Let's start the series by reviewing one of the most common integration patterns.

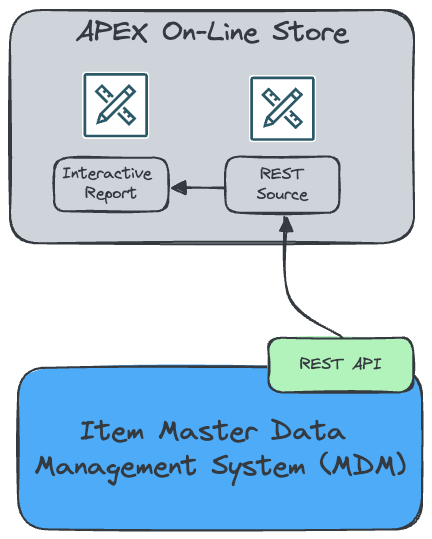

We often need to fetch data from other systems to process it locally or display it in our APEX Applications. Let's look at three approaches for accessing remote data over REST. Following our use case, we must provide our APEX Online Ordering App users with an Interactive Report (IR) showing items from the MDM system (Items Report).

The following sections describe three approaches to handle this scenario.

Real-Time Via REST Data Source

Sync to a Local Table Using REST Data Source

Sync Changes to a Local Table using an APEX Automation and PL/SQL Code

1. Real-Time Via REST Data Source

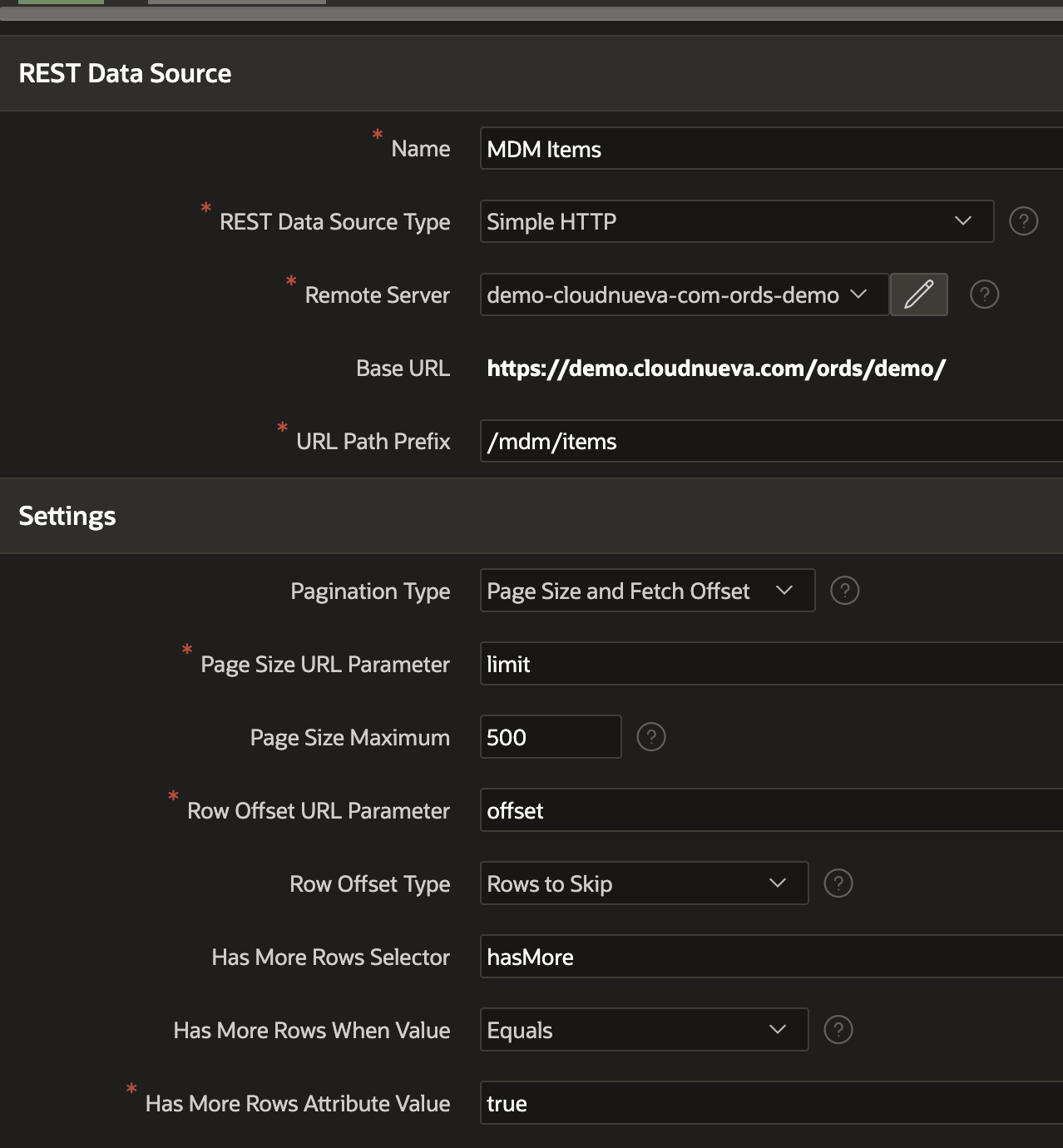

A great way to display remote data in our APEX applications in real-time is to use APEX REST Data Sources. REST Data Sources provide a low-code approach for configuring calls to a remote REST API. REST Data Sources store everything APEX needs to know to authenticate and execute a REST API and parse the results. In addition, REST Data Sources can be used just about anywhere you can reference a local database table. This includes Reports, List of Values, and Charts.

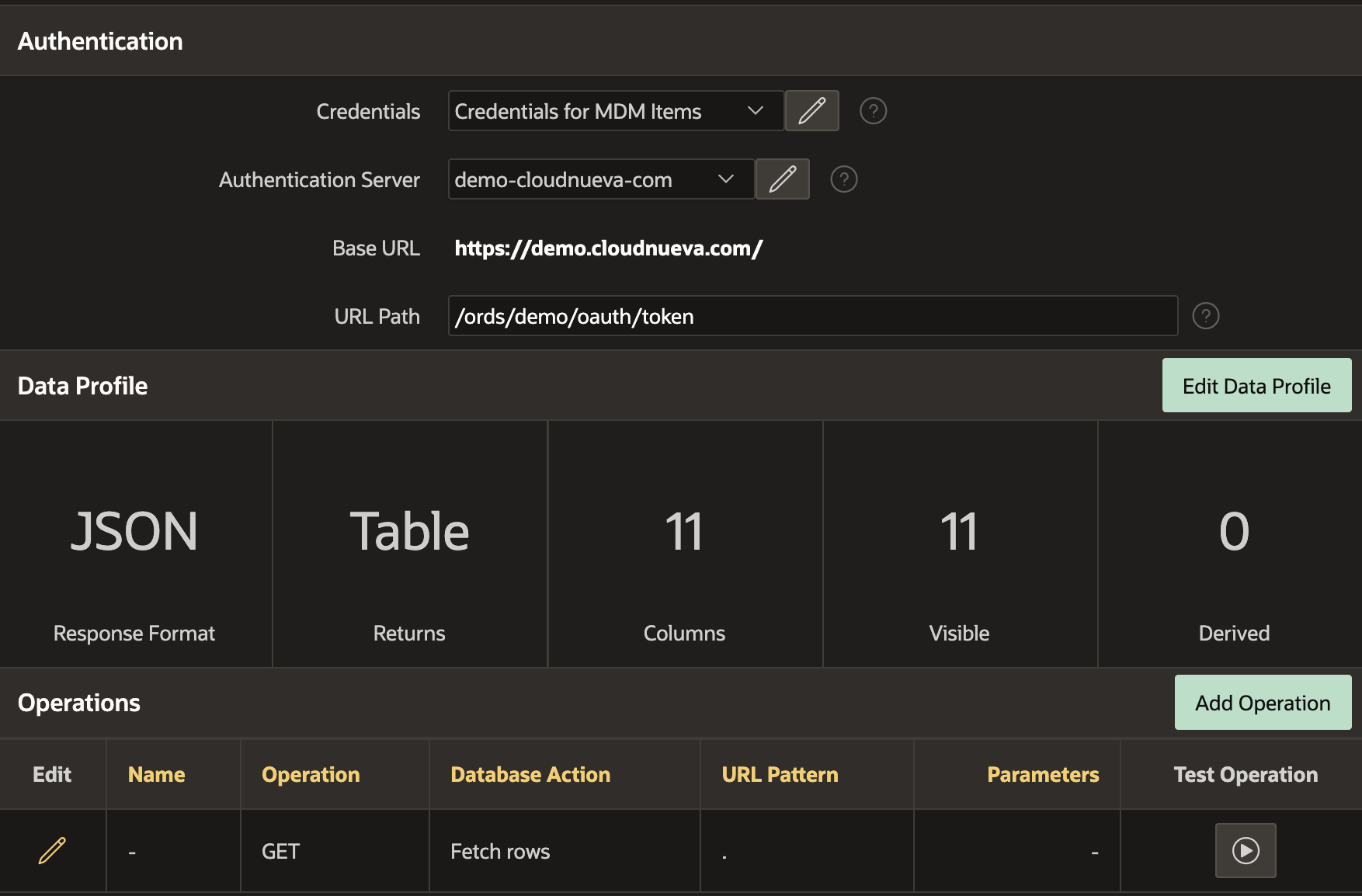

We must create a REST Data Source on the MDM Items REST API to build our Items Report.

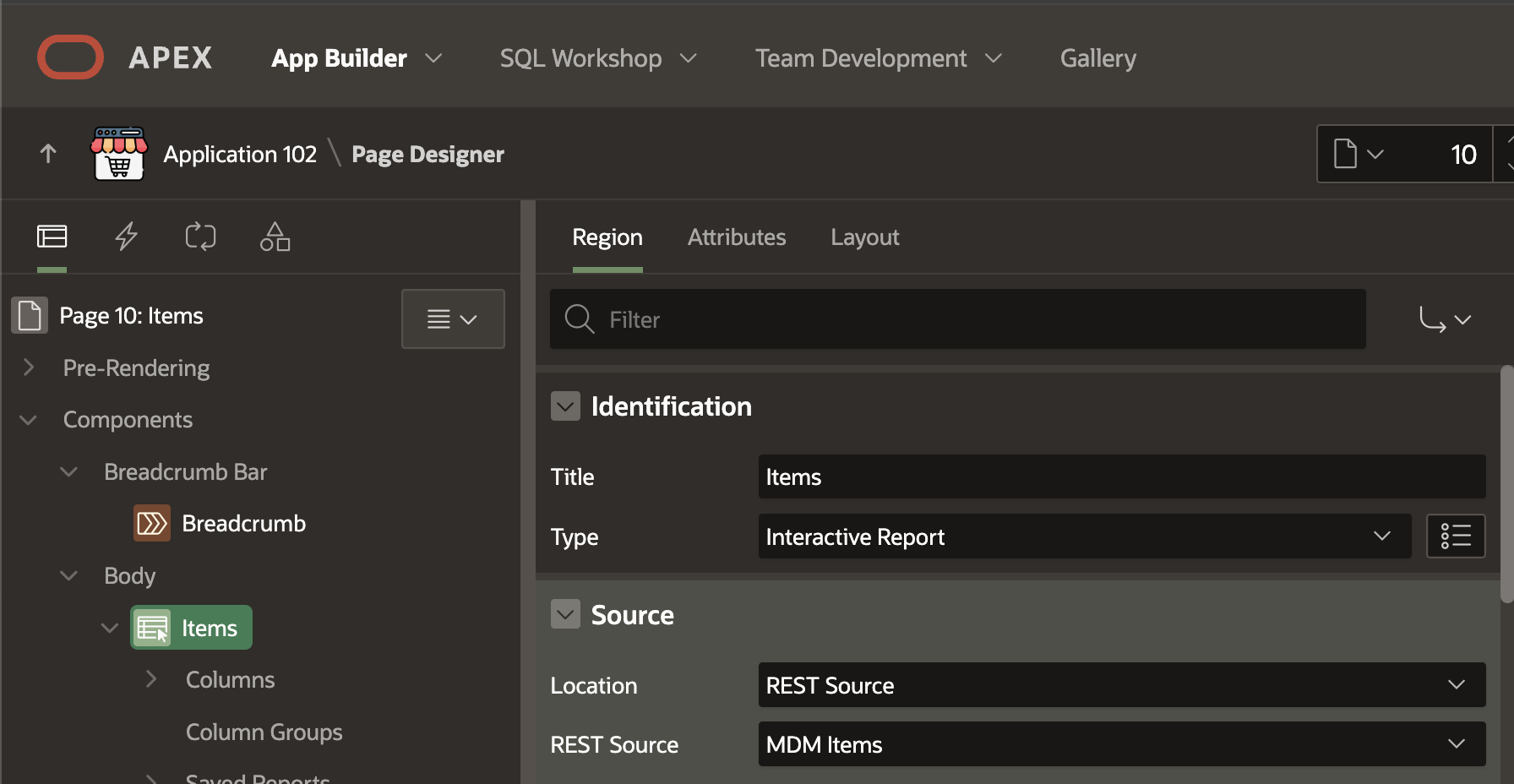

Then we reference the REST Data Source in the APEX Interactive Report:

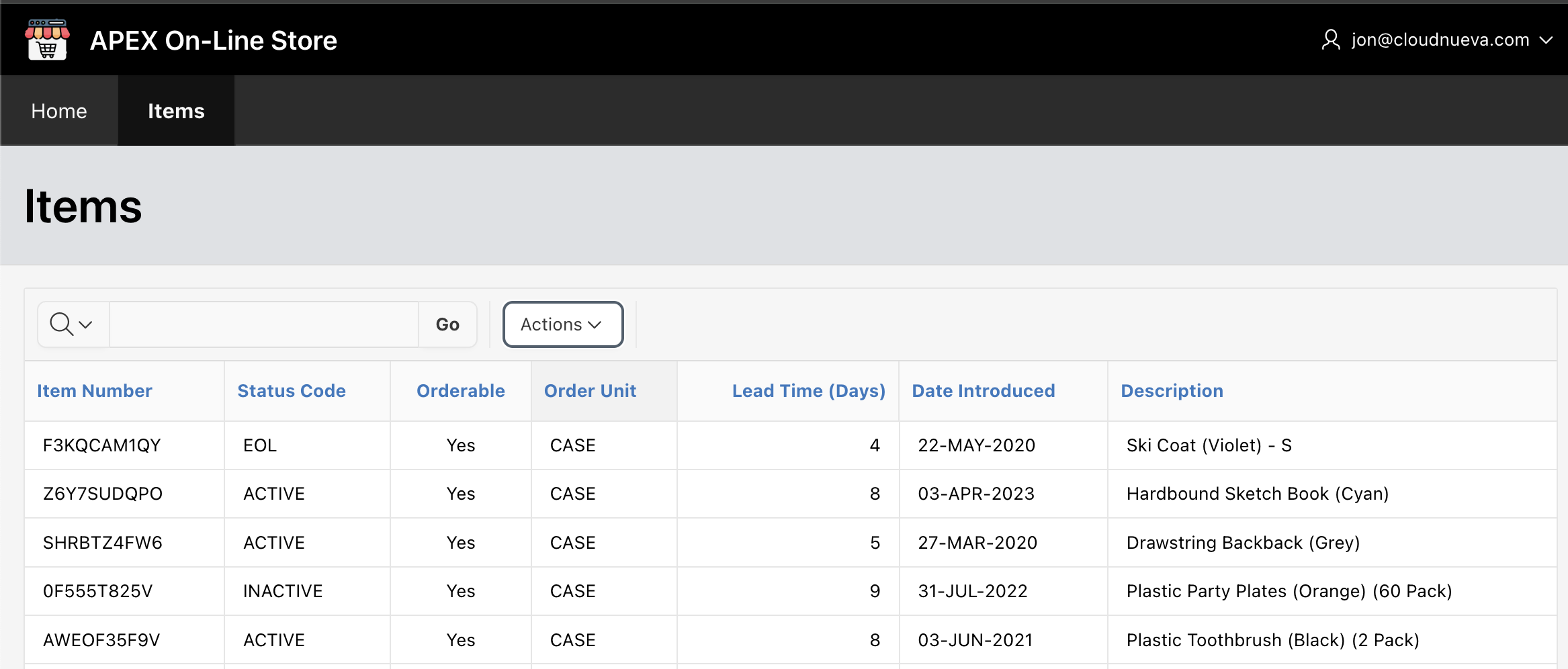

When we run the report, we see items pulled from the MDM System in real-time. Wow, that was easy, and the page loaded in less than a second!

What about Search?

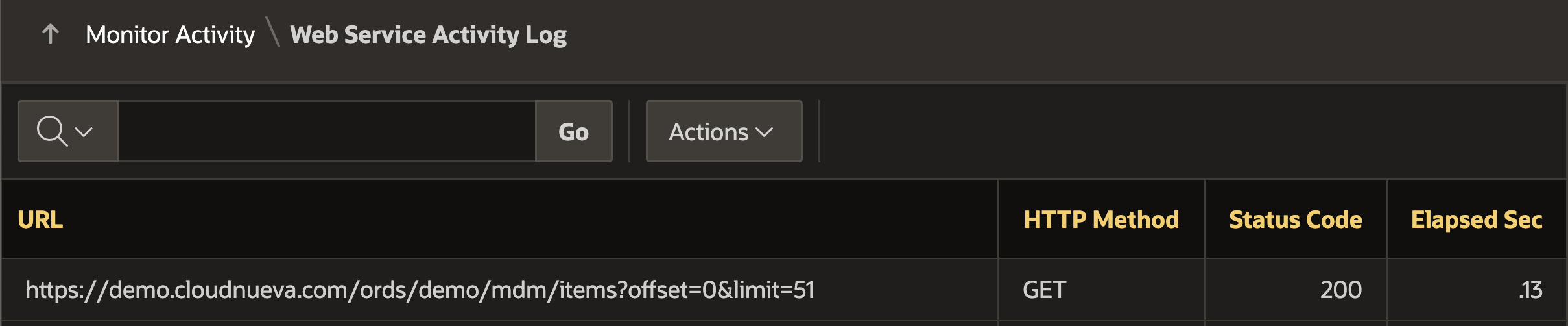

When the page was rendered, APEX checked the 'Rows Per Page' setting in the Interactive Report, which was set to 50, and then called the MDM REST End Point to request just the first 50 records. We can verify this by checking the 'Web Service Activity Log' under 'Monitor Activity'.

This is perfectly reasonable; we only need the first 50 rows, so APEX only got the first 50 rows.

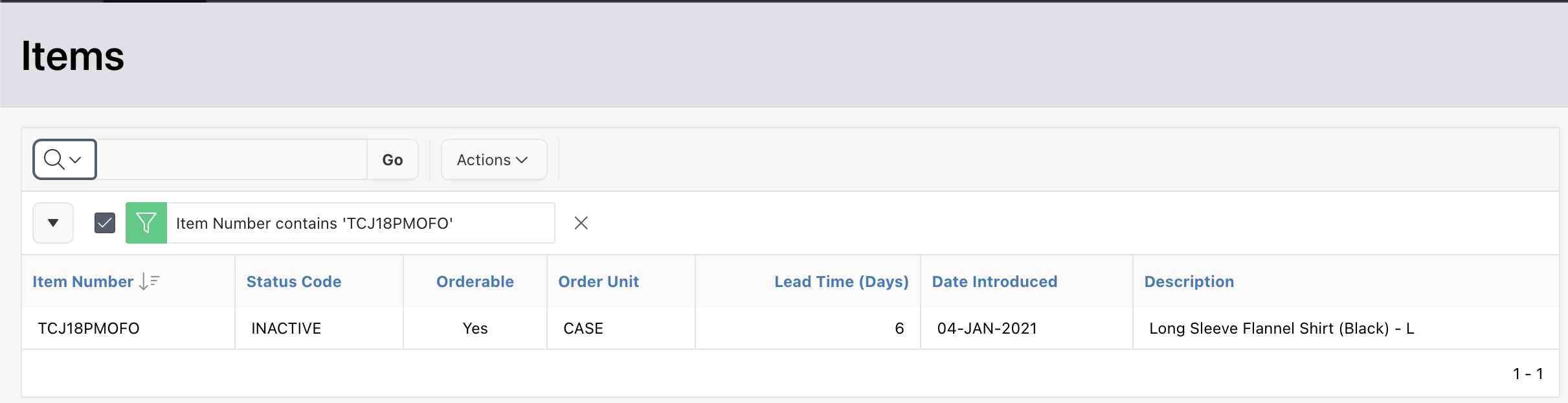

What happens if we search for a specific item?

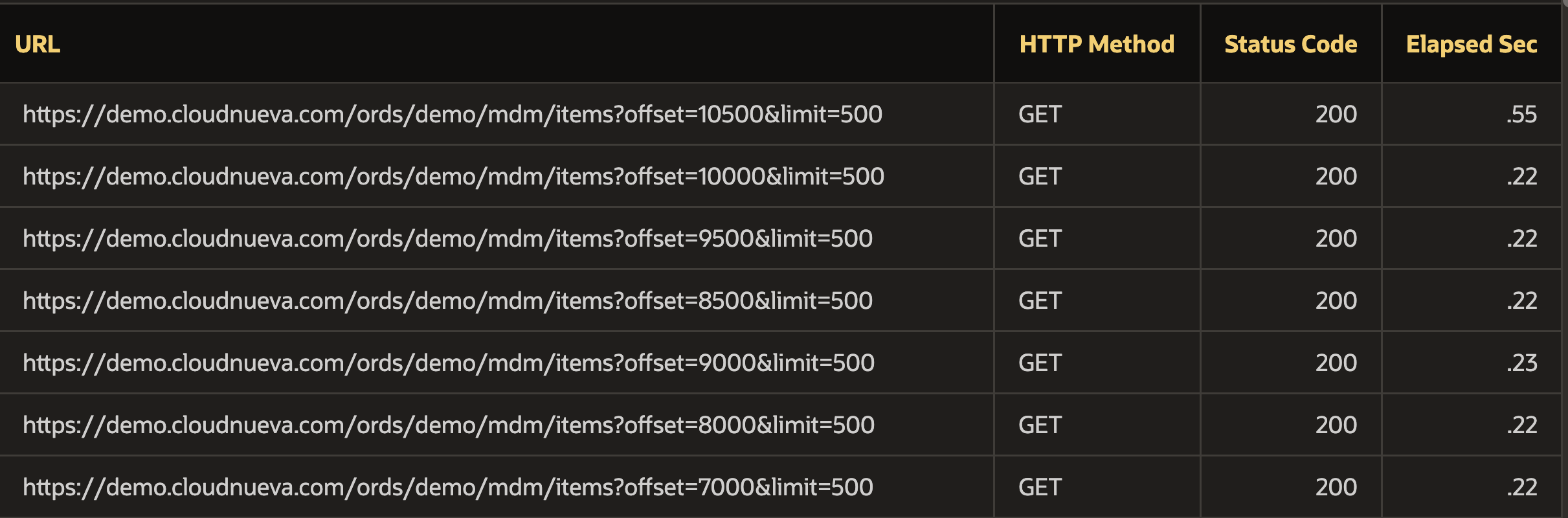

To find that item, APEX must fetch all 10,000 records from the MDM REST API and then perform a search for the item locally. We can prove this by searching for an item and then looking at the 'Web Service Activity Log' again (the screenshot below only shows the last seven requests).

We can see that APEX fetched all 10,000 records in batches of 500. If you remember, 500 is the maximum page size for the MDM REST API.

Now, the page takes more than five seconds to render, which is not good. Ideally, the REST API would provide the ability to perform the filter in the MDM system. In many cases, REST APIs do not offer this ability, and even if they did, APEX must be made aware of this so that it can pass the appropriate filter criteria in the request. Unless you can use a REST Source Type that supports filtering (e.g., OData or Oracle REST Data Services), you must build a REST Source Connector Plugin to handle the APEX IR and the REST API interaction. This can add quite a bit of time to your project.

What About Sorting?

We have an even bigger problem with sorting. When we try to sort on the Item Number column, APEX fetches the first 50 records and then sorts just those 50 records. This can again be remedied if the REST API supports sorting. You can build a REST Source Connectory Plugin that handles passing the REST API the necessary information to perform the sort on the remote system and return the sorted results.

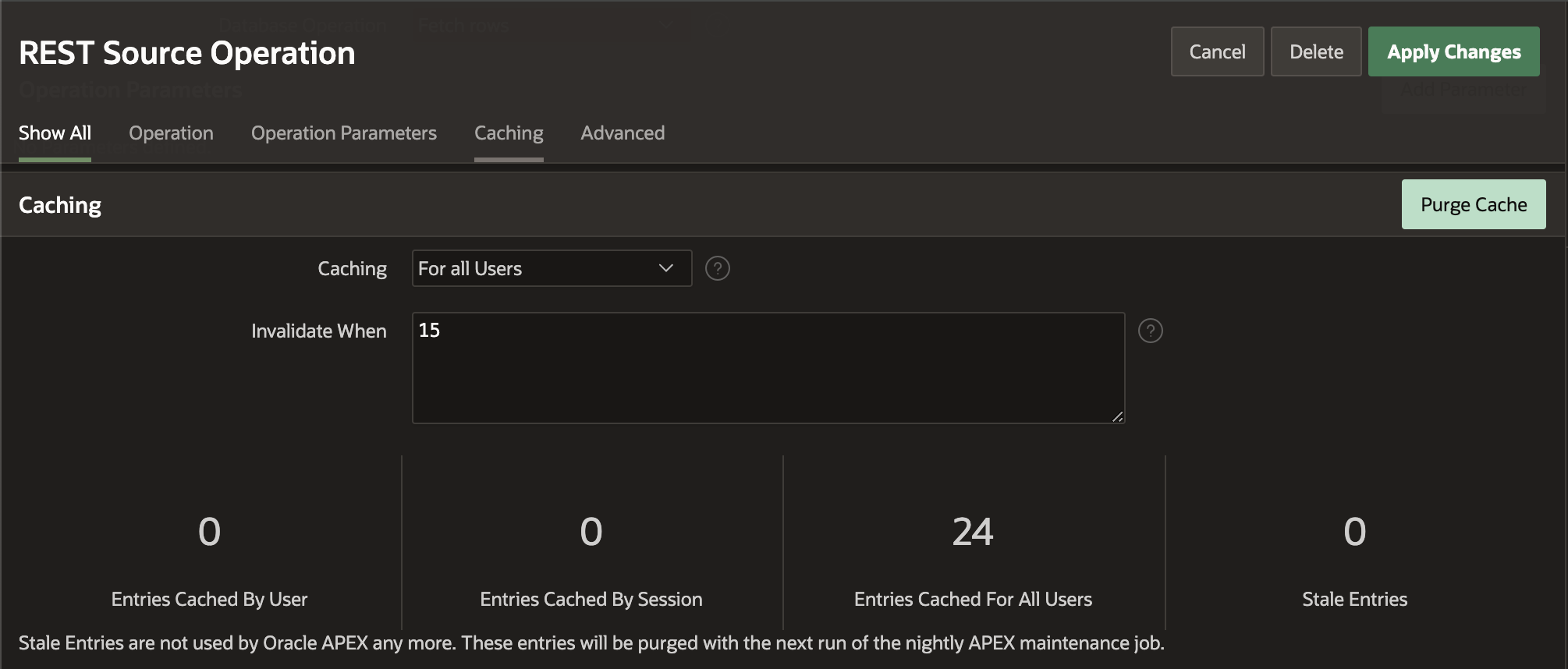

What About REST Source Caching?

When you enable Caching for a REST Source, APEX looks at the URL for the Request (e.g., https://example.com/ords/demo/mdm/items?offset=0&limit=51) and checks to see if it already has cached results for this request. The problem is that the URL to fetch the first page of records for a user that has IR Rows Per Page set to 50 (items?offset=0&limit=51) is different from the URL of the user that has IR Rows Per Page set to 100 (items?offset=0&limit=101). This means it can take some time for the cache to become practical, and by the time it has, it will likely become invalidated and need to be built up all over again.

Best Practices

If you know the REST API will never return more than approximately 1,000 records, and you can configure pagination in the APEX REST Source, then don't worry about it. Create the REST Source and move on.

When considering caching:

Use the Web Service Activity Log to see how request URLs vary between calls to the REST API. The data for each unique URL will be saved in a different cache.

Consider how often the cache should be invalidated and if it can be shared across users and sessions.

The cache is a JSON CLOB in a table that must be parsed and converted to a table. Large responses may still take a few seconds to process.

Use a REST Source Type that supports pagination, filtering, and ordering if available. Examples include the OData and Oracle REST Data Services types.

If you will be doing a lot of work with an API that supports pagination, filtering, and ordering, create a REST Source Connector Plugin. This will allow APEX to pass the appropriate parameters with each REST request so that activities are performed on the remote server and the results are passed back to APEX. It will make your user experience so much better.

Benefits

Your users are always acting on up-to-date data from the remote system.

There is no risk of integration errors and having the wrong data presented to your user.

You do not have to store the data in two places.

Considerations

If the remote REST API becomes unavailable, you cannot use the data from the REST Source. Depending on your reliance on that data, this could make your App unusable.

Performance will be slower than if the data was fetched from a local table. This can be mitigated if you can paginate, filter, and sort on the remote server, but it will always be slower than having the data locally.

Joining data from the REST Source with tables in your local DB will also impact performance. APEX must first fetch and parse the data and then join the un-indexed result with your local tables. This may be fine for smaller data sets but slow for multiple thousands of records.

2. Sync to a Local Table Using a REST Data Source

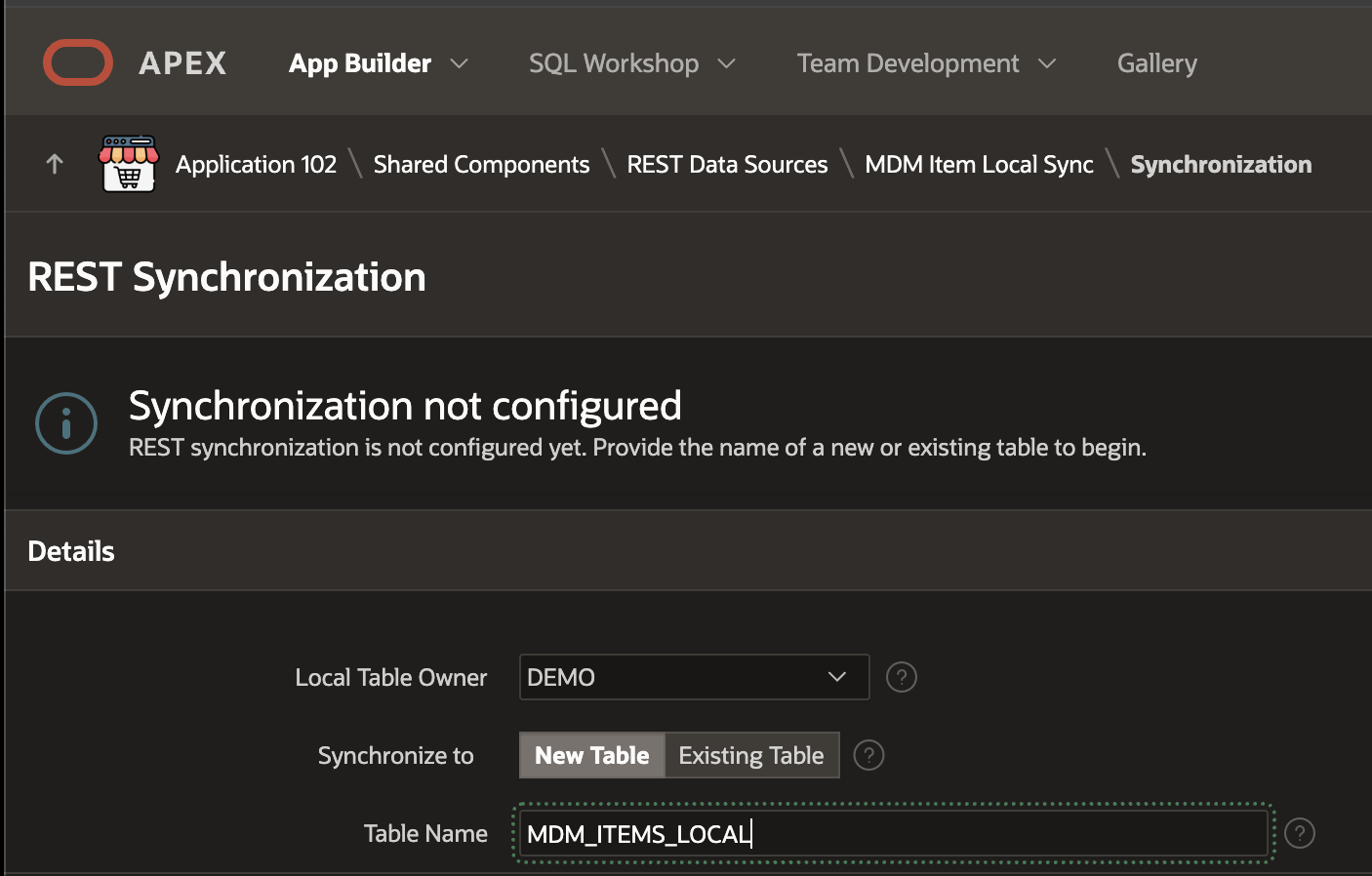

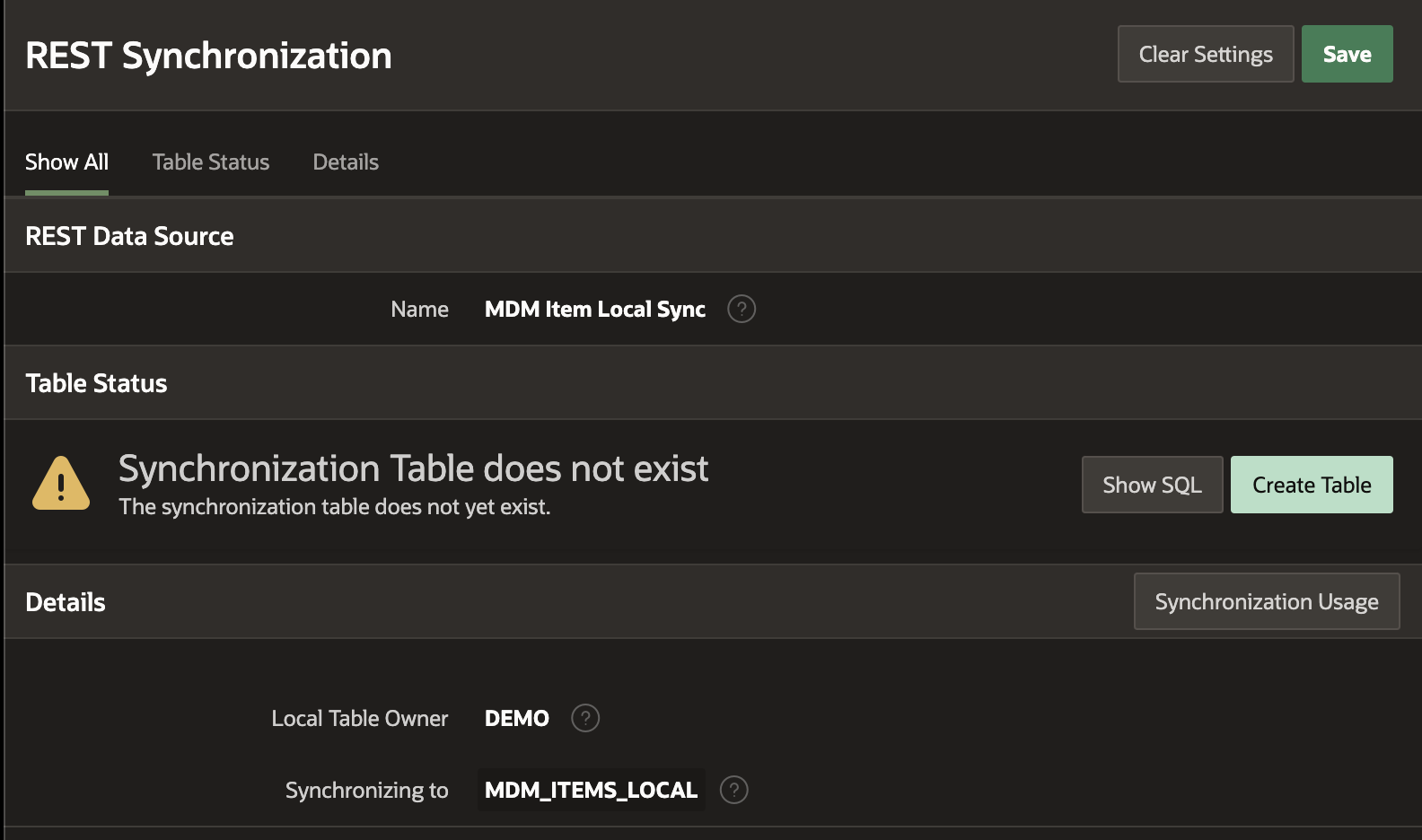

Let's assume a Real-Time REST Source will not work for us, and we need to store data from the REST API locally. In this case, we can enable REST Source Synchronization.

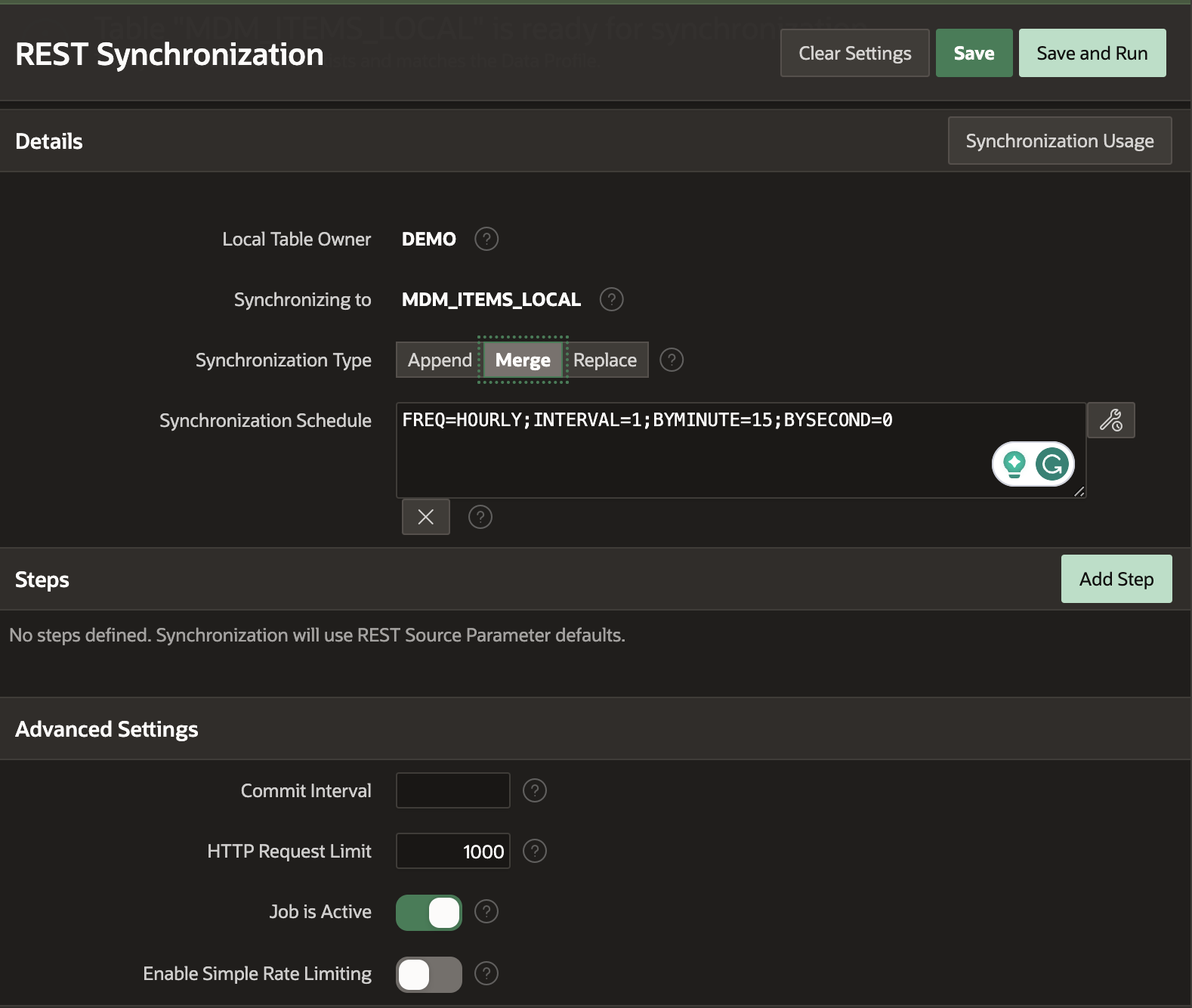

Based on the above configuration, APEX will Sync records from the REST API to a local table called MDM_ITEMS_LOCAL every hour. By selecting the Synchronization Type of 'Merge', new records will be added to the local table, and changes will be updated to the local table; no records will be deleted from the local table.

The local table behaves just like any other table. You can add indexes, constraints, etc., as you see fit.

Best Practices

To determine which records have been added or updated, APEX must fetch all records from the REST API. Because of the network overhead, many requests to the API for a small number of records will probably take longer than fewer requests for a larger set of records. If the REST API supports variable page sizes, look for the sweet spot in setting the page size.

Think carefully before using this approach with REST Sources that return more than 100K records.

Put some monitoring and alerting in place to verify that the Synchronization is running. You could check the log table

apex_rest_source_sync_logand send an email if any Synchromizations fail.

Benefits

This is still a Low Code Solution.

Having data stored in local tables improves the performance of any queries you run against this data. It also improves performance when joining this data with other tables in the local DB.

Considerations

A considerable amount of extra work is being carried out because the entire REST API content must be fetched to check for changes in the local DB.

Syncing a large data set could take a long time, depending on how many records there are. In addition, frequent fetching of large data sets could impact the performance of the REST API and your local database. Given this, think carefully before using this approach for data sets with more than 100k records.

It is impossible to schedule Sync Frequencies less than every 10 minutes. This is due to the way APEX schedules REST Source Synchronizations. You can read more about this here. If you cannot afford the data in the local table to be more than 10 minutes out of date, then this approach is not for you.

Using this approach, you are now duplicating data in two places. Nothing is wrong with this per se, but you should be aware of it.

3. Sync Changes to a Local Table using an APEX Automation and PL/SQL Code

With this option, the goal is to sync only changed data to the local table. While this means we avoid some of the overhead of the REST Source Sync, we now have to write some PL/SQL to orchestrate this. We also need to rely on the REST API having functionality that will allow us to query only changed data.

This approach assumes the target system's REST API allows you to request only the records that have changed since a specific date and time. It requires you to keep track of the last time you called the REST API. Let's see how we can do this.

Automation

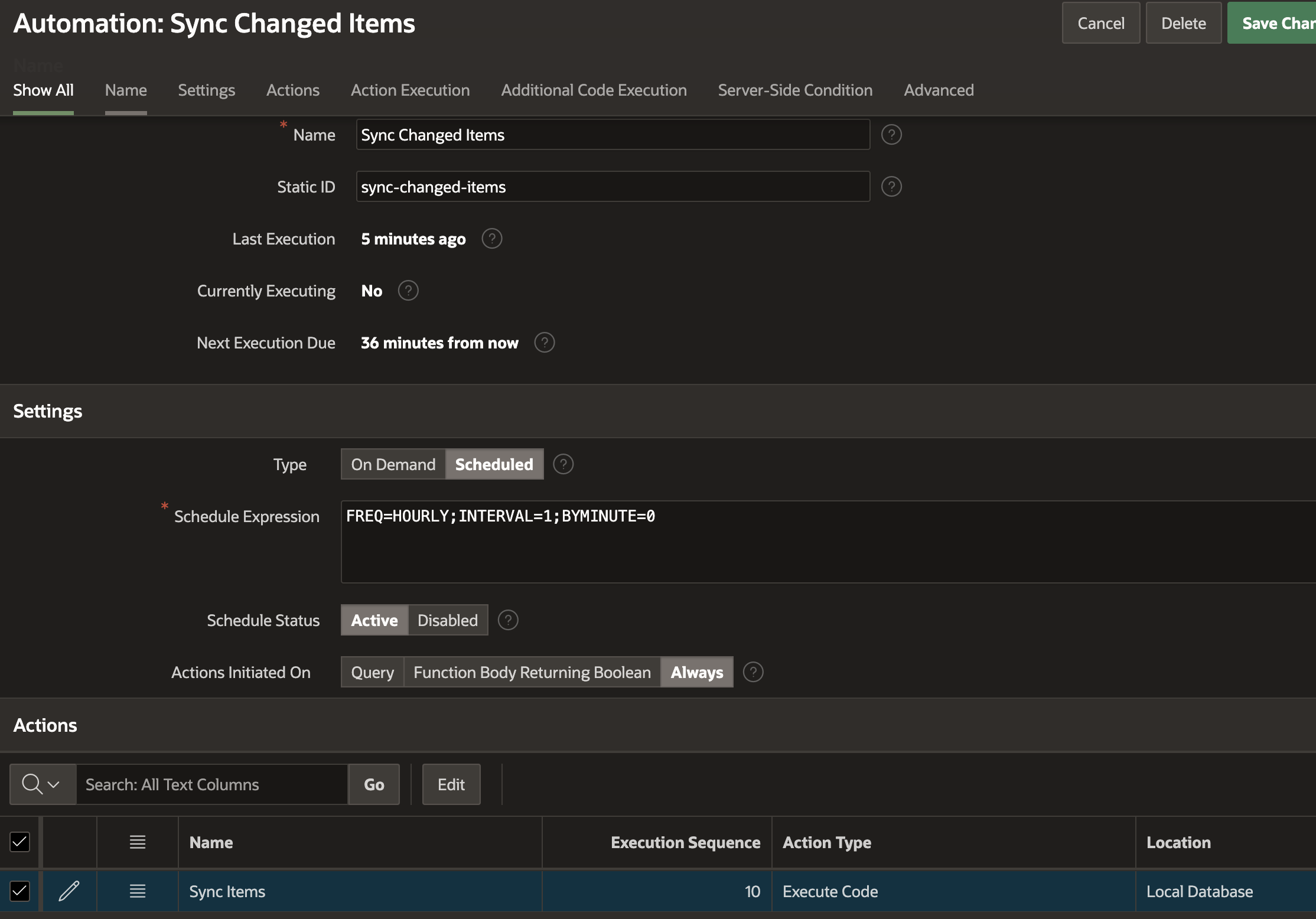

I have created an automation that runs every hour.

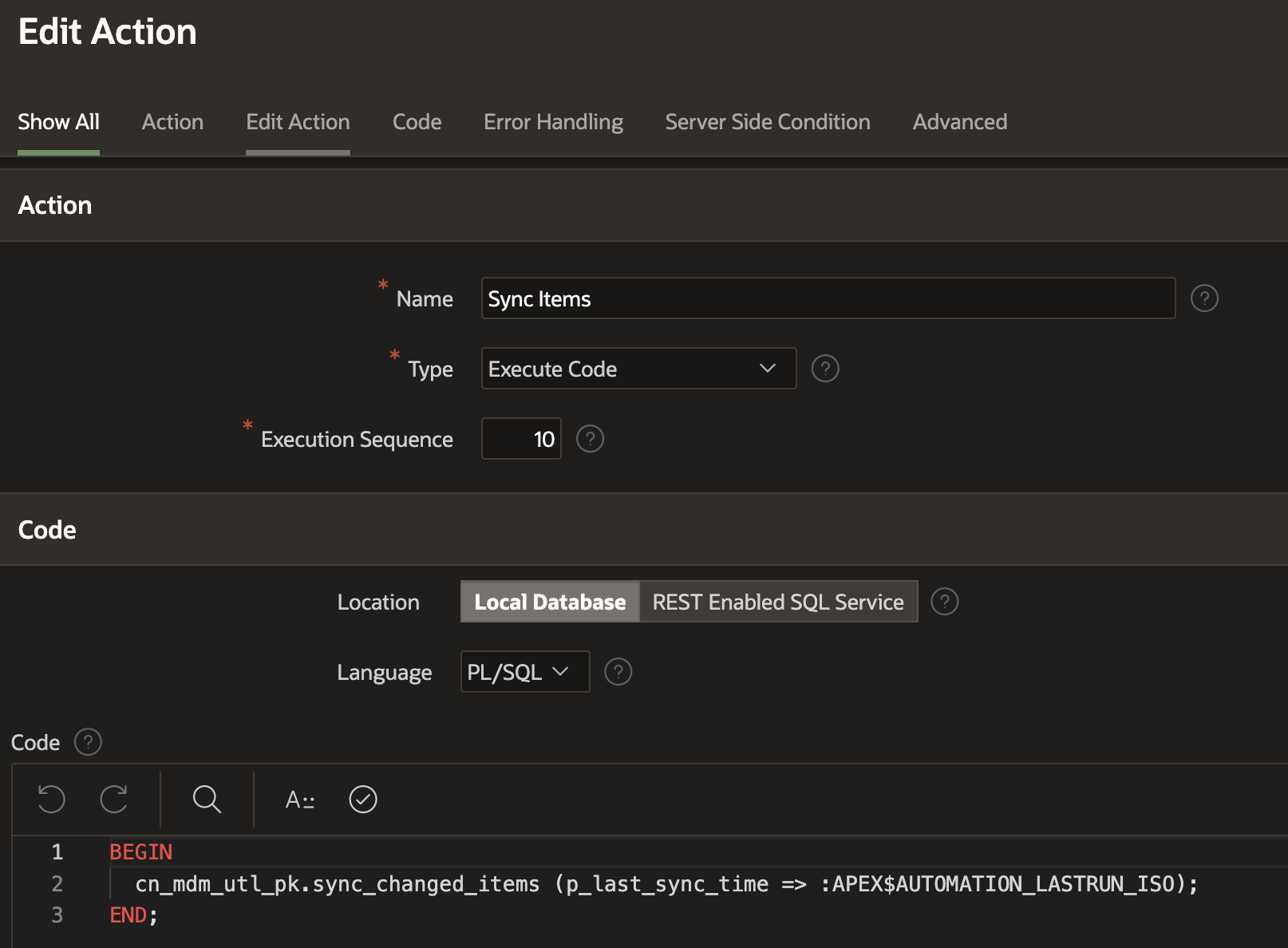

The APEX Automation performs the following action:

Notice that I am passing the bind variable :APEX$AUTOMATION_LASTRUN_ISO to my PL/SQL procedure. This bind variable is populated by APEX when the Automation runs. It contains the date and time that the Automation was last run in the format YYYY-MM-DD"T"HH24:MI:SS.FF3TZH:TZM, e.g. 2023-12-04T00:13:22.379+00:00.

:APEX$AUTOMATION_LASTRUN_ISO is convenient, you may want to consider storing the last checked date and time in your own table. This gives you more control and allows you to reset the date to a date in the past to potentially re-process records.PLSQL Code

The automation calls a PL/SQL procedure that takes the last run date and passes it to the remote server's REST API. I have included comments in the code describing what happens during each step.

Best Practices

Be aware of the Timezone if you use the last update time to check for changed records. Make sure the Timezone of the last update time you store locally matches the Timezone of the last update time of the REST API. My advice is to use the UTC Timezone.

If your APEX Automation fails, think about how you could re-process records from the REST API. This is where storing the last updated time in a table allows you to set the date back in time to pick up records from the last time it ran successfully.

Put some monitoring and alerting in place to verify that the APEX Automation is running. You could check the log tables

apex_automation_logandapex_automation_msg_log, and send an email if the Automation fails.

Benefits

Only new or changed records are fetched from the REST API on the remote server. This reduces the payload significantly and can dramatically improve performance.

When you write your own code to process changed records, you can include logic to perform additional processing. For example, you could send updates to another system, update other local tables, or send an email.

You can write more robust error handling and re-try logic when you write your own integration code.

Considerations

- If all you are doing is syncing a few thousand records to a local table, then a REST Source Synchronization is a better alternative to writing your own integration code.

Webhooks

If the system you are extracting data from supports Webhooks, you should consider this an alternative to periodically checking for changes. A Webhook configured in the remote system will call a REST API in your system every time a record is added or updated. This allows you to receive updates in near real-time and avoids the overhead of constantly polling for changes. You can learn more about Webhooks in this post and the next post in this series on integrations.

Examples

So far, this has been all theory. It is time to review examples from projects where I have used the RAD stack to build fetch-based integrations for my customers.

Show MS SharePoint Data in an APEX Application. Allow users to traverse a SharePoint site in real-time to select a file to attach to a document in APEX.

Synchronize Oracle Fusion GL Journals and Code Combinations to a PaaS Database for Analysis. Call Fusion APIs using

APEX_WEB_SERVICEto generate exports of GL Journals and Account Combinations, then pull this data into an Oracle database using OCI Object Store.Synchronize Harvest Time Entries, Projects, and Tasks to local tables and report on time entries using APEX.

Synchronize Quickbooks Customers and AR Invoices local tables and report on time entries using APEX.

Identity Management. Utilize REST APIs from several services (Active Directory, OnBase, Salesforce, Oracle EBS, Oracle Account Reconciliation (ARCS), and Attendance Enterprise, to pull in identity data and provide a consolidated view of user accounts in an APEX Application.

Conclusion

That's it for the first installment of my series on using the Oracle Database, APEX, and ORDS to handle integrations. In this post, I looked at three approaches for Fetching Data from other Systems. Each approach has pros and cons; matching the correct method with your particular use case is essential.

Other Posts in this Series

Post 1 - Fetching Data from Other Systems

Post 2 - Receiving Data from Other Systems

Subscribe to my newsletter

Read articles from Jon Dixon directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Jon Dixon

Jon Dixon

Hi, thanks for stopping by! I am focused on designing and building innovative solutions using the Oracle Database, Oracle APEX, and Oracle REST Data Services (ORDS). I hope you enjoy my blog.