How to install Airflow on an Ubuntu machine?

jeeva B


Before installing Airflow, we need to make sure that our Ubuntu machine is up to date and has the required packages installed.

The commands below refresh the package index and install common build tools and utilities on Linux. Some of them, such as `htop` and `neofetch`, are conveniences rather than Airflow requirements; the packages Airflow itself needs are installed in the next step.

```bash
sudo apt update
sudo apt install build-essential gcc g++ make cmake git wget curl python3 python3-pip openssh-server htop neofetch vim nano zip unzip tree
```

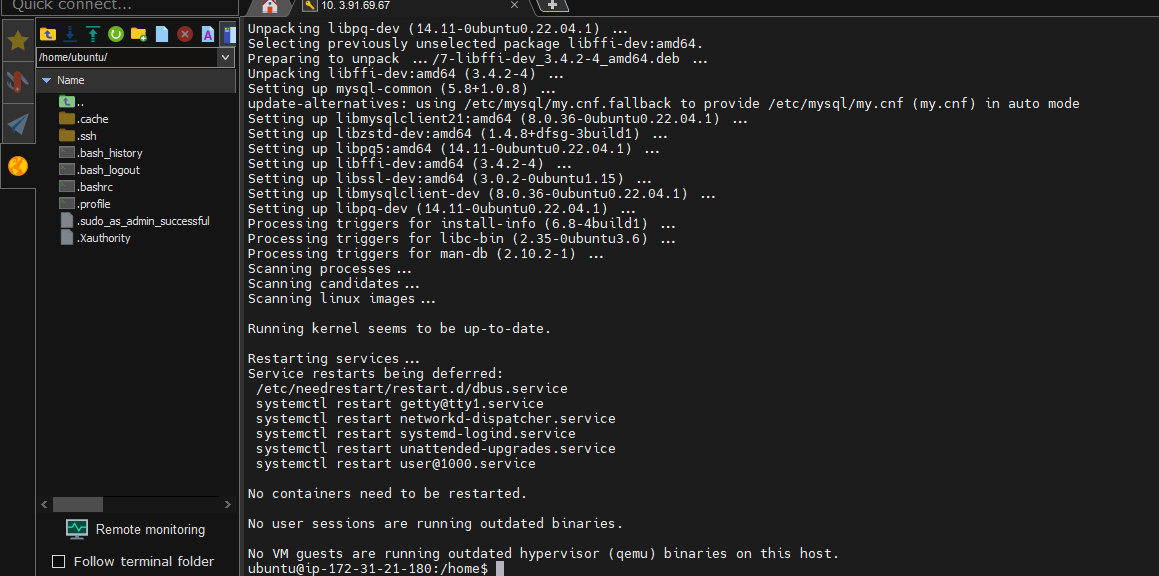

Install Dependencies: Next, install the necessary dependencies for Apache Airflow:

```bash
sudo apt update
sudo apt install python3-pip python3-dev libssl-dev libffi-dev libpq-dev libmysqlclient-dev
```

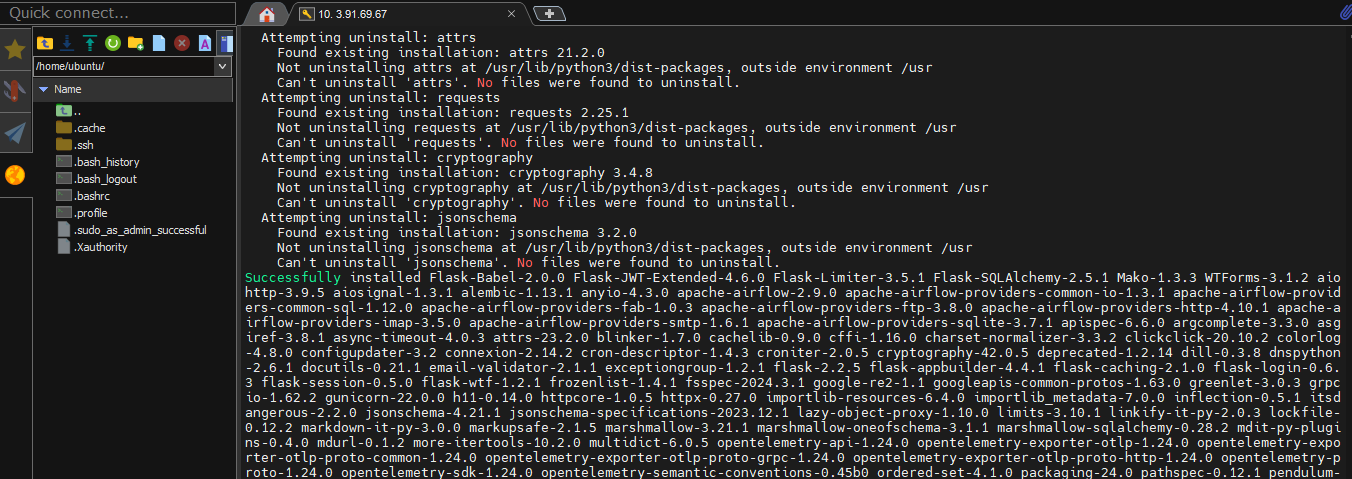
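Each Airflow release supports only a specific range of Python 3 minor versions, so it is worth confirming which interpreter Ubuntu provides before installing. A minimal check, assuming `python3` is the interpreter you will use for Airflow (the variable name `PY_VER` is only an illustration):

```bash
# Read the major.minor version of the system python3, e.g. "3.10"
PY_VER="$(python3 -c 'import sys; print(f"{sys.version_info.major}.{sys.version_info.minor}")')"
echo "python3 is version ${PY_VER}"
```

Compare the printed version with the supported Python versions listed in the installation docs for the Airflow release you plan to use.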

Install Airflow: Next, you can install Apache Airflow using pip.

It is always recommended to install Airflow in a virtual environment to avoid conflicts with system-wide Python packages.
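On Ubuntu, Python's `venv` module ships in the separate `python3-venv` package. A minimal sketch of creating and activating an environment, assuming `~/airflow-venv` as its location (the path is only an example):

```bash
# Ubuntu packages the venv module separately from python3
sudo apt install python3-venv

# Create an isolated environment (the path is just an example)
python3 -m venv "$HOME/airflow-venv"

# Activate it ("." is the POSIX spelling of "source");
# pip3 and airflow now resolve to the environment's copies
. "$HOME/airflow-venv/bin/activate"
```

Run the activation step again in every new terminal before using the `airflow` command.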

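With the virtual environment activated, install Airflow with pip. No `sudo` is needed, because the packages go into the environment rather than the system Python:

```bash
pip3 install apache-airflow
```

After the command finishes, you should see a success message like the one below: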

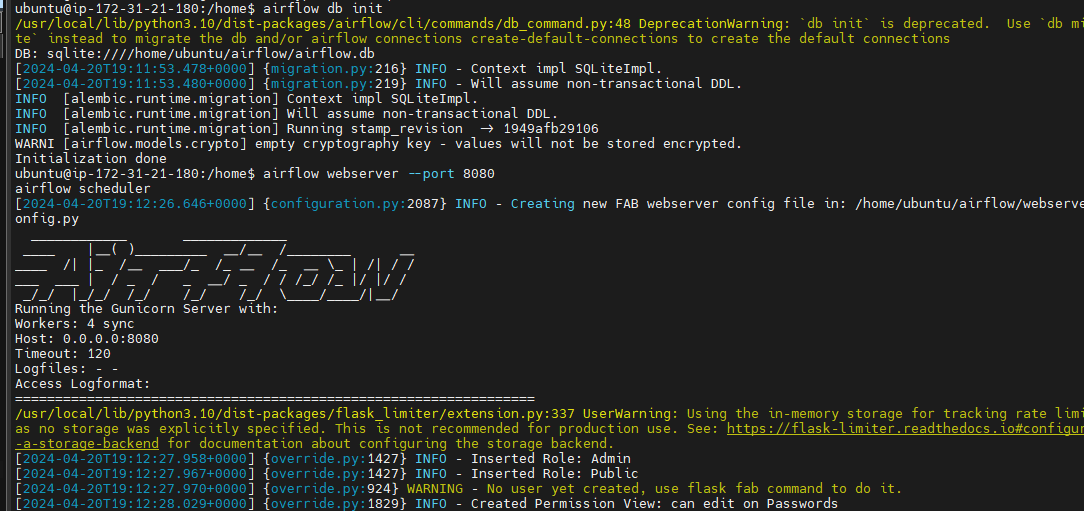
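The Airflow project recommends installing with a constraints file, which pins every dependency to versions tested together, so that a plain `pip install` does not pull in incompatible packages. A sketch of that pattern, run inside an activated virtual environment; the version `2.9.3` is only an example, so substitute the release you actually want:

```bash
# Example release; replace with the Airflow version you want
AIRFLOW_VERSION="2.9.3"

# major.minor of the active interpreter, e.g. "3.10"
PYTHON_VERSION="$(python3 -c 'import sys; print(f"{sys.version_info.major}.{sys.version_info.minor}")')"

# Constraints file published by the Airflow project for this Airflow/Python pair
CONSTRAINT_URL="https://raw.githubusercontent.com/apache/airflow/constraints-${AIRFLOW_VERSION}/constraints-${PYTHON_VERSION}.txt"

pip3 install "apache-airflow==${AIRFLOW_VERSION}" --constraint "${CONSTRAINT_URL}"
```

Pinning both the Airflow version and its constraints makes the install reproducible: running the same commands later resolves to the same dependency set.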

Initialize Airflow Database: After installing Airflow, you need to initialize the database where Airflow will store its metadata.

```bash
airflow db init
```
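Airflow keeps `airflow.cfg`, the default SQLite metadata database and its logs under `AIRFLOW_HOME`, which defaults to `~/airflow`; exporting it (for example in `~/.bashrc`) keeps every later `airflow` command pointing at the same directory. In Airflow 2.7 and later, `airflow db init` is deprecated in favor of `airflow db migrate`, and on Airflow 2.x the web UI also requires a login user. A sketch assuming Airflow 2.x; the username, names and e-mail address below are placeholders:

```bash
# Where Airflow keeps airflow.cfg, the metadata database and logs (~/airflow is the default)
export AIRFLOW_HOME="$HOME/airflow"

# Airflow 2.7+: creates or upgrades the metadata database schema
airflow db migrate

# Create an admin account for the web UI; you will be prompted for a password
airflow users create \
    --username admin \
    --firstname Admin \
    --lastname User \
    --role Admin \
    --email admin@example.com
```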

Start the Airflow Scheduler and Webserver: Finally, start the Airflow scheduler and webserver components.

Run each command in its own terminal, because both stay in the foreground:

```bash
airflow webserver --port 8080
airflow scheduler
```
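To avoid keeping two terminals open, each component in Airflow 2.x also accepts `-D` (`--daemon`) to run in the background, and the Airflow 2.x webserver exposes a `/health` endpoint reporting the status of the metadata database and the scheduler. A sketch under those assumptions; the `AIRFLOW_URL` variable is only for illustration:

```bash
# Run both components in the background instead of in two terminals
airflow webserver --port 8080 -D
airflow scheduler -D

# Base URL of the webserver started above
AIRFLOW_URL="http://localhost:8080"

# Returns JSON describing the metadatabase and scheduler health
curl -s "${AIRFLOW_URL}/health"
```

Once the health check responds, open http://localhost:8080 in a browser to reach the Airflow UI.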

With Airflow running, the next step is to write our first DAG and schedule it with the Airflow scheduler.
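By default the scheduler reads DAG files from the `dags` folder inside `AIRFLOW_HOME` (`~/airflow/dags`), so it helps to create that folder if it does not exist yet. A small preparatory sketch, assuming the default `dags_folder` setting (the `DAGS_DIR` variable is only for illustration):

```bash
# Use AIRFLOW_HOME if it is set, otherwise Airflow's default ~/airflow
DAGS_DIR="${AIRFLOW_HOME:-$HOME/airflow}/dags"
mkdir -p "$DAGS_DIR"

# List the DAGs Airflow can currently parse
airflow dags list
```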


Written by

jeeva B


👋 Hey there! I'm Jeeva, a passionate DevOps and Cloud Engineer with a knack for simplifying complex infrastructures and optimizing workflows. With a strong foundation in Python and PySpark, I thrive on designing scalable solutions that leverage the power of the cloud. 🛠️ In my journey as a DevOps professional, I've honed my skills in automating deployment pipelines, orchestrating containerized environments, and ensuring robust security measures. Whether it's architecting cloud-native applications or fine-tuning infrastructure performance, I'm committed to driving efficiency and reliability at every step. 💻 When I'm not tinkering with code or diving into cloud platforms, you'll likely find me exploring the latest trends in technology, sharing insights on DevOps best practices, or diving deep into data analysis with PySpark. 📝 Join me on this exhilarating ride through the realms of DevOps and Cloud Engineering, where we'll unravel the complexities of modern IT landscapes and empower ourselves with the tools to build a more resilient digital future. Connect with me on LinkedIn: https://www.linkedin.com/in/jeevabalakrishnan