Web Scraping Wikipedia Tables

Crawlbase

Crawlbase

This blog is originally posted to the crawlbase blog.

In this article, you’ll learn how to scrape a table from Wikipedia, transforming unstructured web page content into a structured format using Python. Covering the essentials from understanding Wikipedia tables’ structure to utilizing Python’s libraries for data extraction, this guide is your comprehensive tutorial for web scraping Wikipedia tables efficiently and responsibly.

If you want to scrape Wikipedia data, refer to our guide on ‘How to scrape Wikipedia‘ which covers extracting data like Page Title, Images etc.

In this guide we will walk you through a simple tutorial on web scraping Wikipedia tables only.

To web scrape Wikipedia table, we’ll utilize BeautifulSoup along with the Crawlbase library for fetching the HTML content.

Structure of Wikipedia Tables

Wikipedia tables are structured using a combination of HTML and wikitext, allowing for both visual and textual editing. To identify a table suitable for scraping, you can look for features like sortable columns which can be arranged in ascending or descending order. The basic components of a Wikipedia table include the table start tag, captions, rows, headers, and data cells. These elements are defined using specific symbols in wikitext, such as ‘|’ for cell separators and ‘—‘ for row separators. The ‘|+’ symbol is used specifically for table captions, while ‘!’ denotes table headings.

Tables on Wikipedia can be styled using CSS. Attributes such as class, style, scope, rowspan, and colspan enhance visual presentation and data organization, ensuring that the table is not only informative but also accessible. It is recommended to use CSS classes for styling instead of inline styles to maintain consistency and clarity across different tables. Additionally, the ‘wikitable’ class is often used to apply a standard style to tables, making them visually consistent across various articles.

Understanding the metadata associated with tables is crucial for effective data scraping. Each table in a relational database-like structure on Wikipedia consists of rows and columns, with each row identified by a primary key. Metadata may include constraints on the table itself or on values within specific columns, which helps in maintaining data integrity and relevance. When scraping Wikipedia, it is essential to consider these structures and metadata to accurately scrape tables from Wikipedia.

How to Scrape a Table from Wikipedia

Step 1: Importing Libraries

We’ll import the necessary libraries for scraping the table from Wikipedia. This includes BeautifulSoup for parsing HTML, pandas for data manipulation, and the CrawlingAPI class from the Crawlbase library for making requests to fetch the HTML content.

from bs4 import BeautifulSoup |

Step 2: Web Scraping Table from Wikipedia pages

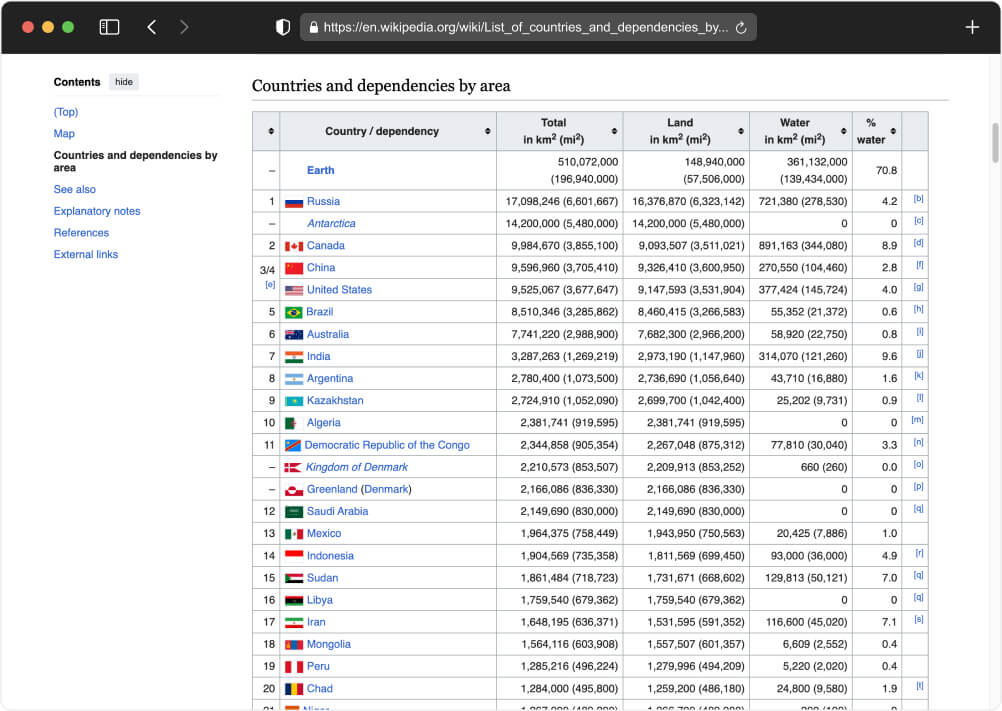

To scrape table from a section of Wikipedia in python, we need to inspect the HTML structure of the webpage containing the table. This can be done by right-clicking on the table, selecting “Inspect” from the context menu. This action will reveal the HTML content of the page, allowing us to identify the tags inside which our data is stored. Typically, tabular data in HTML is enclosed within <table> tags.

Let’s target this URL that contains the table we want to scrape. Once we have identified the URL, we can proceed to extract the table data from the HTML content.

Step 3: Fetch Wikipedia Table data

Next, we’ll initialize the CrawlingAPI to fetch the table data from the Wikipedia page. We’ll pass this data to the scrape_data function to create a BeautifulSoup object. Then, we’ll use the select_one() method to extract the relevant information, which in this case is the <table> tag. Since a Wikipedia page may contain multiple tables, we need to specify the table by passing either the “class” or the “id” attribute of the <table> tag.

You can copy and paste the complete code below:

from bs4 import BeautifulSoup |

Step 4: Execute the Code to Save in CSV file

Once you’re successful to extract data from Wikipedia table, it’s crucial to store it in a structured format. Depending on your project requirements, you can choose to store the data in JSON, CSV formats, or directly into a database. This flexibility allows the data to be used in various applications, ranging from data analysis to machine learning web scraping projects.

Run the code one again using the command below:

python wikipedia_scraper.py |

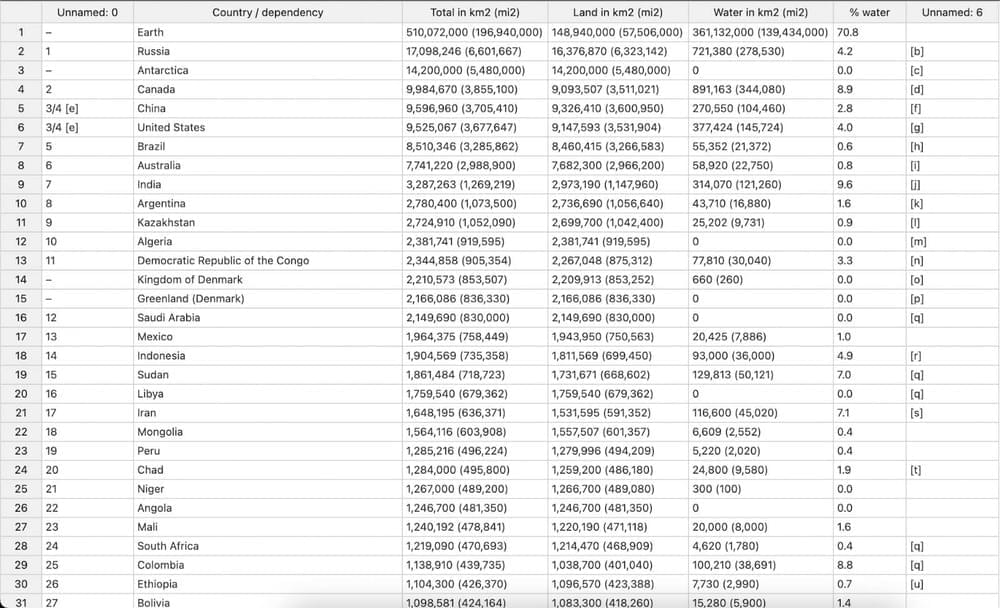

The code structure will allow us to scrape the table from the specified Wikipedia page, process it using BeautifulSoup, and save the extracted data to a CSV file for further analysis. See the example output below:

Conclusion

Throughout this article to web scrape Wikipedia table, we’ve journeyed together from understanding the basic premise of web scraping, particularly focusing on extracting tables from Wikipedia using Python, to setting up your environment and finally transforming the scraped data into a structured and clean format suitable for analysis. Through the use of powerful Python libraries like BeautifulSoup, Requests, and Pandas, we’ve successfully scraped a table from Wikipedia.

Whether you’re a data enthusiast keen on deep dives into datasets or a professional looking to enrich your analytical prowess, the skills you’ve honed here will serve as a solid foundation. To dive deeper into this fascinating world, explore more enlightening blogs on similar topics that can further your understanding and spark even more creativity in your projects. Here are some written tutorials you might be interested in:

How to Scrape Google Scholar Results

How to Scrape websites with ChatGPT

FAQs

- How can I extract a table from a Wikipedia page?

To extract a table from Wikipedia, simply navigate to the Wikipedia page that contains the table you are interested in. Enter the URL into the appropriate field of Crawlbase Crawling API and click “Send”. You can then either copy the table data to your clipboard or download it as a CSV file.

- Is it permissible to scrape data from Wikipedia for my own use?

Yes, web scraping Wikipedia tables using BeautifulSoup and python is generally allowed as many users reuse Wikipedia’s content. If you plan to use Wikipedia’s text materials in your own publications such as books, articles, websites, etc., you can do so under the condition that you adhere to one of the licenses under which Wikipedia’s text is available.

- What is the method to copy a table directly from Wikipedia?

To copy a table directly from Wikipedia, simply select the table by clicking and dragging your mouse over it, then right-click and choose “Copy.” You can then paste it into your document or a spreadsheet application using the “Paste” option.

Subscribe to my newsletter

Read articles from Crawlbase directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by