How to Manage Usage Limits in Colab for Optimal Performance

NovitaAI


Optimize performance in Colab by managing usage limits effectively. Learn how to navigate Colab's usage limits in this post.

Key Highlights

Understand the usage limits of Google Colab and how they can impact your machine learning projects.

Discover common usage limits and their implications.

Explore strategies to monitor and manage resource consumption in Colab.

Find out about tools and techniques for monitoring usage.

Get tips on how to reduce computational load in Colab.

Learn how to optimize Colab notebooks for maximum performance.

Discover efficient coding practices and lesser-known Colab features that can enhance your ML projects.

Navigate through Colab’s restrictions and learn how to deal with RAM and GPU limitations.

Explore alternatives and supplements to Colab, such as Colab Pro and Google Cloud.

Find out when it’s appropriate to consider upgrading to Colab Pro and explore other platforms similar to Colab.

Get practical tips for long-term Colab users, including managing multiple sessions effectively and avoiding common pitfalls.

Introduction

As machine learning and deep learning projects become increasingly resource-intensive, finding a cost-effective and efficient development environment is crucial. Google Colab provides a hosted Jupyter notebook environment, and Colab Enterprise, a managed version of Colab for enterprise use, adds capabilities such as integration with Vertex AI and BigQuery and generative-AI-assisted coding. Whichever edition you use, understanding and managing Colab's usage limits is essential for good performance and sensible resource management.

In this post, we explore how to manage usage limits in Colab so that your machine learning projects run smoothly without compromising performance or incurring unnecessary costs.

Understanding Colab’s Usage Limits

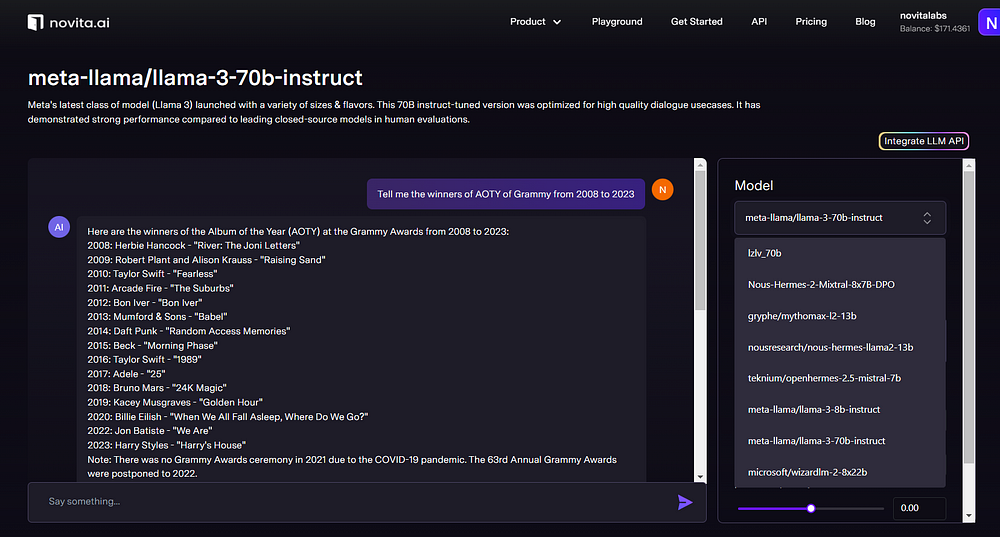

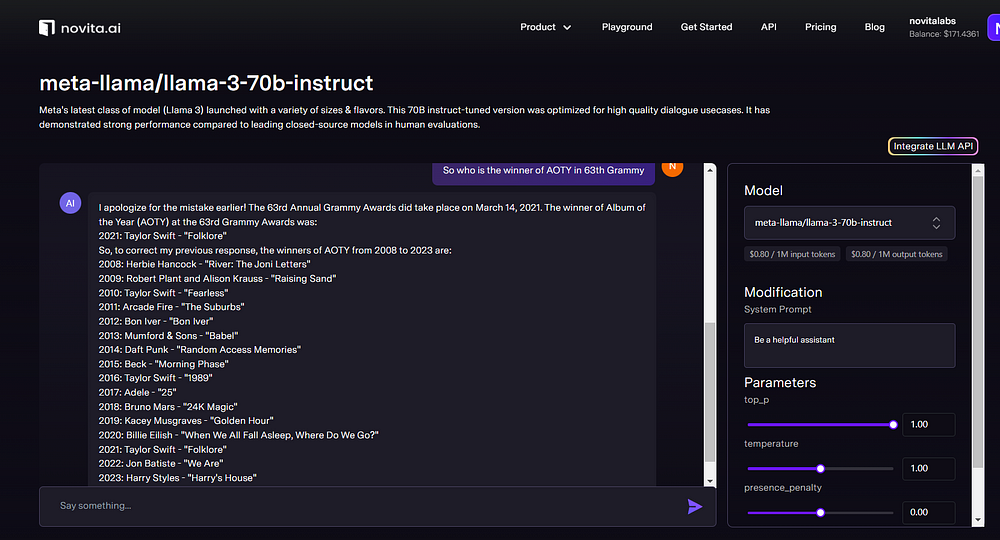

Google Colab offers a free, Jupyter-based environment for machine learning projects; many large language models, including novita.ai's LLM, are fine-tuned on Colab.

But it comes with certain usage limits. These limits are in place to manage resource allocation and prevent abuse of the service. It’s important to understand these limits to effectively manage your ML projects in Colab.

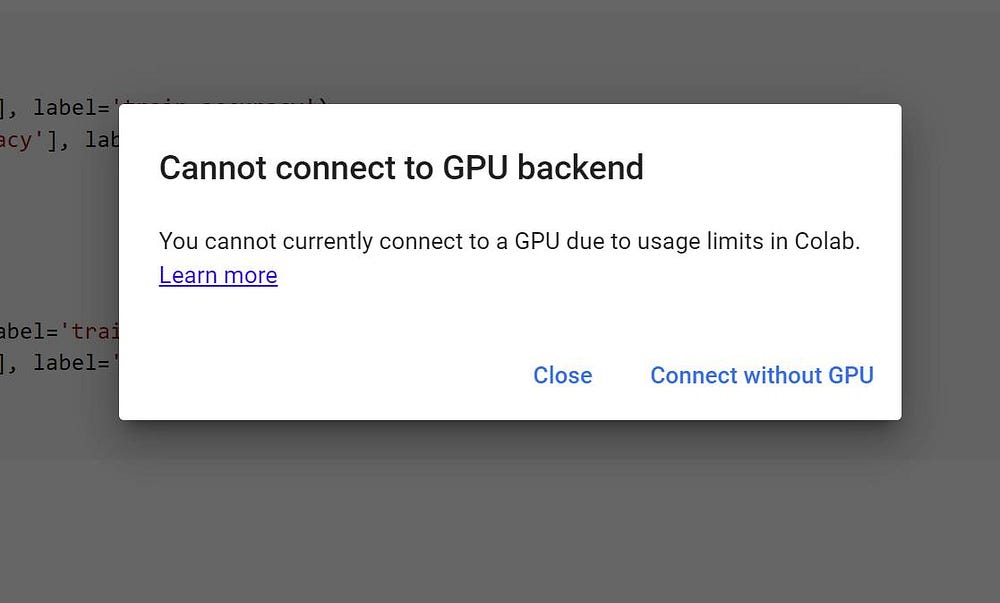

Colab’s usage limits are dynamic and can fluctuate over time. They include restrictions on CPU/GPU usage, maximum VM lifetime, idle timeout periods, and resource availability. While Colab does not publish these limits, they can impact your project’s execution and require monitoring and management for optimal performance.

What Are Colab’s Computational Resources?

Colab provides virtual machines (VMs) with different specifications to support machine learning tasks. These VMs come with pre-installed libraries and packages commonly used in ML projects. Users can access VMs with GPUs or TPUs for enhanced computational power.

The GPU models offered in Colab have changed over time and have included the K80, T4, P100, and V100. The free tier is usually assigned lower-end GPUs such as the T4, while paid plans can access faster NVIDIA GPUs such as the A100. GPUs accelerate training and inference for deep learning models. TPUs (Tensor Processing Units), on the other hand, are accelerators designed by Google specifically for ML workloads; with frameworks that support them, such as TensorFlow and JAX, they can train and run large models efficiently.

Additionally, Colab VMs come with a certain amount of RAM, typically ranging from 12.7 GB to 25 GB, depending on the type of VM. Having a clear understanding of these computational resources is essential for optimizing your ML projects in Colab.
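To see which resources your current runtime actually received, you can query the VM from a code cell. The sketch below uses only the Python standard library and assumes a Linux VM (as Colab runtimes are) on which `nvidia-smi` is available only when an NVIDIA GPU is attached. `describe_runtime` is a hypothetical helper, not part of any Colab API, and it does not detect TPUs.

```python
import os
import shutil
import subprocess


def describe_runtime() -> dict:
    """Report CPU count, total RAM, and attached NVIDIA GPUs (hypothetical helper)."""
    info = {"cpu_count": os.cpu_count()}

    # Total physical memory = number of pages * page size (POSIX sysconf names).
    try:
        total_bytes = os.sysconf("SC_PHYS_PAGES") * os.sysconf("SC_PAGE_SIZE")
        info["ram_gb"] = round(total_bytes / 1024**3, 1)
    except (AttributeError, ValueError, OSError):
        info["ram_gb"] = None  # e.g. Windows, where os.sysconf does not exist

    # nvidia-smi is only on the PATH when an NVIDIA GPU runtime is attached.
    info["gpus"] = []
    if shutil.which("nvidia-smi"):
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
        )
        info["gpus"] = [line.strip() for line in result.stdout.splitlines() if line.strip()]
    return info


print(describe_runtime())
```

Running this at the start of a session is a quick way to confirm whether you got a GPU at all, and which one, before launching a long job.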

Common Usage Limits and Their Implications

Colab has usage limits that you need to be aware of to manage machine learning projects effectively. They govern interactive compute, idle timeouts, maximum VM lifetime, and resource availability.

Colab is designed for interactive use. If a notebook sits idle for too long, its runtime is disconnected automatically; Colab does not publish the exact idle timeout, and it can vary. Independently of activity, each VM also has a maximum lifetime (as much as 12 hours on the free tier), after which it is terminated even if code is still running.

Resource availability also fluctuates: depending on demand, you may be assigned a different GPU type, or no GPU at all, from one session to the next. These limits and their variability affect how ML projects execute, so resource use needs careful monitoring and management.

Strategies to Monitor and Manage Resource Consumption

To ensure optimal performance and manage resource consumption in Colab, it is important to implement effective monitoring and management strategies. By monitoring resource consumption, you can identify potential bottlenecks and optimize resource allocation.

Tools and Techniques for Monitoring Usage

Monitoring resource usage in Colab can be done using various tools and techniques. These tools help users keep track of their resource consumption and make informed decisions about resource allocation. Some of the tools and techniques for monitoring usage in Colab include:

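Colab's Resources View: The RAM and disk indicator in the notebook toolbar opens a Resources panel showing the current runtime's memory, disk, and GPU usage. The Google Account you sign in with determines which Colab plan, and therefore which limits, apply.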

Colab Pro: The paid version of Colab, Colab Pro, offers additional tools and features for monitoring and managing resource consumption.

Compute Unit Balance: Paid plans meter usage in compute units, and Colab shows your remaining balance so you can track consumption and adjust as needed.

Ending Sessions: Any user can shut down runtimes they no longer need, for example through Runtime > Manage sessions or Runtime > Disconnect and delete runtime. This frees resources and, on paid plans, stops compute units from being consumed.

By utilizing these tools and techniques, users can effectively monitor and manage their resource consumption in Colab for optimal performance.
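The tools above work at the account and interface level. Inside a notebook, you can also log memory use at key points, such as right after loading a dataset, to spot growth before the runtime runs out of RAM. This is a minimal sketch that assumes a Linux VM (it reads `/proc/meminfo`) and the Unix-only `resource` module; `log_memory` and the other function names are hypothetical helpers.

```python
import sys
from typing import Optional


def available_memory_mb() -> Optional[float]:
    """MemAvailable from /proc/meminfo in MB, or None if it cannot be read."""
    try:
        with open("/proc/meminfo") as f:
            for line in f:
                if line.startswith("MemAvailable:"):
                    # Line looks like "MemAvailable:  8123456 kB"
                    return int(line.split()[1]) / 1024
    except FileNotFoundError:
        pass
    return None


def peak_rss_mb() -> float:
    """Peak resident memory of this Python process in MB (Unix only)."""
    import resource  # standard library, but not available on Windows

    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux but in bytes on macOS.
    return peak / (1024 * 1024) if sys.platform == "darwin" else peak / 1024


def log_memory(tag: str) -> None:
    """Print peak process memory and memory still available on the VM."""
    available = available_memory_mb()
    available_text = f"{available:,.0f} MB" if available is not None else "unknown"
    print(f"[{tag}] peak RSS: {peak_rss_mb():,.0f} MB | available: {available_text}")


log_memory("after loading data")
```

If "available" keeps shrinking between calls, that is a sign to free large objects or switch to batched loading before the runtime crashes.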

Tips to Reduce Computational Load

Reducing computational load is crucial for optimizing resource usage in Colab. By implementing efficient coding practices and minimizing unnecessary computations, users can reduce the strain on resources and improve performance. Some tips to reduce computational load in Colab include:

Efficient Coding Practices: Use optimized algorithms and data structures, minimize redundant computations, and leverage vectorized operations.

Memory Management: Avoid unnecessary memory allocation and deallocation; use generators and iterators instead of loading all data into memory at once.

Parallel Processing: Use multiprocessing or parallel-computing libraries to spread CPU-bound work across the VM's CPU cores (a Colab VM typically has only a few).

By following these tips, users can minimize computational load and optimize resource usage in Colab, leading to improved performance and efficiency in their machine learning projects.
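To illustrate the memory-management tip, the sketch below computes a mean over a file without loading it all at once: a generator yields one value per line, so memory use stays constant regardless of file size. The file format (one number per line) and the function names are assumptions made for the example.

```python
import os
import tempfile
from typing import Iterable, Iterator


def iter_values(path: str) -> Iterator[float]:
    """Yield one float per non-empty line; only the current line is held in memory."""
    with open(path) as f:
        for line in f:
            line = line.strip()
            if line:
                yield float(line)


def streaming_mean(values: Iterable[float]) -> float:
    """Consume values one at a time, keeping only a running count and sum."""
    count, total = 0, 0.0
    for value in values:
        count += 1
        total += value
    return total / count if count else float("nan")


# Demo with a small temporary file standing in for a large dataset.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
    tmp.write("\n".join(str(i) for i in range(1, 101)))
    demo_path = tmp.name

print(streaming_mean(iter_values(demo_path)))  # 50.5
os.remove(demo_path)
```

The same pattern applies to any aggregate that can be updated incrementally (counts, sums, min/max); anything that needs all data at once, such as an exact median, needs a different approach.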

Optimizing Colab Notebooks for Performance

Optimizing Colab notebooks for performance is essential to ensure efficient execution of machine learning projects. By applying optimization techniques, users can make the most of the available resources and improve overall performance.

Efficient Coding Practices

Efficient coding practices play a crucial role in optimizing Colab notebooks for performance. By following these practices, users can reduce computational load, minimize memory usage, and improve overall efficiency. Some efficient coding practices for Colab notebooks include:

Use optimized algorithms and data structures to reduce computational complexity.

Minimize redundant computations and cache intermediate results.

Leverage vectorized operations and optimized libraries to speed up computations.

Implement memory-efficient techniques such as lazy loading and generators to minimize memory usage.

Optimize I/O operations by batching or streaming data instead of loading all data into memory at once.

By following these efficient coding practices, users can enhance the performance of their Colab notebooks and achieve faster execution times for their machine learning projects.
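For the point about caching intermediate results, Python's `functools.lru_cache` memoizes a deterministic function so that repeated calls with the same arguments skip the recomputation. A minimal sketch: `preprocess` is a hypothetical stand-in for an expensive step, and the call counter exists only to show that cached calls do not re-run the function body.

```python
from functools import lru_cache

call_count = 0


@lru_cache(maxsize=None)  # unbounded cache; set a maxsize if inputs are numerous
def preprocess(text: str) -> tuple:
    """Hypothetical expensive, deterministic step (e.g. tokenization)."""
    global call_count
    call_count += 1
    # Return an immutable tuple so callers cannot mutate the cached value.
    return tuple(text.lower().split())


docs = ["Hello Colab", "hello colab", "Hello Colab"]
tokens = [preprocess(d) for d in docs]

print(call_count)  # 2: the third call is served from the cache
print(preprocess.cache_info())
```

Caching trades memory for time: an unbounded cache keeps every result alive, which can work against Colab's RAM limit, so prefer a bounded `maxsize` when the set of inputs is large.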

Leveraging Lesser-Known Colab Features

Colab offers various features that are lesser-known but can greatly enhance the performance and efficiency of ML projects. These features enable users to take full advantage of the Colab environment and optimize resource usage. Some lesser-known Colab features include:

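Colab Plans and Runtime Types: Colab is offered in several tiers (free, Pro, and Pro+), and Runtime > Change runtime type lets you choose the hardware accelerator (CPU, GPU, or TPU) that a project needs.

Less Obvious Features: Colab supports IPython magics such as %time and %%timeit for timing code, shell commands prefixed with ! (for example, !nvidia-smi to inspect the GPU), and the google.colab module for tasks such as mounting Google Drive.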

By leveraging these lesser-known features, users can unlock additional capabilities in Colab and optimize their ML projects for improved performance and efficiency.
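One Colab-specific feature worth knowing is the `google.colab` package, which is preinstalled on Colab runtimes; its `drive.mount` function attaches your Google Drive to the VM's file system so that data and results survive a disconnected runtime. The sketch below guards the import so the same notebook also runs outside Colab. The `/content/drive` mount point is the conventional choice, and `mount_drive` is a hypothetical wrapper.

```python
try:
    from google.colab import drive  # preinstalled only on Colab runtimes
    IN_COLAB = True
except ImportError:
    drive = None
    IN_COLAB = False


def mount_drive(mount_point: str = "/content/drive"):
    """Mount Google Drive when running in Colab; do nothing elsewhere."""
    if not IN_COLAB:
        print("Not running in Colab; skipping Drive mount.")
        return None
    drive.mount(mount_point)  # prompts for authorization on first use
    return mount_point


mount_drive()
```

The `IN_COLAB` flag is also handy for switching data paths between Colab and a local machine.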

Navigating Through Colab’s Restrictions

While Colab provides a free and convenient ML development environment, it also imposes restrictions, including dynamic usage limits that change over time, and users need to adapt their projects accordingly.

Dealing with RAM and GPU Limitations

Colab’s free VMs have limitations regarding RAM and GPU usage. Users need to be aware of these limitations and find ways to work within them. Some strategies for dealing with RAM and GPU limitations in Colab include:

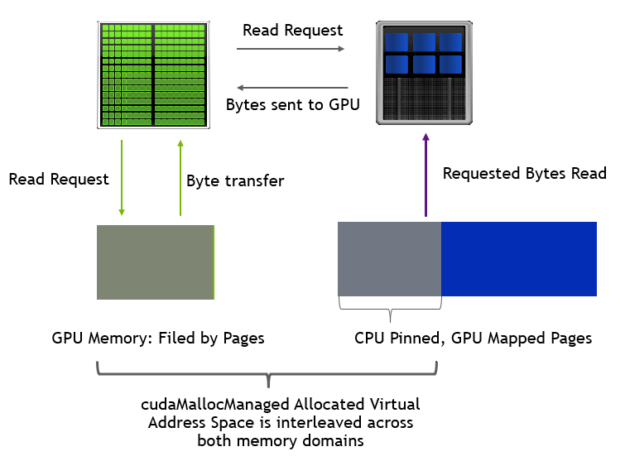

Optimizing Memory Usage: Minimize unnecessary memory allocation; use generators and iterators instead of loading all data into memory at once.

Batch Processing: Split large datasets into smaller batches to accommodate RAM limitations.

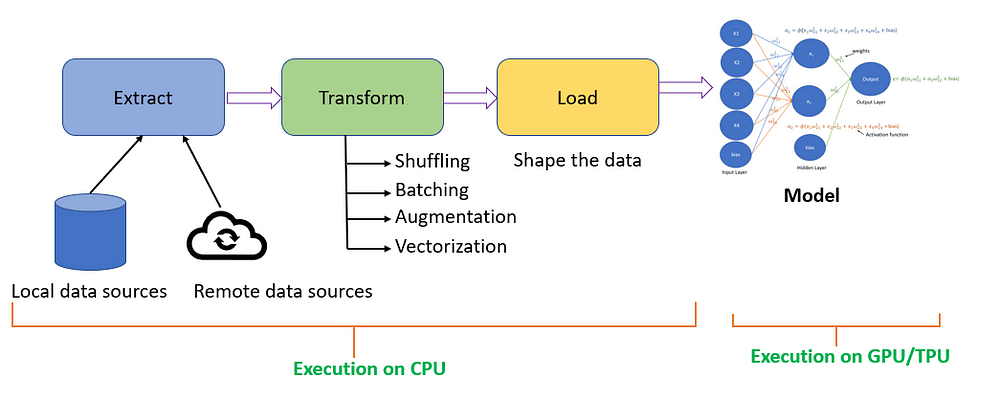

GPU Utilization: Feed data to the GPU in batches, for example with Keras data generators or a tf.data pipeline in TensorFlow 2, so the GPU stays busy without the whole dataset having to fit in memory.

By implementing these strategies, users can effectively manage RAM and GPU limitations in Colab and optimize resource usage for their ML projects.
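The batch-processing strategy can be expressed as a small generator that slices any iterable into fixed-size chunks, so only one batch is materialized at a time. This sketch uses `itertools.islice`; on Python 3.12 and later, the standard library's `itertools.batched` does the same job (yielding tuples). The batch size is a tuning assumption: pick the largest value that fits comfortably in RAM or GPU memory.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield lists of up to batch_size items; the last batch may be shorter."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Example: process 10 records 4 at a time instead of all at once.
for batch in batched(range(10), 4):
    print(batch)
```

Because `batched` accepts any iterable, it composes with a line-by-line file reader or a database cursor, keeping peak memory proportional to the batch size rather than the dataset.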

Solutions for Time-bound Execution

Colab limits execution time: each VM has a maximum lifetime, after which it is terminated automatically. To avoid losing work on long-running tasks, users can implement the following solutions:

Checkpointing: Save model checkpoints at regular intervals to ensure progress is not lost if the VM is terminated.

Job Scheduling: Divide long-running processes into smaller tasks that can be executed within the maximum VM lifetime.

Resource Monitoring: Regularly monitor resource usage and adjust the execution plan accordingly to complete time-bound tasks within the allocated time.

By employing these solutions, users can effectively manage time-bound execution in Colab and ensure the successful completion of their ML projects.
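Checkpointing and job scheduling can be combined in a simple resumable loop: it reloads saved progress, stops before a self-imposed time budget runs out, and saves state periodically. This is a minimal sketch under stated assumptions: the state is small enough to store as JSON, `train_step` is a hypothetical placeholder for real work, and the budget is a value you choose yourself, since Colab does not publish exact VM lifetimes. For real models you would save framework checkpoints, ideally to a mounted Google Drive folder, since the VM's local disk is lost when it terminates.

```python
import json
import os
import tempfile
import time


def load_checkpoint(path: str) -> dict:
    """Return saved progress, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "total": 0}


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temporary file, then atomically replace the old checkpoint,
    so an interruption during the write leaves the previous checkpoint intact."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def train_step(step: int) -> int:
    """Hypothetical placeholder for one unit of real work."""
    return step * step


def run(path: str, total_steps: int, budget_seconds: float, save_every: int = 10) -> dict:
    """Resume from the last checkpoint and work until done or the time budget is spent."""
    state = load_checkpoint(path)
    deadline = time.monotonic() + budget_seconds
    while state["step"] < total_steps and time.monotonic() < deadline:
        state["total"] += train_step(state["step"])
        state["step"] += 1
        if state["step"] % save_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)  # always persist the final position
    return state


# Demo: a zero budget does no work; a second call resumes and finishes.
ckpt = os.path.join(tempfile.mkdtemp(), "progress.json")
print(run(ckpt, total_steps=100, budget_seconds=0))   # {'step': 0, 'total': 0}
print(run(ckpt, total_steps=100, budget_seconds=60))  # {'step': 100, 'total': 328350}
```

If a session is cut off, re-running the same cell in a new session picks up from the last saved step instead of starting over.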

Alternatives and Supplements to Colab

While Colab offers a free environment for ML development, there are alternatives and supplements that users can consider for more advanced features and resource availability.

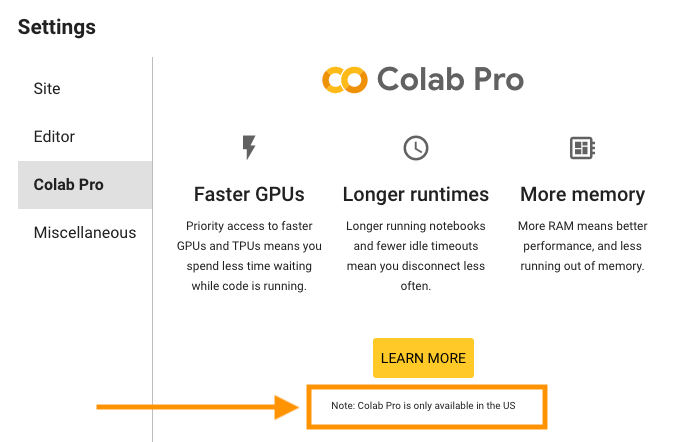

When to Consider Upgrading to Colab Pro

Colab Pro offers additional features and resources that can be beneficial for users working on more advanced ML projects. Some factors to consider when deciding to upgrade to Colab Pro include:

Increased resource availability: Colab Pro offers more powerful VMs with higher RAM and GPU options, providing better performance for resource-intensive tasks.

Longer session duration: Colab Pro extends the maximum session duration, allowing users to work on projects for extended periods without interruptions.

Background execution: With Colab Pro+, the higher paid tier, notebooks can keep running after you close the browser tab, so you can work on other tasks in the meantime.

Terminal access: Paid plans provide a terminal for running command-line operations in the runtime. (Individual shell commands can also be run from any notebook cell by prefixing them with !.)

By evaluating these factors, users can determine if upgrading to Colab Pro is suitable for their ML projects and resource requirements.

Exploring Other Platforms Similar to Colab

Apart from Colab, other platforms offer similar features for ML development. They can replace or supplement Colab, giving you different options for your ML projects. Some platforms similar to Colab include:

Jupyter Notebooks: Jupyter is a widely used open-source platform for interactive computing that offers features similar to Colab's. Because you run it on your own machine or server, you are limited by that hardware rather than by hosted usage quotas.

Kaggle: Kaggle is a popular platform for data science and machine learning competitions that provides a hosted Jupyter notebook environment with resources for ML projects.

By exploring these alternative platforms, users can find the one that best suits their needs and preferences for ML development.

Practical Tips for Long-Term Colab Users

For long-term Colab users, it is important to adopt best practices and implement effective management strategies.

Managing Multiple Sessions Effectively

Managing multiple sessions effectively is essential for long-term Colab users. By implementing proper session management techniques, users can streamline their workflow and effectively utilize Colab’s resources. Some tips for managing multiple sessions in Colab include:

Organize Notebooks: Use folders and naming conventions to keep your notebooks organized and easily accessible.

Manage Sessions: Use Runtime > Manage sessions to see your active sessions and terminate the ones you no longer need.

Back Up Notebooks: Regularly back up your notebooks and outputs so that your progress is saved and easy to access.

Use a Local Runtime: For long-running projects, consider connecting Colab to a local runtime on your own hardware, which is not subject to the hosted VM's idle timeouts or maximum lifetime.

By following these tips, long-term Colab users can effectively manage multiple sessions and optimize their workflow.
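Colab notebooks opened from Google Drive are saved there automatically, but files written to the runtime's local disk (such as model weights or logs under `/content`) disappear when the VM is recycled. The sketch below copies such files into a timestamped backup folder. It assumes Drive has already been mounted and that a folder like `/content/drive/MyDrive/backups` is where you want the copies; both paths are illustrative, and `backup_file` is a hypothetical helper.

```python
import shutil
import time
from pathlib import Path


def backup_file(src: str, backup_dir: str) -> Path:
    """Copy src into backup_dir under a timestamped name and return the new path."""
    source = Path(src)
    target_dir = Path(backup_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    destination = target_dir / f"{source.stem}-{stamp}{source.suffix}"
    shutil.copy2(source, destination)  # copy2 also preserves modification times
    return destination


# Example (paths are assumptions; adjust to your own layout):
# backup_file("/content/model.ckpt", "/content/drive/MyDrive/backups")
```

The timestamp has one-second resolution, so two backups of the same file within the same second overwrite each other; add a finer-grained suffix if you back up that often.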

Avoiding Common Pitfalls in Colab Usage

While using Colab, there are common pitfalls that users should be aware of and avoid to ensure a smooth and efficient experience. Some common pitfalls in Colab usage include:

Resource Exhaustion: Consuming too much memory or compute, which can crash the runtime or lead to unexpected termination of the VM.

Poor Code Optimization: Failing to optimize code for efficient resource usage, leading to slow execution and increased resource consumption.

Lack of Backup: Not regularly backing up notebooks, which can result in loss of progress if a session is terminated or an error occurs.

Overreliance on Free Resources: Relying solely on free resources without considering the need for additional resources or upgrading to Colab Pro.

By avoiding these common pitfalls, users can maximize their productivity and avoid unnecessary setbacks in their Colab usage.

Conclusion

In conclusion, managing usage limits in Colab is crucial for optimal performance. By understanding Colab’s computational resources and implementing strategies to monitor and manage resource consumption, you can enhance your coding experience. Optimizing Colab notebooks through efficient coding practices and leveraging lesser-known features can improve overall performance. Navigating through Colab’s restrictions, dealing with limitations, and considering alternatives like Colab Pro when needed are essential steps. Practical tips for long-term users include managing multiple sessions effectively and avoiding common pitfalls. Stay mindful of your resource usage to make the most of Colab’s capabilities.

Frequently Asked Questions

How Can I Check My Current Resource Usage in Colab?

Colab shows usage for the current runtime directly in the notebook: the RAM and disk indicator in the toolbar opens a Resources panel with memory, disk, and (if attached) GPU usage, and paid plans also show their compute-unit balance there. From a code cell, you can run !nvidia-smi to check GPU memory.

What Happens When I Reach My Usage Limit?

When you reach your usage limit in Colab, your backend may be terminated, resulting in the disconnection of your session. This termination is a measure to manage resource allocation and prevent abuse of the service.

Can I Extend My Usage Limits Without Upgrading to Pro?

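Not within the free tier itself. More resources require a paid option, such as Colab Pro, Colab Pro+, or pay-as-you-go compute units, which offer resources not available in the free version. Alternatively, you can connect Colab to a local runtime on your own hardware, which is limited by that hardware rather than by Colab's hosted quotas.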

Originally published at novita.ai

novita.ai is the one-stop platform for limitless creativity, giving you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. With cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
