Introduction to Cloud Native Buildpacks (CNB)

Meenu Yadav

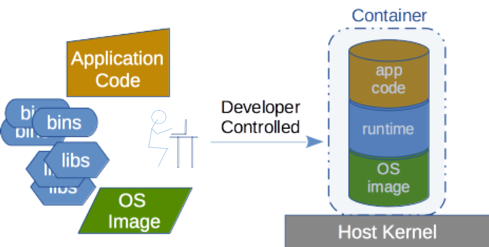

If you are working with containers, chances are you have seen a diagram like this one: a rectangular box showing an application and its libraries layered together into a container. Development teams love containers because they are a neat little package that makes applications portable. You can run a container on cloud infrastructure or on premises. Containers are also typically small, which makes them efficient in terms of resource consumption, and that is one of their distinctive strengths. Because containers are built in layers, it is easy to take an image from one environment and ship it somewhere else, or to use it as the starting point for a brand-new image. This is what makes working with containers easier and more fun for development teams.

But development teams rarely work with just one container; they might work with tens, hundreds, or even more. How much fun is it to go through each container and build it by hand? Does that save time, or is there overhead in constructing all these containers? That is what we are going to find out.

That is the simplified container diagram. In reality, a container image usually has multiple layers. It will most likely include a base layer with an operating system such as Ubuntu, plus any runtime capabilities needed to compile and run the software packaged inside the container. When development teams build a container, the objective is simple: solve a business problem with the application they have coded, and run that application in a container. It starts with writing code, and building the application involves many decisions. Which language will we use? Is this a Java app or a Ruby app? What will the architecture of the application look like?

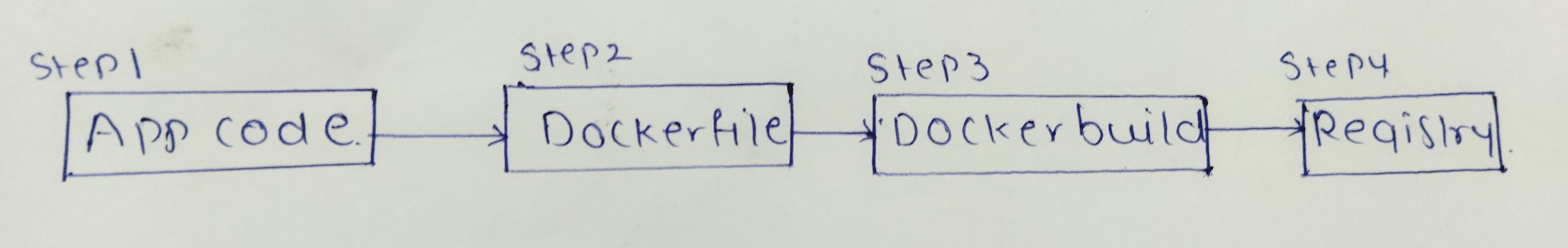

Once that is decided, the development team wants to package the application into a container, and to do so they will most likely start by writing a file called a Dockerfile. A Dockerfile is essentially a text file with instructions on how to package your application code so that it can run in a container runtime. (To learn more, read https://meenuy.hashnode.dev/dive-into-docker-a-beginners-guide-to-containerization .) In other words, the Dockerfile is a set of instructions, a template, for creating a Docker image. To write one, you first need to decide on a base image. For example, for a Node.js application, an official Node.js image (which already includes npm) could be the base image. Next, you have to figure out which libraries are needed and how to install them, and write those instructions in the Dockerfile. After that, you have to compile or build the application itself. Because containers should stay small, you also have to remove unnecessary files created by the compile or build process.
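To make those decisions concrete, here is a minimal Python sketch that assembles the text of a Dockerfile for a hypothetical Node.js app. The helper name render_dockerfile, the node:18 tag, the dist/index.js entry point, and the npm commands are all assumptions for illustration, not a recommended setup for your project.

```python
import json


def render_dockerfile(
    base_image: str,
    install_cmd: str,
    build_cmd: str,
    cleanup_cmd: str,
    start_cmd: list[str],
) -> str:
    """Assemble a Dockerfile from the decisions a team makes by hand (hypothetical helper)."""
    lines = [
        f"FROM {base_image}",  # decision 1: the base image
        "WORKDIR /app",
        "COPY . .",
        # Decisions 2-4: install libraries, build the app, then remove files that
        # are not needed at runtime. Chaining them in ONE RUN instruction means the
        # deleted files are never committed to an image layer.
        f"RUN {install_cmd} && {build_cmd} && {cleanup_cmd}",
        f"CMD {json.dumps(start_cmd)}",  # exec-form start command, e.g. ["node", "..."]
    ]
    return "\n".join(lines) + "\n"


# Hypothetical Node.js app; the tag, npm scripts, and entry point are assumptions.
dockerfile = render_dockerfile(
    base_image="node:18",
    install_cmd="npm ci",
    build_cmd="npm run build",
    cleanup_cmd="npm prune --omit=dev",
    start_cmd=["node", "dist/index.js"],
)
print(dockerfile)
```

Every parameter of this function is a choice someone has to make, write, and test for each application, and that per-app work is exactly what buildpacks aim to take over.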

Once the Dockerfile is ready, you build the Docker image. After the image is built, you push it to a registry (a service that stores and distributes container images), such as Docker Hub. Then you can start deploying containers from that image.

Now think about the entire process: writing the application code, packaging it into a container, and finally deploying it. This may seem simple for one, two, or three containers, but if you have to build tens or hundreds of containers, manually writing each Dockerfile, building it, and deploying it adds significant overhead. There is also a testing cycle involved, because you want to verify that the Dockerfile you wrote actually works. All of this adds up.

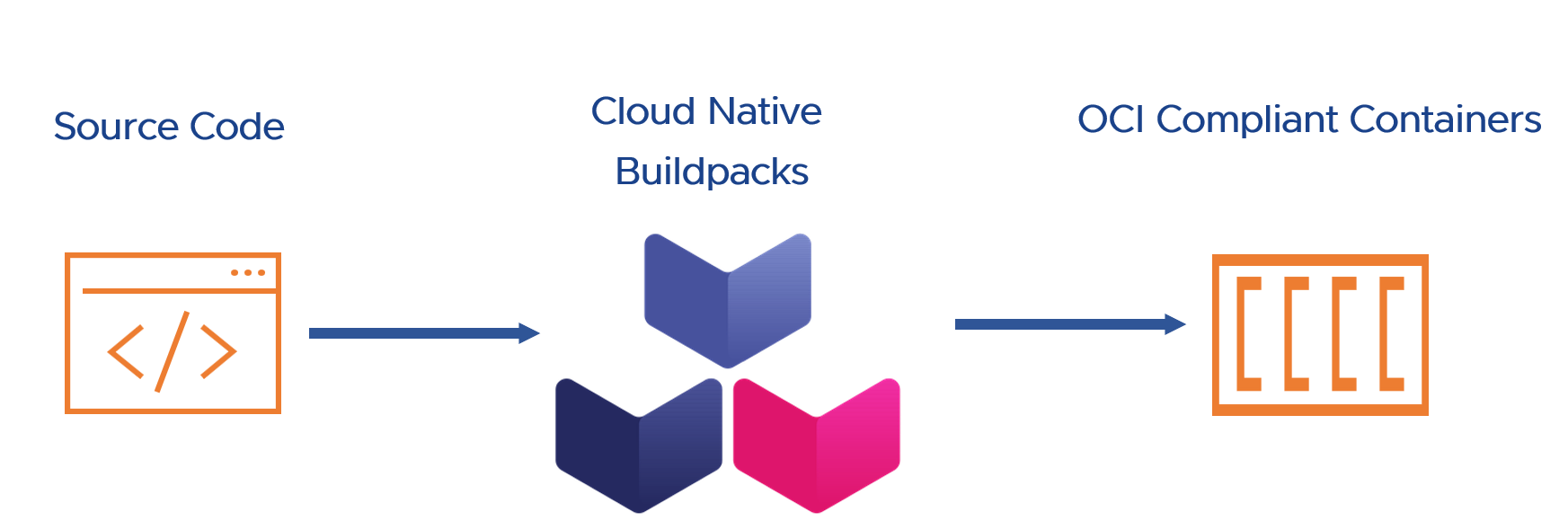

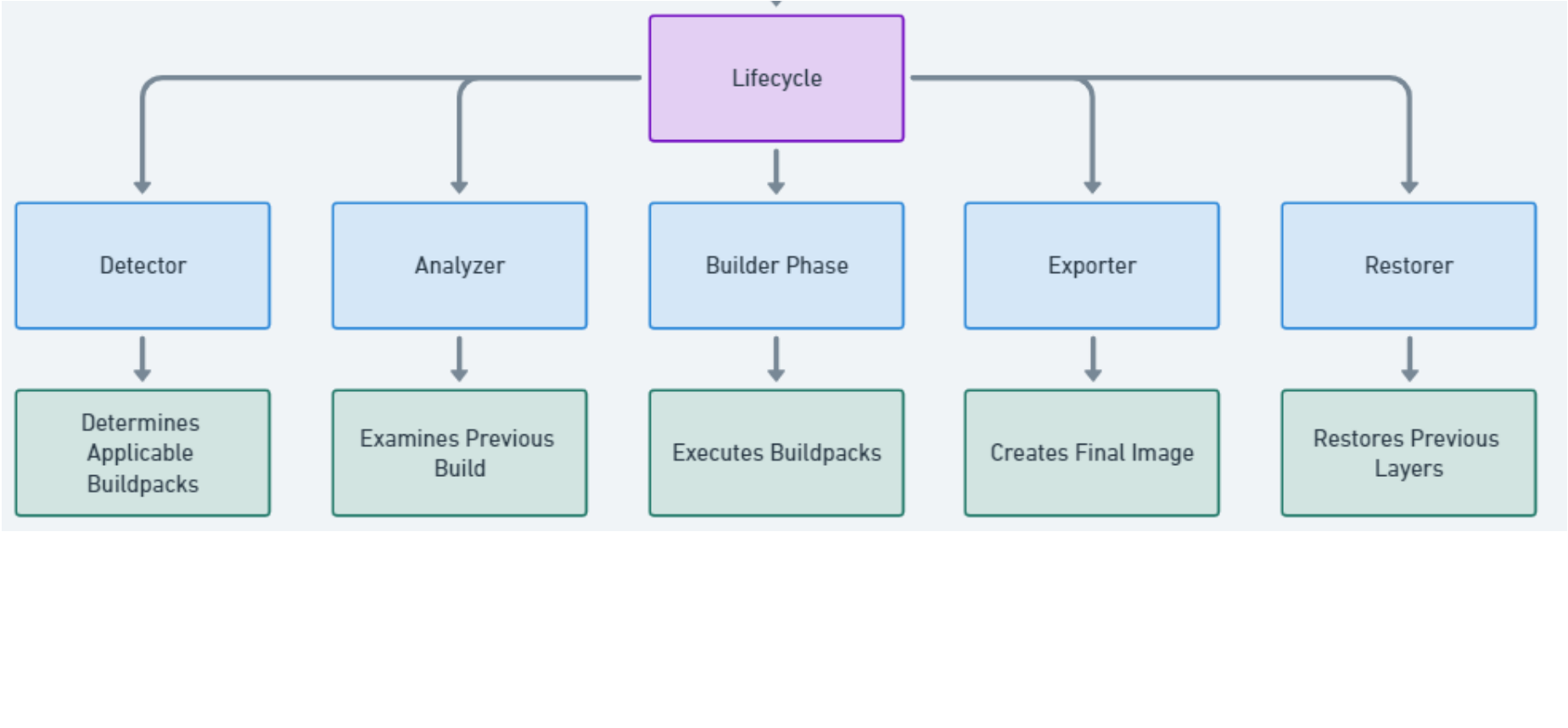

Imagine if development teams did not have to do steps two, three, and four themselves. What if there were a way to automate most of this? We can automate this entire process, and that is what Cloud Native Buildpacks help us do. Cloud Native Buildpacks is an open-source project within the Cloud Native Computing Foundation (CNCF). It defines a set of standards for automating the steps required to build a compliant container image, and tools have been built around these standards that expose this automation through a CLI or an API. The most common way to use it is pack, the command-line tool from the Cloud Native Buildpacks project. What does pack do? It examines your application code and detects what kind of application it is. For example, if it sees a pom.xml file, it understands that this is probably a Java or Spring Boot application that needs a Java buildpack. If it sees a Gemfile, it knows that this is probably a Ruby app, so a Ruby buildpack will detect and package it. There are buildpacks for many different languages, and each one knows how to build your application and package it into a layered container image, which makes life simpler for development teams. So the first thing that happens when you run pack against the directory containing your code is that it scans that directory to work out which language buildpacks are needed. Then it creates a plan for what to build and how to build it.
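The detection idea can be sketched in a few lines of Python. This is a simplified model, not how pack is implemented: in the real system each buildpack ships its own detect logic, while here a single hypothetical MARKERS table maps marker files to made-up buildpack names.

```python
import tempfile
from pathlib import Path

# Hypothetical marker-file table. Real buildpacks each implement their own
# detection; these names only illustrate the idea.
MARKERS = {
    "pom.xml": "java",
    "build.gradle": "java",
    "Gemfile": "ruby",
    "package.json": "nodejs",
    "go.mod": "go",
    "requirements.txt": "python",
}


def detect(app_dir: Path) -> list[str]:
    """Return the sorted, de-duplicated buildpacks whose marker file exists in app_dir."""
    return sorted({bp for marker, bp in MARKERS.items() if (app_dir / marker).is_file()})


# Usage: a throwaway directory that looks like a Maven (Java) project.
with tempfile.TemporaryDirectory() as tmp:
    app = Path(tmp)
    (app / "pom.xml").write_text("<project/>")
    detected = detect(app)

print(detected)
```

An app with both a package.json and a Gemfile would match two buildpacks, which is the multi-language case the next paragraph discusses.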

Your application might use more than one language, or it might need what would be multiple stages in a Dockerfile build. During planning, the buildpacks analyze the code and determine which of them should run and in what order. Once the plan has been created, the build stage begins, in which the buildpacks package your code into multiple layers. The build step follows the plan and produces the layers of the image. It also compiles the app using a build image, and the result is placed on top of a separate run image, so whatever is not needed at runtime is discarded. Finally, the result is exported as an OCI-compliant image. These are the lifecycle phases a build goes through.
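Here is a toy Python model of the plan, build, and export phases described above. It is a sketch under simplifying assumptions: the buildpack names, layer names, and the "base-os" run image are hypothetical, and the run order simply follows a fixed group list (loosely like the ordered groups a builder defines). Each layer carries a launch flag, loosely mirroring the flag CNB layers use to mark what belongs in the final image; build-only layers are dropped at export.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Layer:
    name: str
    launch: bool  # True if the layer is needed at runtime and belongs in the final image


@dataclass(frozen=True)
class Buildpack:
    name: str
    detect: Callable[[set[str]], bool]  # does this buildpack apply to the app's files?
    layers: tuple[Layer, ...]  # layers it contributes during the build


def make_plan(app_files: set[str], group: list[Buildpack]) -> list[Buildpack]:
    """Keep the buildpacks that pass detection, preserving the group's order."""
    return [bp for bp in group if bp.detect(app_files)]


def build(plan: list[Buildpack]) -> list[Layer]:
    """Run each planned buildpack in order and collect the layers it creates."""
    return [layer for bp in plan for layer in bp.layers]


def export(run_image: list[str], built: list[Layer]) -> list[str]:
    """Final image: run-image layers, then launch layers only, then the app itself."""
    return run_image + [layer.name for layer in built if layer.launch] + ["app"]


# Hypothetical buildpack group, in the order it should run.
group = [
    Buildpack("node-engine", lambda files: "package.json" in files,
              (Layer("node", launch=True),)),
    Buildpack("npm-install", lambda files: "package.json" in files,
              (Layer("node_modules", launch=True), Layer("npm-cache", launch=False))),
    Buildpack("ruby", lambda files: "Gemfile" in files,
              (Layer("ruby", launch=True),)),
]

plan = make_plan({"package.json", "index.js"}, group)
image = export(["base-os"], build(plan))
print([bp.name for bp in plan])  # ['node-engine', 'npm-install']
print(image)                     # ['base-os', 'node', 'node_modules', 'app']
```

The npm-cache layer exists during the build but never reaches the exported image, which is the "whatever is not needed will be discarded" step in miniature.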

These features are interesting because buildpacks not only automate the entire process of writing and building a Dockerfile, they also optimize the resulting image from a security perspective. There is one more buildpack feature that is just as interesting: rebasing. Let's look at how it works.

Once the image is running locally or has been deployed, it has a base layer, library layers, runtime layers, and an application layer, and you may have many such containers built with the pack CLI. This is great: you have automated everything the container needs. But suppose you find a vulnerability in one of the base layers, or an operating-system package in the base image needs to be updated. Now you have to update that container. In the traditional process, you would go back, update the Dockerfile with a new base image, and go through the entire build process again. With Cloud Native Buildpacks, you only need to run one command: `pack rebase my-app:my-tag`. Because Cloud Native Buildpacks built the image for you, it knows which layers exist within it. It swaps the old base layers for the latest patched version while keeping your application layers as they are. So you do not have to go through the entire process again; you just run one command. This is very fast, and when you need to apply a patch to your container image, it is a much more efficient and secure way to do so.
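The core idea of rebasing can be modeled in Python as replacing one group of layers while reusing the other untouched. This is only an illustration: the labels os-v1 and os-v2 are made up, and the real pack rebase works on the image's layer metadata rather than on Python objects. As the Buildpacks docs describe it, rebasing avoids a full rebuild of the app, which is why no build step appears below.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Image:
    run_layers: tuple[str, ...]  # base/OS layers that came from the run image
    app_layers: tuple[str, ...]  # layers the buildpacks produced for the app


def rebase(image: Image, new_run_layers: tuple[str, ...]) -> Image:
    """Return a new image with patched base layers; app layers are reused unchanged."""
    return replace(image, run_layers=new_run_layers)


# Hypothetical image built on an unpatched base, then rebased onto a patched one.
old = Image(run_layers=("os-v1",), app_layers=("node", "node_modules", "app"))
patched = rebase(old, ("os-v2",))
print(patched)
```

Because the app layers are carried over as-is, the cost of the patch does not depend on how long the original build took.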

Cloud Native Buildpacks are a great way to automate image creation instead of handwriting Dockerfiles, going through a trial-and-error process, and testing them again and again. In short, Cloud Native Buildpacks save you time.

Check out the official Cloud Native Buildpacks documentation to learn more. Thank you for reading! If you found this article helpful, please like, comment, and share it with your friends.

Written by

Meenu Yadav

Hello! My name is Meenu Yadav and I am a computer science student with a passion for technology. I started this blog as a way to document my learning journey and share my knowledge and insights with others who are also interested in the field of computer science.