Phi-3: The Free Local Alternative to GitHub Copilot for VS Code

Pradeep Vats

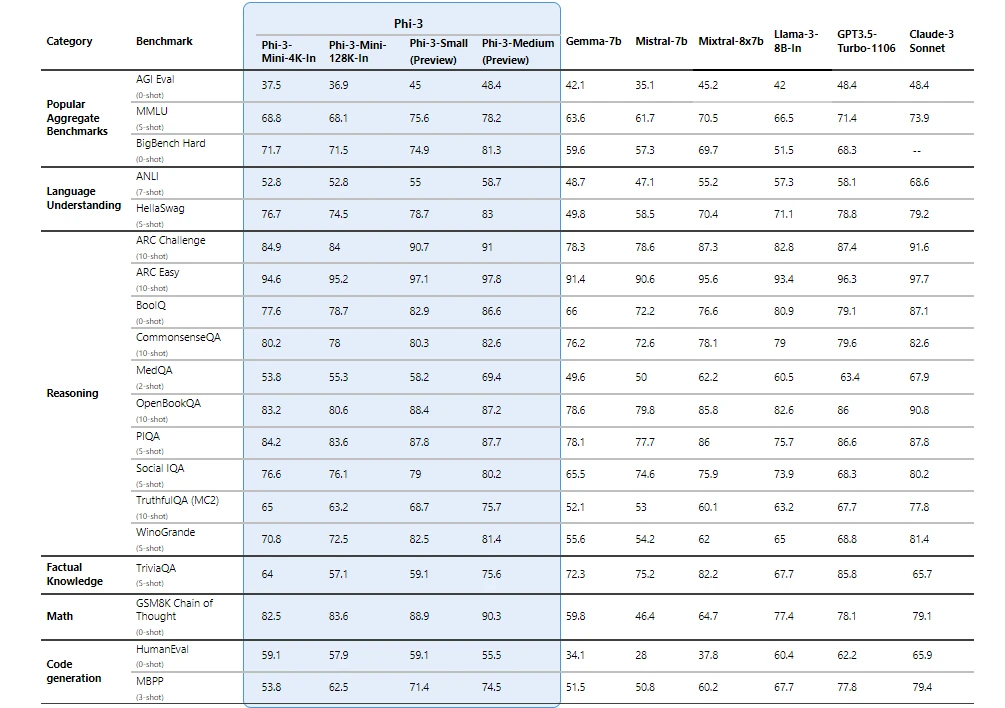

Hey coders, tired of shelling out cash for fancy code completion tools? Look no further! Today, we'll guide you through building your very own free, local AI assistant that rivals the likes of GitHub Copilot. This project leverages the power of Microsoft's new Phi-3 models, which Microsoft describes as the most capable and cost-effective small language models (SLMs) available, outperforming models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks.

Starting today, Phi-3-mini, a 3.8B language model, is available on Microsoft Azure AI Studio, Hugging Face, and Ollama.

Phi-3-mini is available in two context-length variants—4K and 128K tokens. It is the first model in its class to support a context window of up to 128K tokens, with little impact on quality.

It is instruction-tuned, meaning that it’s trained to follow different types of instructions reflecting how people normally communicate. This ensures the model is ready to use out-of-the-box.

It has been optimized for ONNX Runtime with support for Windows DirectML along with cross-platform support across graphics processing unit (GPU), CPU, and even mobile hardware.

In the coming weeks, additional models will be added to the Phi-3 family to offer customers even more flexibility across the quality-cost curve. Phi-3-small (7B) and Phi-3-medium (14B) will be available in the Azure AI model catalog and other model gardens shortly.

Microsoft continues to offer the best models across the quality-cost curve and today’s Phi-3 release expands the selection of models with state-of-the-art small models.

Building Your Local Code Hero

To construct your very own local AI assistant, we'll utilize two key tools:

Ollama: This open-source tool runs the Phi-3 model on your machine and exposes it through a local API that other tools can talk to.

Continue Dev: This handy VS Code extension integrates with Ollama, providing a user-friendly interface for interacting with Phi-3.

Setting Up Your AI Co-pilot:

Install Ollama: Go to the Ollama website (https://ollama.com/) and download the appropriate version for your operating system. Follow the installation instructions provided.

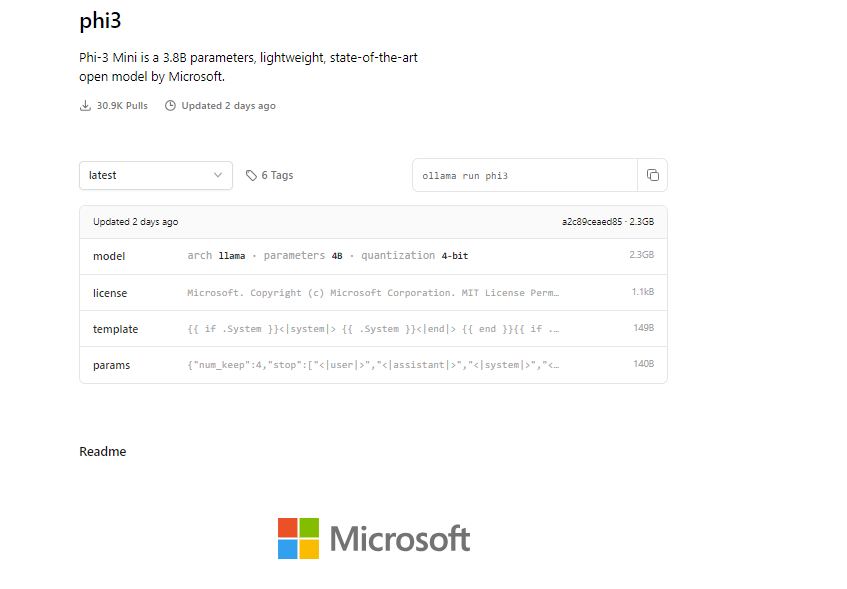

Unleash Phi-3: Once Ollama is up and running, go to Models on the Ollama website and click on phi3 (https://ollama.com/library/phi3). The model page shows the command that downloads and runs Phi-3. Copy this command, paste it into your terminal, and let the magic happen!

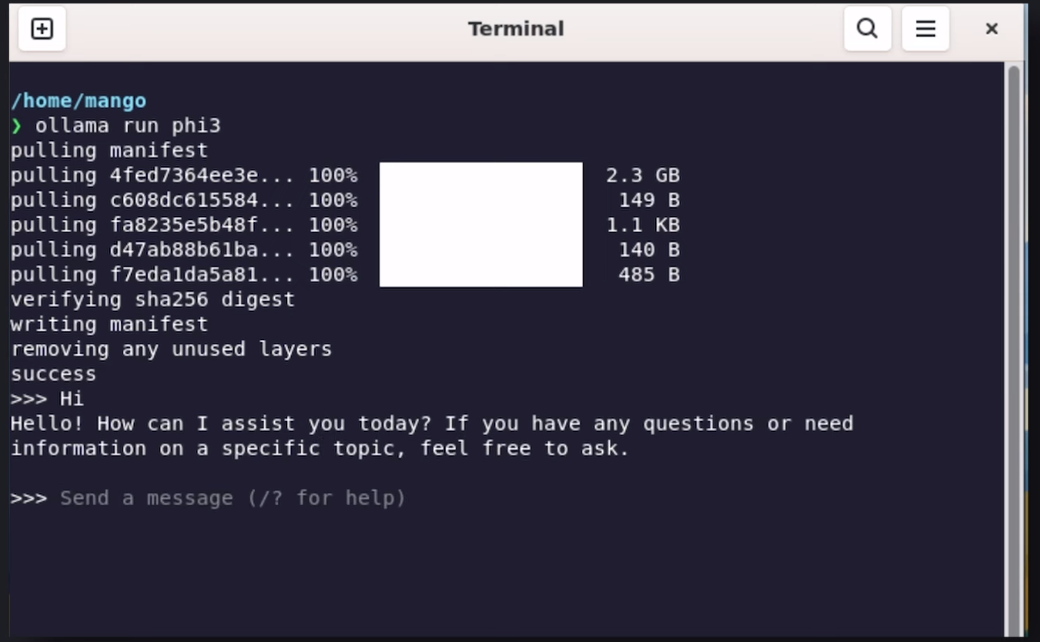

ollama run phi3

Test Your New Friend: A chat interface should appear in your terminal. Send a message to Phi-3 to confirm everything's working smoothly.

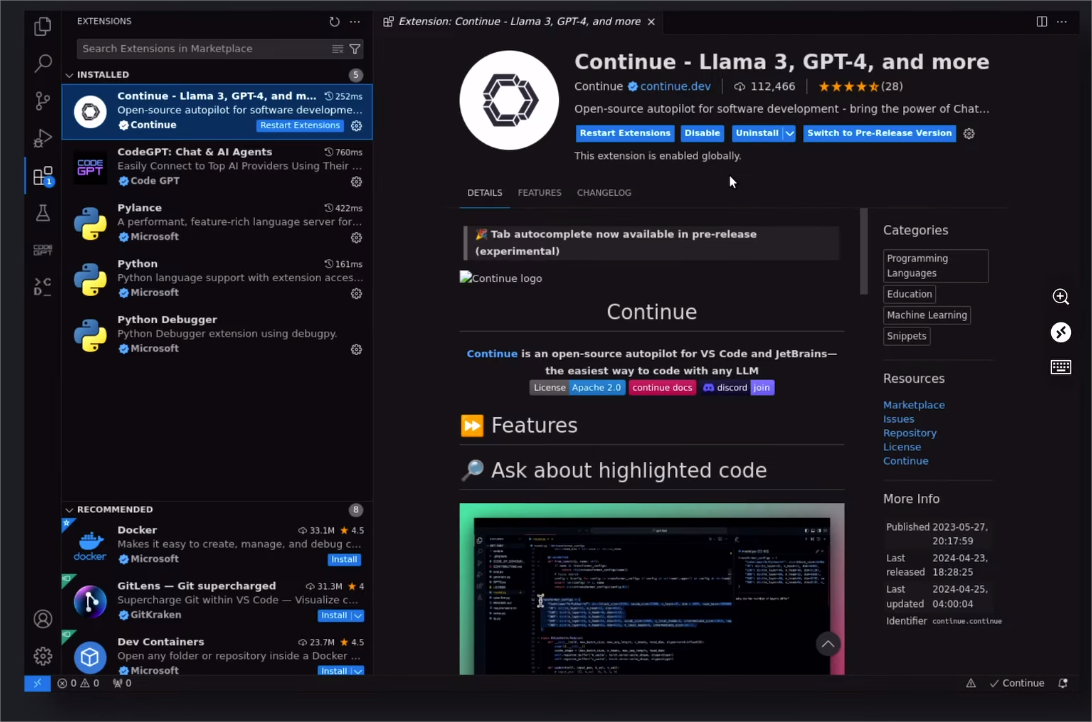

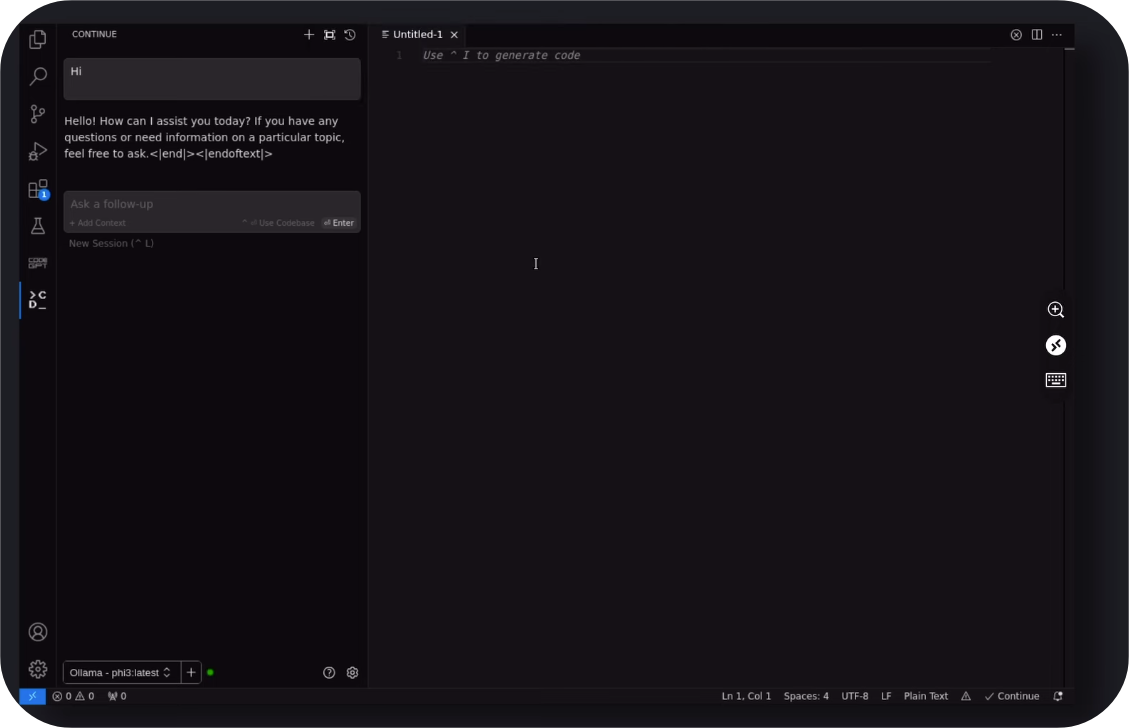
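Besides the terminal chat, Ollama also serves a local HTTP API, which is what editor extensions talk to. The snippet below is a minimal sketch of sending one prompt to Phi-3 from Python. It assumes Ollama is listening on its default address (http://localhost:11434) and that the model was pulled under the name phi3; the helper names build_request and ask_phi3 are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="phi3"):
    """Build a POST request asking for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_phi3(prompt, model="phi3", timeout=120):
    """Send the prompt to the local Ollama server and return the reply text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server answers with one JSON object
    # whose "response" field holds the generated text.
    return body["response"]
```

With Ollama running, calling ask_phi3("Write a Python function that reverses a string.") should return the model's answer as a string. If the call fails with a connection error, the server is probably not running yet.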

Welcome Continue Dev: Now that Ollama is operational, it's time to install the Continue Dev extension for VS Code. You'll find it in the VS Code marketplace.

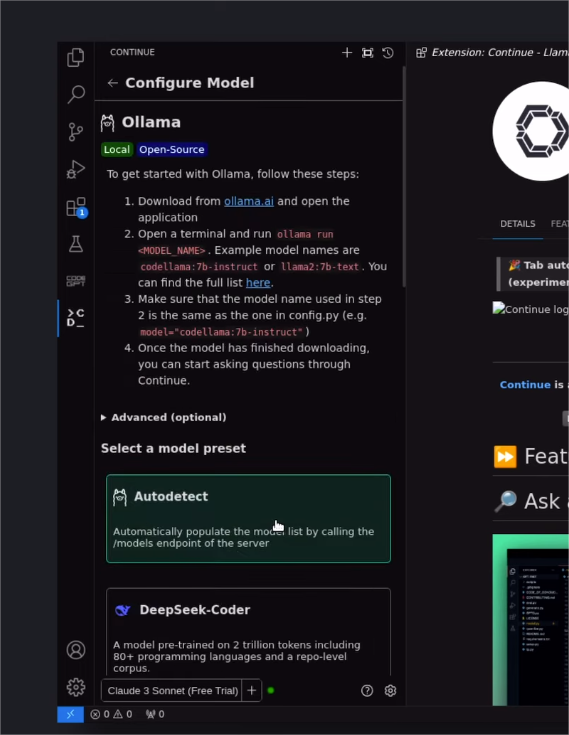

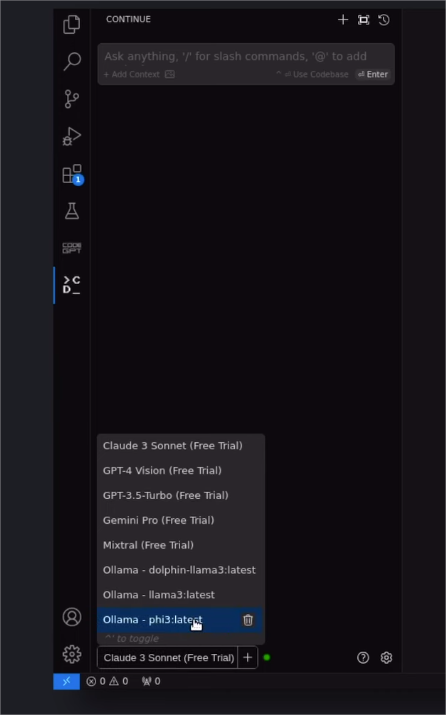

Configure Continue Dev: After installation, open the extension and locate the "Add" button. From the models dropdown, select Ollama and choose "Autodetect." This lets Continue Dev use the Phi-3 model you installed earlier.

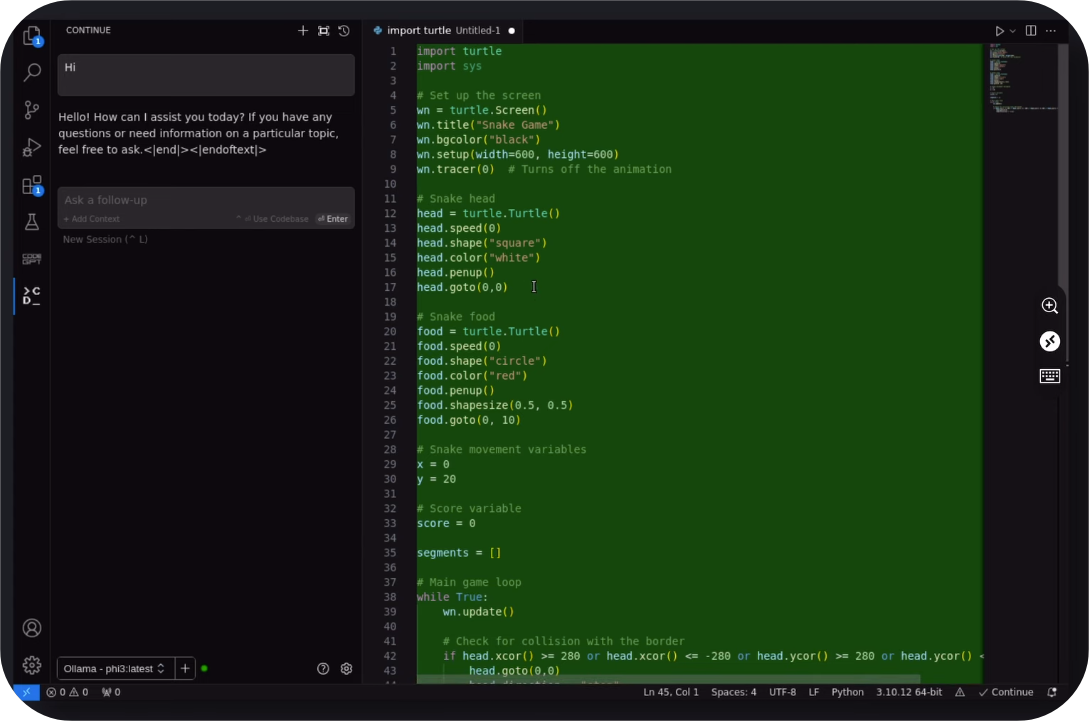
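Autodetect can only offer models that Ollama already has installed, so if Phi-3 does not show up in Continue Dev, it helps to check what the local server reports. Here is a small sketch that does this through Ollama's /api/tags endpoint. It assumes the default local address again, and the function names (fetch_tags, model_names, has_model) are illustrative, not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
TAGS_URL = "http://localhost:11434/api/tags"


def fetch_tags(timeout=10):
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(TAGS_URL, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def model_names(tags_response):
    """Extract model names such as 'phi3:latest' from the parsed response."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def has_model(tags_response, wanted="phi3"):
    """Return True if an installed model matches `wanted`, ignoring its tag."""
    return any(name.split(":")[0] == wanted for name in model_names(tags_response))
```

With the server running, has_model(fetch_tags()) should be True once the ollama run phi3 step has completed. If it is False, rerun that command before configuring the extension.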

Time to Code! You're now ready to unleash the power of Phi-3! Interact with it directly through the Continue Dev chat interface or use the magic shortcut (Control+I) within VS Code to generate code based on your prompts. Continue Dev also offers autocompletion and the ability to ask questions related to your code.

Free Your Coding Potential!

This setup provides a powerful, free alternative to paid code completion tools. Phi-3, with its local accessibility, offers a compelling option for coders on a budget or those seeking more control over their AI assistance. So, ditch the subscription fees and dive into the world of free, local AI coding with Phi-3!

Get started today

To experience Phi-3 for yourself, start by trying the model in the Azure AI Playground. You can also find the model on the HuggingChat playground. Start building with and customizing Phi-3 for your scenarios using Azure AI Studio. Join us to learn more about Phi-3 during a special live stream of the AI Show.
