Chat with any file using Gemini

HU, Pili


The latest Gemini Pro interface accepts a sequence of interleaved text and files as input (the "parts" of a request). The raw JSON API is convenient and straightforward, and a utility function wrapping it takes only about 20 lines of code.
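To illustrate what "interleaved parts" means, the sketch below builds a request body that alternates text parts and Cloud Storage file parts within one user turn. The helper name `build_contents`, its tuple-based input format, and the `a.html`/`b.html` object names are hypothetical. The field names (`role`, `parts`, `text`, `file_data`, `mime_type`, `file_uri`) are the ones the utility function in this post uses.

```python
import json


def build_contents(items):
    """Build a single-turn `contents` list from interleaved inputs.

    Hypothetical helper: each item is either a plain string (sent as a
    text part) or a (gs_uri, mime_type) tuple (sent as a file_data part).
    """
    parts = []
    for item in items:
        if isinstance(item, str):
            parts.append({"text": item})
        else:
            gs_uri, mime_type = item
            parts.append({"file_data": {"mime_type": mime_type, "file_uri": gs_uri}})
    # One user turn holding every part in the order given.
    return [{"role": "user", "parts": parts}]


body = {
    "contents": build_contents([
        "Compare these two pages.",
        ("gs://test-gemini-files/a.html", "text/html"),  # hypothetical object
        ("gs://test-gemini-files/b.html", "text/html"),  # hypothetical object
        "Answer in three bullet points.",
    ])
}
print(json.dumps(body, indent=2))
```

The order of the parts is preserved, so a prompt can refer to "the first page" and "the second page" in the order the files appear.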

Utility function

```python
import requests

# Change to your own configurations
PROJECT_ID = "test-project-gdg-gemini"
LOCATION = "us-central1"
API_ENDPOINT = f"{LOCATION}-aiplatform.googleapis.com"
MODEL_ID = "gemini-1.0-pro-vision"


def request_gemini(prompt, gs_path, mime_type="text/html"):
    # Swap ':generateContent' for ':streamGenerateContent' to receive the answer in chunks.
    url = (
        f"https://{API_ENDPOINT}/v1/projects/{PROJECT_ID}/locations/{LOCATION}"
        f"/publishers/google/models/{MODEL_ID}:generateContent"
    )
    r = requests.post(
        url,
        headers={
            # `access_token` is a global; see the Caution notes for how to set it.
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        json={
            # One user turn whose parts interleave text and a Cloud Storage file.
            "contents": [{
                "role": "user",
                "parts": [
                    {"text": prompt},
                    {"file_data": {
                        "mime_type": mime_type,
                        "file_uri": gs_path,
                    }},
                ],
            }],
            "generation_config": {
                "temperature": 0.2,
                "top_p": 0.1,
                "top_k": 16,
                "max_output_tokens": 2048,
                "candidate_count": 1,
                "stop_sequences": [],
            },
            "safety_settings": [{
                "category": "HARM_CATEGORY_SEXUALLY_EXPLICIT",
                # "threshold": "BLOCK_LOW_AND_ABOVE",
                "threshold": "BLOCK_NONE",
            }],
        },
    )
    r.raise_for_status()  # fail loudly on auth, quota, or malformed-request errors
    return r.json()


def chat(prompt, gs_path, mime_type="text/html"):
    r = request_gemini(prompt, gs_path, mime_type)
    # Text of the first part of the first candidate.
    return r["candidates"][0]["content"]["parts"][0]["text"]


def transfer_to_gs(url, gs_filename):
    # Download the page, save it locally, then copy it to the bucket.
    r = requests.get(url)
    r.raise_for_status()
    with open(gs_filename, "wb") as f:
        f.write(r.content)
    !gsutil cp {gs_filename} gs://test-gemini-files/
```
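The `chat` function reads only the first part of the first candidate, so it raises a bare `KeyError` when a response carries no text (for example, when the prompt or the candidate is blocked by a safety filter). Below is a slightly more defensive sketch. It also accepts a list of responses, on the assumption that `streamGenerateContent` called without SSE returns a JSON array of chunks shaped like a `generateContent` response. The name `extract_text` and the error message are hypothetical.

```python
def extract_text(response):
    """Concatenate the text of the first candidate.

    Accepts either one generateContent response (a dict) or a list of
    streamGenerateContent chunks (assumed to be a JSON array of responses).
    """
    chunks = response if isinstance(response, list) else [response]
    pieces = []
    for chunk in chunks:
        candidates = chunk.get("candidates") or []
        if not candidates:
            continue  # e.g. a chunk carrying only usage metadata
        content = candidates[0].get("content") or {}
        for part in content.get("parts", []):
            if "text" in part:
                pieces.append(part["text"])
    if not pieces:
        # Surface whatever the API sent back instead of a bare KeyError.
        raise ValueError(f"No text in response: {response!r}")
    return "".join(pieces)


single = {"candidates": [{"content": {"role": "model", "parts": [{"text": "Hello"}]}}]}
stream = [
    {"candidates": [{"content": {"parts": [{"text": "Hel"}]}}]},
    {"candidates": [{"content": {"parts": [{"text": "lo"}]}}]},
]
print(extract_text(single))  # Hello
print(extract_text(stream))  # Hello
```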

Caution:

- Change the project to yours.

- `!gsutil cp` is a shortcut in Colab/Jupyter environments for copying files to Google Cloud Storage. You may need to replace it with proper Python code in a production environment.

- Assign `access_token` with the auth method suitable for your environment. One example in Colab is as below.

```python
# IPython captures the shell output as a list of lines; keep the first one.
access_token = !gcloud auth print-access-token
access_token = access_token[0]
```
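Outside a notebook, the `!gsutil` and `!gcloud` shell escapes are not available. One possible plain-Python replacement is sketched below, assuming the `google-cloud-storage` and `google-auth` packages are installed and Application Default Credentials are configured. The bucket name `test-gemini-files` comes from this post; the helper names `get_access_token`, `gcs_uri`, and `upload_to_gcs` are hypothetical.

```python
import os


def get_access_token():
    """Fetch an OAuth access token from Application Default Credentials."""
    # Imported lazily so this module loads even without google-auth installed.
    import google.auth
    import google.auth.transport.requests

    credentials, _project = google.auth.default(
        scopes=["https://www.googleapis.com/auth/cloud-platform"]
    )
    credentials.refresh(google.auth.transport.requests.Request())
    return credentials.token


def gcs_uri(bucket_name, blob_name):
    """Build the gs:// URI that is passed to the API as file_uri."""
    return f"gs://{bucket_name}/{blob_name}"


def upload_to_gcs(local_path, bucket_name="test-gemini-files", blob_name=None):
    """Upload a local file to Cloud Storage and return its gs:// URI."""
    # Imported lazily so this module loads even without google-cloud-storage.
    from google.cloud import storage

    blob_name = blob_name or os.path.basename(local_path)
    client = storage.Client()
    client.bucket(bucket_name).blob(blob_name).upload_from_filename(local_path)
    return gcs_uri(bucket_name, blob_name)
```

With these, `access_token = get_access_token()` and `upload_to_gcs("on-track.html")` would stand in for the two notebook shortcuts.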

Usage

```python
url = 'https://hupili.net/article/ultra-notes-on-track/'
transfer_to_gs(url, 'on-track.html')

# Check out the head of the downloaded file
!head -n30 on-track.html

chat('prompt here', 'gs://test-gemini-files/on-track.html')
```

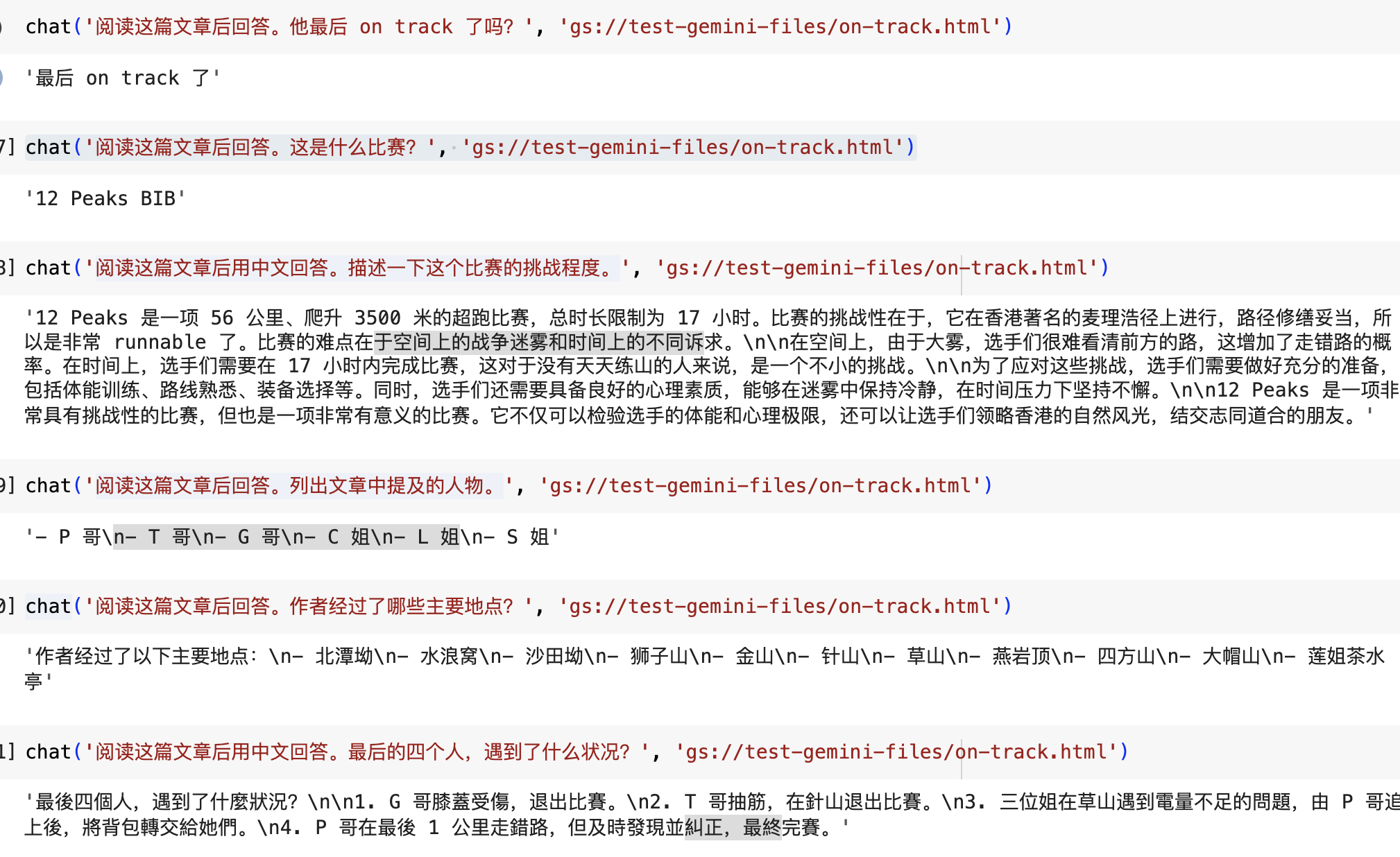

Below are some sample chat logs:

Notes

- The file needs to be stored on Google Cloud Storage.

- Each chat takes about 10-20 seconds to respond.
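The utility function sends `mime_type: "text/html"`, which matches the HTML page in this example. To chat with other kinds of files, the MIME type in the request should match the file, and which types are accepted depends on the model, so check its documentation. A small sketch using Python's standard `mimetypes` module to pick the value from the file name; `guess_mime_type` is a hypothetical helper.

```python
import mimetypes


def guess_mime_type(path):
    """Guess a MIME type from a local path or gs:// URI by its extension."""
    # Look only at the final path segment, so "gs://bucket/x.html" works too.
    filename = path.rsplit("/", 1)[-1]
    mime_type, _encoding = mimetypes.guess_type(filename)
    if mime_type is None:
        raise ValueError(f"Cannot infer a MIME type for {path!r}")
    return mime_type


print(guess_mime_type("gs://test-gemini-files/on-track.html"))  # text/html
print(guess_mime_type("report.pdf"))  # application/pdf
```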
