Explained: Proxy, Reverse Proxy, Forward Proxy, Load Balancer, and API Gateway Differences

Riham Fayez

Load balancers, forward and reverse proxies, and API gateways are key components for handling network traffic and improving the performance of web applications.

Choosing the right solution depends on your specific use case, scalability needs, performance requirements, security considerations, integration capabilities, and cost factors.

Architects and developers who want to build scalable, secure, and high-performance systems need to understand what each of these components does and where it sits in the request path.

What is a Proxy?

In the dictionary, a proxy is a person authorized to act on behalf of another.

Imagine a proxy server as a middle-person or messenger that assists when you need to communicate with someone else, similar to sending a letter through a friend.

A proxy is a server that acts as a middleman between clients (like web browsers or applications) and servers (your backend). It facilitates their communication by offering functions such as security, caching, load balancing, or anonymity.

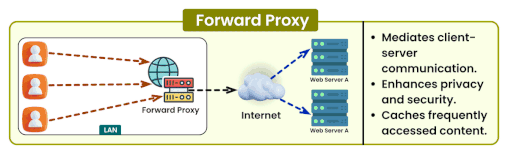

What is a Forward Proxy?

A forward proxy intercepts requests from clients (e.g., computers, smartphones), forwards them to destination servers on the internet (e.g., web servers, external services), and then forwards the responses back to the clients.

It is positioned on the client side of the network, serving as a gateway for client devices to access resources on the internet.

How does it work?

Client Request: When a client (e.g., a web browser) makes a request to access a resource on the internet, it sends the request to the forward proxy server instead of directly connecting to the target server.

Proxy Server Handling: The forward proxy server receives the client's request and forwards it on behalf of the client to the target server.

Response Relay: The target server processes the request and sends the response back to the forward proxy server.

Client Response: The forward proxy server, upon receiving the response, forwards it to the client.
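From the client's side, the four steps above mostly come down to configuration: the client is told to send its requests to the proxy rather than to the target server. The following is a minimal sketch using Python's standard `urllib.request`; the proxy address `proxy.internal.example:3128` and the helper names are assumptions made up for illustration.

```python
import urllib.request

# Hypothetical forward proxy address; replace it with your own proxy.
PROXY_URL = "http://proxy.internal.example:3128"


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests via the forward proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_via_proxy(url: str, proxy_url: str = PROXY_URL) -> bytes:
    """Fetch a URL through the proxy; the target server sees the proxy, not the client."""
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=10) as response:
        return response.read()
```

Calling `fetch_via_proxy("http://example.com/")` only succeeds if a proxy is actually listening at the configured address.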

Benefits:

Improved Performance: It may cache responses to improve performance.

Anonymity: It provides anonymity for clients by masking their IP addresses from the internet.

Content Filtering: It is commonly used for content filtering, access control, and monitoring, especially in corporate networks.

Example:

In a corporate network, employees might use a forward proxy to access the internet. The proxy can filter content, block certain websites, and provide additional security.
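The filtering decision in this example can be sketched as a simple domain blocklist check. This is an illustration only: the blocked domains and the `is_allowed` helper are hypothetical, and real proxies usually apply much richer policies (categories, users, time of day).

```python
from urllib.parse import urlsplit

# Hypothetical company policy: domains employees may not visit.
BLOCKED_DOMAINS = {"social.example", "games.example"}


def is_allowed(url: str, blocked=BLOCKED_DOMAINS) -> bool:
    """Return False if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    return not any(host == d or host.endswith("." + d) for d in blocked)
```

A proxy using this check would forward the request when `is_allowed` returns `True` and answer with an error page otherwise.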

Use cases:

Enhancing privacy

Access control and content filtering

Monitor web activity

Log clients' requests and responses

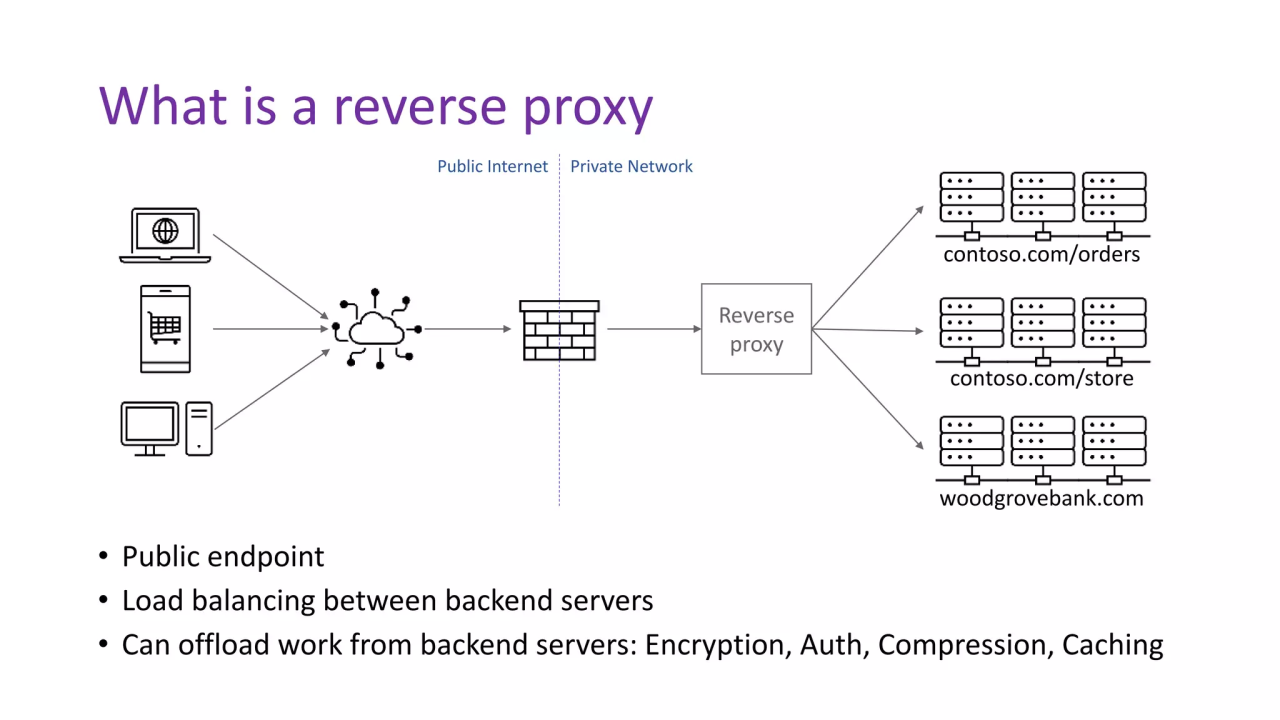

What is a Reverse Proxy?

A reverse proxy sits in front of web servers, acts as an intermediary, and forwards client requests to the appropriate server.

It is used to control and direct traffic from clients to servers, balance load, terminate SSL/TLS, and more. It is a layer that sits between the client's request and the backend servers.

It is also sometimes described as an application-level gateway, because it operates on application-layer traffic such as HTTP.

How does it work?

Client Request: A client sends a request to access a resource on a particular domain.

Reverse Proxy Routing: The reverse proxy server receives the client's request and determines which backend server should handle the request based on predefined rules.

Request Forwarding: The reverse proxy forwards the client's request to the selected backend server.

Backend Server Response: The backend server processes the request and sends the response back to the reverse proxy.

Response to Client: The reverse proxy then relays the response to the client.
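The routing step is where the "predefined rules" come in. One common style of rule is a path prefix, with the longest matching prefix winning. The sketch below assumes that style; the route table, backend addresses, and function names are all hypothetical.

```python
# Hypothetical routing rules: path prefix -> backend base URL.
ROUTES = {
    "/api/": "http://10.0.0.11:8080",
    "/static/": "http://10.0.0.12:8080",
    "/": "http://10.0.0.10:8080",  # catch-all
}


def choose_backend(path: str, routes=ROUTES) -> str:
    """Pick the backend whose prefix is the longest match for the request path."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no route for {path!r}")
    return routes[max(matches, key=len)]


def upstream_url(path: str, routes=ROUTES) -> str:
    """Build the URL the proxy would request from the selected backend."""
    return choose_backend(path, routes).rstrip("/") + path
```

The client only ever sees the proxy's address; the backend addresses in the table stay internal.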

Options:

Nginx, Apache HTTP Server (when configured as a reverse proxy), HAProxy, etc.

Use Cases:

Server-Side Functionality: Clients are often unaware of the presence of a reverse proxy. They send requests to the reverse proxy, which then distributes those requests to backend servers.

Web Application Security:

Reverse proxies act as a protective shield for backend servers by intercepting and filtering incoming requests. They can provide security measures such as filtering malicious traffic, mitigating DDoS attacks, running a Web Application Firewall (WAF), and implementing access controls.

Reverse proxies enhance security by hiding the internal structure of the network and providing an additional layer of protection against direct attacks on backend servers.

Example:

A website might use a reverse proxy to distribute incoming web traffic across multiple servers, achieving load balancing and ensuring high availability.

Caching and Content Delivery:

Reverse proxies can cache frequently requested static and dynamic content, reducing the load on backend servers and improving response times for subsequent requests. This is particularly useful for websites with high traffic, provided cache lifetimes are kept short enough for content that is updated frequently.
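A minimal sketch of the caching idea, assuming a simple time-to-live (TTL) policy: a response is served from memory until it expires, and only then is the backend asked again. The `TTLCache` class and its parameters are hypothetical; production proxies also honor HTTP cache headers and bound the cache size.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Cache response bodies per key for a fixed number of seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the behavior can be tested without sleeping
        self._store: Dict[str, Tuple[float, bytes]] = {}

    def get_or_fetch(self, key: str, fetch: Callable[[], bytes]) -> bytes:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # cache hit: the backend is not contacted
        body = fetch()  # cache miss or expired entry: ask the backend
        self._store[key] = (now + self.ttl, body)
        return body
```

Here `key` would typically be the request path (plus any headers that change the response), and `fetch` the call that forwards the request to the backend.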

SSL Termination:

Reverse proxies can handle SSL/TLS encryption and decryption, offloading this work from the backend servers. This simplifies server configuration and reduces the computational load on the backends, improving performance.

Load Balancing:

One of the primary functions of a reverse proxy is load balancing. It distributes incoming client requests across multiple backend servers to ensure optimal resource utilization.
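One way to aim for even resource utilization is a least-connections policy: each new request goes to the backend that currently has the fewest requests in flight. The sketch below is one possible illustration; the class and method names are hypothetical.

```python
class LeastConnections:
    """Send each request to the backend with the fewest active requests."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # Ties are broken by the order in which servers were listed.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call this when the backend has finished handling the request.
        self.active[server] -= 1
```

This policy tends to suit workloads where request durations vary a lot, because a slow request keeps its backend "busy" in the counter until it completes.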

Benefits:

If you're managing a website and you want to improve its security, manage traffic efficiently, or provide a faster experience to visitors by caching content, a reverse proxy is helpful.

Enhanced Security: Reverse proxies offer an extra layer of security by protecting backend servers from direct internet exposure. They have the ability to filter and block harmful requests, stopping attacks and unauthorized access.

Improved Performance: By caching and delivering content, reverse proxies reduce the load on backend servers, resulting in faster response times and improved overall performance for users.

Flexibility in Application Deployment: Reverse proxies enable organizations to deploy multiple web applications on the same server or server farm. They can route requests based on URL patterns or domain names, allowing for efficient hosting of multiple applications on a single infrastructure.

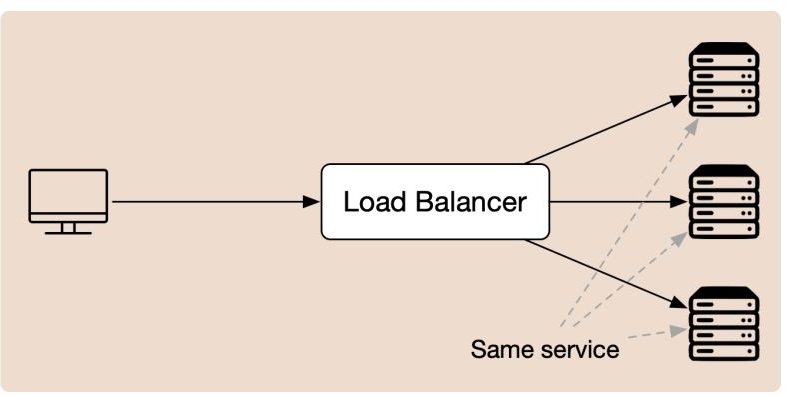

What is a Load Balancer?

A load balancer is a device or software application that distributes incoming network traffic across multiple servers. The primary goal is to ensure no single server bears an overwhelming share of the load, thus preventing server overload and improving responsiveness.

Load balancers use various algorithms to determine which server should handle each request, considering factors like server health, available resources, and session persistence.
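As an illustration of one such algorithm, here is a minimal round-robin sketch that also takes server health into account. It assumes some external health check calls `mark_down` and `mark_up`; the class and method names are hypothetical.

```python
class RoundRobinBalancer:
    """Cycle through servers in order, skipping any that are marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        if server in self.servers:
            self.healthy.add(server)

    def pick(self) -> str:
        # Try each server at most once per call, starting where we left off.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

Session persistence could be layered on top, for example by remembering which server a given client was last sent to, but that is left out here to keep the sketch short.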

Benefits:

Improved Performance: Load balancers optimize resource utilization by evenly distributing traffic, leading to improved response times and better overall performance of web applications.

Scalability: Load balancers allow for seamless horizontal scaling by adding more servers to the server farm. This helps accommodate increased traffic and ensures that the application can handle growing user demands.

Enhanced Reliability: With load balancers, the risk of application downtime due to server failures is minimized. Load balancers detect and redirect traffic away from failed servers, ensuring continuous availability and a reliable user experience.

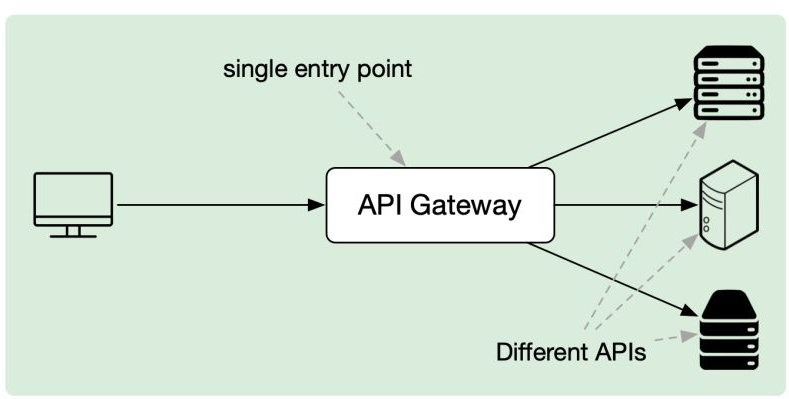

What is an API Gateway?

An API gateway is an entry point for clients to access backend services or APIs.

It acts as a single point of entry, abstracting the underlying architecture and providing a unified interface for clients. As an API front end, it receives API requests, enforces throttling and security policies, passes requests to the backends, and then passes the responses back to the requester.

API gateways offer various functionalities like request routing, protocol translation, authentication, and rate limiting. They enable organizations to manage and secure their APIs effectively.
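Rate limiting is often implemented with a token bucket, although gateways differ in the algorithm they use. The sketch below assumes that approach: tokens refill at a steady rate up to a maximum, and each request spends one. The `TokenBucket` class and its parameters are hypothetical.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the behavior can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill according to the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the gateway would typically answer with HTTP 429 here
```

A gateway would usually keep one bucket per client, for example in a dictionary keyed by API key.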

It is an important concept in microservice architecture: it forms the entry point for external clients or anything else that is not part of your core microservice system, providing centralized management and control over a set of microservices.
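To make the "single entry point" idea concrete, here is a small sketch of a gateway that first checks an API key and then maps the request path to an internal microservice. The service table, the API key, and the `handle` function are all hypothetical, and real gateways forward the request rather than just returning the target URL.

```python
from typing import Optional, Tuple

# Hypothetical internal services, hidden behind the gateway.
SERVICES = {
    "/orders": "http://orders.internal:8000",
    "/users": "http://users.internal:8000",
}

# Hypothetical set of valid API keys.
API_KEYS = {"demo-key-123"}


def handle(path: str, api_key: Optional[str]) -> Tuple[int, str]:
    """Return (status, target URL or error message) for an incoming request."""
    if api_key not in API_KEYS:
        return 401, "missing or invalid API key"
    for prefix, base_url in SERVICES.items():
        if path == prefix or path.startswith(prefix + "/"):
            return 200, base_url + path
    return 404, "no service registered for this path"
```

Because authentication happens once at the gateway, the individual services do not each need to validate API keys from external clients.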

Benefits:

Simplified API Integration: API gateways offer a unified interface for clients to access multiple backend services or APIs.

Scalability and Performance: API gateways can handle high volumes of API traffic by distributing requests across multiple backend servers. They provide features like request throttling and caching to improve performance and handle spikes in demand.

Analytics and Monitoring: API gateways often include analytics and monitoring capabilities, allowing organizations to track API usage, monitor performance metrics, and gain insights.

Options:

Zuul (the open-source API gateway from Netflix's microservice stack), offerings from NGINX, AWS API Gateway, etc.

Disadvantages of the API gateway pattern:

You've added a network hop here, so things are going to be a bit slower. There's not much you can do about it since the pattern kind of requires it.

An API gateway serves as a single entry point for your entire system, so it can become a single point of failure: if the only gateway instance fails, clients can no longer reach anything behind it. To prevent this, you can run multiple API gateway instances and distribute incoming calls among them using tools like load balancers and elastic IPs.
