Why AI models prefer GPUs to CPUs

Retzam Tarle

print("Why AI models prefer GPUs to CPUs")

Given the recent explosion in the value of GPU chip makers like NVIDIA (with a market cap of over $2 trillion 🤑), I have been wondering why GPUs are preferred over CPUs for AI model development, so I went to find out 👽.

Note: By AI models here I primarily mean Deep Learning models, which use Neural Networks.

So first what are CPUs and GPUs?

A Central Processing Unit (CPU) is a type of processor that the computer uses to perform tasks. It is a general-purpose processor suited to performing tasks sequentially, that is, one after the other. The CPU is responsible for executing instructions from programs and managing tasks such as running applications, handling input/output operations, and managing memory.

The Graphics Processing Unit (GPU), on the other hand, is also a type of processor that the computer uses to perform tasks. However, it is a specialized processor suited to performing specific types of tasks in parallel, that is, handling many tasks at the same time. As the name suggests, GPUs were originally designed to accelerate graphics rendering in computers, particularly 3D graphics in video games. The GPU is the main component of the graphics card in a computer. Gamers 🎮 would be familiar with GPUs, I am sure 🙂. To understand GPUs indepthly (another big word lol) you can watch this.

Now that we know what CPUs and GPUs are, why do we use both in a computer?

CPUs and GPUs are both used in a computer because they complement each other and excel at different types of tasks. GPUs are faster than CPUs at some tasks because they work on many pieces of a task simultaneously, but remember they are specialized, so that advantage only applies to specific types of tasks. CPUs are fast as well, just not as fast as GPUs at those highly parallel tasks, because they mostly work through instructions in sequence. In exchange, they can handle all types of tasks; think of the CPU as a jack of all trades. Also remember, the core function of a GPU is to render graphics, which frees the CPU to focus on other tasks.

Consider having 10 different math problems to solve, where each is independent of the others. You can give these to 10 students to solve at the same time, which would take about one-tenth of the time it would take one student. The key thing to note is that these problems can be solved in parallel, that is, simultaneously, because each problem is independent of the others. This illustrates the type of problem GPUs are suited for. This type of operation can be seen in matrix calculations.
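Here is a minimal sketch of the ten-students idea in Python. The `solve` function and the list of "problems" are made up for illustration, and Python threads are only standing in for the students: this shows the shape of an independent, parallel-friendly workload, not a real GPU speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def solve(problem):
    """A stand-in 'math problem' (hypothetical): square one number."""
    return problem * problem


problems = list(range(1, 11))  # 10 independent problems

# One student working through the problems one after the other.
sequential_answers = [solve(p) for p in problems]

# Ten students, each handed one problem at the same time.
# No problem needs another problem's answer, so the order of work does not matter.
with ThreadPoolExecutor(max_workers=10) as students:
    parallel_answers = list(students.map(solve, problems))

print(sequential_answers == parallel_answers)
```

Both approaches give the same answers because no problem ever has to wait for another one.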

Consider a second scenario with 10 math problems to solve, where each problem depends on the solution of the previous one. In this case, giving the problems to 10 students would not save you any time, as each student needs to wait for the solution from the student before them. You might as well give all the problems to one student, right? Yes. This illustrates the type of problem CPUs are suited for. This type of operation can be seen in sequential, scalar calculations, where each step needs the result of the one before it.
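The dependent case can be sketched the same way. The `next_step` function below is a made-up example of a problem that needs the previous answer; any calculation with that kind of dependency would behave similarly.

```python
def next_step(previous_answer, problem):
    """A stand-in problem (hypothetical) that cannot start without the previous answer."""
    return previous_answer * 2 + problem


problems = list(range(1, 11))  # 10 problems forming a chain

answer = 0
for problem in problems:
    # Each iteration has to wait for the one before it to finish,
    # so extra students (or cores) would just sit idle.
    answer = next_step(answer, problem)

print(answer)
```

There is nothing to hand out here: problem 2 cannot begin until problem 1 is done, so one fast worker is the best you can do.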

Now we should not forget that CPUs can have multiple cores, which means you can run tasks in parallel with CPUs too. A typical consumer CPU has somewhere between 4 and 16 cores, and server CPUs can reach 64 cores or more, which is impressive, but compared to GPUs that is a drop in the ocean. GPUs have hundreds to thousands of cores all running in parallel. For example, NVIDIA's RTX 4090 GPU has 16,384 CUDA cores. Each of those cores is much simpler than a CPU core, but for highly parallel work the sheer number of them wins. To understand GPU architecture better you can watch this.

So why do AI models prefer GPUs to CPUs?

We've built up to this moment. The answer is simple now. AI models (Deep Learning models) prefer GPUs to CPUs because training them mostly comes down to matrix calculations, and matrix calculations can be run in parallel, which suits GPUs 😊.
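To see why matrix calculations parallelize so well, here is a small pure-Python sketch of a matrix multiplication. The matrices and the `cell` helper are made up for illustration. The point is that every cell of the result can be computed on its own, so the cells are filled in a shuffled order to show that the order does not matter. On a GPU, many such cells would be computed at the same time.

```python
import random


def cell(a, b, i, j):
    """Compute one cell of the product a x b from row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


a = [[1, 2],
     [3, 4]]
b = [[5, 6],
     [7, 8]]

# Every (row, column) position in the result is its own independent job.
jobs = [(i, j) for i in range(len(a)) for j in range(len(b[0]))]
random.shuffle(jobs)  # do the jobs in any order; the result is the same

result = [[0] * len(b[0]) for _ in range(len(a))]
for i, j in jobs:
    result[i][j] = cell(a, b, i, j)

print(result)
```

A real neural network layer does the same thing with matrices that have thousands of rows and columns, which is where thousands of GPU cores pay off.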

Many of the calculations inside Deep Learning (Neural Network) training are "Embarrassingly parallel". Yeah, you read that right, and it's a real term 👽. An embarrassingly parallel task is one where little or no effort is needed to separate the problem into several parallel tasks. This is what makes it perfect for GPUs. To get hands-on with embarrassingly parallel tasks you can check this.

But how do we use the GPU for training AI models since it is purpose-built for graphics?

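We use platforms like Compute Unified Device Architecture (CUDA) from NVIDIA, a parallel computing platform and programming model that lets us run general-purpose code on GPUs and spread the work across the thousands of cores a GPU has. Deep Learning frameworks are built on top of it, so most of the time we do not write CUDA code ourselves.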
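In practice this often looks like a one-line device choice in a Deep Learning framework. The sketch below assumes PyTorch, which this post does not otherwise cover, and treats it as an optional install: if PyTorch or an NVIDIA GPU is missing, it simply falls back to the CPU. The `pick_device` helper is a made-up name for illustration.

```python
try:
    import torch  # optional third-party library (an assumption, not required by this post)
except ImportError:
    torch = None


def pick_device():
    """Return "cuda" when PyTorch can see an NVIDIA GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


device = pick_device()
print("Running on:", device)

if torch is not None:
    # The same matrix multiplication runs on whichever device was picked.
    a = torch.rand(512, 512, device=device)
    b = torch.rand(512, 512, device=device)
    c = a @ b
    print(c.shape)
```

The code that does the math stays the same; only the device it runs on changes.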

GPUs consume a lot of energy, but they can train a model in a few hours that might take days on a CPU.

GPUs are also used in other areas like bitcoin mining.

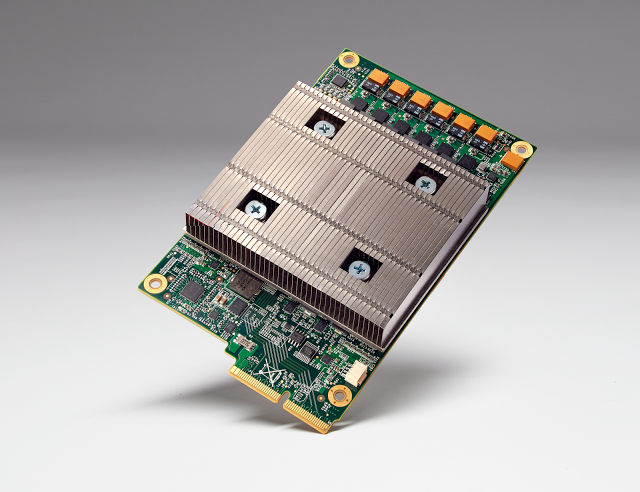

Since we are here, there is actually other custom hardware used to train AI models, which is still based on the philosophy of the GPU. One I would like to mention is the TPU.

The Tensor Processing Unit (TPU) is an application-specific integrated circuit (a sort of processor) used to train deep learning models. It was created by Google and is primarily used with TensorFlow. For the specific workloads it was built for, it can edge out traditional GPUs.

I am sure we'll get to see more custom hardware built for training deep learning models in the future, but it will most probably still be based on the philosophy of the GPU. Recently there was a report that Sam Altman, the co-founder and CEO of OpenAI, was trying to raise up to $7 trillion to build its own custom chips, more here.

The end.

Well, this was not bad for a mid-series chapter, right? 😊

We'll be back to the AI series next week, see ya! 👽

Written by

Retzam Tarle

I am a software engineer.