GLSL Shaders on MS-DOS

Coder of the Cellar

I have always found the demoscene fascinating. Since hardware became flexible and powerful enough to render almost anything, people who had an artistic brain ablation like me (look at my cover image) turned to size coding. How much can you do in 64 kB? In the early 2000s, you were floored to see 10 minutes of varying 3D scenes and materials, with music. As technology continued to improve and people continued to get smarter, 10 years later you could fit a lot of awesomeness into a measly 4 kB.

That's always refreshing to see, as nowadays you can't do much without consuming hundreds of megabytes of RAM and storage, and even an "empty" C++ program is already over 100 kB. To make such tiny executables, you learn that you need to ditch the C(++) runtime (no std-anything), talk to the OS native API directly, compress your code, choose your constants carefully so they compress better (minimize the significant bits), and most importantly, be a master of procedural content. You very likely have to learn to do everything differently from what you are used to. For that reason I never tried to make one myself, and I only drifted farther and farther away from this world (thinking about what it takes to draw anything using a GPU and modern APIs...).

I haven't paid much attention to it since, but I guess that the demoscene has continued to improve on what you can do in 4 kB, and maybe there are some cool demos in 1 kB. And then I saw this:

In 256 bytes! How is that possible!?

After a moment of shock, you then see it's for DOS and you recognize some usual suspects:

The low resolution is likely the classic 320x200 256 color mode 13h (most MS-DOS games in the 90s used it or one of its variants).

These .COM files are primitive executables with no overhead and no header: they are pure machine code, and execution starts at the first byte.

At this size, you are likely running in "real mode", the only x86 CPU mode the original 8086 knew about. There are a lot of things to explain, but the most relevant one is that it's the mode in which DOS and the BIOS run. An important role of the BIOS at that time was to provide a library of callable low level functions that standardized how you interact with the hardware, so code could work the same way on different PCs. It was kind of a low level runtime that you could use as long as you were in real mode. For example, you can ask for a standard graphics mode (19, or 0x13/13h), which has a resolution of 320x200 with 8 bit palettized colors, and get the palette initialized with default colors (which include a grayscale gradient...), all in one BIOS function call (2 instructions!). The BIOS handles everything for you. Then, your framebuffer is memory-mapped in a 64 kB memory window, with one byte per pixel, which is really convenient.

All this means you really don't need much to draw on screen. Switch to mode 13h, set up your pointer to the framebuffer, that's it! Total overhead: four instructions!
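To make the "one byte per pixel in a 64 kB window" part concrete, here is a minimal sketch of the address arithmetic. It runs on any modern machine: the `Framebuffer` array is only a hosted stand-in for the real video memory at segment 0xA000, and the names (`pixel_offset`, `linear_address`, `plot`) are mine, not taken from any demo.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

constexpr int kWidth = 320;
constexpr int kHeight = 200;
constexpr std::uint16_t kVgaSegment = 0xA000;  // mode 13h framebuffer segment

// Real-mode address translation: linear = segment * 16 + offset.
constexpr std::uint32_t linear_address(std::uint16_t segment, std::uint16_t offset) {
    return (static_cast<std::uint32_t>(segment) << 4) + offset;
}

// One byte per pixel, rows stored top to bottom, so a pixel is just an index.
constexpr std::uint16_t pixel_offset(int x, int y) {
    return static_cast<std::uint16_t>(y * kWidth + x);
}

static_assert(kWidth * kHeight == 64000, "the whole screen fits in one 64 kB segment");
static_assert(linear_address(kVgaSegment, 0) == 0xA0000, "A000:0000 is linear 0xA0000");

// Hosted stand-in for video memory (assumption: we are NOT in real mode here).
using Framebuffer = std::array<std::uint8_t, kWidth * kHeight>;

inline void plot(Framebuffer& fb, int x, int y, std::uint8_t color) {
    assert(x >= 0 && x < kWidth && y >= 0 && y < kHeight);
    fb[pixel_offset(x, y)] = color;
}
```

Since 320 x 200 = 64000 bytes, every pixel offset fits in a single 16 bit register, which is a big part of why this mode is so cheap to use.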

For the demo itself, it looks like fractal raymarching, which is quite common on Shadertoy. You can quickly achieve complex shapes with fractals and it doesn't necessarily require a lot of code. There are even some people trying to write cool shaders in as few characters as possible (code golfing), which should correlate pretty well with the number of instructions.

A quick glance at the Remnants disassembly showed a heavy use of x87 instructions (FPU, floating point), so that must be it: a port of a compact and clever Shadertoy to x86 (and x87) assembly. Then, plotting pixels is easy, as we saw.

Suddenly it seems that I could do one myself, ~240 bytes seems plenty after all for a bunch of instructions in some loops. It shouldn't be that hard to do one myself right? RIGHT? So let's go...

Step 1: Learn x86 assembly

I already have some experience with 16 bit x86 assembly, but I have never written assembly code for the legacy FPU (you would use scalar SSE/AVX instructions nowadays). And oh boy what a mess this is!

It's kind of a stack based architecture, because at the time it was created, the transistor budget for a CPU was so tight that you were forced to heavily compromise your design. You were already lucky to have a bunch of "random access" 16 bit registers, and hardware multiplication (on 16 bit integers). 64 bit (and even 80 bit) hardware floating point numbers are much more expensive to implement than 16 bit integers, so even though it was originally a dedicated chip (the math co-processor), heavy compromises had to be made as well.

So we have a register stack, but there are a lot of exceptions to make things less inefficient. For example you can swap the top of the stack with any other register, or do some operations between the two (and store the result in either one). It's quite flexible, but you can't treat it like a set of random access registers, so it requires a lot of experience to extract the most out of this architecture. So, you know what, scrap this, let's make a compiler do this for us instead.
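For a feel of what is being dodged here, this is a toy model of the stack discipline, written in C++ rather than assembly. It is only a sketch: `ToyFpu` and `mul_add` are made-up names, it uses `double` instead of the 80 bit internal format, and it ignores the tag bits and wrap-around of the real thing. The method names mirror real x87 mnemonics (`fld`, `fxch`, `fmulp`, `faddp`, `fstp`).

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <utility>

// Toy model: st(0) is the top of the stack, st(i) is i slots below it.
struct ToyFpu {
    std::array<double, 8> regs{};  // the x87 has 8 stack registers
    std::size_t depth = 0;         // number of live registers

    double& st(std::size_t i) {
        assert(i < depth);
        return regs[depth - 1 - i];
    }
    void fld(double v) { assert(depth < regs.size()); regs[depth++] = v; }  // push
    void fxch(std::size_t i) { std::swap(st(0), st(i)); }   // swap st(0) and st(i)
    void fmulp(std::size_t i) { st(i) *= st(0); --depth; }  // multiply into st(i), pop
    void faddp(std::size_t i) { st(i) += st(0); --depth; }  // add into st(i), pop
    double fstp() { double v = st(0); --depth; return v; }  // store and pop
};

// a*b + c*d, spelled out the way you would sequence it on the register stack.
inline double mul_add(double a, double b, double c, double d) {
    ToyFpu fpu;
    fpu.fld(a);
    fpu.fld(b);
    fpu.fmulp(1);  // stack: a*b
    fpu.fld(c);
    fpu.fld(d);
    fpu.fmulp(1);  // stack: a*b, c*d
    fpu.faddp(1);  // stack: a*b + c*d
    return fpu.fstp();
}
```

Even for this trivial expression you have to keep the stack layout in your head at every step, which is the bookkeeping a compiler does for free.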

Step 1 again: Learn ~~x86 assembly~~ how to compile into an MS-DOS .COM executable

I wasn't really thrilled at the idea of digging out an old compiler that could target MS-DOS. But in theory, if you could just generate machine code for an old CPU, it would mostly be valid real mode code. GCC for example can generate code for the 386, the first 32 bit x86 CPU, and coincidentally, GCC doesn't know anything about real mode.

This complicates things quite a bit (I only spent like ten hours on this...) but a collection of switches takes care of many problems. And for things like accessing another memory segment (far pointers), which you need to reach the framebuffer, you can wrap the access in inline assembly.

Initially, as I only needed raw machine code, I just compiled into an object file, with no linking. I then used the objcopy bundled with MinGW to rip out the .text section and put it into a .com file. And for really simple programs it works! That's a start!

What doesn't work is that you don't control in which order your functions will be laid out, and DOS just runs from the first byte. I haven't found a way to force GCC to put the entry point on top, so everything except the entry point must be declared inline.

Also, as soon as GCC puts some data in another section that the program uses (.rdata mainly), you also need to copy it, and patch the code so it uses the correct data addresses. Basically that's part of the link stage. And making MinGW link with flat hybrid 16 bit binary code generated some more headaches and a bunch of additional flags to pass.

But surprisingly it works! Now I could wrap the program prologue/epilogue and pixel plotting with assembly, and write all the rest in C, the main body was like this:

    void __attribute__((naked)) startup() {
        begin(); // 16 bit prologue, graphics mode, custom palette...
        for (short y = 0; y < 200; y++) {
            for (short x = 0; x < 320; x++) {
                unsigned char color = ...;
                plot(x, y, color);
            }
        }
        end(); // 16 bit epilogue
    }

It doesn't generate optimal assembly (mostly because some parts of GCC don't know they have to emit 16 bit code, so it spams as many size override prefixes as it can...), but now we can port shaders to C instead of assembly, which is a much simpler task!

And we can do even better. C++ is so flexible that there must be a way to make vectors and matrices work the same way as in GLSL, and even with the same syntax. Also, remember, all functions must be inline; fortunately, that is always possible in GLSL. out parameters are just reference parameters, and that's pretty much it! Think about it, not having to port GLSL at all, take all interesting Shadertoys, compile them directly into tiny .COM executables, win all the next demoparties, become famous, all the girls undress in front of you, ...
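As a rough idea of what "the same syntax as GLSL" means in C++, here is a minimal sketch with a hand-rolled `vec2`, a few operators, and an `out` parameter written as a reference. This is not the code from my build and not GLM: `vec2`, `dot` and `centered` are illustrative names only.

```cpp
#include <cassert>

// Bare-bones stand-in for GLSL's vec2.
struct vec2 {
    float x, y;
    vec2(float x_, float y_) : x(x_), y(y_) {}
    explicit vec2(float s) : x(s), y(s) {}  // GLSL-style vec2(1.0)
};

// Component-wise operators, all inline so no call survives in the output.
inline vec2 operator+(vec2 a, vec2 b) { return vec2(a.x + b.x, a.y + b.y); }
inline vec2 operator-(vec2 a, vec2 b) { return vec2(a.x - b.x, a.y - b.y); }
inline vec2 operator*(vec2 a, float s) { return vec2(a.x * s, a.y * s); }
inline vec2 operator/(vec2 a, vec2 b) { return vec2(a.x / b.x, a.y / b.y); }
inline float dot(vec2 a, vec2 b) { return a.x * b.x + a.y * b.y; }

// GLSL: void centered(in vec2 fragCoord, in vec2 resolution, out vec2 uv)
// C++:  the out parameter simply becomes a reference.
inline void centered(vec2 fragCoord, vec2 resolution, vec2& uv) {
    uv = (fragCoord - resolution * 0.5f) / vec2(resolution.y);
}
```

The body of `centered` is character for character what you would type in a shader, apart from the `f` suffix on the literal.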

Well. First...

Step 2: Make GCC understand GLSL

Ah. Vector swizzling. It turns out I also spent a lot of time trying to handle all the cases, and boy, it quickly became hard and cryptic. It's bad when you write more code in a high level language than you would have in assembly... So instead I turned to GLM (which didn't show up on Google when searching for vector swizzling in C++). Handily, you can ask GLM to inline all of its functions, and ask for swizzling members (the possibility of writing .zyy instead of .zyy()). The latter requires either the Microsoft compiler, or SSE support with Clang/GCC, for no apparent good reason. Again, throwing some more flags at the problem (-fms-extensions -D_MSC_EXTENSIONS) can solve it, but in the end I got the same errors as when doing arithmetic with swizzled vectors (the temporary type isn't implicitly cast back to vector types). So I concluded that doing it The Right Way was not possible.

Reverting to swizzle functions made more cases work, and I just brute forced the problem with macros that automatically add the parentheses each time you write zyy (and some other combinations I encountered, I haven't generated all of them yet). The last thing that doesn't work is assignment to a swizzled vector (v.xy = ...): it silently does nothing (!). So you need to keep this in mind!
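The macro trick can be sketched as follows. This is a reduced illustration with hand-written `vec2`/`vec3` types rather than GLM, and only two swizzles; `swizzle_read` and `swizzle_write` are hypothetical helper names. It also shows why assigning to a swizzle compiles but does nothing: the swizzle function returns a temporary copy, and the assignment lands on that copy.

```cpp
#include <cassert>

struct vec2 { float x, y; };

struct vec3 {
    float x, y, z;
    // Swizzles as member functions returning copies.
    vec3 zyy() const { return vec3{z, y, y}; }
    vec2 xy() const { return vec2{x, y}; }
};

// From here on, "v.zyy" expands to "v.zyy()". The preprocessor does not
// re-expand a macro inside its own expansion, so this is not recursive.
#define zyy zyy()
#define xy xy()

inline vec3 swizzle_read(vec3 v) {
    return v.zyy;  // GLSL-looking syntax, really a function call
}

inline vec3 swizzle_write(vec3 v) {
    v.xy = vec2{9.0f, 9.0f};  // assigns to a temporary: compiles, changes nothing
    return v;
}
```

The obvious downside is that `zyy` and `xy` become reserved words for the whole translation unit, so no variable or function can use those names.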

So I ended up in a middle ground where I wrap some GLSL/C++ incompatibilities inside macros (the out parameters, explicit inline functions). You can handily define them in the common tab in Shadertoy.
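Such macros could look like the sketch below. The names `OUT` and `FUNC` are invented for this example and are not necessarily the ones I use. The idea relies on `__cplusplus` being defined by every C++ compiler and not by GLSL, so the same function source can expand differently on each side (the `#include` line is of course C++ only).

```cpp
#include <cassert>

#ifdef __cplusplus
  // C++ side: out parameters are references, functions are forced inline.
  #define OUT(T) T&
  #define FUNC inline
#else
  // GLSL side (e.g. pasted in the common tab): plain GLSL qualifiers.
  #define OUT(T) out T
  #define FUNC
#endif

// Shared source: float(int(v)) is valid in both languages.
FUNC void split(float v, OUT(float) whole, OUT(float) part) {
    whole = float(int(v));
    part = v - whole;
}
```

The function signature looks a bit unusual for GLSL, but it stays readable and the body remains untouched.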

Last problem, math functions... GLM uses the standard math library, which I can't link to (MSVCRT doesn't work in DOS). But! For functions where an equivalent x87 instruction exists, and if you tell GCC not to care that much about the specification (-funsafe-math-optimizations), it will generate the instruction directly instead of calling the math function. So it somehow works for most operations, except floor, exp, pow, and their derivatives. floor can be overridden, unlike the others, but that's a problem for later. Link time optimization (-flto) saves me as it inlines it (I can't change the declaration to add inline), but with this you need to specify your entry point, or else nothing is considered used (apart from main(), which brings its own set of problems).
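For reference, a floor that needs no math library can be as small as this. It is a sketch under assumptions, not necessarily what ends up in my build: `floor_no_libm` is a made-up name, and it is only valid while the value fits in an `int`, which is plenty for shader coordinates.

```cpp
#include <cassert>

// floor() without libm: truncate toward zero, then step down by one
// when truncation rounded a negative non-integer value up.
inline float floor_no_libm(float x) {
    int i = static_cast<int>(x);             // truncates toward zero
    float truncated = static_cast<float>(i);
    return (x < truncated) ? truncated - 1.0f : truncated;
}
```

The only subtle case is negative numbers: truncating -1.5 gives -1, which is above the input, so the comparison catches it and returns -2.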

EDIT 13/05: I've found that you can put functions into dedicated sections and you can order them with the linker script (the com.ld file). So no need to explicitly inline functions anymore!

The complete command to compile with GCC now looks like this:

gcc glsldos.cpp -o demo.exe
    -std=gnu++17 -Wall -Wextra -nostdlib
    -Wa,--32 -march=i586 -m16 -Ofast -fno-ident -flto
    -fsingle-precision-constant -frounding-math
    -fno-align-functions -fno-align-labels -fno-align-loops -fno-align-jumps
    -Wl,--file-alignment 1 -Wl,--section-alignment 1
    -Wl,--script=com.ld -Wl,-e _startup -Wl,-m i386pe

MinGW can't generate flat binaries directly, that's why we need to make an .EXE first before copying the sections we need into the final .COM file:

objcopy -O binary demo.exe demo.com -j .text -j .rdata

I haven't talked about all the flags that came to solve many other problems (huge wasted space due to alignments, the "ident" signature, default .EXE format incompatible with 16 bit code, forcing the default floating point rounding mode, ...). One is worth mentioning though: -fsingle-precision-constant asks GCC to treat floating point literals as single precision by default instead of double precision (normally 1.0 is 64 bit and 1.0f is 32 bit), which saves a lot of pain as it mimics GLSL behavior.

In the end I can write shaders that work on both Shadertoy and C++, but it won't work in the general case. Also, Shadertoy resources (textures, ...), multiple buffers, and some input variables are not implemented (yet?).
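To see why the literal type matters, here is what standard C++ does when no such flag is used. The sketch only demonstrates the default rules (it is meant to be compiled normally, without the flag); `scale_promoted` and `scale_single` are illustrative names.

```cpp
#include <cassert>
#include <type_traits>

// Default C++ rules: an unsuffixed literal is a double.
static_assert(std::is_same<decltype(1.0), double>::value, "1.0 is a double");
static_assert(std::is_same<decltype(1.0f), float>::value, "1.0f is a float");

// Mixing a float with a double literal promotes the whole expression.
static_assert(std::is_same<decltype(2.0f * 0.5), double>::value,
              "float * double literal is computed in double");
static_assert(std::is_same<decltype(2.0f * 0.5f), float>::value,
              "float * float literal stays in float");

// GLSL-style code: v is converted to double, multiplied, then narrowed back.
inline float scale_promoted(float v) { return v * 0.5; }

// What you would otherwise have to write everywhere in C++.
inline float scale_single(float v) { return v * 0.5f; }
```

Both functions return the same value here, but the first one drags 64 bit constants and conversions into the generated code, which is exactly what you don't want when counting bytes.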

Now, we have to put it under a real test. How does it compare to a handcrafted assembly demo like Remnants? To know this, we would need its Shadertoy version, which doesn't exist to my knowledge. So...

Step 3: Reverse engineer the Remnants demo

I've already sunk way more free time than I expected into this project, and I'm way past counting at this point. When I started, the only things I had were the executable disassembly and a comment from HellMood that indeed guessed which shaders were the inspiration: the ones that introduced the fractal used in the Remnants demo. I specifically started from this shader, and slowly de-obfuscated the code to better grasp how it works, and how to reproduce the result of Remnants.

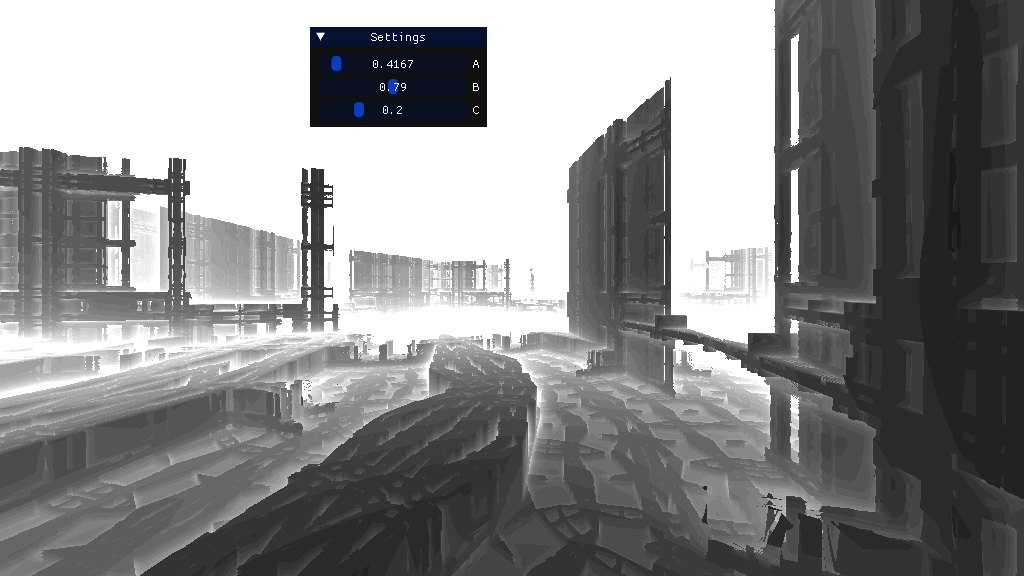

I also ported it into the 3D framework I presented in the last article, to use a real camera, and sliders to live edit some constants (here it is). It helped a lot to find the first camera shot:

Then, the author posted the commented assembly source which helped to fine tune things and finish replicating the whole demo. So here is my Shadertoy replica!

Results

So, I could make my own remnants.com MS-DOS demo.

What size is it?

It has successfully been tested on Dosbox and real hardware.

And what size is it?

It turns out that asking the compiler to optimize for speed instead of size produces code that is much faster, by about an order of magnitude, for a ~15% increase in size. So we shouldn't put that much importance on how many kilobytes it is...

WHAT'S THE FRIGGIN' SIZE GODDAMMIT!

840 bytes when optimized for size, and 968 bytes when optimized for speed.

EDIT 13/05: 1016 bytes for the speedy one, now that I added the dithering and blur post effect of the original demo... just shy of the 1 kB mark.

Well, I may not get famous by winning all the next demo parties after all. It turns out that you can't just compile GLSL code and be competitive in the small demos categories.

And it's far from being GLSL compliant either...

Yeah... And assembly is still the best way to write small demos, who could have guessed it?

On that bombshell, if you are somehow interested in my "GLSL to DOS build process", I've put my files in a GitHub repository.

I will probably continue to work on it and add support for more features. Mouse, colors, and what not.

And remember, it's not the size that matters.
