What the f*ck is Hill Climbing Algorithm? - A Complete Tutorial

Dhruvvv

Dhruvvv

In the intricate world of artificial intelligence (AI), the Hill Climbing Algorithm emerges as a fundamental method for problem-solving. Inspired by the metaphorical ascent up a hill, this technique is crucial for navigating the complex terrain of optimization problems in AI. It’s a strategic approach to finding the most effective solution among many possibilities, making it a cornerstone in various AI applications.

In Hill Climbing, the algorithm starts with an initial solution and then iteratively makes small changes to it in order to improve the solution. These changes are based on a heuristic function that evaluates the quality of the solution. The algorithm continues to make these small changes until it reaches a local maximum, meaning that no further improvement can be made with the current set of moves.

Here, we will discuss two variations of hill climbling: Simple Hill Climbing and Steepest Ascent Hill Climbing. Let us look at them one by one. But first, let us discuss the properties of Hill Climbing Algorithm.

01 . Properties of Hill Climbing 🚵♀️

Key features of the Hill Climbing Algorithm include:

Generate and Test Approach: This feature involves generating neighboring solutions and evaluating their effectiveness, always aiming for an upward move in the solution space.

Greedy Local Search: The algorithm uses a cheap strategy, opting for immediate beneficial moves that promise local improvements.

No Backtracking: Unlike other algorithms, Hill Climbing Algorithm in AI does not revisit or reconsider previous decisions, persistently moving forward in the quest for the optimal solution.

02 . Simple Hill Climbing Algorithm 🏔️

Simple hill climbing is the simplest way to implement a hill climbing algorithm. It only evaluates the neighbor node state at a time and selects the first one which optimizes current cost and set it as a current state. It only checks it's one successor state, and if it finds better than the current state, then move else be in the same state. This algorithm has the following features:

Less time consuming

Less optimal solution and the solution is not guaranteed

The simple hill climbing algorithm is straightforward -

Step 1: Start with an initial state.

Step 2: Check if the initial state is the goal. If so, return success and exit.

Step 3: Enter a loop to search for a better state continuously.

Select a neighboring state within the loop by applying an operator to the current state.

Evaluate this new state:

If it’s the goal state, return success and exit.

If it’s better than the current state, update the current state to this new state.

If it’s not better, discard it and continue the loop.

Step 4: End the process if no better state is found and the goal isn’t achieved.

03 . Steepest Ascent Hill Climbing Algorithm ⛰️

The steepest-Ascent algorithm is a variation of simple hill climbing algorithm. This algorithm examines all the neighboring nodes of the current state and selects one neighbor node which is closest to the goal state. This algorithm consumes more time as it searches for multiple neighbors.

The algorithm for Steepest Ascent Hill Climbing is:

Step 1: Evaluate the initial state, if it is goal state then return success and stop, else make current state as initial state.

Step 2: Loop until a solution is found or the current state does not change.

Let SUCC be a state such that any successor of the current state will be better than it.

For each operator that applies to the current state:

Apply the new operator and generate a new state.

Evaluate the new state.

If it is goal state, then return it and quit, else compare it to the SUCC.

If it is better than SUCC, then set new state as SUCC.

If the SUCC is better than the current state, then set current state to SUCC.

Step 5: Exit.

04 . Regions in Hill Climbing 🗺️

The following are the important regions in hill climbing:

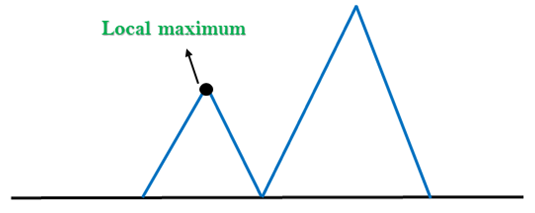

Local Maximum: As the diagram makes clear, this is the state that is marginally superior to its neighboring states but never higher than the highest state.

Global maximum: Its cost function value is at its highest, and it is the highest state in the state space.

Current State: This is the condition in which an active agent is present.

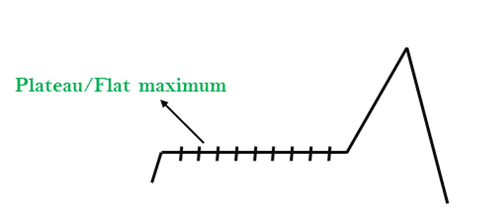

Flat local maximums are what happens when all the neighboring states have the same value and can be visualized as flat spaces (as shown in the diagram).

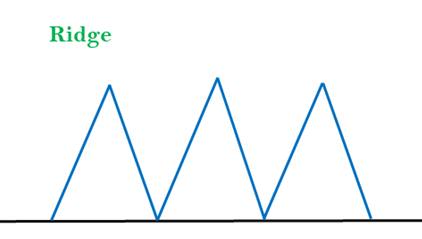

Shoulder region: A region with an upward edge, it is also one of the issues with algorithms for climbing hills.

05 . Travelling Salesman Problem 🚋

The Travelling Salesman Problem (TSP) is a classic optimization problem where the goal is to find the shortest possible route that visits a given set of cities exactly once and returns to the original city. The hill climbing algorithm is one of the simplest optimization algorithms that can be used to solve the TSP. Here's how it works:

Initialization: Start with a random solution, which represents a random ordering of the cities.

Evaluation: Calculate the total distance of the route represented by the current solution.

Neighborhood Generation: Generate neighboring solutions by making small modifications to the current solution. For example, you can swap the order of two cities in the route.

Selection of Next Solution: Select the neighboring solution that improves (reduces) the total distance the most.

Termination: Repeat steps 3 and 4 until no further improvements can be made or a certain number of iterations is reached.

Return the Best Solution Found: Once the algorithm terminates, return the best solution found, which represents the shortest route.

Let's illustrate this with a simple example:

Suppose we have 4 cities: A, B, C, and D, and their pairwise distances are as follows:

Distance between A and B: 10

Distance between A and C: 15

Distance between A and D: 20

Distance between B and C: 35

Distance between B and D: 25

Distance between C and D: 30

Let's start with an initial random solution: A -> B -> C -> D -> A. The total distance for this route is 10 + 35 + 30 + 20 = 95.

Now, we generate neighboring solutions by swapping the order of two cities. Let's say we swap B and C, yielding the route A -> C -> B -> D -> A. The total distance for this route is 15 + 25 + 35 + 20 = 95, which is the same as the previous solution.

Since there is no improvement, we try another neighboring solution. Let's swap C and D, yielding the route A -> B -> D -> C -> A. The total distance for this route is 10 + 25 + 30 + 15 = 80, which is an improvement over the previous solutions.

We continue generating and evaluating neighboring solutions until we reach a local optimum or a termination criterion is met.

06 . Limitations of Hill Climbing 😔

Hill Climbing has some limitations (which can easily be solved by simple solutions)-

1. Local Maximum: A local maximum is a peak state in the landscape which is better than each of its neighboring states, but there is another state also present which is higher than the local maximum.

Solution: Backtracking technique can be a solution of the local maximum in state space landscape. Create a list of the promising path so that the algorithm can backtrack the search space and explore other paths as well.

2. Plateau: A plateau is the flat area of the search space in which all the neighbor states of the current state contains the same value, because of this algorithm does not find any best direction to move. A hill-climbing search might be lost in the plateau area.

Solution: The solution for the plateau is to take big steps or very little steps while searching, to solve the problem. Randomly select a state which is far away from the current state so it is possible that the algorithm could find non-plateau region.

3. Ridges: A ridge is a special form of the local maximum. It has an area which is higher than its surrounding areas, but itself has a slope, and cannot be reached in a single move.

Solution: With the use of bidirectional search, or by moving in different directions, we can improve this problem.

07 . Simulated Annealing 🪙

To make the algorithm more efficient we can simulate annealing in hill climbing. A hill-climbing algorithm which never makes a move towards a lower value guaranteed to be incomplete because it can get stuck on a local maximum. And if algorithm applies a random walk, by moving a successor, then it may complete but not efficient. Simulated Annealing is an algorithm which yields both efficiency and completeness.

In mechanical term Annealing is a process of hardening a metal or glass to a high temperature then cooling gradually, so this allows the metal to reach a low-energy crystalline state. The same process is used in simulated annealing in which the algorithm picks a random move, instead of picking the best move. If the random move improves the state, then it follows the same path. Otherwise, the algorithm follows the path which has a probability of less than 1 or it moves downhill and chooses another path.

This was it about hill climbing. See ya!

Subscribe to my newsletter

Read articles from Dhruvvv directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Dhruvvv

Dhruvvv

A Flutter Developer, Unity Developer and Product Manager.