Cilium: Network Policies

Karim El Jamali


Introduction to Network Policies

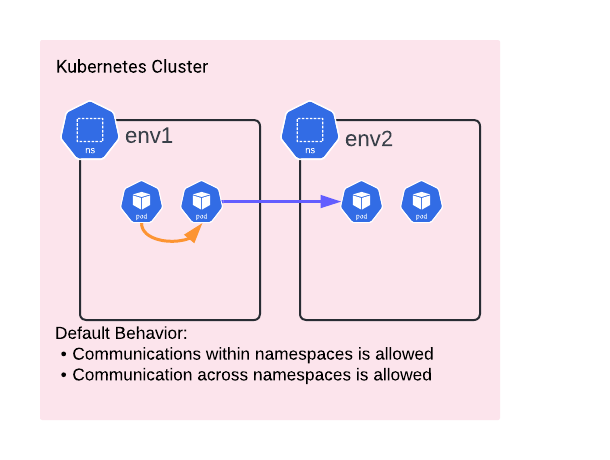

In this blog episode, we're diving into the world of Kubernetes Network Policies. But before we get there, let's talk about the main headache these policies aim to fix. Without Network Policies, Kubernetes lets Pods communicate freely with each other, whether they are on the same node or on different nodes, and whether they are in the same namespace or in different namespaces. Here's the catch: while unrestricted Pod-to-Pod communication might seem convenient, it's like leaving your front door wide open in a not-so-great neighbourhood. Sure, it's all fun and games until someone you don't want barges in. And in Kubernetes land, that means inviting all sorts of security risks.


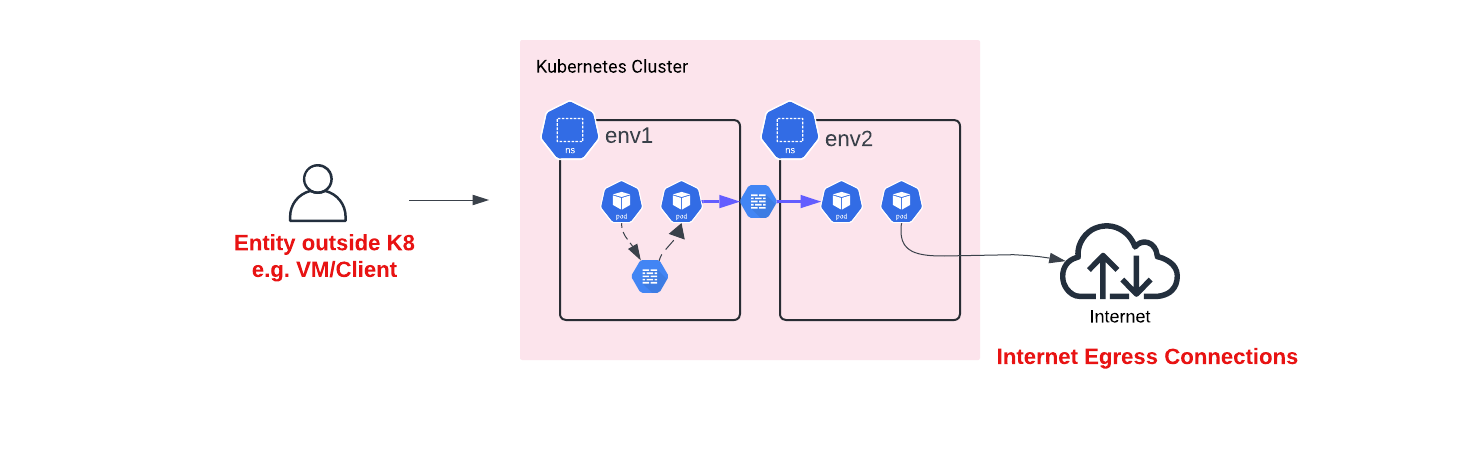

In a nutshell, Kubernetes Network Policies can isolate namespaces from one another, so that, for example, workloads belonging to "env1" cannot communicate with workloads in "env2". Another very common use of Network Policies is enforcing Zero Trust around workloads: ensuring least-privilege access, so that a compromise of one workload can't spread laterally to affect other workloads. Note that policies can govern East-West traffic within Kubernetes, but they can also govern communication with workloads outside Kubernetes (identified by IP addresses).

It's essential to understand that while these policies provide isolation at the data plane level (similar to a firewall), they do not imply any form of Virtual Routing and Forwarding (VRF) or segmentation from a networking (routing) perspective.


More on Network Policies Concepts


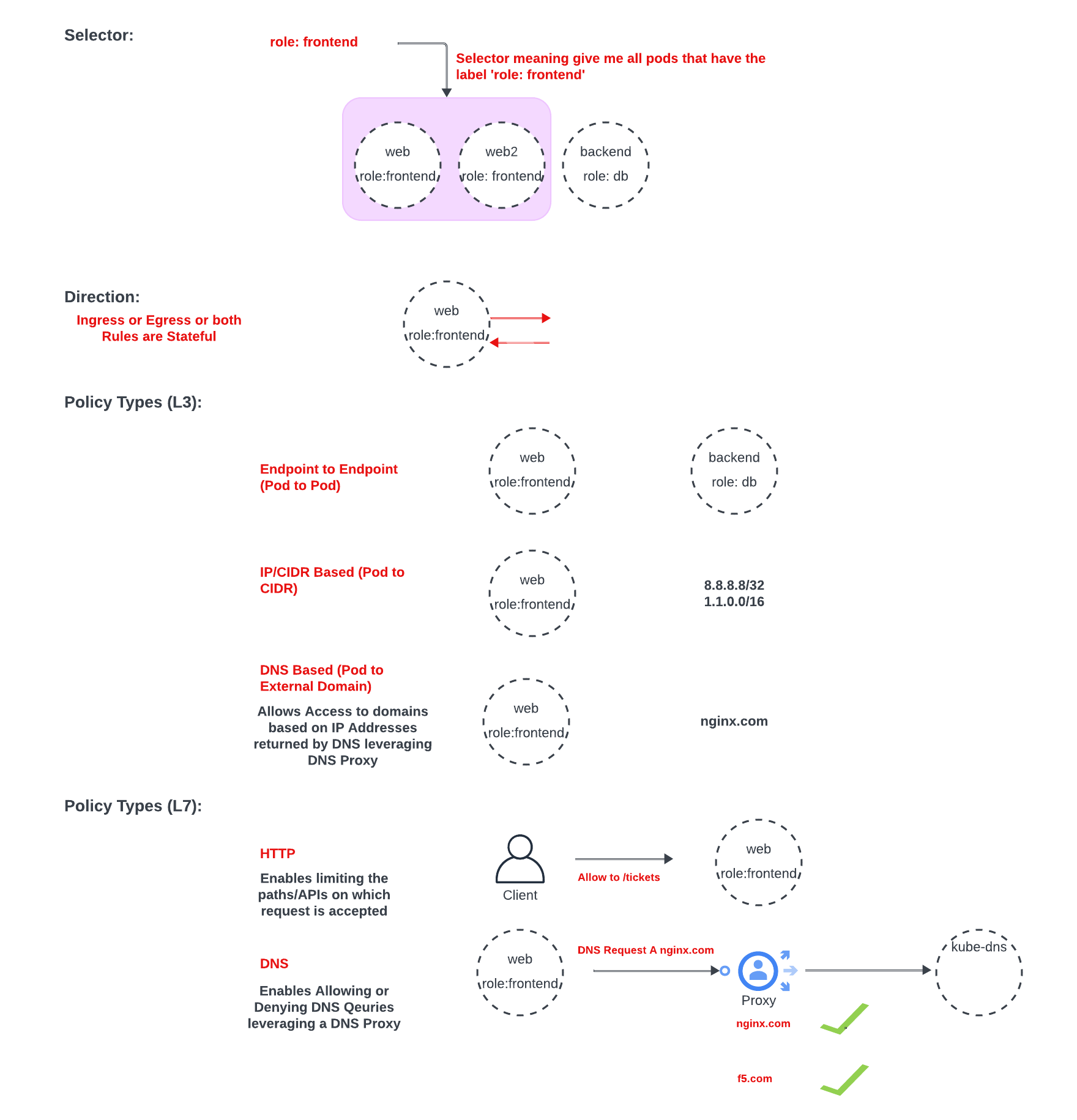

The structure of a CiliumNetworkPolicy can be found below, and its basic sections are:

- Selector: defines which endpoint(s) the policy applies to.

- Direction of the policy: Ingress and/or Egress.

- Labels: heavily used in Kubernetes and Network Policies because they allow:

  - Decoupling from IP addresses, which matters because Pods are ephemeral.

  - Applying a policy to a number of Pods at the same time. The example below, for instance, shows that all 3 Pods share the same label (app=reviews).

  - Automatically capturing any newly instantiated Pods that carry the matching labels.

  Examples in the diagram above are role:frontend and role:db.

For Cilium L3 policies, most use cases are neatly outlined in Figure 3. I'd like to highlight L3 DNS-based policies, which simplify allowing egress access to specific domains from particular Pods or groups of Pods.

Let's break it down: Instead of manually resolving the IP addresses for, say, nginx.com, and then configuring access accordingly, L3 DNS-based policies take a smarter approach. When a Pod sends a DNS request for a domain like nginx.com, Cilium steps in. It relays the request to a DNS proxy, giving us visibility over the DNS request and response.

Here's where the magic happens: the IP addresses returned in the DNS response become the addresses the policy allows the Pod to communicate with. This means we can manage and restrict access to the desired domains without the hassle of manual IP management. It's like having your own traffic cop directing Pod communications in real time, all thanks to Cilium's intelligent handling of DNS.

Among Cilium L7 policies, the DNS policy is a bit different: it uses the DNS proxy to accept or reject the DNS requests themselves.

The HTTP policy, another L7 policy, allows more granular control of incoming traffic, for example by allowing traffic from a group of Pods only when it targets a particular URI path.
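To make the HTTP rule idea concrete, here is a minimal Python sketch of how an L7 allow rule combines a source selector with method and path patterns. The HttpRule class, the http_allowed function and the /reviews/ path are hypothetical illustrations, not Cilium's data model, and treating the patterns as full-match regular expressions is an assumption of this sketch.

```python
import re
from dataclasses import dataclass


@dataclass
class HttpRule:
    """Hypothetical, simplified L7 HTTP allow rule (not Cilium's data model)."""
    from_labels: dict   # source Pods the rule applies to (matchLabels-style)
    method: str         # regular expression for the HTTP method
    path: str           # regular expression for the URI path


def http_allowed(rules, src_labels, method, path):
    """Allow a request only if some rule matches its source, method and path."""
    for rule in rules:
        source_ok = all(src_labels.get(k) == v for k, v in rule.from_labels.items())
        # Assumption: patterns must match the whole method/path (full match).
        if source_ok and re.fullmatch(rule.method, method) and re.fullmatch(rule.path, path):
            return True
    return False  # no rule matched: the request is rejected at L7


# Example: only productpage may send GET requests under /reviews/ (path assumed).
rules = [HttpRule(from_labels={"app": "productpage"}, method="GET", path="/reviews/.*")]
print(http_allowed(rules, {"app": "productpage"}, "GET", "/reviews/0"))     # True
print(http_allowed(rules, {"app": "productpage"}, "DELETE", "/reviews/0"))  # False
print(http_allowed(rules, {"app": "ratings"}, "GET", "/reviews/0"))         # False
```

The point of the design is that L3/L4 identity checks still decide who may connect, while the L7 rule narrows what an allowed client may actually request.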

```
k.eljamali@LLJXN1XJ9X Network Policies % kubectl get pods -l app=reviews
NAME                          READY   STATUS    RESTARTS   AGE
reviews-v1-5b5d6494f4-dwvpn   1/1     Running   0          4d14h
reviews-v2-5b667bcbf8-kjwhn   1/1     Running   0          4d14h
reviews-v3-5b9bd44f4-qjfth    1/1     Running   0          4d14h
```
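The label matching behind these selectors can be sketched in a few lines of Python. This is a simplified model of matchLabels (every listed key/value pair must be present on the Pod), not Cilium's implementation; the version labels for reviews v2 and v3 are assumed.

```python
# Illustrative model of matchLabels semantics (not Cilium's code): a selector
# matches when every key/value pair it lists is present in the Pod's labels.
def matches(selector, labels):
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical label sets modeled on the BookInfo Pods listed above.
pods = {
    "reviews-v1-5b5d6494f4-dwvpn": {"app": "reviews", "version": "v1"},
    "reviews-v2-5b667bcbf8-kjwhn": {"app": "reviews", "version": "v2"},
    "reviews-v3-5b9bd44f4-qjfth": {"app": "reviews", "version": "v3"},
    "productpage-v1-675fc69cf-8zzxh": {"app": "productpage", "version": "v1"},
}


def selected(selector, pods):
    """Names of the Pods a selector captures, sorted for stable output."""
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


print(selected({"app": "reviews"}, pods))  # the three reviews Pods

# A Pod created later with the same label is captured with no policy change.
pods["reviews-v4-example"] = {"app": "reviews", "version": "v4"}
print(selected({"app": "reviews"}, pods))
```

Note that an empty selector matches every Pod in this model, since there is no pair left to check.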

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "productpage-deny-all"
spec:
  endpointSelector:
    matchLabels:
      app: productpage
  egress:
  - {}
```

Figure 5. Sample Cilium Network Policy

Cilium Policy Enforcement Mode: Default

Cilium offers different modes for Policy Enforcement: Default, Always, and Never. Let's focus on describing the behavior under the Default mode:

- No Network Policies Attached: For endpoints without any Network Policies attached, Egress and Ingress traffic are allowed without restriction.

- Network Policy Applied to Endpoint in Egress Direction: When a Network Policy is applied to an endpoint in the egress direction, only the egress traffic explicitly allowed by the policy is permitted. All other egress traffic is denied.

- An empty egress policy therefore allows nothing, so all egress traffic is denied.

- Ingress traffic remains unaffected by egress policies.
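The rules above can be captured in a small Python model. It is a toy, not Cilium's implementation: policies are plain dicts, the "peers" key is a hypothetical stand-in for real selectors such as toEndpoints, and the productpage-deny-all policy is modeled as an egress section containing a single empty rule.

```python
# Toy model of the Default enforcement mode (not Cilium code).
# A policy is {"selector": {...}, "egress": [rules], "ingress": [rules]};
# each rule lists the peers it allows, and an empty rule {} allows nothing.
def is_allowed(policies, endpoint_labels, direction, peer):
    sections = [
        policy[direction]
        for policy in policies
        if direction in policy
        and all(endpoint_labels.get(k) == v for k, v in policy["selector"].items())
    ]
    if not sections:
        # No policy selects this endpoint for this direction: allow everything.
        return True
    # Under enforcement: allow only peers listed by a rule in any selecting policy.
    return any(peer in rule.get("peers", ()) for section in sections for rule in section)


productpage = {"app": "productpage"}
deny_all_egress = {"selector": {"app": "productpage"}, "egress": [{}]}

print(is_allowed([], productpage, "egress", "reviews"))                          # True
print(is_allowed([deny_all_egress], productpage, "egress", "reviews"))           # False
print(is_allowed([deny_all_egress], productpage, "ingress", "internal-client"))  # True
```

Because rules from every selecting policy are pooled, adding policies can only widen what is allowed; this is how the later sections layer DNS and backend access on top of the deny-all policy.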

Stateful Network Policies

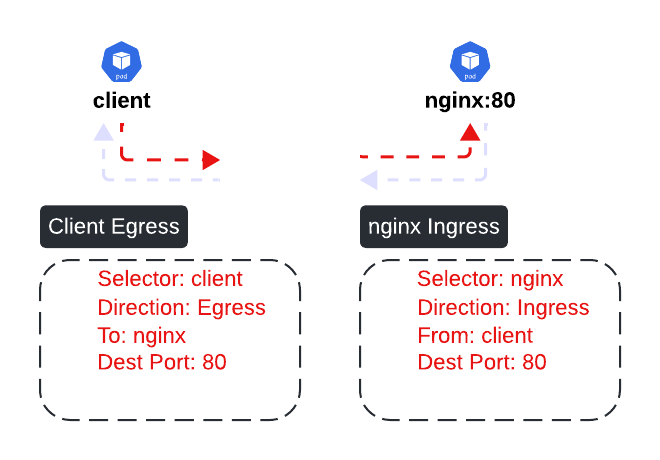

It's crucial to understand that Network Policies are stateful. For instance, to permit communication from a client Pod to an nginx Pod, we only need two policies:

- An egress policy on the client Pod allowing communication to the nginx Pod.

- An ingress policy on the nginx Pod allowing communication from the client Pod.

Explicit policies for return traffic are unnecessary. The return path of a flow, such as the response from the nginx Pod to the client Pod, is part of the same connection that the client opened, and that connection was already permitted. Therefore, no ingress policy is required on the client for this return traffic.
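The stateful behavior can be illustrated with a toy connection-tracking filter in Python. This is a simplified sketch, not Cilium's eBPF connection tracking: the first packet of a flow is checked against the policy, and later packets of that flow, including replies, match the tracked entry. The addresses are hypothetical.

```python
# Toy stateful filter (illustration only): the policy is checked on the first
# packet of a flow; the flow is then remembered so that later packets of the
# same flow, and replies to it, are forwarded without a policy lookup.
class StatefulFilter:
    def __init__(self, allow):
        self.allow = allow        # callable(flow) -> bool: the policy decision
        self.conntrack = set()    # flows that have already been permitted

    def packet(self, src_ip, src_port, dst_ip, dst_port, proto="TCP"):
        flow = (src_ip, src_port, dst_ip, dst_port, proto)
        reply_to = (dst_ip, dst_port, src_ip, src_port, proto)
        if flow in self.conntrack or reply_to in self.conntrack:
            return "FORWARDED"    # existing flow, or the return path of one
        if self.allow(flow):
            self.conntrack.add(flow)
            return "FORWARDED"
        return "DROPPED"


# Hypothetical addresses: client 10.0.0.1, nginx 10.0.0.2:80.
fw = StatefulFilter(allow=lambda f: f[2] == "10.0.0.2" and f[3] == 80)
print(fw.packet("10.0.0.1", 40000, "10.0.0.2", 80))  # FORWARDED: policy allows the request
print(fw.packet("10.0.0.2", 80, "10.0.0.1", 40000))  # FORWARDED: reply of a tracked flow
print(fw.packet("10.0.0.2", 80, "10.0.0.1", 40001))  # DROPPED: unsolicited
```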


Scenario

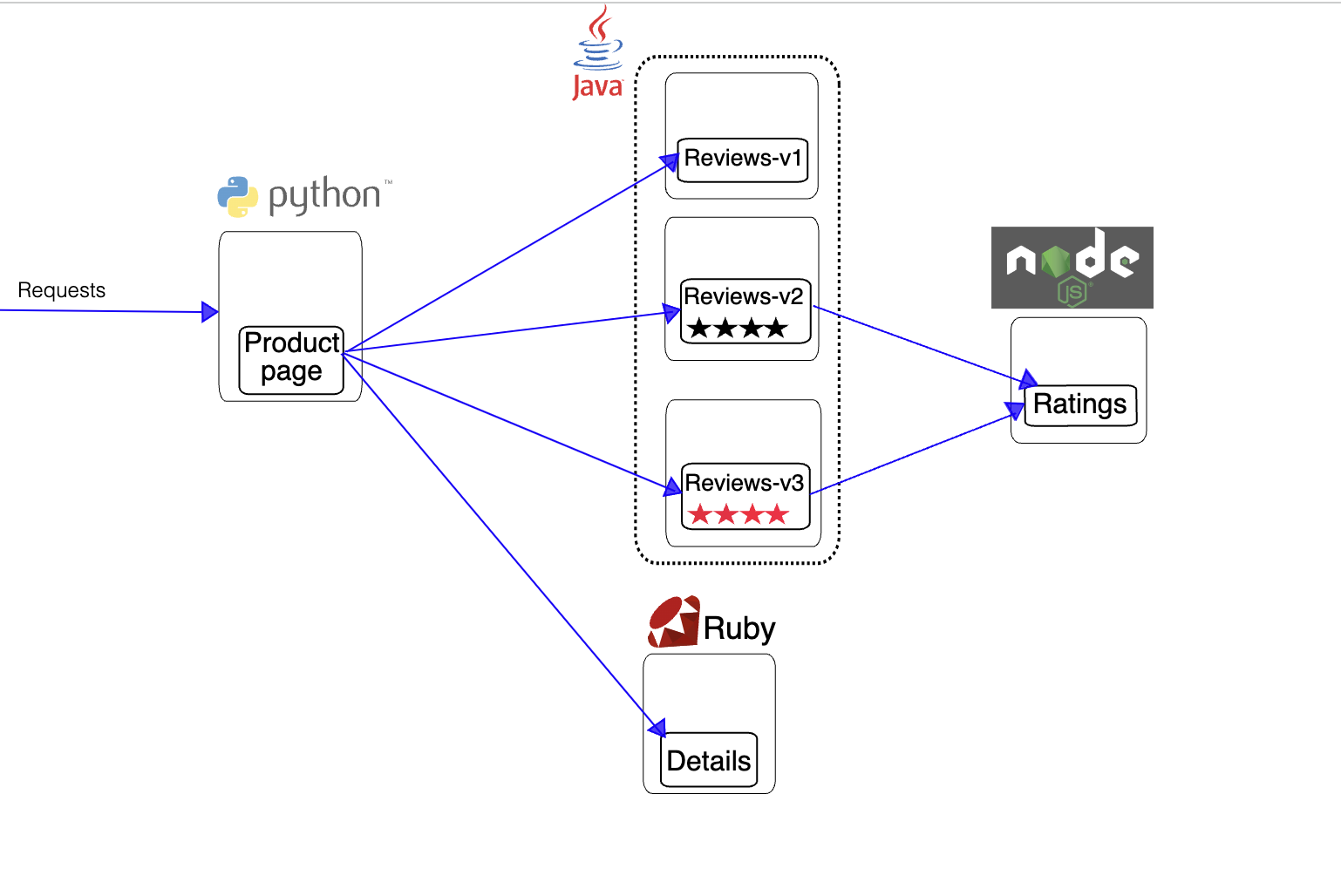

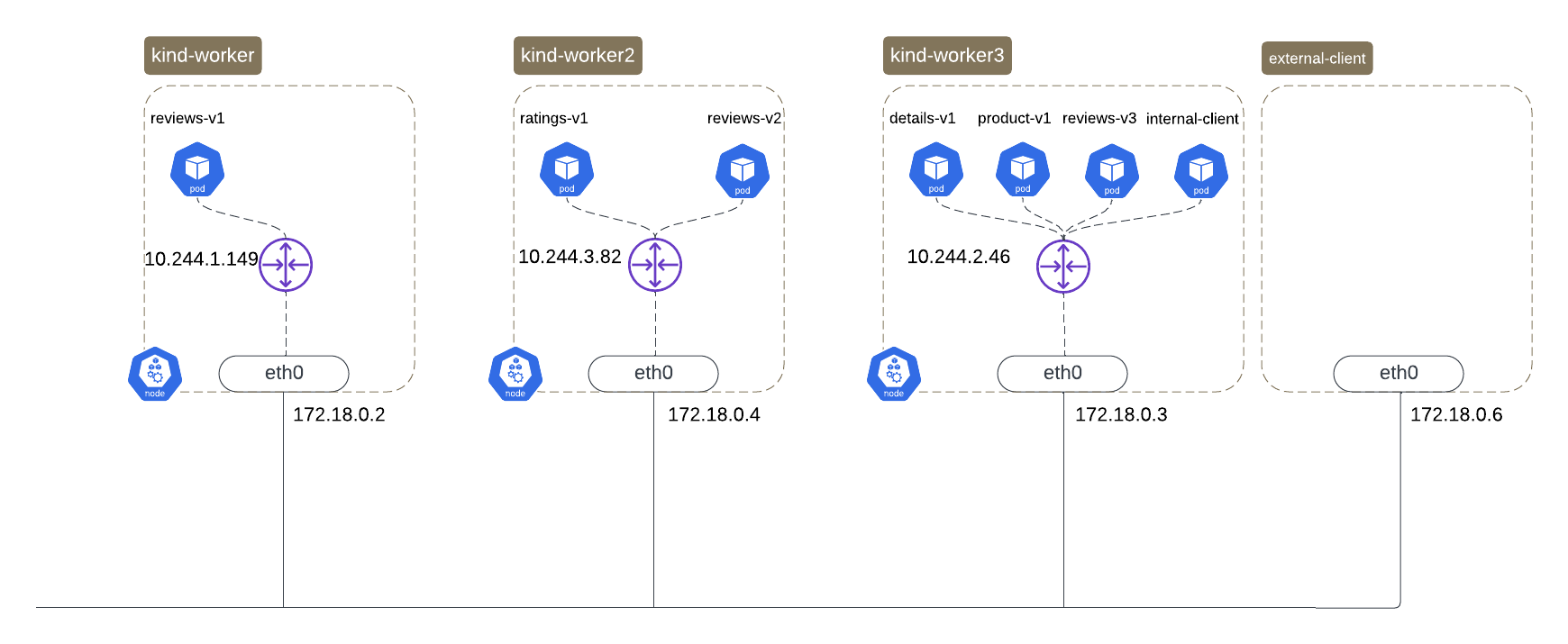

To keep things straightforward, I’ve opted for the BookInfo application, a commonly featured example in Istio’s getting started guide. This application comprises four microservices: Product Page, Reviews, Details, and Ratings. While Reviews has multiple versions, we’ll keep our focus on v1.

For a visual overview, check out the diagram below, sourced from Istio’s documentation. It vividly illustrates the key components and their interactions. More details can be found here.


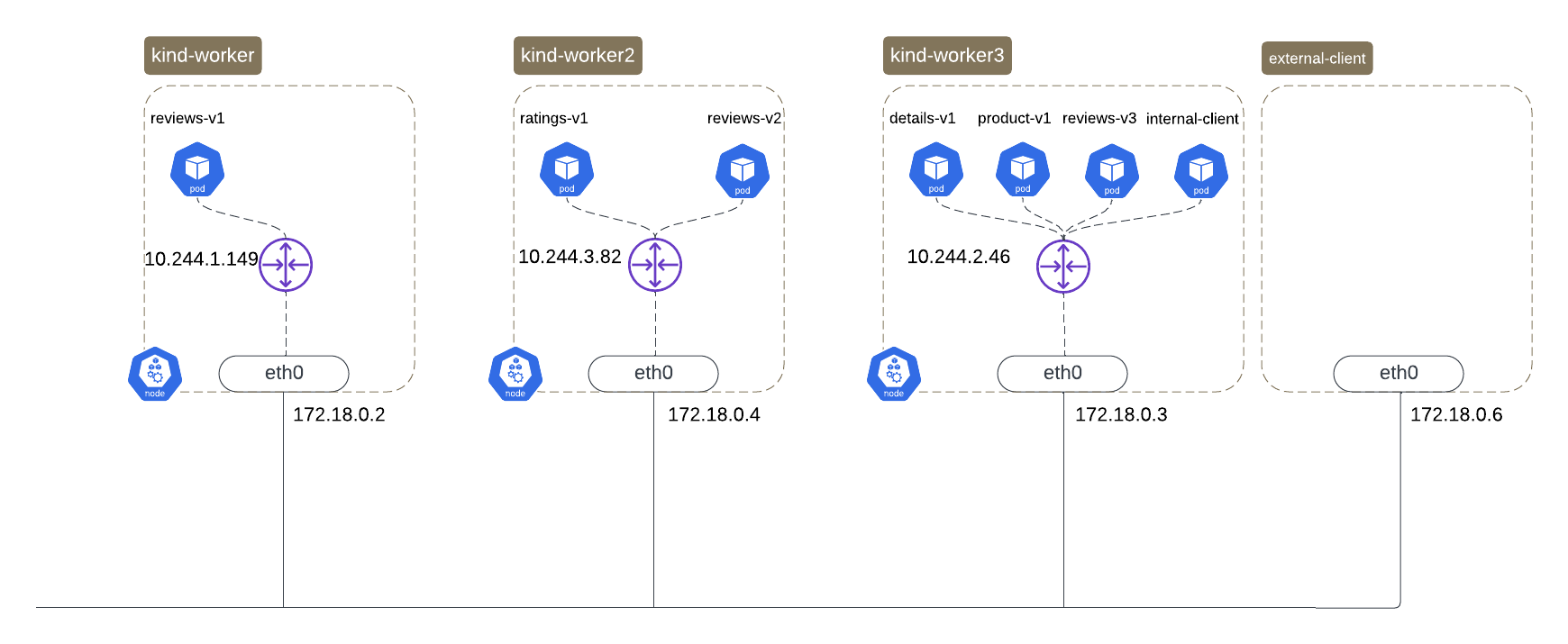

It is worth noting that this entire setup runs locally on my laptop as a kind cluster. More details can be found here.


Application Discovery

Hubble is a fully distributed networking and security observability platform. It is built on top of Cilium and eBPF to enable deep visibility into the communication and behavior of services as well as the networking infrastructure in a completely transparent manner. (Source: Cilium Documentation)

We will look at identities in the next section, but for now you can see the following:

Figure 9 shows the traffic from the internal-client Pod to the productpage Pod.

Figure 10 shows all the backends that productpage actually communicates with: CoreDNS and all three versions of reviews (v1, v2, v3).

Crafting effective Network Policies depends on comprehensive visibility into the communication patterns among Pods and other entities. This visibility shows how these components actually interact within the Kubernetes cluster, so that the policies you create accurately reflect and enforce your security requirements.

k.eljamali@LLJXN1XJ9X Network Policies % hubble observe --to-identity 9889 --last 5 --from-identity 23217

Apr 27 22:05:46.867: default/internal-client:47838 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Apr 27 22:09:16.221: default/internal-client:42856 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: SYN)

Apr 27 22:09:16.221: default/internal-client:42856 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 27 22:09:16.221: default/internal-client:42856 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Apr 27 22:09:21.344: default/internal-client:42856 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

k.eljamali@LLJXN1XJ9X Network Policies % hubble observe --from-identity 9889 --last 5

Apr 27 22:05:46.813: default/productpage-v1-675fc69cf-8zzxh:35336 (ID:9889) -> default/reviews-v2-5b667bcbf8-kjwhn:9080 (ID:10139) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Apr 27 22:05:46.814: default/productpage-v1-675fc69cf-8zzxh:35336 (ID:9889) -> default/reviews-v2-5b667bcbf8-kjwhn:9080 (ID:10139) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 27 22:05:46.822: default/productpage-v1-675fc69cf-8zzxh:43297 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) to-endpoint FORWARDED (UDP)

Apr 27 22:05:46.859: default/productpage-v1-675fc69cf-8zzxh:43712 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) to-endpoint FORWARDED (UDP)

Apr 27 22:05:46.863: default/productpage-v1-675fc69cf-8zzxh:35352 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 27 22:05:46.863: default/productpage-v1-675fc69cf-8zzxh:35352 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Apr 27 22:05:46.866: default/productpage-v1-675fc69cf-8zzxh:35352 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Apr 27 22:05:46.867: default/productpage-v1-675fc69cf-8zzxh:35352 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 27 22:09:16.230: default/productpage-v1-675fc69cf-8zzxh:50168 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) to-endpoint FORWARDED (UDP)

Apr 27 22:09:21.260: default/productpage-v1-675fc69cf-8zzxh:42803 (ID:9889) -> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) to-endpoint FORWARDED (UDP)

Apr 27 22:09:21.276: default/productpage-v1-675fc69cf-8zzxh:33718 (ID:9889) -> default/reviews-v3-5b9bd44f4-qjfth:9080 (ID:1786) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Apr 27 22:09:21.336: default/productpage-v1-675fc69cf-8zzxh:33718 (ID:9889) -> default/reviews-v3-5b9bd44f4-qjfth:9080 (ID:1786) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Apr 27 22:09:21.336: default/productpage-v1-675fc69cf-8zzxh:33718 (ID:9889) -> default/reviews-v3-5b9bd44f4-qjfth:9080 (ID:1786) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 27 22:09:21.342: default/internal-client:42856 (ID:23217) <- default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Apr 27 22:09:21.343: default/internal-client:42856 (ID:23217) <- default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, FIN)
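Since visibility drives policy design, it can help to turn observed flows into a dependency list. The Python sketch below parses the compact hubble observe lines shown in Figures 9 and 10 and groups forwarded request flows by workload. The regular expression and the hash-suffix heuristic are assumptions tailored to the output in this post, not a stable Hubble format; for tooling, Hubble's JSON output is likely the sturdier choice.

```python
import re
from collections import defaultdict

# Hypothetical helper for the compact `hubble observe` text format shown above.
FLOW_RE = re.compile(
    r"^\w{3} +\d+ [\d:.]+: "                                # timestamp
    r"(?P<src>\S+):(?P<sport>\d+) \(ID:(?P<sid>\d+)\) "     # source pod, port, identity
    r"(?P<arrow>->|<-|<>) "                                 # direction marker
    r"(?P<dst>\S+):(?P<dport>\d+) \(ID:(?P<did>\d+)\) "     # destination pod, port, identity
    r".*\b(?P<verdict>FORWARDED|DROPPED)\b"                 # verdict
)
# Heuristic: drop the ReplicaSet/Pod hash suffix, e.g. "-675fc69cf-8zzxh".
POD_SUFFIX_RE = re.compile(r"-[a-z0-9]{5,10}-[a-z0-9]{5}$")


def workload(pod):
    return POD_SUFFIX_RE.sub("", pod)


def dependencies(lines):
    """Map each source workload to the 'workload:port' destinations it reached."""
    deps = defaultdict(set)
    for line in lines:
        m = FLOW_RE.match(line)
        # Keep only forwarded request-direction flows; "<-" lines are replies.
        if m and m["arrow"] == "->" and m["verdict"] == "FORWARDED":
            deps[workload(m["src"])].add(f'{workload(m["dst"])}:{m["dport"]}')
    return dict(deps)


sample = [  # four lines copied from Figure 10
    "Apr 27 22:05:46.822: default/productpage-v1-675fc69cf-8zzxh:43297 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) to-endpoint FORWARDED (UDP)",
    "Apr 27 22:05:46.863: default/productpage-v1-675fc69cf-8zzxh:35352 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK)",
    "Apr 27 22:09:21.276: default/productpage-v1-675fc69cf-8zzxh:33718 (ID:9889) -> default/reviews-v3-5b9bd44f4-qjfth:9080 (ID:1786) to-endpoint FORWARDED (TCP Flags: ACK, PSH)",
    "Apr 27 22:09:21.342: default/internal-client:42856 (ID:23217) <- default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, PSH)",
]
for source, targets in dependencies(sample).items():
    print(source, sorted(targets))
# default/productpage-v1 ['default/reviews-v1:9080', 'default/reviews-v3:9080', 'kube-system/coredns:53']
```

A list like this is a reasonable starting point for an egress allowlist: every entry is a flow the application demonstrably needs.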

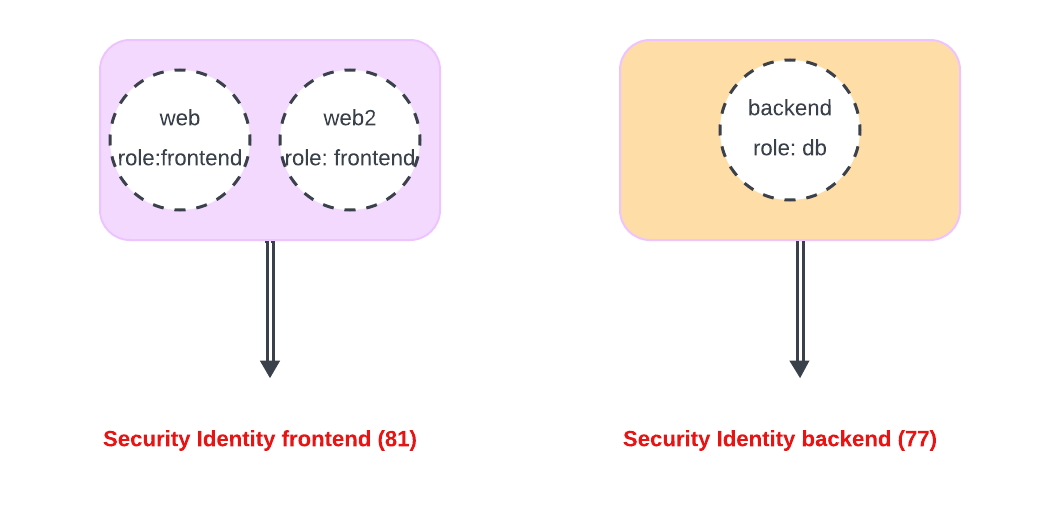

Security Identity

As discussed, Kubernetes and Network Policies depend heavily on labels. For every unique combination of labels that targets a set of Pods, Cilium creates a unique security identity; Pods sharing the same label set share the same identity.

For instance, when traffic needs to go from frontend to backend:

- On the host running the web (frontend) Pod, Cilium determines the security identity that maps to the source, which is 81 in our example.

- Cilium encodes this security identity (81) into the packet it sends to the backend.

- Upon receiving the packet, the backend's host knows that the source identity is 81 and the backend identity is 77, and checks whether a policy allows identity 81 to reach identity 77.

Thus, you can think of Network Policies as controlling access based on source and destination security identities.
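A toy Python model of the two ideas in this section: identities allocated per unique label set, and policy checks expressed as (source identity, destination identity) pairs. The numbers it allocates are arbitrary (the example above uses 81 and 77), the role:frontend and role:db labels echo the diagram earlier in the post, and real Cilium policies also take ports and other criteria into account.

```python
import itertools


class IdentityAllocator:
    """Toy allocator: one numeric identity per unique set of labels."""

    def __init__(self, first_id=1000):
        self._counter = itertools.count(first_id)
        self._by_labels = {}

    def identity_for(self, labels):
        key = frozenset(labels.items())  # label order must not matter
        if key not in self._by_labels:
            self._by_labels[key] = next(self._counter)
        return self._by_labels[key]


def permitted(allowed_pairs, src_identity, dst_identity):
    """Identity-based check: is (source identity, destination identity) allowed?"""
    return (src_identity, dst_identity) in allowed_pairs


alloc = IdentityAllocator()
web_1 = alloc.identity_for({"role": "frontend"})
web_2 = alloc.identity_for({"role": "frontend"})  # replica: same labels, same identity
db = alloc.identity_for({"role": "db"})
allowed = {(web_1, db)}                            # "frontend may reach db"
print(web_1 == web_2, permitted(allowed, web_2, db), permitted(allowed, db, web_1))
# True True False
```

Keying policy on identities rather than IP addresses is what lets a new replica be covered immediately: it receives an existing identity, so no policy entry changes.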


The output below lists all the existing Pods and their security identities.

```
NAME                             SECURITY IDENTITY   ENDPOINT STATE   IPV4           IPV6
details-v1-698d88b-89gnc         285                 ready            10.244.2.140
internal-client                  23217               ready            10.244.2.172
productpage-v1-675fc69cf-8zzxh   9889                ready            10.244.2.148
ratings-v1-6484c4d9bb-8nsbj      5778                ready            10.244.3.241
reviews-v1-5b5d6494f4-dwvpn      55669               ready            10.244.1.170
reviews-v2-5b667bcbf8-kjwhn      10139               ready            10.244.3.87
reviews-v3-5b9bd44f4-qjfth       1786                ready            10.244.2.191
```

Egress Policy: Deny-All

The following policy, which contains an empty egress rule, is attached to the productpage Pod. It blocks all outgoing traffic from productpage to any other Pod or entity.

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "productpage-deny-all"
spec:
  endpointSelector:
    matchLabels:
      app: productpage
  egress:
  - {}
```

Refer back to Figure 12, where the Pods and their respective identities are listed. Note that Hubble can also filter by Pod name directly with the --from-pod and --to-pod flags.

Figure 14 shows that traffic continues to flow from the client to productpage. This is expected, as the policy on productpage is egress-only.

k.eljamali@LLJXN1XJ9X Network Policies % hubble observe --to-identity 9889 --last 5 --from-identity 23217

Apr 27 22:17:28.254: default/internal-client:51160 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 27 22:17:28.255: default/internal-client:51160 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Apr 27 22:17:28.280: default/internal-client:46532 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: SYN)

Apr 27 22:17:28.281: default/internal-client:46532 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 27 22:17:28.281: default/internal-client:46532 (ID:23217) -> default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Figure 15 shows that traffic from productpage to CoreDNS is blocked. This matters because before productpage can communicate with any of its backend services (reviews, details, etc.), it first sends a DNS request to resolve the service name (reviews, for example) to an IP address. For more details, refer to the Kubernetes Services blog post here.

hubble observe --from-identity 9889 --since 2m

Apr 27 22:17:28.285: default/productpage-v1-675fc69cf-8zzxh:40789 (ID:9889) <> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) policy-verdict:none EGRESS DENIED (UDP)

Apr 27 22:17:28.285: default/productpage-v1-675fc69cf-8zzxh:40789 (ID:9889) <> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) Policy denied DROPPED (UDP)

Apr 27 22:17:28.285: default/productpage-v1-675fc69cf-8zzxh:40789 (ID:9889) <> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) policy-verdict:none EGRESS DENIED (UDP)

Apr 27 22:17:28.285: default/productpage-v1-675fc69cf-8zzxh:40789 (ID:9889) <> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) Policy denied DROPPED (UDP)

Apr 27 22:17:33.290: default/productpage-v1-675fc69cf-8zzxh:40789 (ID:9889) <> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) policy-verdict:none EGRESS DENIED (UDP)

Apr 27 22:17:33.290: default/productpage-v1-675fc69cf-8zzxh:40789 (ID:9889) <> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) Policy denied DROPPED (UDP)

The output seen from internal-client is in line with these findings: we still get a response from productpage, but it clearly states that reviews are unavailable as a result of the egress policy.

```html
</div>
<div class="col-md-6">
<h4 class="text-center text-primary">Error fetching product reviews!</h4>
<p>Sorry, product reviews are currently unavailable for this book.</p>
```

Allow Traffic to DNS

The previous section concluded with traffic to the CoreDNS Pod being blocked. The following policy allows productpage to reach CoreDNS on port 53.

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "allow-to-dns"
spec:
  endpointSelector:
    matchLabels:
      app: productpage
  egress:
  - toEndpoints:
    - matchLabels:
        "k8s:io.kubernetes.pod.namespace": kube-system
        "k8s:k8s-app": kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: ANY
```

k.eljamali@LLJXN1XJ9X Network Policies % hubble observe --from-identity 9889 --last 1 min

Apr 28 00:36:17.388: default/productpage-v1-675fc69cf-8zzxh:49263 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) to-endpoint FORWARDED (UDP)

Apr 28 00:36:17.407: default/productpage-v1-675fc69cf-8zzxh:60156 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 28 00:36:17.407: default/internal-client:40690 (ID:23217) <- default/productpage-v1-675fc69cf-8zzxh:9080 (ID:9889) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Adding DNS rules to this policy, as below, makes it an L7 policy: DNS requests now go through a DNS proxy, which can allow requests only to particular domains (like an allowlist), as shown in Figure 3. The other benefit of DNS rules is visibility: with a DNS proxy in the picture, we can see DNS queries and responses in detail.

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "allow-to-dns"
spec:
  endpointSelector:
    matchLabels:
      app: productpage
  egress:
  - toEndpoints:
    - matchLabels:
        "k8s:io.kubernetes.pod.namespace": kube-system
        "k8s:k8s-app": kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: ANY
      rules:
        dns:
        - matchPattern: "*"
```

k.eljamali@LLJXN1XJ9X Network Policies % hubble observe --protocol dns

Apr 28 00:49:07.340: default/productpage-v1-675fc69cf-8zzxh:50114 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query details.default.svc.cluster.local. AAAA)

Apr 28 00:49:07.340: default/productpage-v1-675fc69cf-8zzxh:50114 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query details.default.svc.cluster.local. A)

Apr 28 00:49:07.342: default/productpage-v1-675fc69cf-8zzxh:50114 (ID:9889) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer "10.96.222.2" TTL: 30 (Proxy details.default.svc.cluster.local. A))

Apr 28 00:49:07.342: default/productpage-v1-675fc69cf-8zzxh:50114 (ID:9889) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer TTL: 4294967295 (Proxy details.default.svc.cluster.local. AAAA))

Apr 28 00:49:07.345: default/productpage-v1-675fc69cf-8zzxh:35429 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query reviews.default.svc.cluster.local. AAAA)

Apr 28 00:49:07.345: default/productpage-v1-675fc69cf-8zzxh:35429 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query reviews.default.svc.cluster.local. A)

Apr 28 00:49:07.346: default/productpage-v1-675fc69cf-8zzxh:35429 (ID:9889) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer "10.96.179.42" TTL: 10 (Proxy reviews.default.svc.cluster.local. A))

Apr 28 00:49:07.347: default/productpage-v1-675fc69cf-8zzxh:35429 (ID:9889) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer TTL: 4294967295 (Proxy reviews.default.svc.cluster.local. AAAA))
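The policy above uses matchPattern "*", so every query is still allowed; the gain is visibility through the DNS proxy. Narrowing the pattern turns the proxy into an allowlist. The Python sketch below models wildcard matching as we understand it from the Cilium documentation ("*" on its own matches every name; elsewhere "*" matches within a single DNS label and never crosses a dot). The function names and the *.cilium.io pattern are illustrative, and edge cases of the real matcher may differ.

```python
import re

# Characters a wildcard may expand to: DNS-valid characters, but no "."
DNS_CHARS = "[-a-zA-Z0-9_]"


def pattern_regex(pattern):
    pattern = pattern.lower().rstrip(".")
    if pattern == "*":
        return re.compile(r".*")  # a lone "*" allows every name
    pieces = [re.escape(piece) for piece in pattern.split("*")]
    return re.compile(f"{DNS_CHARS}*".join(pieces))


def dns_request_allowed(patterns, qname):
    name = qname.lower().rstrip(".")  # queries appear fully qualified ("...local.")
    return any(pattern_regex(p).fullmatch(name) for p in patterns)


print(dns_request_allowed(["*"], "reviews.default.svc.cluster.local."))  # True
print(dns_request_allowed(["*.cilium.io"], "docs.cilium.io."))           # True
print(dns_request_allowed(["*.cilium.io"], "cilium.io."))                # False
```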

Allow Traffic to Backend Pods

Recall that at this stage DNS resolution works, but we still need to add the rules allowing productpage to reach reviews and details. Bear in mind that Pods not selected by any policy continue to allow all ingress and egress traffic (e.g., traffic between reviews and ratings).

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "from-product-to-reviews-details"
spec:
  endpointSelector:
    matchLabels:
      app: productpage
  egress:
  - toEndpoints:
    - matchLabels:
        app: reviews
  - toEndpoints:
    - matchLabels:
        app: details
```

k.eljamali@LLJXN1XJ9X Network Policies % hubble observe --from-identity 9889 --last 4 min

Apr 28 00:38:03.715: default/productpage-v1-675fc69cf-8zzxh:37378 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Apr 28 00:38:03.724: default/productpage-v1-675fc69cf-8zzxh:37378 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Apr 28 00:38:03.725: default/productpage-v1-675fc69cf-8zzxh:37378 (ID:9889) -> default/reviews-v1-5b5d6494f4-dwvpn:9080 (ID:55669) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 28 00:38:03.740: default/productpage-v1-675fc69cf-8zzxh:58725 (ID:9889) -> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) to-endpoint FORWARDED (UDP)

Apr 28 00:38:08.810: default/productpage-v1-675fc69cf-8zzxh:37394 (ID:9889) -> default/reviews-v2-5b667bcbf8-kjwhn:9080 (ID:10139) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Apr 28 00:38:08.810: default/productpage-v1-675fc69cf-8zzxh:37394 (ID:9889) -> default/reviews-v2-5b667bcbf8-kjwhn:9080 (ID:10139) to-endpoint FORWARDED (TCP Flags: ACK)

Apr 28 00:38:08.867: default/productpage-v1-675fc69cf-8zzxh:34917 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) to-endpoint FORWARDED (UDP)

Apr 28 00:38:08.879: default/productpage-v1-675fc69cf-8zzxh:51600 (ID:9889) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) to-endpoint FORWARDED (UDP)

Apr 28 00:38:08.911: default/productpage-v1-675fc69cf-8zzxh:37402 (ID:9889) -> default/reviews-v3-5b9bd44f4-qjfth:9080 (ID:1786) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Apr 28 00:38:08.911: default/productpage-v1-675fc69cf-8zzxh:37402 (ID:9889) -> default/reviews-v3-5b9bd44f4-qjfth:9080 (ID:1786) to-endpoint FORWARDED (TCP Flags: ACK)

Internet Egress Policy

This is an Internet Egress use-case where the objective is to limit the domains that the internal-client pod can connect to on the Internet.

Notice that the same DNS rule is still present; in addition, this policy adds the toFQDNs construct. This works as follows:

- Traffic from internal-client to CoreDNS is allowed.

- When internal-client wants to connect to the public URL "ifconfig.me", it first needs to resolve its IP address.

- Since the DNS proxy already gives us visibility into DNS traffic, Cilium sees the DNS response containing the IP address of "ifconfig.me".

- toFQDNs effectively stands in for that IP address: it is as if we wrote a policy for the IP address returned by DNS resolution.

This approach is attractive because we don't need to deal with changing IP addresses; we can simply rely on DNS names.
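The steps above can be sketched as a small Python model: DNS answers for allowed names populate a table of permitted IP addresses that expire with the answer's TTL. The FqdnPolicy class is hypothetical, and real Cilium has additional behavior (for example a configurable minimum TTL and handling of connections that are still open) that this sketch ignores. The addresses are taken from the Hubble DNS output in this section.

```python
import time


class FqdnPolicy:
    """Toy toFQDNs model: IPs from DNS answers for allowed names become allowed."""

    def __init__(self, allowed_names, clock=time.monotonic):
        self.allowed_names = {n.lower().rstrip(".") for n in allowed_names}
        self.clock = clock
        self.expiry = {}  # IP address -> time at which the permission lapses

    def on_dns_response(self, qname, addresses, ttl):
        """Called for each DNS answer observed by the DNS proxy."""
        if qname.lower().rstrip(".") not in self.allowed_names:
            return  # answers for other names do not open anything
        until = self.clock() + ttl
        for ip in addresses:
            self.expiry[ip] = max(until, self.expiry.get(ip, until))

    def egress_allowed(self, ip):
        return self.clock() < self.expiry.get(ip, float("-inf"))


# Usage with a fake clock so that TTL expiry is easy to see.
now = [0.0]
policy = FqdnPolicy(["ifconfig.me"], clock=lambda: now[0])
policy.on_dns_response("ifconfig.me.", ["34.117.118.44"], ttl=30)
policy.on_dns_response("cnn.com.", ["151.101.3.5"], ttl=30)
print(policy.egress_allowed("34.117.118.44"), policy.egress_allowed("151.101.3.5"))  # True False
now[0] = 31.0
print(policy.egress_allowed("34.117.118.44"))  # False: the TTL has expired
```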

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "from-internal-client-to-fqdn"
spec:
  endpointSelector:
    matchLabels:
      app: internal-client
  egress:
  - toEndpoints:
    - matchLabels:
        "k8s:io.kubernetes.pod.namespace": kube-system
        "k8s:k8s-app": kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: ANY
      rules:
        dns:
        - matchPattern: "*"
  - toFQDNs:
    - matchName: "ifconfig.me"
```

k.eljamali@LLJXN1XJ9X Network Policies % hubble observe --pod internal-client --protocol dns

Apr 28 01:03:39.378: default/internal-client:60700 (ID:23217) -> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) dns-request proxy FORWARDED (DNS Query ifconfig.me. A)

Apr 28 01:03:39.378: default/internal-client:60700 (ID:23217) -> kube-system/coredns-76f75df574-rw29s:53 (ID:17513) dns-request proxy FORWARDED (DNS Query ifconfig.me. AAAA)

Apr 28 01:03:39.423: default/internal-client:60700 (ID:23217) <- kube-system/coredns-76f75df574-rw29s:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer "2600:1901:0:bbc3::" TTL: 30 (Proxy ifconfig.me. AAAA))

Apr 28 01:03:39.424: default/internal-client:60700 (ID:23217) <- kube-system/coredns-76f75df574-rw29s:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer "34.117.118.44" TTL: 30 (Proxy ifconfig.me. A))

Apr 28 01:04:16.604: default/internal-client:57590 (ID:23217) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query cnn.com.default.svc.cluster.local. AAAA)

Apr 28 01:04:16.604: default/internal-client:57590 (ID:23217) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query cnn.com.default.svc.cluster.local. A)

Apr 28 01:04:16.607: default/internal-client:57590 (ID:23217) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer RCode: Non-Existent Domain TTL: 4294967295 (Proxy cnn.com.default.svc.cluster.local. AAAA))

Apr 28 01:04:16.607: default/internal-client:57590 (ID:23217) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer RCode: Non-Existent Domain TTL: 4294967295 (Proxy cnn.com.default.svc.cluster.local. A))

Apr 28 01:04:16.610: default/internal-client:57590 (ID:23217) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query cnn.com.svc.cluster.local. A)

Apr 28 01:04:16.610: default/internal-client:57590 (ID:23217) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query cnn.com.svc.cluster.local. AAAA)

Apr 28 01:04:16.611: default/internal-client:57590 (ID:23217) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer RCode: Non-Existent Domain TTL: 4294967295 (Proxy cnn.com.svc.cluster.local. A))

Apr 28 01:04:16.611: default/internal-client:57590 (ID:23217) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer RCode: Non-Existent Domain TTL: 4294967295 (Proxy cnn.com.svc.cluster.local. AAAA))

Apr 28 01:04:16.611: default/internal-client:57590 (ID:23217) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query cnn.com.cluster.local. AAAA)

Apr 28 01:04:16.612: default/internal-client:57590 (ID:23217) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query cnn.com.cluster.local. A)

Apr 28 01:04:16.612: default/internal-client:57590 (ID:23217) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer RCode: Non-Existent Domain TTL: 4294967295 (Proxy cnn.com.cluster.local. AAAA))

Apr 28 01:04:16.612: default/internal-client:57590 (ID:23217) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer RCode: Non-Existent Domain TTL: 4294967295 (Proxy cnn.com.cluster.local. A))

Apr 28 01:04:16.612: default/internal-client:57590 (ID:23217) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query cnn.com. AAAA)

Apr 28 01:04:16.612: default/internal-client:57590 (ID:23217) -> kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-request proxy FORWARDED (DNS Query cnn.com. A)

Apr 28 01:04:16.652: default/internal-client:57590 (ID:23217) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer "151.101.3.5,151.101.131.5,151.101.67.5,151.101.195.5" TTL: 30 (Proxy cnn.com. A))

Apr 28 01:04:16.656: default/internal-client:57590 (ID:23217) <- kube-system/coredns-76f75df574-sgbcv:53 (ID:17513) dns-response proxy FORWARDED (DNS Answer "2a04:4e42:c00::773,2a04:4e42:a00::773,2a04:4e42::773,2a04:4e42:800::773,2a04:4e42:600::773,2a04:4e42:e00::773,2a04:4e42:200::773,2a04:4e42:400::773" TTL: 30 (Proxy cnn.com. AAAA))
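The DNS log above shows cnn.com being tried with three cluster suffixes, each answered with Non-Existent Domain, before the bare name resolves. That order is consistent with standard resolver search-list behavior. The sketch below reproduces the candidate list, assuming the usual Kubernetes Pod resolv.conf for a Pod in the default namespace (search default.svc.cluster.local svc.cluster.local cluster.local, options ndots:5); the file itself isn't shown in this post, so treat those values as assumptions.

```python
# Assumed resolv.conf values for a Pod in the "default" namespace.
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
NDOTS = 5


def candidate_names(name, search=SEARCH, ndots=NDOTS):
    """Names a stub resolver queries, in order, for a lookup of `name`."""
    if name.endswith("."):
        return [name]                          # already fully qualified
    expanded = [f"{name}.{domain}." for domain in search]
    if name.count(".") >= ndots:
        return [name + "."] + expanded         # enough dots: try as-is first
    return expanded + [name + "."]             # otherwise search list first


for candidate in candidate_names("cnn.com"):
    print(candidate)
# cnn.com.default.svc.cluster.local.
# cnn.com.svc.cluster.local.
# cnn.com.cluster.local.
# cnn.com.
```

Every one of these queries passes through the DNS proxy, which is why they all appear in Hubble; the final answer for cnn.com does not open anything, because only ifconfig.me is listed under toFQDNs.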

Here you can see traffic to "ifconfig.me" being forwarded, whereas traffic to other domains (cnn.com in this case) is denied.

Apr 28 01:04:16.656: default/internal-client:54006 (ID:23217) <> cnn.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

Apr 28 01:04:16.656: default/internal-client:54006 (ID:23217) <> cnn.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

Apr 28 01:04:17.699: default/internal-client:54006 (ID:23217) <> cnn.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

Apr 28 01:04:17.699: default/internal-client:54006 (ID:23217) <> cnn.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

Apr 28 01:05:20.563: default/internal-client:54006 (ID:23217) <> 151.101.3.5:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

Apr 28 01:05:20.563: default/internal-client:54006 (ID:23217) <> 151.101.3.5:80 (world) Policy denied DROPPED (TCP Flags: SYN)

Apr 28 01:08:27.832: default/internal-client:54862 (ID:23217) -> ifconfig.me:80 (ID:16777219) policy-verdict:L3-Only EGRESS ALLOWED (TCP Flags: SYN)

Apr 28 01:08:27.833: default/internal-client:54862 (ID:23217) -> ifconfig.me:80 (ID:16777219) to-stack FORWARDED (TCP Flags: SYN)

Apr 28 01:08:27.854: default/internal-client:54862 (ID:23217) -> ifconfig.me:80 (ID:16777219) to-stack FORWARDED (TCP Flags: ACK)

Apr 28 01:08:27.854: default/internal-client:54862 (ID:23217) -> ifconfig.me:80 (ID:16777219) to-stack FORWARDED (TCP Flags: ACK, PSH)

Apr 28 01:08:27.894: default/internal-client:54862 (ID:23217) -> ifconfig.me:80 (ID:16777219) to-stack FORWARDED (TCP Flags: ACK, FIN)

Apr 28 01:08:27.894: default/internal-client:54862 (ID:23217) -> ifconfig.me:80 (ID:16777219) to-stack FORWARDED (TCP Flags: ACK)

Troubleshooting

In this section, I want to go a bit deeper into a flow from productpage to the reviews-v1 Pod. I have added the figure again for convenience. You can clearly see that productpage and reviews-v1 are on different nodes.

In addition, this setup uses VXLAN tunnels to encapsulate traffic between the nodes, as the output in Figure 27 shows.


k.eljamali@LLJXN1XJ9X Network Policies % cilium config view | grep tunnel

routing-mode tunnel

tunnel-protocol vxlan
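As described in the Security Identity section, the source identity travels with the packet. In VXLAN tunnel mode, to our understanding Cilium carries it in the 24-bit VXLAN Network Identifier (VNI) field. The Python sketch below packs and unpacks the 8-byte VXLAN header defined in RFC 7348, using productpage's identity (9889) as the VNI; the helper names are illustrative, and this is not Cilium's datapath code.

```python
import struct

VXLAN_FLAG_I = 0x08  # RFC 7348 "I" flag: the VNI field is valid


def vxlan_header(identity):
    """Pack an 8-byte VXLAN header whose VNI carries a security identity."""
    if not 0 <= identity < 2**24:
        raise ValueError(f"identity {identity} does not fit in the 24-bit VNI")
    # Word 1: 8 flag bits + 24 reserved bits. Word 2: 24-bit VNI + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, identity << 8)


def identity_from_header(header):
    """Recover the identity (VNI) from the first 8 bytes of a VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VNI not marked valid")
    return vni_word >> 8


header = vxlan_header(9889)          # productpage's identity from the table above
print(header.hex())                  # 080000000026a100
print(identity_from_header(header))  # 9889
```

A value such as 16777219, seen for ifconfig.me in the Hubble output, is larger than 2^24 and would not fit in this field.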

It is remarkable how much information Hubble exposes about a single flow.

Figure 28 shows the flow as observed on kind-worker3, the node hosting productpage: all the source and destination information in terms of labels and identities, plus the trace observation point TO_OVERLAY on the cilium_vxlan interface, meaning the traffic is being sent onto the overlay (VXLAN tunnel). The verdict shows that the traffic is forwarded.

{

"flow": {

"time": "2024-04-27T21:36:04.373296596Z",

"uuid": "3ce41f60-b203-4e43-ab34-c89f631f7cd2",

"verdict": "FORWARDED",

"ethernet": {

"source": "9e:f7:72:78:39:c7",

"destination": "d6:55:a3:91:69:37"

},

"IP": {

"source": "10.244.2.148",

"destination": "10.244.1.170",

"ipVersion": "IPv4"

},

"l4": {

"TCP": {

"source_port": 47812,

"destination_port": 9080,

"flags": {

"ACK": true

}

}

},

"source": {

"ID": 1881,

"identity": 9889,

"namespace": "default",

"labels": [

"k8s:app=productpage",

"k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default",

"k8s:io.cilium.k8s.policy.cluster=kind-kind",

"k8s:io.cilium.k8s.policy.serviceaccount=bookinfo-productpage",

"k8s:io.kubernetes.pod.namespace=default",

"k8s:version=v1"

],

"pod_name": "productpage-v1-675fc69cf-8zzxh",

"workloads": [

{

"name": "productpage-v1",

"kind": "Deployment"

}

]

},

"destination": {

"identity": 55669,

"namespace": "default",

"labels": [

"k8s:app=reviews",

"k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default",

"k8s:io.cilium.k8s.policy.cluster=kind-kind",

"k8s:io.cilium.k8s.policy.serviceaccount=bookinfo-reviews",

"k8s:io.kubernetes.pod.namespace=default",

"k8s:version=v1"

],

"pod_name": "reviews-v1-5b5d6494f4-dwvpn"

},

"Type": "L3_L4",

"node_name": "kind-kind/kind-worker3",

"event_type": {

"type": 4,

"sub_type": 4

},

"traffic_direction": "EGRESS",

"trace_observation_point": "TO_OVERLAY",

"is_reply": false,

"interface": {

"index": 6,

"name": "cilium_vxlan"

},

"Summary": "TCP Flags: ACK"

},

"node_name": "kind-kind/kind-worker3",

"time": "2024-04-27T21:36:04.373296596Z"

}

Figure 29 shows the same flow from the perspective of kind-worker (the node hosting reviews-v1), where the traffic is forwarded to the interface connected to the reviews-v1 Pod (lxc240530c746ff, observation point TO_ENDPOINT). Again, the verdict shows that the traffic is forwarded.

{

"flow": {

"time": "2024-04-27T21:36:04.373343054Z",

"uuid": "bf9df4cf-44b2-479a-bd41-9d232d5aacf6",

"verdict": "FORWARDED",

"ethernet": {

"source": "72:e6:23:94:38:bb",

"destination": "02:9e:93:c9:74:57"

},

"IP": {

"source": "10.244.2.148",

"destination": "10.244.1.170",

"ipVersion": "IPv4"

},

"l4": {

"TCP": {

"source_port": 47812,

"destination_port": 9080,

"flags": {

"ACK": true

}

}

},

"source": {

"identity": 9889,

"namespace": "default",

"labels": [

"k8s:app=productpage",

"k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default",

"k8s:io.cilium.k8s.policy.cluster=kind-kind",

"k8s:io.cilium.k8s.policy.serviceaccount=bookinfo-productpage",

"k8s:io.kubernetes.pod.namespace=default",

"k8s:version=v1"

],

"pod_name": "productpage-v1-675fc69cf-8zzxh"

},

"destination": {

"ID": 2039,

"identity": 55669,

"namespace": "default",

"labels": [

"k8s:app=reviews",

"k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default",

"k8s:io.cilium.k8s.policy.cluster=kind-kind",

"k8s:io.cilium.k8s.policy.serviceaccount=bookinfo-reviews",

"k8s:io.kubernetes.pod.namespace=default",

"k8s:version=v1"

],

"pod_name": "reviews-v1-5b5d6494f4-dwvpn",

"workloads": [

{

"name": "reviews-v1",

"kind": "Deployment"

}

]

},

"Type": "L3_L4",

"node_name": "kind-kind/kind-worker",

"event_type": {

"type": 4

},

"traffic_direction": "INGRESS",

"trace_observation_point": "TO_ENDPOINT",

"is_reply": false,

"interface": {

"index": 15,

"name": "lxc240530c746ff"

},

"Summary": "TCP Flags: ACK"

},

"node_name": "kind-kind/kind-worker",

"time": "2024-04-27T21:36:04.373343054Z"

}
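When comparing the two observation points, it can help to pull out only the fields that differ. The sketch below assumes flows were captured as JSON (for example with hubble observe -o json) and extracts verdict, node, direction, observation point, interface and identities. The summarize function is a hypothetical helper, and the sample is a trimmed copy of Figure 28.

```python
import json


def summarize(flow_json):
    """Extract the fields compared in Figures 28 and 29 from one Hubble JSON record."""
    flow = json.loads(flow_json)["flow"]
    return {
        "node": flow["node_name"],
        "verdict": flow["verdict"],
        "direction": flow["traffic_direction"],
        "observation_point": flow["trace_observation_point"],
        "interface": flow.get("interface", {}).get("name"),
        "source": (flow["source"]["pod_name"], flow["source"]["identity"]),
        "destination": (flow["destination"]["pod_name"], flow["destination"]["identity"]),
    }


# Trimmed copy of Figure 28 (fields not used above were removed).
figure_28 = """{"flow": {"verdict": "FORWARDED",
  "source": {"identity": 9889, "pod_name": "productpage-v1-675fc69cf-8zzxh"},
  "destination": {"identity": 55669, "pod_name": "reviews-v1-5b5d6494f4-dwvpn"},
  "node_name": "kind-kind/kind-worker3", "traffic_direction": "EGRESS",
  "trace_observation_point": "TO_OVERLAY",
  "interface": {"index": 6, "name": "cilium_vxlan"}}}"""

for field, value in summarize(figure_28).items():
    print(f"{field:18} {value}")
```

Running the same function on Figure 29 would show node kind-kind/kind-worker, direction INGRESS, observation point TO_ENDPOINT and the lxc interface, with identical source and destination identities.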


Written by

Karim El Jamali


Self-directed and driven technology professional with 15+ years of experience in designing & implementing IP networks. I had roles in Product Management, Solutions Engineering, Technical Account Management, and Technical Enablement.