Take the Hassle Out of Transaction Logging with This Python Script

Jonathan

Jonathan

Before this script I would manually type all of my transaction data into any app or spreadsheet which I swore to myself that I would use. More often than not I’d forget and by the end of the day or worse, I would remember to do in the moment but could not be bothered to log the transaction which had I occured. I said to myself, “Oh, I’ll do it later”.

Trust me, I never did.

So I decided to venture forth and build out a Python script which can:

Filter and pull my transaction data from my emails

Pick out the right data from that email

Upload my transaction data to a spreadsheet

Main

from upload import upload_tx_data

from fetch_emails import extract_email_tx_data

def main () -> None :

try:

tx_data = extract_email_tx_data()

if not tx_data:

print("No new emails to process. Transaction data is empty.")

return None

upload_tx_data(tx_data)

except Exception as error:

raise Exception(f"Error encountered in main: {error}") from error

if __name__ == "__main__":

main()

tx_data = extract_email_tx_data() -> This right here is where we pull ALL of my transaction emails from the day which has passed

if not tx_data -> This captures if there hasn’t been any new transaction details for that day

if there is transaction data we move unto → upload_tx_data(tx_data) -> This is where the magic happens for the uploading of my transaction data to google spreadsheet

Pulling my transaction data from my emails

# Misc

from datetime import datetime, timedelta

from typing import List

# Guard railing

from pydantic import BaseModel, Field

import llm

# Langchain

from langchain_community.tools.gmail.search import GmailSearch

from langchain_community.tools.gmail.utils import (

build_resource_service,

get_gmail_credentials,

)

Importing a few modules from Langchain our main source of connection to the Gmail services. Imports are also done from a local file, and other python imports

Langchain was my library of choice to get a list transactions from my email.

class InvoiceData(BaseModel):

date: str = Field(description="The exact date of when the transaction occured")

time: str = Field(description="The exact time the transaction occur")

amount: float = Field(description="The total cost of the transaction")

merchant: str = Field(description="The person who the transaction occured between")

status: str = Field(description="Whether or not the transaction was approved or declined")

type: str = Field(description="Whether if the transaction was a withdrawal, deposit or other type of transaction")

Here I created class to act as a custom data type from pydantic the library.

If you’ve ever used ChatGPT before you know it has the issue of hallucinations. Where it will make information up if it doesn’t understand or doesn’t know where the information should come from. This class prevents that. Where only the information that I’ve specified will be considered in the final output.

def fetch_emails() -> List[dict]:

# Gathering the google data

credentials = get_gmail_credentials(

client_secrets_file='CREDENTIALS.json',

token_file='TOKEN.json',

scopes=["https://mail.google.com/"],

)

# Building a api resource service for GMail

api_resource = build_resource_service(credentials=credentials)

# Initializes the search object

search = GmailSearch(api_resource=api_resource)

# Searches for emails based on a given query

query = "from:no-reply-ncbcardalerts@jncb.com subject:TRANSACTION APPROVED after:{}".format(

(datetime.now() - timedelta(days=1)).strftime("%Y/%m/%d"))

# a list of dictionaries containing the transaction email data

emails: List[dict] = search(query)

if emails:

# if there are emails, return a list of dictionaries containing the transaction email data

print(f"{len(emails)} new email(s) found!")

return [{ "body": email["body"] } for email in emails]

else:

print("No new emails found!")

return []

Within this script, fetch_emails I created an instance of a GmailSearch object provided by Langchain having given it the appropriate authorizartion.

This function uses the Gmail API to fetch emails based on a given query, which in our case is the specific bank from the day before.

The function returns a list of dictionaries containing the email data. Each dictionary has a single key-value pair where the key is “body” and the value is the email body in the instance that it finds this data.

If there is an email then a message is printed to the console and the list of emails are passed to be processed or if there isn’t an empty list is returned.

Picking out the right data from my emails

def extract_email_tx_data() -> List[str]:

# Instructions sent to the LLM

template = """

# Context

Below are bodies of text which contain transaction data.

# Goal

Without commenting, adding comments or notes, extract the following from EMAIL TRANSACTION DATA: date, time, amount, merchant, status, type

# EMAIL TRANSACTION DATA

{mail_list}

# Format

{format_instructions}

"""

# How the message received from the LLM should be formatted

output_parser = PydanticOutputParser(pydantic_object=InvoiceData)

# Create a prompt for the LLM

prompt = PromptTemplate(

template=template,

input_variables=["mail_list"],

partial_variables={"format_instructions": output_parser.get_format_instructions()},

)

# Create the chain for the LLM call

chain = prompt | llm.LLMs.mistral | output_parser

# Fetch emails

mail_list = fetch_emails()

results = [chain.invoke({"mail_list": eachEmail}) for eachEmail in mail_list]

return results

The previous fetch_emails function is used here. Within this function a set of textual instructions called prompts are given to the AI model along with an email.

From this email the: date, time, amount, merchant, status and type are extract from each transaction based on the custom data type I create before.

All the information from the prompt is assigned values inside the PromptTemplate and then a chain is curated.

I want to take a moment to explain the section in the chain,

“llm.LLMs.mistral”

This is coming from a python script called llm.py. Within it there are two classes: ChatOpenRouter and LLMs

Using LLMs for free

I’m unemployed. I have been since January. As such I needed a way to use these AI models without incurring any costs. My research led me to OpenRouter, they provide a small selection of free and very good models to work with.

However, Langchain doesn’t have native support for it. This posed an initial issue for until for a special Medium article posted last month.

In it the author, Gal Peretz, discusses using open source models without the ops hassle.

This is where ChatOpenRouter comes from.

class ChatOpenRouter(ChatOpenAI):

"""Class for OpenAI LLMs"""

openai_api_base: str

openai_api_key: str

model_name: str

def __init__(self,

model_name: str,

openai_api_key: Optional[str] = None,

openai_api_base: str = "https://openrouter.ai/api/v1",

**kwargs):

openai_api_key = openai_api_key or os.getenv('OPENAI_API_KEY')

super().__init__(openai_api_base=openai_api_base,

openai_api_key=openai_api_key,

model_name=model_name, **kwargs)

OpenRouter uses the same api calling format as OpenAI so we can implement an interface to use it within Langchain.

Thus allowing us to call the free models there.

class LLMs():

"""Class of LLMs"""

# Free AI Models we will be using

mistral = ChatOpenRouter(

model_name="mistralai/mistral-7b-instruct:free",

openai_api_key=os.environ.get('OPENAI_API_KEY')

)

mythomist = ChatOpenRouter(

model_name="gryphe/mythomist-7b:free",

openai_api_key=os.environ.get('OPENAI_API_KEY')

)

From my testing these two models performed the best in my use case.

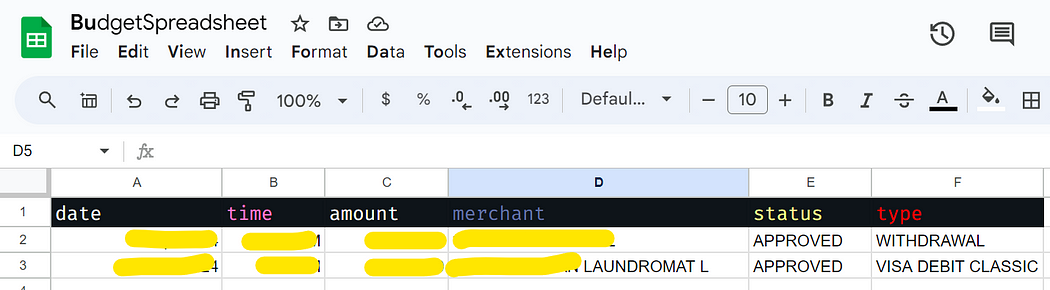

Uploading Transaction Data

So lets say yesterday I went out and conducted a transaction at the supermarket. This would mean that within the last 24 hours there would be a new transaction within my email.

If there is transaction data how would it get to my google spreadsheet?

This is where our final script, upload.py, would come into play.

def upload_tx_data(tx_data: List[InvoiceData]) -> None:

# scope for the google sheet

scope = ['https://spreadsheets.google.com/feeds','https://www.googleapis.com/auth/drive']

# credentials for the google sheet

creds = ServiceAccountCredentials.from_json_keyfile_name(filename='GSHEETS.json', scopes=scope)

# authorize the clientsheet

client = gspread.authorize(creds)

# the file path for the csv file

budget_spreadsheet = client.open('BudgetSpreadsheet')

# get the first sheet of the Spreadsheet

budget_spreadsheet_instance = budget_spreadsheet.get_worksheet(0)

# transaction data that is being passed off to the dataframe

# Convert the list of InvoiceData objects to a DataFrame

df = pd.DataFrame([tx.model_dump() for tx in tx_data])

# Write the DataFrame to Google Sheets

budget_spreadsheet_instance.append_rows(values=df.values.tolist(), value_input_option='USER_ENTERED')

Inside of main.py we passed the transaction data to this function which creates an instance of a specific google spreadsheet based on its name having been given authorization with my credentials.

The data from the transactions is then transformed into lists and appended to the last unoccupied row of the spreadsheet.

Here’s an example of what that looks like:

There you have it folks.

In conclusion, automating transaction logging using a Python script can significantly streamline the process of tracking financial activities. By leveraging AI models and open-source tools, users can efficiently extract, filter, and upload transaction data from emails to a spreadsheet.

This automation not only saves time but also reduces the chances of missing important transactions.

Embracing technology to simplify administrative tasks like transaction logging showcases the power of innovation in everyday workflows.

If you're looking to enhance your productivity through automation, exploring similar solutions tailored to your needs could be a game-changer.

If you have an automation that you want for yourself that falls in the category of admin task for yourself or even your business.

Lets talk. I’m taking 3 users right now for free.

Click here so we can start a conversation.

I'm also on: Twitter, Github & LinkedIn

Requests and questions: If you have a project in mind that you'd like me to work on or if you have any questions about the concepts I've explained, don't hesitate to let me know. I'm always looking for new ideas and I love helping to resolve any doubts you might have.

Subscribe to my newsletter

Read articles from Jonathan directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Jonathan

Jonathan

Writer | Reader | Developer from 🇯🇲 | Building Langchain Bots