AI-Powered PCs Projected to Reach 167 Million Shipments by 2027

Spheron Network

The exponential surge in AI has convinced businesses that incorporating AI is no longer a choice but a necessity. With large language models (LLMs) becoming ubiquitous, companies are compelled to revisit their operational strategies. AI's enormous potential across industries has prompted businesses to actively seek ways to integrate these technologies into their daily workflows.

The need for powerful computing resources has become more pressing as AI grows at an unprecedented pace. Just as a car requires fuel to run, AI demands a robust computing infrastructure to perform its tasks effectively. Whether it is analyzing customer data or optimizing supply chain logistics, AI requires significant computing power. The surge in AI applications across sectors underscores the critical need for companies to fortify their computing infrastructure. Without sufficient resources, AI's potential remains untapped, so businesses need to invest in powerful hardware to make full use of AI in their daily operations.

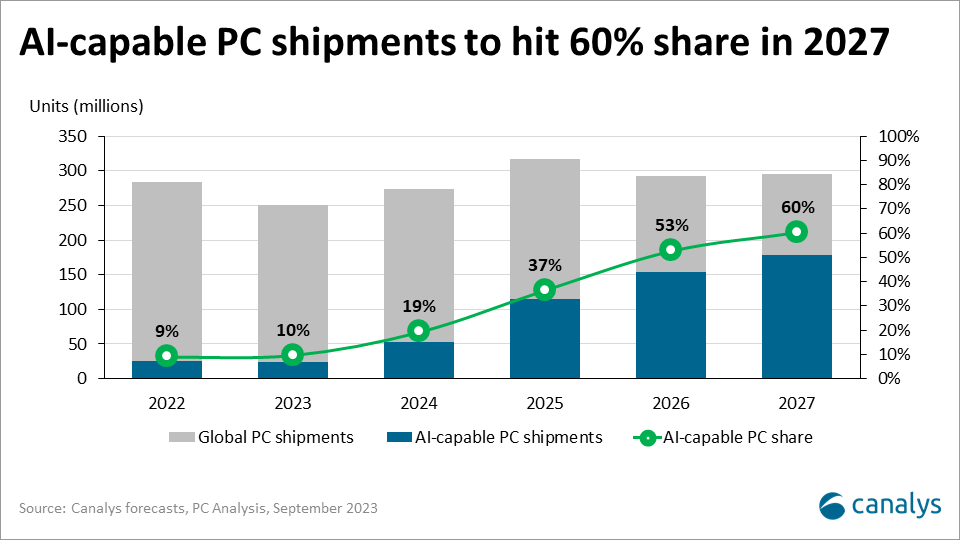

According to an International Data Corporation (IDC) report, PCs with built-in AI computing capabilities are expected to account for nearly 60% of all PC shipments by 2027.

IDC projects a substantial increase through 2027 in shipments of PCs equipped with a specialized system-on-a-chip (SoC) for handling general AI tasks. The forecast predicts a surge from 50 million units in 2024 to an estimated 167 million by the end of 2027. According to IDC, this growth is driven by PC silicon vendors introducing specialized silicon, called neural processing units (NPUs), that makes AI tasks more efficient. IDC groups NPU-equipped AI PCs into three types:

- Hardware-enabled AI PCs: The latest chips from leading players like Qualcomm, Apple, AMD, and Intel now ship with NPUs that deliver less than 40 tera operations per second (TOPS). This is enough to process specific AI features locally on the device.

- Next-generation AI PCs: Chips that Qualcomm, AMD, and Intel plan to launch in 2024 feature NPUs with 40-60 TOPS of performance. Paired with an AI-first operating system, these devices are intended to offer persistent and pervasive AI capabilities. Microsoft is also preparing major updates to Windows 11 to take advantage of these high-TOPS NPUs.

- Advanced AI PCs: These devices are projected to offer more than 60 TOPS of NPU performance. Although no vendors have announced such products yet, IDC foresees their emergence in the near future and plans to incorporate them into future updates of its forecast.
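The three tiers above amount to a simple threshold rule on NPU performance. The following is a minimal sketch of that rule, assuming the boundaries as quoted in this article (under 40, 40-60, and over 60 TOPS). The function name `classify_ai_pc` and the sample TOPS values are hypothetical and do not describe any real chip.

```python
def classify_ai_pc(npu_tops: float) -> str:
    """Map an NPU's TOPS rating to the AI PC tier described in the list above."""
    if npu_tops <= 0:
        raise ValueError("npu_tops must be positive")
    if npu_tops < 40:
        return "Hardware-enabled AI PC"
    if npu_tops <= 60:
        return "Next-generation AI PC"
    return "Advanced AI PC"


if __name__ == "__main__":
    # Hypothetical TOPS values chosen only to exercise each tier.
    for tops in (11, 45, 75):
        print(f"{tops} TOPS: {classify_ai_pc(tops)}")
```

Treating exactly 40 and exactly 60 TOPS as next-generation is a choice made for this sketch, based on the "40-60" range given above.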

Alongside this robust growth in NPU-based systems, IDC predicts that PCs using cloud-based AI services will outnumber those relying on NPUs by two to one. This signals a shift towards AI integration across diverse PC categories, reflecting the industry’s evolution towards enhanced AI capabilities.
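To put the shipment forecast quoted earlier in perspective (50 million units in 2024, 167 million in 2027), the short sketch below works out the total growth and the compound annual growth rate those two figures imply. Only the two shipment numbers come from the article. The helper name `implied_growth` is hypothetical, and the calculation assumes three full years of compounding.

```python
def implied_growth(start_units: float, end_units: float, years: int) -> tuple[float, float]:
    """Return (total growth, compound annual growth rate) as fractions."""
    ratio = end_units / start_units
    total_growth = ratio - 1
    cagr = ratio ** (1 / years) - 1
    return total_growth, cagr


if __name__ == "__main__":
    # 50 million units (2024) to 167 million units (2027), per the forecast above.
    total, cagr = implied_growth(50e6, 167e6, years=3)
    print(f"Total growth 2024-2027: {total:.0%}")    # roughly 234%
    print(f"Implied annual growth rate: {cagr:.1%}")  # roughly 49.5%
```

In other words, shipments would need to grow by about half each year to move from 50 million to 167 million units over that period.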

More AI-optimized processors are set for release in the coming quarters

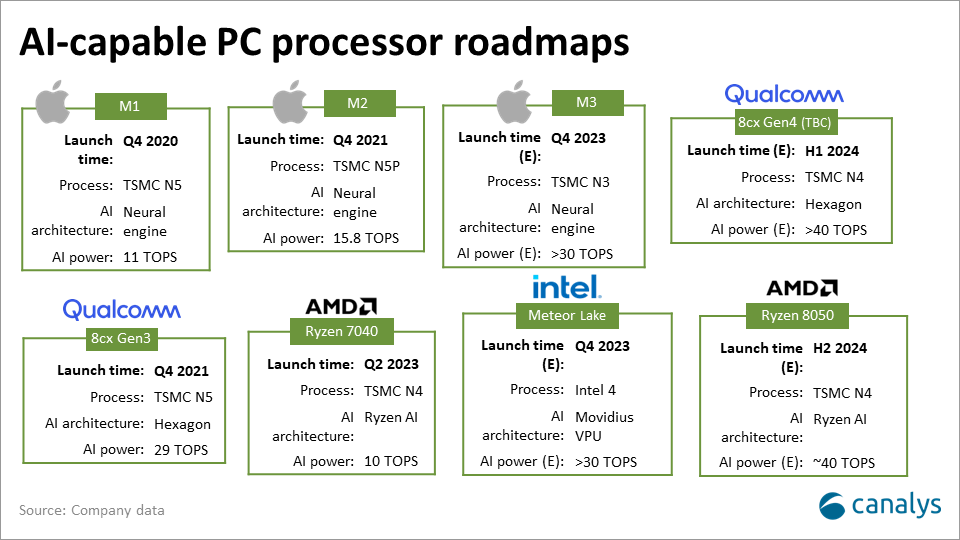

To evaluate the rapidly evolving landscape of AI-capable PCs, it helps to examine each processor manufacturer's strategic direction and roadmap. Apple took the lead in this domain by introducing AI integration with its M1 Neural Engine in Q4 2020. With Intel's exit from Mac products, Apple became the first manufacturer with a wholly AI-capable PC lineup by Q2 2023, with the M3 series to follow.

Qualcomm, meanwhile, aims for a greater market presence by enhancing the AI capabilities of its ARM-based 8cx Gen3 chipset and integrating it with Windows Copilot. Its 2024 upgrade featuring the Nuvia architecture is expected to make a significant impact. In the x86 realm, AMD moved first, introducing its AI offering with the "Phoenix" Ryzen 7040 series in Q2 2023, ahead of Intel's Meteor Lake debut.

AMD's early move encouraged PC OEMs to adopt its technology, which helped them capitalize on the market's momentum. AMD's anticipated 8050 series, expected by H2 2024, should bring further AI enhancements. Despite its later entry, Intel has emphasized AI by embedding the Movidius VPU in its Meteor Lake range. Given Intel's market dominance, this strategy is set to drive broader AI-PC adoption from 2024 onward. With its forthcoming Arrow Lake and Lunar Lake generations, Intel plans to raise the stakes in AI chipsets and position itself at the forefront of AI-capable PCs.

Moreover, vendors with substantial shipment volumes should consider developing their own processors using ARM or RISC-V architectures. While this approach involves higher initial costs, it offers optimized performance, power efficiency, and enhanced security. Over time, as shipment volumes grow, this strategy can reduce costs, granting vendors greater resilience and ownership over their technology.

Demand for components will escalate as AI tools advance

The current definition of an AI-capable PC is just the foundational benchmark. For advanced AI software and tools, enhanced component performance becomes vital. Canalys predicts that AI functionality available to users will evolve rapidly over the coming years, ramping up complexity. These advancements will amplify the need for components such as memory, storage, and GPUs, eventually tailored to specific use cases. As optimized Large Language Models (LLMs) become pre-installed on PCs, a combination of increased storage capacity with high-speed interfaces becomes essential. Similarly, running these LLMs will necessitate more memory and a robust GPU.
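As a rough illustration of why on-device LLMs increase memory and storage needs, the sketch below estimates the size of a model's weights as parameter count times bytes per parameter. The 7-billion-parameter model size and the precision options are assumptions chosen for the example and are not figures from Canalys or IDC. The estimate covers weights only and ignores runtime overhead such as activations and caches.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Approximate size of model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model, used only as an example size.
    for precision in ("fp16", "int8", "int4"):
        size = weight_footprint_gb(7e9, precision)
        print(f"{precision}: about {size:.1f} GB of weights")
```

Under these assumptions, the same model needs about 14 GB at 16-bit precision but about 3.5 GB at 4-bit precision, which is one reason optimized models are better suited to pre-installation on PCs.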

This demand is likely to push many chip makers to build specialized AI chips, and it is expected to keep growing for years to come. At Spheron Network, we’re building a decentralized network of powerful GPUs. We’re optimistic about the future and forever bullish!

Written by

Spheron Network

On-demand DePIN for GPU Compute