[AWS] S3Bucket - lifecycle + Terraform Project 09

Mohamed El Eraki

Inception

Hello everyone. This article is part of the Terraform + AWS series. It does not depend on the previous articles; I use this series to publish projects and knowledge.

Overview

Managing your storage lifecycle lets you transition objects between Amazon S3 storage classes. An Amazon S3 Lifecycle configuration is a set of rules that define the actions S3 applies to a group of objects. There are two types of actions:

Transition actions:

These actions move a group of objects between storage classes based on several factors, i.e. a filter, an age in days, and a target storage class. For example, you may choose to transition objects to the S3 Standard-IA storage class 30 days after creation time, then transition them again to S3 Glacier 90 days after creation time.

Expiration actions:

These actions define when objects expire. Amazon S3 deletes expired objects on your behalf. For example, you may choose to set an expiration time of 365 days after creation time on a group of objects, after which S3 deletes them for you.
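
To make the two action types concrete, here is a minimal Python sketch of how an object's age maps to a storage class under the example above (Standard-IA at 30 days, Glacier at 90 days, expiry at 365 days). The function name `storage_class_at` and the constants are hypothetical and exist only for illustration; real S3 applies lifecycle rules asynchronously, so actual timing can lag behind this idealized model.

```python
from typing import List, Optional, Tuple

# Hypothetical values mirroring the example in the text:
# (minimum age in days, target storage class).
TRANSITIONS: List[Tuple[int, str]] = [(30, "STANDARD_IA"), (90, "GLACIER")]
EXPIRATION_DAYS: Optional[int] = 365


def storage_class_at(
    age_days: int,
    transitions: List[Tuple[int, str]] = TRANSITIONS,
    expiration_days: Optional[int] = EXPIRATION_DAYS,
) -> Optional[str]:
    """Return the storage class an object would be in at the given age,
    or None once the object has expired (idealized model)."""
    if expiration_days is not None and age_days >= expiration_days:
        return None  # expiration action: S3 deletes the object
    current = "STANDARD"  # assumption: objects are uploaded as S3 Standard
    for min_age, storage_class in sorted(transitions):
        if age_days >= min_age:
            current = storage_class  # transition action
    return current


for age in (0, 45, 120, 400):
    print(age, storage_class_at(age))
```

The sketch only models age-based rules; filters (prefix, tags, size) decide which objects a rule applies to in the first place.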

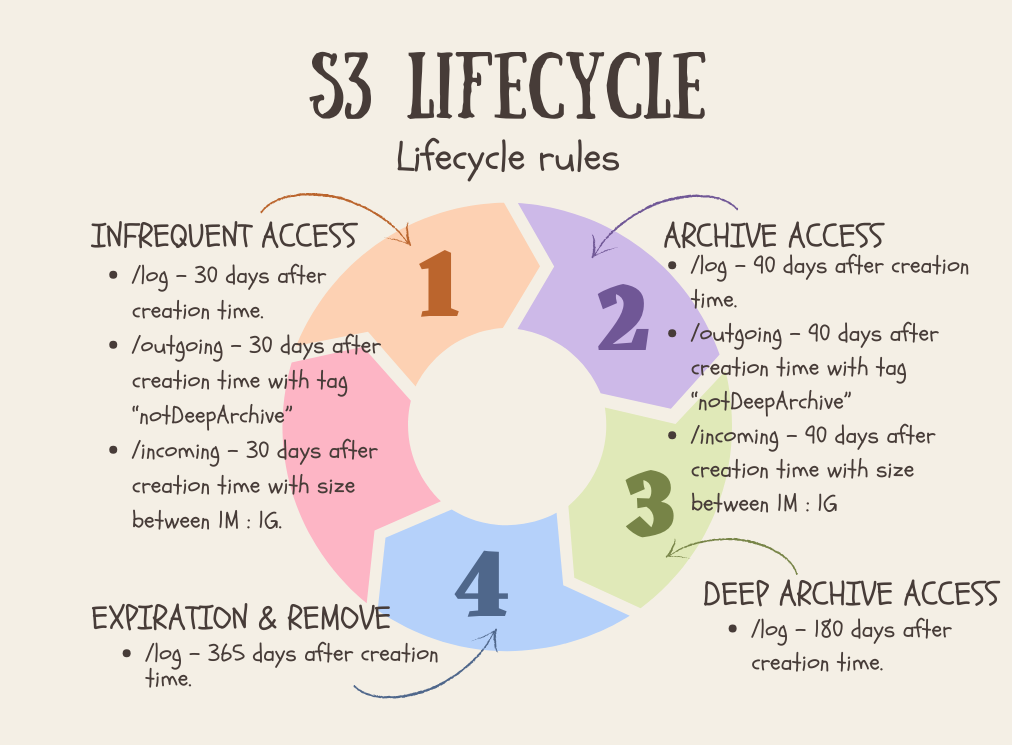

Today's example creates an Amazon S3 bucket and configures the S3 Lifecycle rules as follows:

Transition all files under /logs to infrequent access (i.e. Standard-IA) 30 consecutive days after creation time.

Transition all files under /logs to Archive access (i.e. Glacier) 90 consecutive days after creation time.

Transition all files under /logs to Deep Archive access (i.e. Glacier Deep Archive) 180 consecutive days after creation time.

Remove all files under /logs 365 consecutive days after creation time.

Transition all files under /outgoing with tag "notDeepArchive" to infrequent access (i.e. Standard-IA) 30 consecutive days after creation time.

Transition all files under /outgoing with tag "notDeepArchive" to Archive access (i.e. Glacier) 90 consecutive days after creation time.

Remove all files under /outgoing with tag "notDeepArchive" 365 consecutive days after creation time.

Transition all files under /incoming with a size between 1 MB and 1 GB to infrequent access (i.e. Standard-IA) 30 consecutive days after creation time.

Transition all files under /incoming with a size between 1 MB and 1 GB to Archive access (i.e. Glacier) 90 consecutive days after creation time.
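
The rules above select objects by prefix, tag, and size. As a rough illustration of how such filters combine (every condition must hold), here is a small Python sketch. `RuleFilter` is a hypothetical class, not an AWS type; the prefixes, the tag key `Name`, and the byte limits are taken from the Terraform code later in this article, and treating the size bounds as exclusive is an assumption based on the parameter names.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class RuleFilter:
    """Hypothetical model of a lifecycle rule filter (illustration only)."""
    prefix: str = ""
    tags: Dict[str, str] = field(default_factory=dict)
    size_greater_than: Optional[int] = None  # bytes, assumed exclusive
    size_less_than: Optional[int] = None     # bytes, assumed exclusive

    def matches(self, key: str, size: int, tags: Dict[str, str]) -> bool:
        """Return True only if the object satisfies every condition."""
        if not key.startswith(self.prefix):
            return False
        if any(tags.get(k) != v for k, v in self.tags.items()):
            return False
        if self.size_greater_than is not None and size <= self.size_greater_than:
            return False
        if self.size_less_than is not None and size >= self.size_less_than:
            return False
        return True


logs = RuleFilter(prefix="logs/")
outgoing = RuleFilter(prefix="outgoing/", tags={"Name": "notDeepArchive"})
incoming = RuleFilter(
    prefix="incoming/",
    size_greater_than=1_000_000,
    size_less_than=1_073_741_824,
)

print(outgoing.matches("outgoing/report.csv", 2048, {"Name": "notDeepArchive"}))
print(incoming.matches("incoming/tiny.txt", 500, {}))
```

An object under outgoing/ without the tag, or an object under incoming/ outside the size range, is left untouched by the corresponding rule.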

Building-up Steps

The Architecture Design Diagram:

Building-up Steps details:

Today we will build an S3 bucket, S3 directory objects, and an S3 lifecycle configuration with the rules mentioned above. The infrastructure will be built using Terraform. ✨

Deploy an S3 Bucket.

Create directories called outgoing, incoming, and logs at the root of the deployed bucket.

Deploy the lifecycle configuration rules mentioned above.

Enough talking, let's move on... 😉

Clone The Project Code

Clone the repository to your local machine as follows:

    pushd ~   # change to your home directory
    git clone https://github.com/Mohamed-Eleraki/terraform.git
    pushd ~/terraform/AWS_Demo/16-S3BucketArchive

- Open the project in VS Code, or any editor you like:

    code .   # open the current directory in VS Code

Terraform Resources + Code Steps

Once you open the code in your editor, you will notice that the resources are already defined. However, we will walk through creating them together, step by step.

Configure the Provider

- Create a new file called configureProvider.tf with the following content:

    # Pin the AWS provider source and version
    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.0"
        }
      }
    }

    # Configure the AWS provider
    provider "aws" {
      region  = "us-east-1"
      profile = "eraki" # Replace this with your AWS CLI profile name,
                        # or remove it if you're using the default one.
    }

Create S3 Bucket Resources

- Create a new file called s3.tf, then create an S3 bucket:

    # Deploy an S3 bucket and directories
    resource "aws_s3_bucket" "s3_01" {
      bucket = "eraki-s3-dev-01"
    }

- Deploy the S3 directory objects:

    # Create outgoing directory
    resource "aws_s3_object" "directory_object_s3_01_outgoing" {
      bucket       = aws_s3_bucket.s3_01.id
      key          = "outgoing/"
      content_type = "application/x-directory"
    }

    # Create incoming directory
    resource "aws_s3_object" "directory_object_s3_01_incoming" {
      bucket       = aws_s3_bucket.s3_01.id
      key          = "incoming/"
      content_type = "application/x-directory"
    }

    # Create logs directory
    resource "aws_s3_object" "directory_object_s3_01_logs" {
      bucket       = aws_s3_bucket.s3_01.id
      key          = "logs/"
      content_type = "application/x-directory"
    }

- Deploy the lifecycle configuration:

    # Deploy lifecycle resource configuration
    resource "aws_s3_bucket_lifecycle_configuration" "lifeCycle_configs" {
      bucket = aws_s3_bucket.s3_01.id

      #######################
      # logs directory rule #
      #######################
      rule {
        id = "log-directory"

        filter { # filter the bucket based on the path prefix
          prefix = "logs/"
        }

        transition { # move the files to the infrequent access tier 30 days after creation time
          days          = 30
          storage_class = "STANDARD_IA"
        }

        transition { # move the files to the archive tier 90 days after creation time
          days          = 90
          storage_class = "GLACIER"
        }

        transition { # move the files to the deep archive tier 180 days after creation time
          days          = 180
          storage_class = "DEEP_ARCHIVE"
        }

        expiration { # delete objects a year after creation time
          days = 365
        }

        # Enable the rule
        status = "Enabled"
      }

      ###########################
      # outgoing directory rule #
      ###########################
      rule {
        id = "outgoing-directory"

        # A filter accepts exactly one top-level predicate, so the prefix
        # and the tag are combined inside a single "and" block.
        filter {
          and {
            prefix = "outgoing/"
            tags = {
              Name = "notDeepArchive"
            }
          }
        }

        transition { # move to the infrequent access tier 30 days after creation time
          days          = 30
          storage_class = "STANDARD_IA"
        }

        transition { # move to the archive tier 90 days after creation time
          days          = 90
          storage_class = "GLACIER"
        }

        expiration { # delete objects a year after creation time
          days = 365
        }

        # Enable the rule
        status = "Enabled"
      }

      ###########################
      # incoming directory rule #
      ###########################
      rule {
        id = "incoming-directory"

        # Prefix and size bounds are combined in an "and" block for the
        # same reason as above.
        filter {
          and {
            prefix                   = "incoming/"
            object_size_greater_than = 1000000    # bytes (1 MB, decimal)
            object_size_less_than    = 1073741824 # bytes (1 GiB)
          }
        }

        transition { # move to the infrequent access tier 30 days after creation time
          days          = 30
          storage_class = "STANDARD_IA"
        }

        transition { # move to the archive tier 90 days after creation time
          days          = 90
          storage_class = "GLACIER"
        }

        # Enable the rule
        status = "Enabled"
      }
    }
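
A note on the incoming rule's size bounds, 1000000 and 1073741824 bytes: the first is a decimal megabyte while the second is a binary gibibyte. The short Python sketch below just spells out the arithmetic so you can pick whichever convention you prefer; the constant names are only illustrative.

```python
# Decimal (SI) units
MB = 10**6   # 1,000,000 bytes
GB = 10**9   # 1,000,000,000 bytes

# Binary (IEC) units
MIB = 2**20  # 1,048,576 bytes
GIB = 2**30  # 1,073,741,824 bytes

lower_bound = 1000000      # value used for object_size_greater_than
upper_bound = 1073741824   # value used for object_size_less_than

print("lower bound is 1 MB: ", lower_bound == MB)
print("upper bound is 1 GiB:", upper_bound == GIB)
print("difference between 1 GiB and 1 GB in bytes:", GIB - GB)
```

If you want both bounds in the same convention, use either 1048576 and 1073741824 (binary) or 1000000 and 1000000000 (decimal).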

Apply Terraform Code

Now that your Terraform code is configured, it's time for the exciting part: applying the code and watching it become real. 😍

- First things first, let's initialize the working directory (this downloads the AWS provider) and format the code:

    terraform init
    terraform fmt

- Running a plan first is always good practice (or you can go straight to apply 😁):

    terraform plan

- If no errors appear and you agree with the planned resources, apply:

    terraform apply -auto-approve

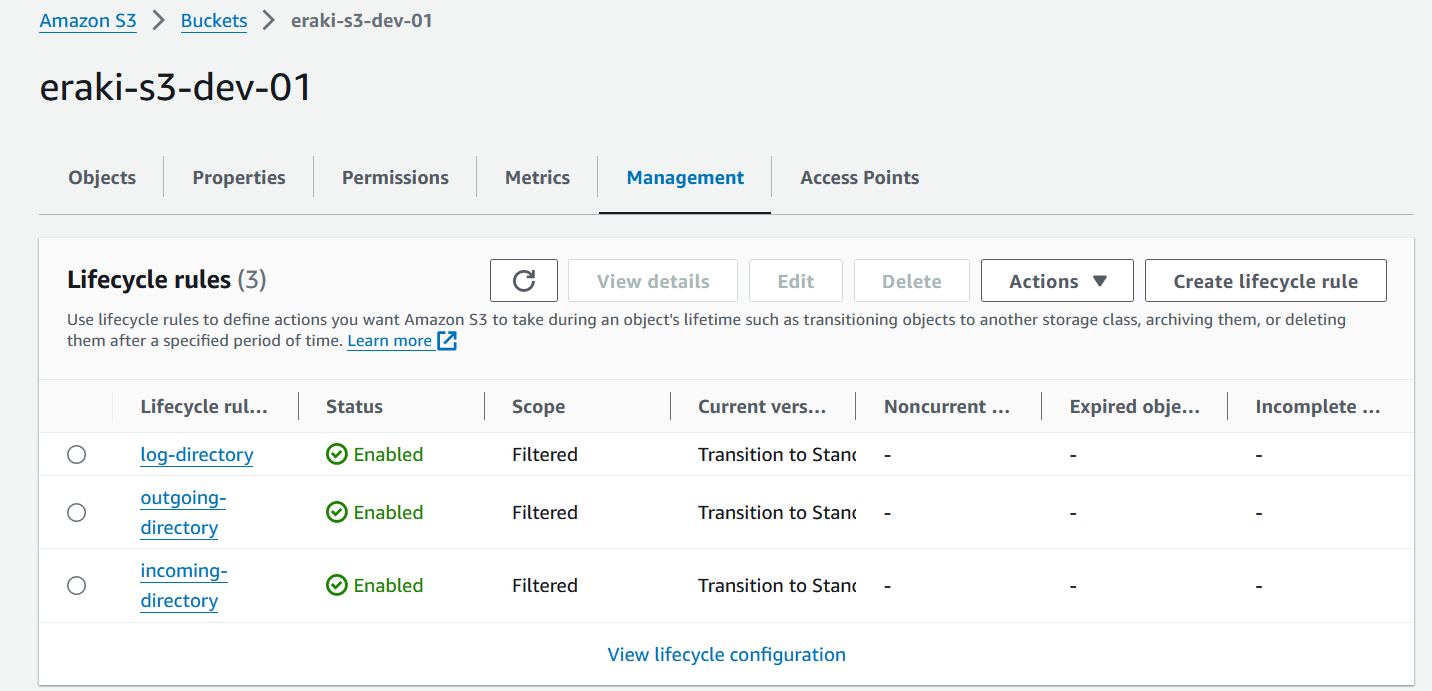

Check S3 lifecycle configuration

Open your S3 bucket.

Select the Management tab to view the rules we created, as follows:

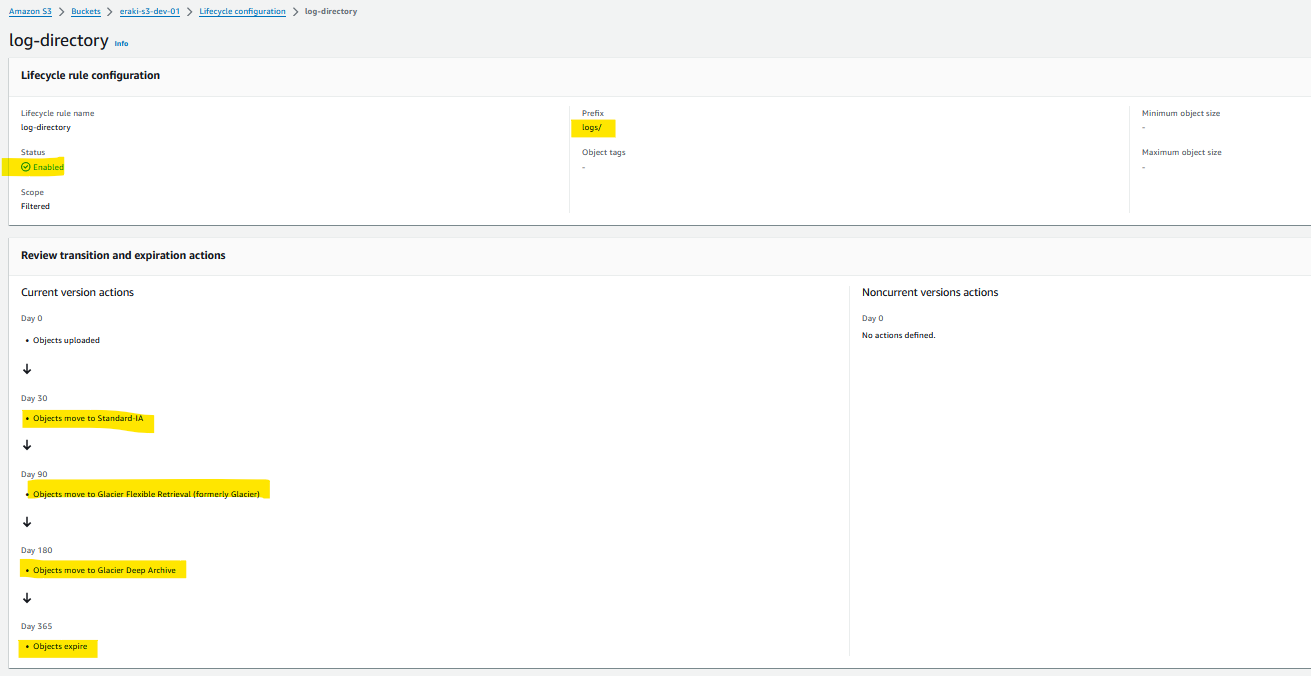

- Open any rule to view its configuration.
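
If you would rather check the rules from code than from the console, the following is a minimal Python sketch using boto3's `get_bucket_lifecycle_configuration`. It assumes boto3 is installed and that the AWS CLI profile has read access to the bucket; the helper names `summarize_rules` and `fetch_lifecycle` are hypothetical, and date-based transitions are ignored for brevity.

```python
from typing import List


def summarize_rules(response: dict) -> List[str]:
    """Turn a GetBucketLifecycleConfiguration response into one line per rule."""
    lines = []
    for rule in response.get("Rules", []):
        steps = [
            f"{t['Days']}d->{t['StorageClass']}"
            for t in rule.get("Transitions", [])
            if "Days" in t  # date-based transitions are skipped in this sketch
        ]
        expiry_days = rule.get("Expiration", {}).get("Days")
        if expiry_days is not None:
            steps.append(f"{expiry_days}d->expire")
        rule_id = rule.get("ID", "?")
        status = rule.get("Status", "?")
        lines.append(f"{rule_id} [{status}]: " + ", ".join(steps))
    return lines


def fetch_lifecycle(bucket: str, profile: str) -> dict:
    """Read the lifecycle configuration of a bucket (needs AWS credentials)."""
    import boto3  # imported lazily so summarize_rules works without boto3

    session = boto3.Session(profile_name=profile)
    return session.client("s3").get_bucket_lifecycle_configuration(Bucket=bucket)
```

With the names used in this article, `summarize_rules(fetch_lifecycle("eraki-s3-dev-01", "eraki"))` should return one line for each of the three rules.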

Destroy environment

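Destroying the environment with Terraform is very simple. Keep in mind that S3 refuses to delete a non-empty bucket, so if you uploaded any objects outside of Terraform, empty the bucket first.

- Destroy all resources using Terraform:

    terraform destroy -auto-approve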

Conclusion

Leveraging S3 lifecycle configurations to automatically transition files to different storage classes based on predefined criteria such as path, size, and tags optimizes storage costs: data is kept in the most cost-effective class while accessibility and compliance requirements are still met. By managing the data lifecycle efficiently, organizations can streamline operations, reduce manual intervention, and improve data management practices within their AWS S3 environment.

That's it. Very straightforward, very fast 🚀. I hope this article inspired you, and I would appreciate your feedback. Thank you.
