Setting Up Cross-Region Internal Application Load Balancer on Google Cloud using Terraform CDK: A Comprehensive Guide

Anton Ermak

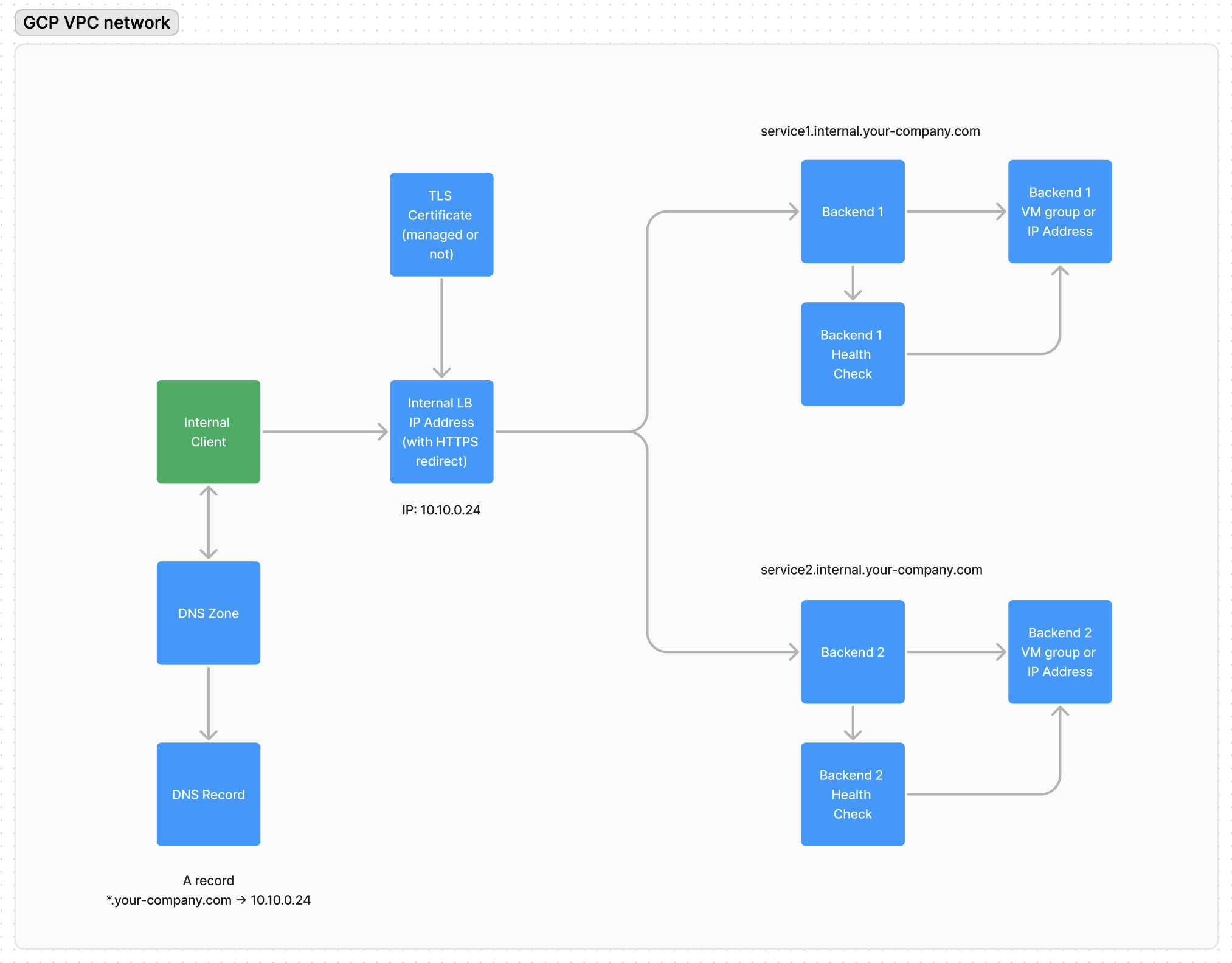

In this article, I will explain how to set up an internal Application Load Balancer in Google Cloud. It will be useful if you want to configure an L7 (HTTP/HTTPS) load balancer for internal services that have no external IP address, together with TLS certificates and DNS records.

HTTP(S) request lifecycle in the network

Consider the request `curl https://service1.internal.your-company.com`. The following steps happen before the client receives a response:

1. The client resolves the DNS record `service1.internal.your-company.com` to the internal address `10.10.2.24`.
2. The HTTP client (in this case `curl`) establishes a TCP connection with the load balancer at `10.10.2.24` and sends an HTTP(S) request.
3. If the request uses HTTPS, a TLS handshake takes place and the client receives the load balancer's certificate, in this case `*.internal.your-company.com`. The client verifies the certificate, and the request is encrypted with a session key. For a plain HTTP request, the load balancer can answer with a redirect to HTTPS, provided that an HTTP listener with such a redirect is configured (the setup below only listens on port 443).
4. Using the URL map and the `Host` header of the request, the load balancer routes the request to the appropriate backend.
5. The backend (a VM, a Cloud Run instance, etc.) processes the request and sends a response.
6. `curl` displays the response on the screen.
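Step 3 only succeeds if the wildcard certificate actually covers the requested hostname. The following stdlib-only Kotlin sketch illustrates the usual matching rule from RFC 6125: the wildcard may only be the entire left-most label and stands for exactly one label. `certificateCoversHost` is a hypothetical helper written for this article, not a Google Cloud or curl API, and real TLS clients apply further restrictions.

```kotlin
// Returns true if a certificate name such as "*.internal.your-company.com" covers the host.
// Simplified RFC 6125 rule: "*" must be the whole left-most label and matches exactly one label.
fun certificateCoversHost(certificateName: String, host: String): Boolean {
    val certLabels = certificateName.lowercase().trimEnd('.').split(".")
    val hostLabels = host.lowercase().trimEnd('.').split(".")
    // A wildcard never spans several labels, so the label counts must be equal.
    if (certLabels.size != hostLabels.size) return false
    return certLabels.zip(hostLabels).withIndex().all { (index, pair) ->
        val (certLabel, hostLabel) = pair
        if (index == 0 && certLabel == "*") hostLabel.isNotEmpty() else certLabel == hostLabel
    }
}
```

With this rule, one certificate serves `service1.internal.your-company.com` and `service2.internal.your-company.com`, but not `internal.your-company.com` itself or nested names such as `a.b.internal.your-company.com`.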

Depending on the type of load balancer (regional or cross-region), its IP addresses are located in a single region or spread across several regions of the network. DNS records can also be configured to return the IP address closest to the client. This setup is less common, but it can be built in a similar way to the example in this article.

The internal client could be your own software or, for example, a VPN gateway (WireGuard, Tailscale, Twingate, etc.).

Setting up

To set up the infrastructure, we will use Terraform. As of 2024, the GCP Console does not support all of the required resources. Additionally, since there are quite a few components, creating them manually with the gcloud CLI is very labor-intensive.

I will be using Kotlin and CDKTF in the examples, but you can use HCL or another language supported by CDKTF.

I highly recommend this CDKTF article if you have not used it before.

Domain name and Google-managed certificate

First, we will need an internal certificate. There are several options here:

1. Use a subdomain of a domain you already manage, for example `*.internal.your-company.com`. This way, browsers work with the site seamlessly through the chain of publicly trusted certificates.
2. Use a separate private zone, for instance `your-company.internal`. In this case, you will need to create your own Certificate Authority (CA) and add it to the browsers of your employees or to your services. You can then issue new certificates signed with the CA's private key.

The first option is simpler: GCP can issue and renew such a certificate on its own using DNS authorization, which is very similar to the ACME DNS-01 challenge used by Let's Encrypt.

Let's do this in Terraform (CDKTF):

```kotlin
// DNS authorization proves to Google that we control internal.your-company.com
val auth = CertificateManagerDnsAuthorization.Builder.create(scope, "internal-auth")
    .name("your-company-internal-auth")
    .domain("internal.your-company.com")
    .build()

// Google-managed wildcard certificate, usable by load balancers in all regions
val certificate = CertificateManagerCertificate.Builder.create(scope, "internal-cert")
    .name("your-company-internal-certificate")
    .scope("ALL_REGIONS")
    .managed(
        CertificateManagerCertificateManaged.builder()
            .domains(listOf("*.internal.your-company.com"))
            .dnsAuthorizations(listOf(auth.id))
            .build()
    )
    .build()
```

After that, add the CNAME record generated by the DNS authorization (Terraform exports it as the `dns_resource_record` attribute of the authorization) to the DNS zone of your domain. The certificate status should then change from "pending" to "active" within about 10-15 minutes.

Networks and Static Internal IP Address

When you create a new GCP project, it usually comes with a VPC network named "default" that has a subnet in each region.

Since they were already created outside of Terraform, we need to use "data sources" instead of "resources".

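```kotlin
// Data sources reference resources that already exist outside of Terraform
val network: DataGoogleComputeNetwork = DataGoogleComputeNetwork.Builder.create(scope, "default-network")
    .name("default")
    .build()

val subnetwork: DataGoogleComputeSubnetwork =
    DataGoogleComputeSubnetwork.Builder.create(scope, "us-central1-subnetwork")
        // use the name of your subnet; in an auto-mode "default" network it is also called "default"
        .name("us-central-subnetwork")
        .region("us-central1")
        .build()
```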

Next, we need a static internal IP address for the load balancer. A cross-region internal load balancer can be reached from different regions through a single IP address, and there is also the option to create a separate IP address in each region.

We will use a single address in the `us-central1` region, reachable from all subnetworks.

```kotlin
val ipAddress = ComputeAddress.Builder.create(scope, "internal-lb-ip-address")
    .name("l7-internal-lb-ip-address")
    .subnetwork(subnetwork.id)
    .address("10.128.0.23") // must be a free address within the subnetwork's IP range
    .addressType("INTERNAL")
    .region("us-central1")
    .purpose("SHARED_LOADBALANCER_VIP") // allows several forwarding rules to share this address
    .build()
```
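The static address has to be a free address inside the subnetwork's primary range, and a quick check before `cdktf deploy` can save a failed apply. The stdlib-only sketch below assumes IPv4, assumes the subnet range is `10.128.0.0/20` (the default range of the `us-central1` subnet in an auto-mode `default` network; look up your actual range in the console), and relies on Google Cloud reserving the first two and the last two addresses of every subnet's primary range. The function names are hypothetical helpers, not part of CDKTF.

```kotlin
// Converts a dotted-quad IPv4 address such as "10.128.0.23" to its 32-bit value (kept in a Long).
fun ipv4ToLong(ip: String): Long {
    val octets = ip.split(".").map { it.toInt() }
    require(octets.size == 4 && octets.all { it in 0..255 }) { "Invalid IPv4 address: $ip" }
    return octets.fold(0L) { acc, octet -> (acc shl 8) or octet.toLong() }
}

// Returns the first..last addresses of a CIDR range such as "10.128.0.0/20".
fun cidrRange(cidr: String): LongRange {
    val (base, prefixText) = cidr.split("/")
    val prefix = prefixText.toInt()
    require(prefix in 0..32) { "Invalid prefix length in $cidr" }
    val size = 1L shl (32 - prefix)
    val start = ipv4ToLong(base) / size * size // align to the network boundary
    return start until start + size
}

// True if the address lies in the range and is not one of the four addresses
// Google Cloud reserves (the first two and the last two of the primary range).
fun isAssignableInSubnet(ip: String, subnetCidr: String): Boolean {
    val range = cidrRange(subnetCidr)
    return ipv4ToLong(ip) in (range.first + 2)..(range.last - 2)
}
```

For example, `isAssignableInSubnet("10.128.0.23", "10.128.0.0/20")` is `true`, while `10.128.0.1` (the default gateway) is rejected.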

We also need a proxy-only subnet, in which GCP runs the Envoy-based proxies of the load balancer:

```kotlin
val proxyNetwork = ComputeSubnetwork.Builder.create(scope, "internal-proxy-global-subnetwork")
    .name("internal-proxy-global-subnetwork")
    .ipCidrRange("10.0.4.0/24") // pick a range that does not overlap any existing subnet
    .purpose("GLOBAL_MANAGED_PROXY") // proxy-only subnet for cross-region Envoy proxies
    .role("ACTIVE")
    .region("us-central1") // create such a subnetwork in every region you want to use
    .network(network.id)
    .build()
```
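Since a proxy-only subnet is needed in every region you use, and none of them may overlap an existing range, it can help to compute the ranges instead of typing them by hand. The sketch below is a hypothetical helper, not part of CDKTF. It assumes that ranges of the form `10.0.x.0/24`, starting at `10.0.4.0/24` as in the example above, are acceptable in your VPC, and that you pass in every range already used in the network.

```kotlin
// Converts "10.0.4.0/24" into the inclusive range of 32-bit addresses it covers.
fun cidrToRange(cidr: String): LongRange {
    val (ip, prefix) = cidr.split("/")
    val base = ip.split(".").fold(0L) { acc, part -> (acc shl 8) or part.toLong() }
    val size = 1L shl (32 - prefix.toInt())
    val start = base / size * size // align to the network boundary
    return start until start + size
}

// Two CIDR ranges overlap if each one starts before the other one ends.
fun overlaps(a: String, b: String): Boolean {
    val x = cidrToRange(a)
    val y = cidrToRange(b)
    return x.first <= y.last && y.first <= x.last
}

// Assigns each region the next 10.0.x.0/24 range that overlaps none of the existing ranges.
fun allocateProxySubnets(
    regions: List<String>,
    existingRanges: List<String>,
    firstThirdOctet: Int = 4,
): Map<String, String> {
    val result = linkedMapOf<String, String>()
    var octet = firstThirdOctet
    for (region in regions) {
        while (true) {
            require(octet <= 255) { "No free 10.0.x.0/24 range left for $region" }
            val candidate = "10.0.$octet.0/24"
            octet++ // never hand out the same candidate twice
            if (existingRanges.none { overlaps(it, candidate) }) {
                result[region] = candidate
                break
            }
        }
    }
    return result
}
```

Each entry of the returned map can then drive one proxy-only subnetwork resource like the one above, with `.region(region)` and `.ipCidrRange(range)`.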

Configuration of backends for the load balancer

Since we are building the infrastructure with CDKTF, we can describe each backend with the following entity:

```kotlin
data class ServiceBackend(
    /** A human-readable name of the resource, also used as the path matcher name */
    val name: String,
    /** Terraform resource ID of the backend service */
    val resourceId: String,
    /** Hostnames routed to this backend */
    val hostnames: List<String>,
)
```

This lets us add as many backends as we need and manage routing through each backend's list of hostnames.

This way, we can add aliases for a backend or build hierarchical load balancers (although the latter is rarely needed).
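Because backend names become path matcher names and hostnames become host rules, it is worth checking the list before synthesis. This is a minimal sketch based on my understanding of the URL map constraints, not an official validator: it assumes that path matcher names must be unique, that a host should belong to only one host rule, and that every host rule needs at least one host. `validateBackends` is a hypothetical helper, and the data class repeats the one above so the snippet compiles on its own.

```kotlin
// Same shape as the ServiceBackend class above, repeated so this snippet compiles on its own.
data class ServiceBackend(val name: String, val resourceId: String, val hostnames: List<String>)

// Returns a list of problems; an empty list means the backends map cleanly onto
// host rules and path matchers (under the assumptions stated above).
fun validateBackends(backends: List<ServiceBackend>): List<String> {
    val problems = mutableListOf<String>()
    if (backends.isEmpty()) {
        problems += "At least one backend is needed: the first one becomes the default service"
    }
    // Backend names are reused as path matcher names, so they must be unique.
    backends.groupBy { it.name }
        .filterValues { it.size > 1 }
        .keys
        .forEach { problems += "Duplicate backend name: $it" }
    // A hostname claimed by two backends would appear in two host rules.
    backends.flatMap { backend -> backend.hostnames.map { it.lowercase() to backend.name } }
        .groupBy({ it.first }, { it.second })
        .filterValues { it.size > 1 }
        .forEach { (host, owners) -> problems += "Host $host is used by ${owners.joinToString()}" }
    // Every host rule needs at least one host.
    backends.filter { it.hostnames.isEmpty() }
        .forEach { problems += "Backend ${it.name} has no hostnames" }
    return problems
}
```

Calling `require(validateBackends(backends).isEmpty())` before building the URL map turns such mistakes into an error at synthesis time rather than during `cdktf deploy`.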

Let's implement routing rules:

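```kotlin
val backends: List<ServiceBackend> = listOf(...)

val httpsUrlMap = ComputeUrlMap.Builder.create(scope, "internal-url-map")
    .name("l7-internal-url-map")
    // One host rule per backend: its hostnames point to a path matcher named after the backend
    .hostRule(
        backends.map {
            ComputeUrlMapHostRule.builder()
                .hosts(it.hostnames)
                .pathMatcher(it.name)
                .build()
        }
    )
    // Each path matcher sends every path to its backend service
    .pathMatcher(
        backends.map {
            ComputeUrlMapPathMatcher.builder()
                .name(it.name)
                .defaultService(it.resourceId)
                .build()
        }
    )
    // Requests whose Host header matches no host rule go to the first backend
    .defaultService(backends.first().resourceId)
    .build()
```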

Here, the first backend in the list becomes the default service (defaultService), which receives every request whose `Host` header matches none of the host rules. Unfortunately, GCP requires a default service here. You can create your own backend that returns 404 as a fallback and deploy it on Cloud Run or a VM instance.
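To see why the order of the list matters, here is a plain-Kotlin model of the routing decision made by the URL map above. It is a simplification written for this article, not how GCP evaluates URL maps: it assumes exact host matching only and ignores path rules, wildcard hosts and ports. `UrlMapModel` is a hypothetical name, and the data class repeats the one above so the snippet compiles on its own.

```kotlin
// Same shape as the ServiceBackend class above, repeated so this snippet compiles on its own.
data class ServiceBackend(val name: String, val resourceId: String, val hostnames: List<String>)

// Simplified model of the URL map: host rules + path matchers + default service.
class UrlMapModel(backends: List<ServiceBackend>) {
    // Mirrors the URL map's defaultService: the first backend catches unmatched hosts.
    private val defaultService: String = backends.first().resourceId

    // Mirrors hostRule + pathMatcher: every hostname (alias) of a backend leads to its service.
    private val serviceByHost: Map<String, String> =
        backends.flatMap { backend -> backend.hostnames.map { host -> host to backend.resourceId } }.toMap()

    // Returns the backend service that would receive a request with this Host header.
    fun route(hostHeader: String): String = serviceByHost[hostHeader] ?: defaultService
}
```

If a 404 backend is placed first in the list, a mistyped hostname such as `typo.internal.your-company.com` lands there instead of on a real service, while aliases of a backend all reach the same service.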

An example backend is given below. I recommend putting it in a separate file:

```kotlin
class SampleBackend(scope: Construct, id: String) : Construct(scope, id) {
    val serviceBackend: ServiceBackend

    init {
        // Child resources use `this` as their scope, so their IDs are namespaced by this construct
        val healthCheck = ComputeHealthCheck.Builder.create(this, "healthcheck")
            .name("healthcheck")
            .tcpHealthCheck(
                ComputeHealthCheckTcpHealthCheck.builder()
                    .portName("http") // resolved through the instance group's named port below
                    .build()
            )
            .build()

        // An existing VM, referenced as a data source
        val computeInstance = DataGoogleComputeInstance.Builder.create(this, "compute-instance")
            .selfLink("<a link to already created instance>")
            .build()

        val computeInstanceGroup = ComputeInstanceGroup.Builder.create(this, "backend-instance-group")
            .zone("us-central1-a")
            .instances(listOf(computeInstance.selfLink))
            .name("backend-instance-group")
            .namedPort(
                listOf(
                    ComputeInstanceGroupNamedPort.builder()
                        .name("http")
                        .port(80)
                        .build()
                )
            )
            .build()

        val backend = ComputeBackendService.Builder.create(this, "backend")
            .name("backend")
            .protocol("HTTP")
            .loadBalancingScheme("INTERNAL_MANAGED")
            .healthChecks(listOf(healthCheck.id))
            .backend(
                listOf(
                    ComputeBackendServiceBackend.builder()
                        .group(computeInstanceGroup.id)
                        .balancingMode("UTILIZATION")
                        .capacityScaler(1.0)
                        .build()
                )
            )
            .build()

        // Everything the load balancer needs to route traffic to this backend
        serviceBackend = ServiceBackend(
            name = backend.name,
            resourceId = backend.id,
            hostnames = listOf("sample-vm-backend.internal.your-company.com")
        )
    }
}
```

Notice that we've created an instance of ServiceBackend which we can use later to register the backend in the load balancer.

Forwarding rule and DNS configuration

Let's create a target HTTPS proxy, attach the certificate to it, and point it at the URL map with our routing rules:

```kotlin
val httpsProxy = ComputeTargetHttpsProxy.Builder.create(scope, "internal-https-proxy")
    .name("internal-target-https-proxy")
    .urlMap(httpsUrlMap.id) // routing rules
    .certificateManagerCertificates(listOf(certificate.id)) // the wildcard certificate
    .build()
```

Connect the static IP address to the proxy with a forwarding rule on port 443:

```kotlin
ComputeGlobalForwardingRule.Builder.create(scope, "internal-forwarding-rule")
    .name("l7-internal-forwarding-rule-443")
    .dependsOn(listOf(proxyNetwork)) // the proxy-only subnet must exist first
    .ipAddress(ipAddress.address)
    .ipProtocol("TCP")
    .loadBalancingScheme("INTERNAL_MANAGED")
    .portRange("443")
    .network(network.id)
    .target(httpsProxy.id)
    .subnetwork(subnetwork.id)
    .build()
```

At this stage, the load balancer is created and reachable at the static IP address from inside your VPC network.

Now create a wildcard A record in Cloud DNS that points to the static address:

```kotlin
// A data source is used because this zone was created outside of Terraform
val managedDnsZone = DataGoogleDnsManagedZone.Builder.create(scope, "internal-dns-zone")
    .name("internal")
    .build()

// Wildcard A record pointing every name in the zone to the load balancer
DnsRecordSet.Builder.create(scope, "internal-dns")
    .name("*.${managedDnsZone.dnsName}") // dnsName already ends with a trailing dot
    .type("A")
    .rrdatas(listOf(ipAddress.address))
    .ttl(300)
    .managedZone(managedDnsZone.name)
    .build()
```

Now, from any VM in the default VPC, names under internal.your-company.com resolve to the static IP address of the load balancer:

```
$ dig foobar.internal.your-company.com
...
;; ANSWER SECTION:
foobar.internal.your-company.com. 300 IN A 10.128.0.23
```
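Keep in mind that the DNS wildcard is broader than the certificate wildcard. Under standard DNS wildcard semantics (RFC 4592), `*.internal.your-company.com.` also answers for deeper names such as `a.b.internal.your-company.com`, so they resolve to the load balancer, while the `*.internal.your-company.com` certificate covers only a single label and TLS clients will reject such requests. The sketch below simplifies RFC 4592 by assuming the zone has no other records under `internal.your-company.com` (existing names would change the answer); `dnsWildcardMatches` is a hypothetical helper.

```kotlin
// Simplified RFC 4592 check: assumes no other records exist under the wildcard's parent name,
// so the wildcard answers for any name with at least one extra label in front of the parent.
fun dnsWildcardMatches(wildcardRecord: String, queryName: String): Boolean {
    val record = wildcardRecord.lowercase().trimEnd('.')
    require(record.startsWith("*.")) { "Not a wildcard record: $wildcardRecord" }
    val parent = record.removePrefix("*.")
    return queryName.lowercase().trimEnd('.').endsWith(".$parent")
}
```

In practice, this means hostnames for backends should stay one label deep (`service1.internal.your-company.com`), or they need their own certificate.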

Resource Organization

With Terraform CDK, resources can be grouped into a Construct. This way, we can encapsulate all the resources created above in a single reusable class. For example:

```kotlin
class InternalLb(
    scope: Construct,
    name: String,
    backends: List<ServiceBackend>,
) : Construct(scope, name) {
    init {
        // all resources that we've defined before, created with `this` as their scope
    }
}
```

Then we can use the load balancer as follows:

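```kotlin
import com.hashicorp.cdktf.App
import com.hashicorp.cdktf.TerraformStack
import com.hashicorp.cdktf.providers.google.provider.GoogleProvider
import software.constructs.Construct

class MainStack(scope: Construct, id: String) : TerraformStack(scope, id) {
    init {
        GoogleProvider.Builder.create(this, "gcp")
            .project("<your-project>")
            .build()

        // SampleBackend is defined above
        val serviceBackend = SampleBackend(...).serviceBackend

        InternalLb(
            scope = this,
            name = "internal-lb",
            backends = listOf(serviceBackend),
        )
    }
}

// Entry point: synthesizes the stack into Terraform configuration for the cdktf CLI
fun main() {
    val app = App()
    MainStack(app, "main")
    app.synth()
}
```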

The stack can be deployed as follows:

```shell
$ cdktf deploy
```

Conclusion

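In this article, we covered the key steps of creating a cross-region internal Application Load Balancer: a Google-managed certificate, a static internal IP address, a proxy-only subnet, a URL map with host-based routing, a forwarding rule, and a wildcard DNS record.

With this approach, registering a new backend and its routing rules only requires adding another ServiceBackend to the list.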
