Unlocking the Potential of Chain-of-Thought Prompting in Large-Scale Language Models

NovitaAI

Explore how Chain-of-Thought (CoT) prompting enhances Large Language Models (LLMs) across domains ranging from arithmetic reasoning to commonsense and symbolic reasoning.

Introduction

Large Language Models (LLMs) have transformed artificial intelligence, offering strong capabilities in both understanding and generating natural language. Their ability to carry out complex, multi-step reasoning, however, has been the subject of extensive research. One promising approach in this area is Chain-of-Thought (CoT) prompting. This post examines how CoT prompting works and what it means for the future of LLMs.

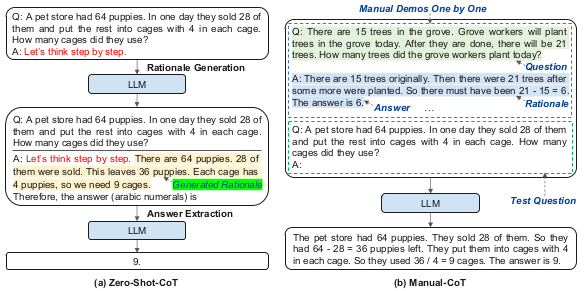

CoT prompting, introduced by Wei et al. (2022) in “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models,” is a strategy that prompts LLMs to spell out their reasoning. The model is given a few exemplars (a few-shot setup) in which the reasoning steps leading to each answer are written out explicitly, and it is expected to follow a similar reasoning path when answering the new prompt. This method has been shown to noticeably improve performance on tasks that require complex reasoning.

A primary advantage of CoT prompting is that it improves LLM performance on tasks involving arithmetic, commonsense, and symbolic reasoning. Research has reported substantial gains, particularly for models with roughly 100 billion parameters or more. Smaller models, by contrast, tend to produce illogical chains of thought, which can leave them less accurate than with standard prompting.

Understanding Chain-of-Thought Prompting

In essence, CoT prompting directs the LLM to think step by step. The model is shown a few-shot exemplar that spells out the reasoning process, and it is then asked to follow a comparable chain of thought when answering the prompt. This is particularly effective for complex tasks that require a sequence of reasoning steps before an answer can be given.

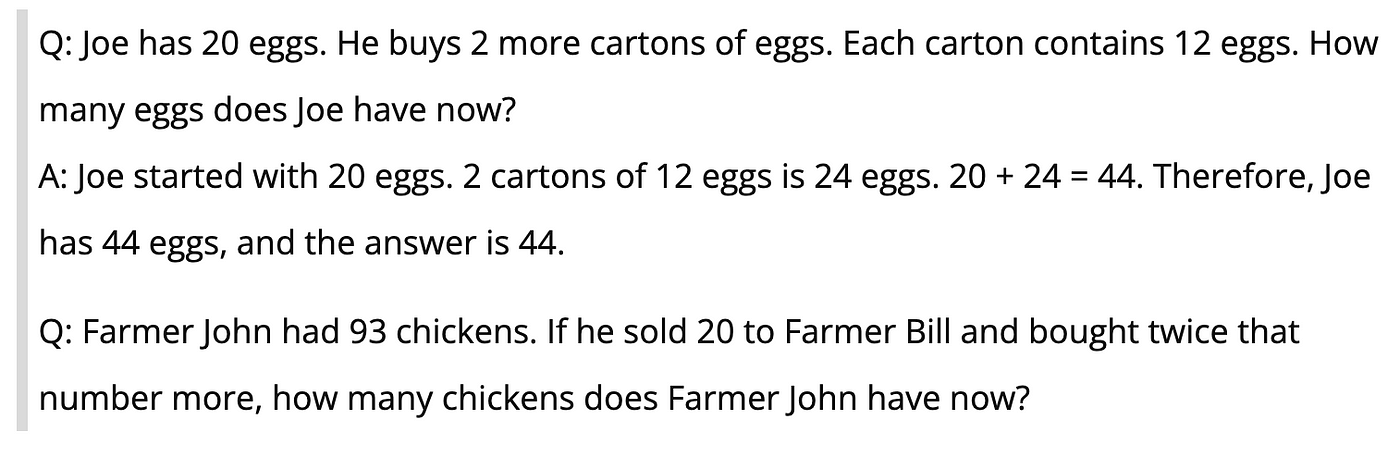
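The few-shot setup described above can be sketched in a few lines of Python. This is a minimal illustration, not a production prompt template: `Exemplar` and `build_few_shot_cot_prompt` are hypothetical names, the tennis-ball exemplar is a standard arithmetic example with its rationale written out step by step, and the resulting string would be sent to whichever LLM API you use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exemplar:
    """One worked example: a question, its step-by-step rationale, and the final answer."""
    question: str
    rationale: str
    answer: str


def build_few_shot_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Place worked exemplars (with explicit reasoning) ahead of the new question."""
    blocks = [
        f"Q: {ex.question}\nA: {ex.rationale} The answer is {ex.answer}."
        for ex in exemplars
    ]
    # The new question ends with an open "A:" so the model continues with its own chain.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


tennis = Exemplar(
    question=(
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?"
    ),
    rationale=(
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
        "5 + 6 = 11."
    ),
    answer="11",
)

prompt = build_few_shot_cot_prompt(
    [tennis],
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
    "how many apples do they have?",
)
print(prompt)
```

The key design choice is that each exemplar answer contains the reasoning, not just the final number, so the model is nudged to produce a reasoning chain of its own before answering.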

Here’s an example of a CoT prompt utilizing a few-shot strategy:

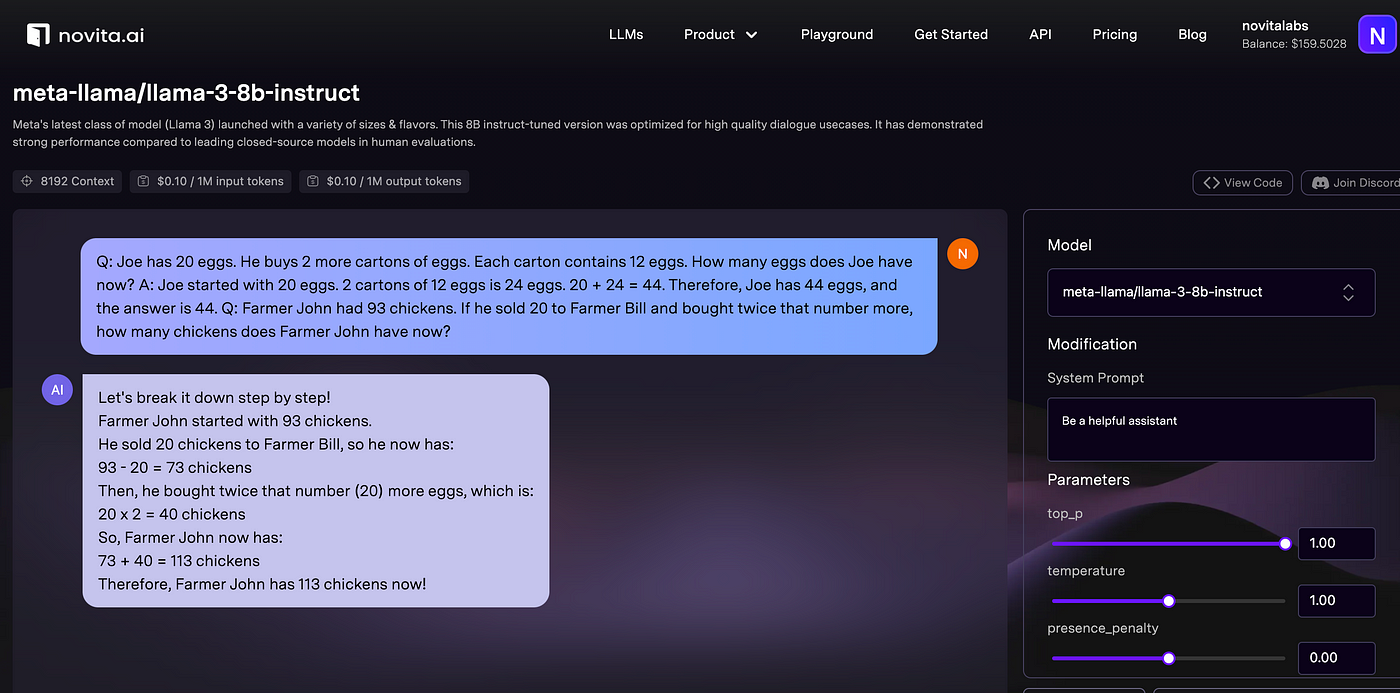

Here is how the Large Language Model (provided by novita.ai) answers:

CoT prompting also works in a zero-shot setting. Instead of supplying worked examples, you append a phrase such as “Let’s think step by step” to the original prompt; the same phrase can also be combined with few-shot prompting. This small addition has proven effective at improving performance on tasks where the prompt contains few or no examples to draw from.

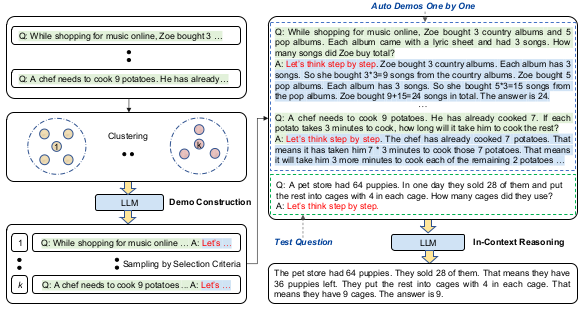
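A minimal sketch of the zero-shot variant, assuming a two-stage setup: the first call appends the trigger phrase to elicit a reasoning chain, and the second call feeds that chain back with a short answer-extraction cue. The trigger below is “Let’s think step by step,” the phrasing used in the zero-shot CoT paper. The `complete` callable is a hypothetical placeholder for a real LLM client, and `fake_llm` returns canned text so the example runs offline.

```python
from typing import Callable, Tuple

# Trigger phrase used in the zero-shot CoT literature.
TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str, complete: Callable[[str], str]) -> Tuple[str, str]:
    """Two-stage zero-shot CoT: elicit a reasoning chain, then ask for the final answer.

    `complete` is a hypothetical stand-in for any function that sends a prompt
    to an LLM and returns its text continuation.
    """
    # Stage 1: no exemplars, only the question followed by the trigger phrase.
    reasoning_prompt = f"Q: {question}\nA: {TRIGGER}"
    reasoning = complete(reasoning_prompt).strip()
    # Stage 2: feed the generated reasoning back and prompt for the answer alone.
    answer_prompt = f"{reasoning_prompt} {reasoning}\nTherefore, the answer is"
    answer = complete(answer_prompt).strip()
    return reasoning, answer


def fake_llm(prompt: str) -> str:
    """Canned responses so the sketch runs without a real model."""
    if prompt.endswith("the answer is"):
        return " 9."
    return " The cafeteria started with 23 apples. 23 - 20 = 3. 3 + 6 = 9."


reasoning, answer = zero_shot_cot(
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
    "how many apples do they have?",
    fake_llm,
)
print(answer)  # prints 9.
```

Splitting reasoning and answer into two calls keeps the final answer in a short, predictable form that is easy to compare against a reference.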

The Challenge of Manual-CoT

Despite the strong results of Manual-CoT (few-shot CoT with hand-written demonstrations), creating demonstrations by hand is a bottleneck. Different tasks need different demonstrations, and crafting effective ones can take considerable effort. To address this, researchers proposed an automatic CoT prompting approach called Auto-CoT, which uses an LLM prompted with “Let’s think step by step” to generate the reasoning chains for its demonstrations automatically.

The Role of Diversity in Auto-CoT

A key finding of the Auto-CoT study is that diversity matters when demonstrations are constructed automatically. Automatically generated reasoning chains frequently contain errors, and demonstrations drawn from very similar questions tend to repeat the same mistakes. To limit the impact of these errors, Auto-CoT selects questions with diverse attributes and generates a reasoning chain for each to form the demonstrations. By relying on diversity, Auto-CoT aims to improve the quality of automatically constructed demonstrations.

Benefits of Chain-of-Thought Prompting

Using Chain-of-Thought (CoT) prompting with Large Language Models (LLMs) offers several advantages that make interactions more effective and efficient. Here are the key benefits:

Enhanced Precision: CoT prompting guides the model through a sequence of intermediate reasoning steps, increasing the likelihood of accurate and relevant responses. Working through the problem in this structured way results in more precise outputs.

Improved Control: An explicit chain of reasoning gives users a structured way to engage with LLMs and more control over their outputs. Because the intermediate steps are visible, users can check them and steer the conversation in the intended direction, reducing the risk of unintended or irrelevant results.

Consistent Context Retention: Because each step builds on the ones before it within the same context, the model keeps earlier information in view throughout the conversation. This makes interactions more coherent, as later steps stay consistent with what has already been established.

Efficiency: CoT prompting streamlines the interaction by eliciting the full reasoning in a single response, reducing the need for repeated follow-up inputs. Users can reach a specific result more efficiently, particularly when they are targeting a particular outcome from an LLM prompt.

Enhanced Reasoning Capabilities: CoT prompting encourages LLMs to solve problems one step at a time rather than tackling the entire challenge at once. This makes their problem solving more systematic and improves their reasoning on multi-step tasks.

Types of Chain-of-Thought Prompting

Within Chain-of-Thought (CoT) prompting, two variants have emerged as effective ways to improve interactions with Large Language Models (LLMs). Let’s look at each in turn:

Multimodal CoT

Multimodal CoT prompting extends conventional text-based interactions by incorporating other input modes, such as images, audio, or video.

Users start the chain with a multimodal prompt, giving the LLM a richer context to interpret and respond to. Follow-up prompts can bring in further modalities, deepening the model’s understanding of the user’s input. The added modalities enrich the context and help the model grasp the subtleties of the user’s intent. Multimodal inputs can also elicit more creative responses, broadening the range of varied and contextually relevant content the model can generate.

If you want to learn more about multimodal language models, check out our blog: Large Multimodal Models (LMMs): A Gigantic Leap in AI World

Least-to-Most Prompting

Least-to-Most Prompting starts the chain with the simplest part of a problem and increases complexity in subsequent prompts. A complex task is first broken down into simpler subproblems; the model answers the easiest one first, and each answer is carried forward into the prompt for the next, more demanding subproblem, steering the model toward a more nuanced and precise final output. This incremental approach builds up the model’s understanding gradually and reduces the risk of misinterpretation at the outset of the interaction.

Because the chain advances one step at a time, Least-to-Most Prompting also lets users adjust the complexity of the task based on the model’s earlier responses, making the interaction more tailored and practical.
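The incremental idea can be sketched as two stages, following the decompose-then-solve structure of least-to-most prompting: first ask the model to split the problem into simpler subquestions, then answer them in order, appending each solved subquestion to the context for the next. The prompt wording, the helper names, and the `fake_llm` stand-in are all assumptions for illustration; a real setup would also include few-shot exemplars for each stage.

```python
from typing import Callable, List

Complete = Callable[[str], str]  # hypothetical LLM call: prompt in, text out


def decompose(problem: str, complete: Complete) -> List[str]:
    """Stage 1: ask the model to break the problem into simpler subquestions."""
    prompt = (
        f"{problem}\nTo solve this, list the simpler subquestions to answer first, "
        "one per line, ending with the original question."
    )
    return [line.strip() for line in complete(prompt).splitlines() if line.strip()]


def solve_sequentially(problem: str, subquestions: List[str], complete: Complete) -> List[str]:
    """Stage 2: answer subquestions in order, carrying earlier Q/A pairs forward."""
    context = problem
    answers = []
    for sub in subquestions:
        answer = complete(f"{context}\nQ: {sub}\nA:").strip()
        answers.append(answer)
        # The solved subquestion becomes part of the context for the next one.
        context = f"{context}\nQ: {sub}\nA: {answer}"
    return answers


seen: List[str] = []


def fake_llm(prompt: str) -> str:
    """Canned responses so the sketch runs without a real model; records each prompt."""
    seen.append(prompt)
    if "list the simpler subquestions" in prompt:
        return "How long does each trip take?\nHow many times can she slide before it closes?"
    if prompt.endswith("How long does each trip take?\nA:"):
        return " 4 + 1 = 5 minutes per trip."
    return " 15 / 5 = 3, so she can slide 3 times."


problem = (
    "It takes Amy 4 minutes to climb to the top of a slide and 1 minute to slide down. "
    "The slide closes in 15 minutes. How many times can she slide before it closes?"
)
subquestions = decompose(problem, fake_llm)
answers = solve_sequentially(problem, subquestions, fake_llm)
print(answers[-1])  # prints 15 / 5 = 3, so she can slide 3 times.
```

The final subquestion is the original one, so the last answer is the answer to the whole problem, computed with the earlier results already in context.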

Auto-CoT Implementation

Auto-CoT consists of two main steps:

Question clustering: the questions in a given dataset are partitioned into k clusters, one per demonstration (eight in the setting described here). Each question is encoded with Sentence-BERT, and the resulting vectors are grouped with k-means clustering.

Demonstration sampling: a representative question is chosen from each cluster, starting with the questions closest to the cluster center, and its reasoning chain is generated with Zero-Shot-CoT under simple heuristics: questions longer than 60 tokens and reasoning chains with more than five steps are rejected. These heuristics favor simple questions and rationales, making it more likely that the automatically generated demonstrations are correct.

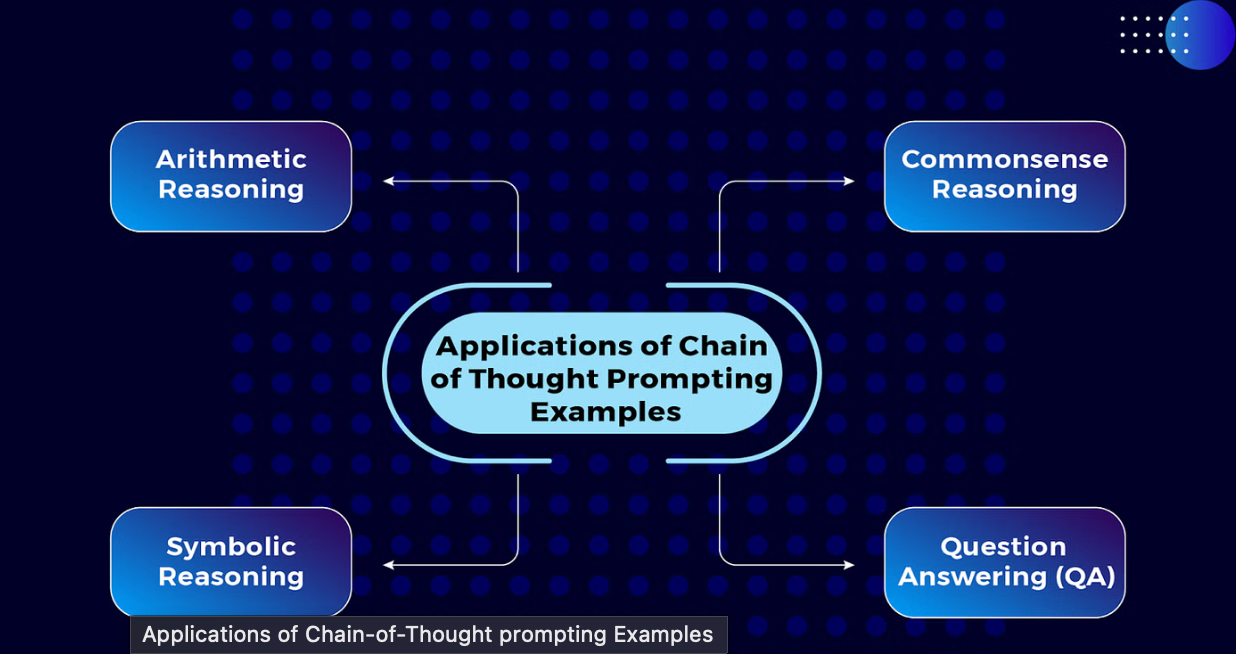
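The two Auto-CoT steps can be sketched in plain Python. This is an illustrative approximation, not the reference implementation: the embeddings are assumed to be precomputed (in Auto-CoT they come from Sentence-BERT; here they are toy 2-D vectors), k-means is seeded deterministically with the first k points, question length is approximated by whitespace-separated words, reasoning steps are counted as non-empty lines, and `generate_rationale` is a hypothetical stand-in for a Zero-Shot-CoT call.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def _dist(a: Vector, b: Vector) -> float:
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def kmeans(vectors: List[Vector], k: int, iters: int = 20) -> Tuple[List[int], List[List[float]]]:
    """Plain k-means; the first k vectors seed the centroids (deterministic, illustration only)."""
    centroids = [list(v) for v in vectors[:k]]
    labels = [0] * len(vectors)
    for _ in range(iters):
        # Assignment step: each vector joins its nearest centroid.
        labels = [min(range(k), key=lambda c: _dist(v, centroids[c])) for v in vectors]
        # Update step: move each non-empty cluster's centroid to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


def build_auto_cot_demos(
    questions: List[str],
    embeddings: List[Vector],
    k: int,
    generate_rationale: Callable[[str], str],
    max_question_tokens: int = 60,
    max_steps: int = 5,
) -> List[Tuple[str, str]]:
    """Pick one demonstration per cluster: nearest to the centroid that passes the heuristics."""
    labels, centroids = kmeans(embeddings, k)
    demos = []
    for c in range(k):
        members = [i for i, lab in enumerate(labels) if lab == c]
        members.sort(key=lambda i: _dist(embeddings[i], centroids[c]))
        for i in members:
            # Whitespace-separated words approximate model tokens (an assumption).
            if len(questions[i].split()) > max_question_tokens:
                continue
            rationale = generate_rationale(questions[i])
            # One reasoning step per non-empty line (an assumption).
            steps = [s for s in rationale.splitlines() if s.strip()]
            if len(steps) <= max_steps:
                demos.append((questions[i], rationale))
                break
    return demos


questions = [
    "Sum these numbers: " + "1 " * 60,  # too long: fails the 60-token heuristic
    "How many legs do 3 spiders have?",
    "What is 2 + 2?",
    "How many legs do 4 cats have?",
]
# Toy 2-D "embeddings": arithmetic questions near (0, 0), leg-counting near (10, 10).
embeddings = [[0.0, 0.0], [10.0, 10.0], [0.0, 1.0], [10.0, 11.0]]
demos = build_auto_cot_demos(
    questions,
    embeddings,
    k=2,
    # Placeholder rationale standing in for a Zero-Shot-CoT call.
    generate_rationale=lambda q: "Step one.\nStep two.\nThe answer is 4.",
)
print([q for q, _ in demos])  # ['What is 2 + 2?', 'How many legs do 3 spiders have?']
```

Within each cluster the candidate closest to the centroid is tried first, and the next one is used only if the heuristics reject it; taking one demonstration per cluster is what gives the final set its diversity.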

Applications of Chain-of-Thought Prompting

Chain-of-Thought (CoT) Prompting finds application across diverse domains, underscoring its versatility in augmenting the capabilities of Large Language Models (LLMs). Here are several noteworthy applications alongside examples:

Arithmetic Reasoning

Math word problems are notoriously difficult for language models. Applied to a 540-billion-parameter language model (PaLM), CoT prompting achieves results comparable to or better than prior state-of-the-art approaches on benchmarks such as MultiArith and GSM8K. With CoT, the model handles arithmetic reasoning tasks effectively, and the gains are largest for the biggest models. This application underscores CoT’s capacity to improve mathematical problem solving.
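Benchmarks such as GSM8K and MultiArith are scored on the final number, but a CoT completion contains many intermediate numbers, so the final answer has to be pulled out before grading. A minimal sketch, assuming the model follows the “The answer is N” convention from its exemplars; the regular expression and the helper names are illustrative and are not the official scoring scripts of either benchmark.

```python
import re
from typing import List, Optional

# Captures the number after "answer is", e.g. "The answer is 11." or "the answer is -3.5".
_ANSWER_RE = re.compile(r"answer is\s*\$?(-?[\d,]*\.?\d+)", re.IGNORECASE)


def extract_numeric_answer(completion: str) -> Optional[float]:
    """Return the number after the last 'the answer is' in a CoT completion, if any."""
    matches = _ANSWER_RE.findall(completion)
    if not matches:
        return None
    # Drop thousands separators such as "1,200" before converting.
    return float(matches[-1].replace(",", ""))


def accuracy(completions: List[str], gold: List[float]) -> float:
    """Fraction of completions whose extracted answer equals the gold answer."""
    correct = sum(extract_numeric_answer(c) == g for c, g in zip(completions, gold))
    return correct / len(gold)


chain = (
    "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11."
)
print(extract_numeric_answer(chain))  # prints 11.0
print(accuracy([chain, "5 + 5 = 10. The answer is 10."], [11.0, 12.0]))  # prints 0.5
```

Only the number after the last “answer is” counts, so intermediate values such as 5, 3, and 6 in the chain do not affect the score.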

Commonsense Reasoning

This application shows how CoT improves language models’ commonsense reasoning. Commonsense reasoning tasks, which require understanding physical and human interactions based on general background knowledge, can be challenging for natural language understanding systems. CoT prompting proves effective on tasks such as CommonsenseQA, StrategyQA, date understanding, and sports understanding. While performance generally scales with model size, CoT adds further gains, most notably on sports understanding.

Symbolic Reasoning

Symbolic reasoning tasks often trip up language models, particularly with standard prompting. CoT prompting, however, enables LLMs to solve tasks such as last letter concatenation and coin flips at high rates. It also supports length generalization: at inference time, models can handle inputs longer than those in the few-shot exemplars, such as more words to concatenate or more coin flips. This application highlights CoT’s potential for improving a model’s ability to carry out symbolic reasoning.
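Both symbolic tasks have answers that can be computed exactly, so exemplars and gold answers can be generated programmatically. A small sketch (the function names and the rationale wording are illustrative assumptions) that writes step-by-step rationales for last letter concatenation and coin flips; building exemplars with two names and testing on four mirrors the length-generalization setup described above.

```python
from typing import List


def last_letter_rationale(name: str) -> str:
    """Step-by-step rationale and answer for last letter concatenation."""
    words = name.split()
    steps = [f'The last letter of "{w}" is "{w[-1]}".' for w in words]
    answer = "".join(w[-1] for w in words)
    steps.append(f'Concatenating them is "{answer}". The answer is {answer}.')
    return " ".join(steps)


def coin_flip_rationale(people: List[str], flips: List[bool]) -> str:
    """Coin-flip rationale: the coin starts heads up; flips[i] says whether people[i] flips it."""
    flippers = [p for p, flipped in zip(people, flips) if flipped]
    n = len(flippers)
    even = n % 2 == 0  # an even number of flips leaves the coin heads up
    who = ", ".join(flippers) if flippers else "nobody"
    return (
        f"The coin was flipped by {who}. So the coin was flipped {n} "
        f"time{'' if n == 1 else 's'}, which is an {'even' if even else 'odd'} number. "
        f"The coin started heads up, so it is now {'heads' if even else 'tails'} up. "
        f"So the answer is {'yes' if even else 'no'}."
    )


# Exemplar-length input (two words) and a longer test input (four words).
print(last_letter_rationale("Elon Musk"))
print(last_letter_rationale("Larry Page Sergey Brin"))
print(coin_flip_rationale(["Ka", "Sherrie"], [True, False]))
```

Because the gold answers come from the same functions, model outputs on longer inputs can be checked automatically.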

Question Answering (QA)

CoT prompting improves question answering (QA) by breaking complex questions down into logical steps. This helps the model understand the structure of the question and how its parts relate to one another. CoT encourages multi-hop reasoning, in which the model gathers and combines pieces of information step by step, for example from different passages or facts. This iterative process can lead to better inferences and more accurate answers. Because the reasoning steps are written out, errors and biases in the reasoning also become easier to spot. CoT’s use in QA underscores its effectiveness at breaking down intricate problems and improving reasoning in language models.

Limitations and Future Directions

Although CoT prompting is promising, it has drawbacks. Most notably, it yields performance gains only with models of roughly 100 billion parameters or more. Smaller models often produce illogical chains of thought, leading to lower accuracy than conventional prompting. More generally, the benefit of CoT prompting tends to grow with model size.

Despite these limitations, CoT prompting is a notable advance in improving the reasoning ability of LLMs. Future research will likely focus on refining the approach and finding ways to make it effective across a wider range of tasks and model sizes.

Conclusion

Chain-of-Thought (CoT) prompting stands as a significant breakthrough in the realm of artificial intelligence, particularly in augmenting the reasoning capabilities of Large Language Models (LLMs). By prompting these models to elucidate their reasoning process, CoT has demonstrated potential in enhancing performance across intricate tasks demanding arithmetic, commonsense, and symbolic reasoning. Despite its limitations, CoT heralds promising prospects for the future evolution of LLMs.

As we push the boundaries of LLM capabilities, techniques like CoT prompting will prove indispensable. By fostering a step-by-step approach and encouraging models to explain their reasoning, we not only improve performance on complex tasks but also gain insight into how models arrive at their answers. While the road to LLMs that reason reliably remains long, methods like CoT prompting are a clear step in the right direction.

Originally published at novita.ai

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. With affordable pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
