Trying out Prometheus Operator's Alertmanager and Alertmanager Config Custom Resources

Karuppiah Natarajan

In this post, we are going to look at how to use the Alertmanager and AlertmanagerConfig custom resources to create, manage, and configure Alertmanager

In the https://karuppiah.dev/trying-out-prometheus-operator blog post, I showed how to get started with the Prometheus Operator. Follow that post first if you haven't. This post is a follow-up to it

Now, with kube-prometheus installed (from the previous blog post), you will have Alertmanager and AlertmanagerConfig CRDs installed

$ kubectl get crds | rg alertmanager

alertmanagerconfigs.monitoring.coreos.com 2024-05-02T11:16:16Z

alertmanagers.monitoring.coreos.com 2024-05-02T11:16:17Z

Note: I use rg (ripgrep) here instead of grep

Let's look at the instances of custom resources for these CRD types

$ kubectl get alertmanagers

No resources found in default namespace.

$ kubectl get alertmanagers --all-namespaces

NAMESPACE    NAME   VERSION   REPLICAS   READY   RECONCILED   AVAILABLE   AGE
monitoring   main   0.27.0    3          3       True         True        74m

$ kubectl get alertmanagerconfig

No resources found in default namespace.

There's just one ☝️1️⃣ Alertmanager custom resource defined (main, in the monitoring namespace), and it is what runs Alertmanager with 3 replicas

Let's define some sample configurations for Alertmanager using AlertmanagerConfig

Let's start with some dummy configuration just to test things out. Let's see if we can define two AlertmanagerConfig custom resources and check whether they get merged into the configuration of Alertmanager

# alertmanager-config.yaml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: config-example
  labels:
    alertmanagerConfig: example
spec:
  route:
    groupBy: ['job']
    groupWait: 30s
    groupInterval: 5m
    repeatInterval: 12h
    receiver: 'webhook'
  receivers:
  - name: 'webhook'
    webhookConfigs:
    - url: 'http://example.com/'

# alertmanager-second-config.yaml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: config-example-2
  labels:
    alertmanagerConfig: example
spec:
  route:
    groupBy: ['job']
    groupWait: 30s
    groupInterval: 5m
    repeatInterval: 12h
    receiver: 'webhook'
  receivers:
  - name: 'webhook'
    webhookConfigs:
    - url: 'http://example.com/'

I'm going to apply these later

I learned the spec of AlertmanagerConfig custom resource using kubectl explain -

$ kubectl explain alertmanagerconfig

GROUP: monitoring.coreos.com

KIND: AlertmanagerConfig

VERSION: v1alpha1

DESCRIPTION:

AlertmanagerConfig configures the Prometheus Alertmanager,

specifying how alerts should be grouped, inhibited and notified to external

systems.

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an object.

Servers should convert recognized schemas to the latest internal value, and

may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

metadata <ObjectMeta>

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object> -required-

AlertmanagerConfigSpec is a specification of the desired behavior of the

Alertmanager configuration.

By definition, the Alertmanager configuration only applies to alerts for

which

the `namespace` label is equal to the namespace of the AlertmanagerConfig

resource.

$ kubectl explain alertmanagerconfig.spec

GROUP: monitoring.coreos.com

KIND: AlertmanagerConfig

VERSION: v1alpha1

FIELD: spec <Object>

DESCRIPTION:

AlertmanagerConfigSpec is a specification of the desired behavior of the

Alertmanager configuration.

By definition, the Alertmanager configuration only applies to alerts for

which

the `namespace` label is equal to the namespace of the AlertmanagerConfig

resource.

FIELDS:

inhibitRules <[]Object>

List of inhibition rules. The rules will only apply to alerts matching

the resource's namespace.

muteTimeIntervals <[]Object>

List of MuteTimeInterval specifying when the routes should be muted.

receivers <[]Object>

List of receivers.

route <Object>

The Alertmanager route definition for alerts matching the resource's

namespace. If present, it will be added to the generated Alertmanager

configuration as a first-level route.

Now let's look at how to configure Alertmanager using the AlertmanagerConfig custom resource

You can read how to configure Alertmanager in general, and how Alertmanager and AlertmanagerConfig are related to each other from the following official docs -

https://prometheus-operator.dev/docs/user-guides/alerting/#managing-alertmanager-configuration

https://prometheus-operator.dev/docs/user-guides/alerting/#using-alertmanagerconfig-resources

Since I'm trying to merge the two AlertmanagerConfig custom resources into one, I'll be following the doc - https://prometheus-operator.dev/docs/user-guides/alerting/#using-alertmanagerconfig-resources

I'll be defining spec.alertmanagerConfigSelector on the Alertmanager. This label selector is how the Prometheus Operator works out which AlertmanagerConfigs belong to which Alertmanagers. Yes, you read that right: since an AlertmanagerConfig is a standalone resource and the Alertmanager only says which AlertmanagerConfigs to select using spec.alertmanagerConfigSelector, multiple Alertmanager custom resources can point to the same set of AlertmanagerConfigs. How cool is that? 😁😄😃😀 This way, you can reuse AlertmanagerConfigs to configure multiple Alertmanagers, in case you want to :)

Again, you can use kubectl explain to look at the spec of Alertmanager custom resource

$ kubectl explain alertmanager

...

$ kubectl explain alertmanager.spec

...

$ kubectl explain alertmanager.spec.alertmanagerConfigSelector

GROUP: monitoring.coreos.com

KIND: Alertmanager

VERSION: v1

FIELD: alertmanagerConfigSelector <Object>

DESCRIPTION:

AlertmanagerConfigs to be selected for to merge and configure Alertmanager

with.

FIELDS:

matchExpressions <[]Object>

matchExpressions is a list of label selector requirements. The requirements

are ANDed.

matchLabels <map[string]string>

matchLabels is a map of {key,value} pairs. A single {key,value} in the

matchLabels

map is equivalent to an element of matchExpressions, whose key field is

"key", the

operator is "In", and the values array contains only "value". The

requirements are ANDed.

Both my AlertmanagerConfigs have the label alertmanagerConfig: example, so I can use the following in Alertmanager's spec

spec:
  alertmanagerConfigSelector:
    matchLabels:
      alertmanagerConfig: example
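To make the selection rule concrete, here is a minimal Python sketch of how a matchLabels selector decides which AlertmanagerConfigs get picked up. It only models the matchLabels part described in the kubectl explain output above (every key/value pair must match, that is, the requirements are ANDed). matchExpressions is left out, and the function name and the unlabelled-config entry are made up for illustration. This is not the operator's code.

```python
def matches_labels(match_labels, resource_labels):
    """Return True when every key/value pair in match_labels is also
    present in resource_labels (the requirements are ANDed)."""
    return all(resource_labels.get(key) == value
               for key, value in match_labels.items())


# Selector from the Alertmanager spec above
selector = {"alertmanagerConfig": "example"}

# Labels of the AlertmanagerConfig resources used in this post
configs = {
    "config-example": {"alertmanagerConfig": "example"},
    "config-example-2": {"alertmanagerConfig": "example"},
    "unlabelled-config": {},  # hypothetical resource without the label
}

selected = [name for name, labels in configs.items()
            if matches_labels(selector, labels)]
print(selected)
```

Only the two labelled resources end up in selected. A resource without the alertmanagerConfig: example label is ignored by this selector.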

Before doing the change, I'm gonna keep a watch on my logs -

You can look at the Prometheus Operator logs and the Alertmanager logs using stern. stern treats the pod name argument as a regular expression, so a name prefix is enough, something like this -

$ stern -n monitoring prometheus-operator

$ stern -n monitoring alertmanager-main

You will see logs after your change.

For the change, this is how I did it -

$ kubectl edit alertmanager -n monitoring main

And this is how I changed the YAML -

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: monitoring.coreos.com/v1
kind: Alertmanager
metadata:
  creationTimestamp: "2024-05-02T11:17:20Z"
  generation: 1
  labels:
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/instance: main
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.27.0
  name: main
  namespace: monitoring
  resourceVersion: "1570"
  uid: 33fb19f8-6512-4206-b9f2-24f646c0ae46
spec:
  alertmanagerConfigSelector:
    matchLabels:
      alertmanagerConfig: example
  image: quay.io/prometheus/alertmanager:v0.27.0
  nodeSelector:
    kubernetes.io/os: linux
  podMetadata:
    labels:
      app.kubernetes.io/component: alert-router
      app.kubernetes.io/instance: main
      app.kubernetes.io/name: alertmanager
      app.kubernetes.io/part-of: kube-prometheus
      app.kubernetes.io/version: 0.27.0
  portName: web
  replicas: 3
  resources:
    limits:
      cpu: 100m
      memory: 100Mi
    requests:
      cpu: 4m
      memory: 100Mi
  retention: 120h
  secrets: []
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: alertmanager-main
  version: 0.27.0
status:
  availableReplicas: 3
  conditions:
  - lastTransitionTime: "2024-05-02T11:19:25Z"
    message: ""
    observedGeneration: 1
    reason: ""
    status: "True"
    type: Available
  - lastTransitionTime: "2024-05-02T11:18:31Z"
    message: ""
    observedGeneration: 1
    reason: ""
    status: "True"
    type: Reconciled
  paused: false
  replicas: 3
  unavailableReplicas: 0
  updatedReplicas: 3

And then saved it

Now I'm going to apply the Alertmanager configurations

$ kubectl apply -n monitoring -f /Users/karuppiah.n/every-day-log/alertmanager-config.yaml

alertmanagerconfig.monitoring.coreos.com/config-example created

$ kubectl apply -n monitoring -f /Users/karuppiah.n/every-day-log/alertmanager-second-config.yaml

alertmanagerconfig.monitoring.coreos.com/config-example-2 created

When the above configurations are applied, you will notice that the config-reloader sidecar container in every Alertmanager instance logs Reload triggered, and Alertmanager's main (server) container logs Loading configuration file followed by Completed loading of configuration file, like this -

alertmanager-main-2 alertmanager ts=2024-05-02T14:58:22.958Z caller=coordinator.go:113 level=info component=configuration msg="Loading configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml

alertmanager-main-2 alertmanager ts=2024-05-02T14:58:22.960Z caller=coordinator.go:126 level=info component=configuration msg="Completed loading of configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml

alertmanager-main-2 config-reloader level=info ts=2024-05-02T14:58:22.964016458Z caller=reloader.go:424 msg="Reload triggered" cfg_in=/etc/alertmanager/config/alertmanager.yaml.gz cfg_out=/etc/alertmanager/config_out/alertmanager.env.yaml watched_dirs=/etc/alertmanager/config

alertmanager-main-1 alertmanager ts=2024-05-02T14:58:27.754Z caller=coordinator.go:113 level=info component=configuration msg="Loading configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml

alertmanager-main-1 alertmanager ts=2024-05-02T14:58:27.755Z caller=coordinator.go:126 level=info component=configuration msg="Completed loading of configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml

alertmanager-main-1 config-reloader level=info ts=2024-05-02T14:58:27.954138209Z caller=reloader.go:424 msg="Reload triggered" cfg_in=/etc/alertmanager/config/alertmanager.yaml.gz cfg_out=/etc/alertmanager/config_out/alertmanager.env.yaml watched_dirs=/etc/alertmanager/config

alertmanager-main-0 alertmanager ts=2024-05-02T14:58:39.058Z caller=coordinator.go:113 level=info component=configuration msg="Loading configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml

alertmanager-main-0 alertmanager ts=2024-05-02T14:58:39.059Z caller=coordinator.go:126 level=info component=configuration msg="Completed loading of configuration file" file=/etc/alertmanager/config_out/alertmanager.env.yaml

alertmanager-main-0 config-reloader level=info ts=2024-05-02T14:58:39.062383067Z caller=reloader.go:424 msg="Reload triggered" cfg_in=/etc/alertmanager/config/alertmanager.yaml.gz cfg_out=/etc/alertmanager/config_out/alertmanager.env.yaml watched_dirs=/etc/alertmanager/config
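If you would rather check this mechanically than by eye, here is a small Python sketch that reads lines in the "pod container message" shape shown above and reports which pods logged Reload triggered from the config-reloader container. The function names are made up for this post, the sample lines are trimmed copies of the logs above, and it assumes the stern output is plain text without colour codes.

```python
import shlex


def parse_stern_line(line):
    """Split a stern line into (pod, container, fields), where fields
    holds the logfmt key=value pairs of the message."""
    pod, container, message = line.split(" ", 2)
    fields = dict(token.split("=", 1)
                  for token in shlex.split(message) if "=" in token)
    return pod, container, fields


def pods_with_reload(lines):
    """Pods whose config-reloader container logged "Reload triggered"."""
    pods = set()
    for line in lines:
        pod, container, fields = parse_stern_line(line)
        if container == "config-reloader" and fields.get("msg") == "Reload triggered":
            pods.add(pod)
    return pods


# Log lines from above, trimmed to a few fields to keep the sample short
sample = [
    'alertmanager-main-2 alertmanager ts=2024-05-02T14:58:22.958Z level=info msg="Loading configuration file"',
    'alertmanager-main-2 config-reloader level=info ts=2024-05-02T14:58:22.964016458Z msg="Reload triggered"',
    'alertmanager-main-1 config-reloader level=info ts=2024-05-02T14:58:27.954138209Z msg="Reload triggered"',
    'alertmanager-main-0 config-reloader level=info ts=2024-05-02T14:58:39.062383067Z msg="Reload triggered"',
]

print(sorted(pods_with_reload(sample)))
```

With 3 replicas, you would expect all three pod names to show up in the result.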

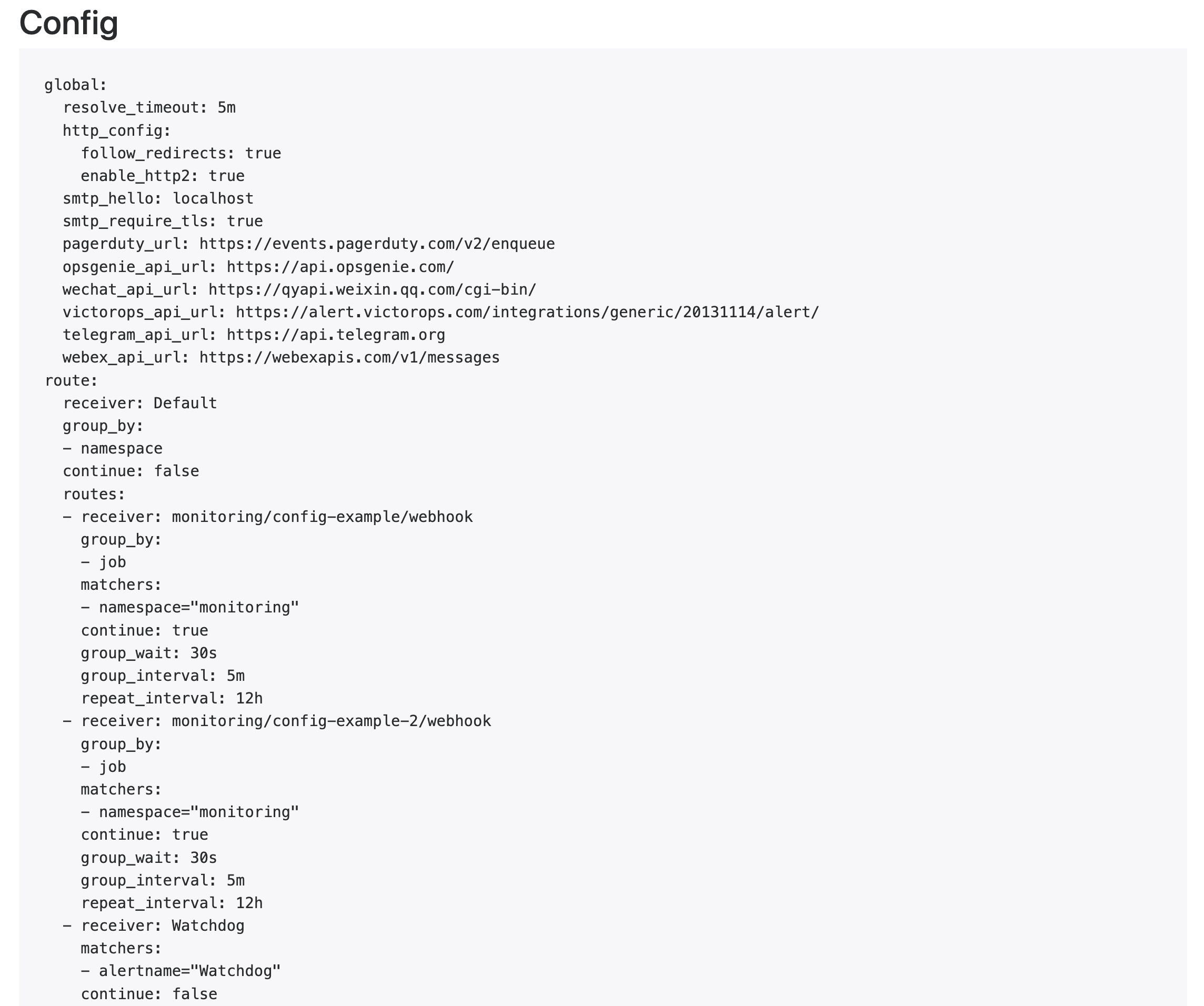

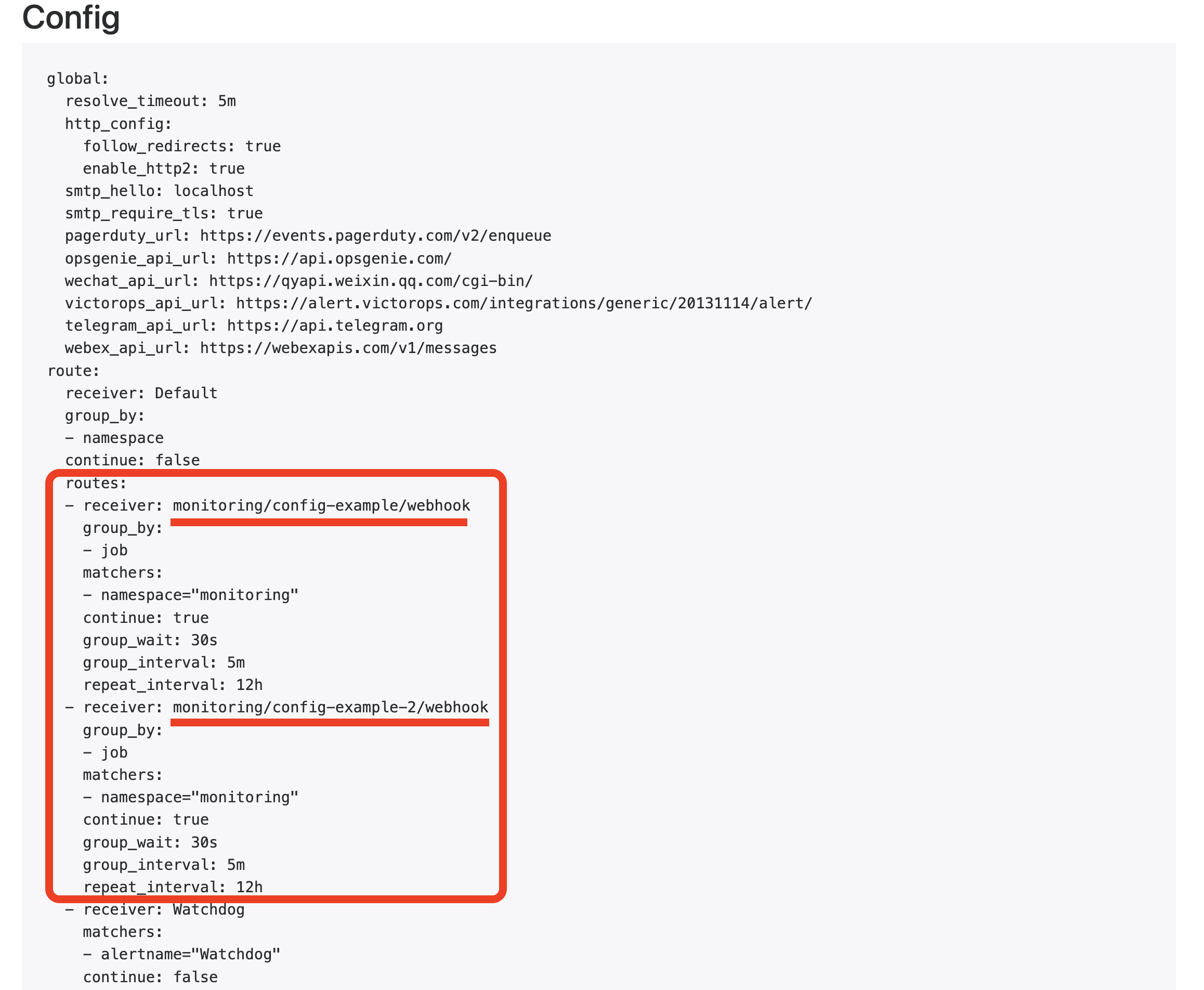

Now, if I port forward Alertmanager's Web UI port and checkout the Alertmanager Configuration in the Web UI, I'll see this -

Notice how it says config-example based on the name of the AlertmanagerConfig custom resource name :)

That is in the routes list, we can see the same in the receivers list -

So, this way, it's clear that one can create multiple AlertmanagerConfigs and they can all be merged to one Alertmanager Configuration

Let's also try a global Alertmanager Configuration using the Alertmanager custom resource's spec.alertmanagerConfiguration field. We put the name of an AlertmanagerConfig custom resource in spec.alertmanagerConfiguration.name, and there are more fields under spec.alertmanagerConfiguration, like global and templates

$ kubectl explain alertmanager.spec.alertmanagerConfiguration

GROUP: monitoring.coreos.com

KIND: Alertmanager

VERSION: v1

FIELD: alertmanagerConfiguration <Object>

DESCRIPTION:

alertmanagerConfiguration specifies the configuration of Alertmanager.

If defined, it takes precedence over the `configSecret` field.

This is an *experimental feature*, it may change in any upcoming release

in a breaking way.

FIELDS:

global <Object>

Defines the global parameters of the Alertmanager configuration.

name <string>

The name of the AlertmanagerConfig resource which is used to generate the

Alertmanager configuration.

It must be defined in the same namespace as the Alertmanager object.

The operator will not enforce a `namespace` label for routes and inhibition

rules.

templates <[]Object>

Custom notification templates.

$ kubectl explain alertmanager.spec.alertmanagerConfiguration.global

GROUP: monitoring.coreos.com

KIND: Alertmanager

VERSION: v1

FIELD: global <Object>

DESCRIPTION:

Defines the global parameters of the Alertmanager configuration.

FIELDS:

httpConfig <Object>

HTTP client configuration.

opsGenieApiKey <Object>

The default OpsGenie API Key.

opsGenieApiUrl <Object>

The default OpsGenie API URL.

pagerdutyUrl <string>

The default Pagerduty URL.

resolveTimeout <string>

ResolveTimeout is the default value used by alertmanager if the alert does

not include EndsAt, after this time passes it can declare the alert as

resolved if it has not been updated.

This has no impact on alerts from Prometheus, as they always include EndsAt.

slackApiUrl <Object>

The default Slack API URL.

smtp <Object>

Configures global SMTP parameters.

$ kubectl explain alertmanager.spec.alertmanagerConfiguration.templates

GROUP: monitoring.coreos.com

KIND: Alertmanager

VERSION: v1

FIELD: templates <[]Object>

DESCRIPTION:

Custom notification templates.

SecretOrConfigMap allows to specify data as a Secret or ConfigMap. Fields

are mutually exclusive.

FIELDS:

configMap <Object>

ConfigMap containing data to use for the targets.

secret <Object>

Secret containing data to use for the targets.

Let's use the global Alertmanager Configuration along with the existing separate Alertmanager Configurations we have, which are scattered across AlertmanagerConfig custom resource instances, and see if we get a completely merged Alertmanager Configuration

I'm going to use the following dummy global Alertmanager Configuration -

# alertmanager-global-config.yaml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: config-example-global
spec:
  route:
    groupBy: ['job']
    groupWait: 25s
    groupInterval: 6m
    repeatInterval: 13h
    receiver: 'secret-webhook'
  receivers:
  - name: 'secret-webhook'
    webhookConfigs:
    - url: 'http://random-example.com/'

Keep your Prometheus Operator's Logs streaming, Alertmanager's Logs (server, config-reloader sidecar) streaming, all using stern or similar tool (kubectl logs etc)

First, apply the AlertmanagerConfig custom resource that the Alertmanager custom resource is going to reference. Remember to create it in the same namespace as the Alertmanager custom resource!

$ kubectl apply -n monitoring -f /Users/karuppiah.n/every-day-log/alertmanager-global-config.yaml

alertmanagerconfig.monitoring.coreos.com/config-example-global created

Finally, mention the name of the AlertmanagerConfig in the Alertmanager custom resource instance under spec.alertmanagerConfiguration.name like this -

$ kubectl edit alertmanager -n monitoring main

My Alertmanager custom resource instance looks like this now after the edit -

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: monitoring.coreos.com/v1
kind: Alertmanager
metadata:
  creationTimestamp: "2024-05-02T11:17:20Z"
  generation: 3
  labels:
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/instance: main
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.27.0
  name: main
  namespace: monitoring
  resourceVersion: "40052"
  uid: 33fb19f8-6512-4206-b9f2-24f646c0ae46
spec:
  alertmanagerConfigSelector:
    matchLabels:
      alertmanagerConfig: example
  alertmanagerConfiguration:
    name: config-example-global
  image: quay.io/prometheus/alertmanager:v0.27.0
  nodeSelector:
    kubernetes.io/os: linux
  podMetadata:
    labels:
      app.kubernetes.io/component: alert-router
      app.kubernetes.io/instance: main
      app.kubernetes.io/name: alertmanager
      app.kubernetes.io/part-of: kube-prometheus
      app.kubernetes.io/version: 0.27.0
  portName: web
  replicas: 3
  resources:
    limits:
      cpu: 100m
      memory: 100Mi
    requests:
      cpu: 4m
      memory: 100Mi
  retention: 120h
  secrets: []
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: alertmanager-main
  version: 0.27.0
status:
  availableReplicas: 3
  conditions:
  - lastTransitionTime: "2024-05-07T14:49:46Z"
    message: ""
    observedGeneration: 3
    reason: ""
    status: "True"
    type: Available
  - lastTransitionTime: "2024-05-07T14:21:05Z"
    message: ""
    observedGeneration: 3
    reason: ""
    status: "True"
    type: Reconciled
  paused: false
  replicas: 3
  unavailableReplicas: 0
  updatedReplicas: 3

Notice the spec.alertmanagerConfiguration.name being config-example-global

You will notice Prometheus Operator logs after this change and also logs in the Alertmanager config-reloader sidecar

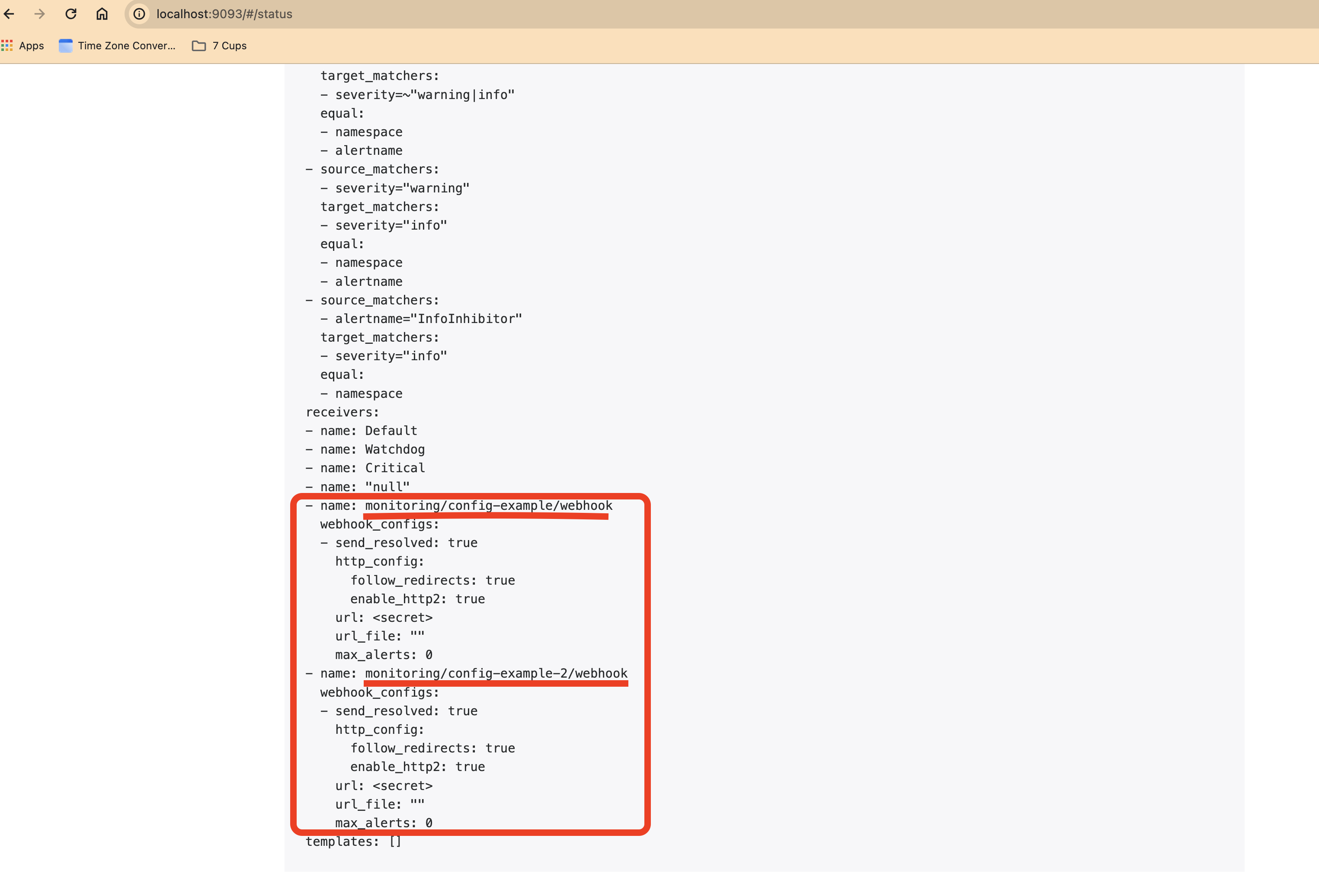

If you port forward to the Alertmanager Kubernetes service, you can see how the Alertmanager Configuration has changed and now shows all the configurations we have -

$ kubectl --namespace monitoring port-forward svc/alertmanager-main 9093

Forwarding from 127.0.0.1:9093 -> 9093

Forwarding from [::1]:9093 -> 9093

Handling connection for 9093

Handling connection for 9093

Handling connection for 9093

Handling connection for 9093

Now the Alertmanager Configuration looks like this -

global:
  resolve_timeout: 5m
  http_config:
    follow_redirects: true
    enable_http2: true
  smtp_hello: localhost
  smtp_require_tls: true
  pagerduty_url: https://events.pagerduty.com/v2/enqueue
  opsgenie_api_url: https://api.opsgenie.com/
  wechat_api_url: https://qyapi.weixin.qq.com/cgi-bin/
  victorops_api_url: https://alert.victorops.com/integrations/generic/20131114/alert/
  telegram_api_url: https://api.telegram.org
  webex_api_url: https://webexapis.com/v1/messages
route:
  receiver: monitoring/config-example-global/secret-webhook
  group_by:
  - job
  continue: false
  routes:
  - receiver: monitoring/config-example/webhook
    group_by:
    - job
    matchers:
    - namespace="monitoring"
    continue: true
    group_wait: 30s
    group_interval: 5m
    repeat_interval: 12h
  - receiver: monitoring/config-example-2/webhook
    group_by:
    - job
    matchers:
    - namespace="monitoring"
    continue: true
    group_wait: 30s
    group_interval: 5m
    repeat_interval: 12h
  group_wait: 25s
  group_interval: 6m
  repeat_interval: 13h
receivers:
- name: monitoring/config-example-global/secret-webhook
  webhook_configs:
  - send_resolved: true
    http_config:
      follow_redirects: true
      enable_http2: true
    url: <secret>
    url_file: ""
    max_alerts: 0
- name: monitoring/config-example/webhook
  webhook_configs:
  - send_resolved: true
    http_config:
      follow_redirects: true
      enable_http2: true
    url: <secret>
    url_file: ""
    max_alerts: 0
- name: monitoring/config-example-2/webhook
  webhook_configs:
  - send_resolved: true
    http_config:
      follow_redirects: true
      enable_http2: true
    url: <secret>
    url_file: ""
    max_alerts: 0
templates: []

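Notice the top-level route: it has the receiver from the global configuration along with its group_by, group_wait (25s), group_interval (6m) and repeat_interval (13h), and it has no namespace matcher. The two AlertmanagerConfigs picked up by the selector show up as child routes under routes, each with a namespace="monitoring" matcher and continue: true. The global configuration's receiver is also defined in the receivers section as monitoring/config-example-global/secret-webhook.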
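The shape of that generated configuration can be mimicked with a short Python sketch. To be clear, this is not how the Prometheus Operator is implemented; it only reproduces what the output above shows: receiver names become namespace/config-name/receiver, the AlertmanagerConfig named in spec.alertmanagerConfiguration becomes the top-level route, and every selected AlertmanagerConfig becomes a child route with a namespace matcher and continue: true. All function names are hypothetical, and only group_wait is carried over to keep the sample short.

```python
def qualified(namespace, config_name, receiver):
    """Receiver names in the generated config look like
    <namespace>/<config-name>/<receiver>."""
    return f"{namespace}/{config_name}/{receiver}"


def merge(global_config, selected_configs):
    """Build a route tree shaped like the generated configuration above."""
    ns, name = global_config["namespace"], global_config["name"]
    root = dict(global_config["route"])
    root["receiver"] = qualified(ns, name, root["receiver"])
    root["routes"] = []
    receivers = [qualified(ns, name, r) for r in global_config["receivers"]]

    for cfg in selected_configs:
        ns, name = cfg["namespace"], cfg["name"]
        child = dict(cfg["route"])
        child["receiver"] = qualified(ns, name, child["receiver"])
        # Selected configs only apply to alerts from their own namespace
        child["matchers"] = [f'namespace="{ns}"']
        child["continue"] = True
        root["routes"].append(child)
        receivers += [qualified(ns, name, r) for r in cfg["receivers"]]

    return {"route": root, "receivers": receivers}


def describe(name, receiver, group_wait):
    """Describe one AlertmanagerConfig from this post as a plain dict."""
    return {"namespace": "monitoring", "name": name,
            "route": {"receiver": receiver, "group_wait": group_wait},
            "receivers": [receiver]}


merged = merge(
    describe("config-example-global", "secret-webhook", "25s"),
    [describe("config-example", "webhook", "30s"),
     describe("config-example-2", "webhook", "30s")],
)
print(merged["route"]["receiver"])
print([child["receiver"] for child in merged["route"]["routes"]])
print(merged["receivers"])
```

The prefixing is why two AlertmanagerConfigs can both call their receiver webhook without clashing in the merged configuration.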

Also, one important thing to note is how the casing of the fields in the Kubernetes custom resource differs from the casing of the fields in the actual Alertmanager Configuration. For example,

group_by in Alertmanager Configuration YAML vs groupBy in AlertmanagerConfig Kubernetes custom resource

group_wait in Alertmanager Configuration YAML vs groupWait in AlertmanagerConfig Kubernetes custom resource

group_interval in Alertmanager Configuration YAML vs groupInterval in AlertmanagerConfig Kubernetes custom resource

repeat_interval in Alertmanager Configuration YAML vs repeatInterval in AlertmanagerConfig Kubernetes custom resource

So, be careful when creating the AlertmanagerConfig Kubernetes custom resource instance YAMLs and any related Kubernetes custom resource instance YAMLs. Look at the kubectl explain <resource-type-name> documentation
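As a rough aid, the snake_case to camelCase mapping can be sketched in a few lines of Python. The helper name is made up, and the rule is only a first guess: as the last line shows, it does not reproduce every field name, so kubectl explain remains the source of truth.

```python
def snake_to_camel(name):
    """Convert an Alertmanager YAML key such as group_by into the
    camelCase form used by the custom resource (groupBy)."""
    first, *rest = name.split("_")
    return first + "".join(word.capitalize() for word in rest)


for key in ["group_by", "group_wait", "group_interval", "repeat_interval",
            "webhook_configs"]:
    print(key, "->", snake_to_camel(key))

# The mechanical rule is not always right: the generated configuration has
# opsgenie_api_url, but kubectl explain lists the field as opsGenieApiUrl.
print(snake_to_camel("opsgenie_api_url"))  # opsgenieApiUrl, not opsGenieApiUrl
```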

Note that kubectl explain <resource-type-name> works only against a live running Kubernetes cluster which has the resource type mentioned in the argument, which could be a built-in resource or a custom resource

To check if a resource type exists in the Kubernetes cluster, just use one of the following -

$ kubectl get <resource-type-name>

$ kubectl api-resources | grep --color --ignore-case <resource-type-name>

$ # if it's a CRD

$ kubectl get crd | grep --color --ignore-case <resource-type-name>

That's all I had for this blog post. You can also provide the Alertmanager Configuration using a Kubernetes Secret. Either follow the naming convention that the Prometheus Operator expects, alertmanager-<name-of-alertmanager-custom-resource-instance> (see https://prometheus-operator.dev/docs/user-guides/alerting/#using-a-kubernetes-secret), or point to a Secret with any name using the spec.configSecret field of the Alertmanager custom resource

$ kubectl explain alertmanager.spec.configSecret

GROUP: monitoring.coreos.com

KIND: Alertmanager

VERSION: v1

FIELD: configSecret <string>

DESCRIPTION:

ConfigSecret is the name of a Kubernetes Secret in the same namespace as the

Alertmanager object, which contains the configuration for this Alertmanager

instance. If empty, it defaults to `alertmanager-<alertmanager-name>`.

The Alertmanager configuration should be available under the

`alertmanager.yaml` key. Additional keys from the original secret are

copied to the generated secret and mounted into the

`/etc/alertmanager/config` directory in the `alertmanager` container.

If either the secret or the `alertmanager.yaml` key is missing, the

operator provisions a minimal Alertmanager configuration with one empty

receiver (effectively dropping alert notifications).
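The defaulting rule in that description is simple enough to write down. Here is a tiny Python sketch of it, with a made-up function name and a hypothetical custom Secret name. It only restates the rule from the kubectl explain text; it is not operator code.

```python
def effective_config_secret(alertmanager_name, config_secret=""):
    """Name of the Secret the operator reads alertmanager.yaml from:
    spec.configSecret if set, else alertmanager-<alertmanager-name>."""
    return config_secret or f"alertmanager-{alertmanager_name}"


print(effective_config_secret("main"))
print(effective_config_secret("main", "my-alertmanager-config"))  # hypothetical name
```

For the Alertmanager named main in this post, with no spec.configSecret set, the rule gives alertmanager-main.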

In my case, somehow the Kubernetes secret was already there 🤔🤨🧐. I'm not sure how. It's an unknown for me. I'll read more about it and maybe write ✍️🖋️✒️🖊️✏️ about it later, in another blog post. Here's what I found out -

$ kubectl get secret -n monitoring

NAME                             TYPE     DATA   AGE
alertmanager-main                Opaque   1      5d6h
alertmanager-main-generated      Opaque   1      5d6h
alertmanager-main-tls-assets-0   Opaque   0      5d6h
alertmanager-main-web-config     Opaque   1      5d6h
grafana-config                   Opaque   1      5d6h
grafana-datasources              Opaque   1      5d6h
prometheus-k8s                   Opaque   1      5d6h
prometheus-k8s-tls-assets-0      Opaque   0      5d6h
prometheus-k8s-web-config        Opaque   1      5d6h

$ kubectl get secret -n monitoring alertmanager-main

NAME TYPE DATA AGE

alertmanager-main Opaque 1 5d6h

$ kubectl get secret -n monitoring alertmanager-main -o yaml

apiVersion: v1

data:

alertmanager.yaml: Imdsb2JhbCI6CiAgInJlc29sdmVfdGltZW91dCI6ICI1bSIKImluaGliaXRfcnVsZXMiOgotICJlcXVhbCI6CiAgLSAibmFtZXNwYWNlIgogIC0gImFsZXJ0bmFtZSIKICAic291cmNlX21hdGNoZXJzIjoKICAtICJzZXZlcml0eSA9IGNyaXRpY2FsIgogICJ0YXJnZXRfbWF0Y2hlcnMiOgogIC0gInNldmVyaXR5ID1+IHdhcm5pbmd8aW5mbyIKLSAiZXF1YWwiOgogIC0gIm5hbWVzcGFjZSIKICAtICJhbGVydG5hbWUiCiAgInNvdXJjZV9tYXRjaGVycyI6CiAgLSAic2V2ZXJpdHkgPSB3YXJuaW5nIgogICJ0YXJnZXRfbWF0Y2hlcnMiOgogIC0gInNldmVyaXR5ID0gaW5mbyIKLSAiZXF1YWwiOgogIC0gIm5hbWVzcGFjZSIKICAic291cmNlX21hdGNoZXJzIjoKICAtICJhbGVydG5hbWUgPSBJbmZvSW5oaWJpdG9yIgogICJ0YXJnZXRfbWF0Y2hlcnMiOgogIC0gInNldmVyaXR5ID0gaW5mbyIKInJlY2VpdmVycyI6Ci0gIm5hbWUiOiAiRGVmYXVsdCIKLSAibmFtZSI6ICJXYXRjaGRvZyIKLSAibmFtZSI6ICJDcml0aWNhbCIKLSAibmFtZSI6ICJudWxsIgoicm91dGUiOgogICJncm91cF9ieSI6CiAgLSAibmFtZXNwYWNlIgogICJncm91cF9pbnRlcnZhbCI6ICI1bSIKICAiZ3JvdXBfd2FpdCI6ICIzMHMiCiAgInJlY2VpdmVyIjogIkRlZmF1bHQiCiAgInJlcGVhdF9pbnRlcnZhbCI6ICIxMmgiCiAgInJvdXRlcyI6CiAgLSAibWF0Y2hlcnMiOgogICAgLSAiYWxlcnRuYW1lID0gV2F0Y2hkb2ciCiAgICAicmVjZWl2ZXIiOiAiV2F0Y2hkb2ciCiAgLSAibWF0Y2hlcnMiOgogICAgLSAiYWxlcnRuYW1lID0gSW5mb0luaGliaXRvciIKICAgICJyZWNlaXZlciI6ICJudWxsIgogIC0gIm1hdGNoZXJzIjoKICAgIC0gInNldmVyaXR5ID0gY3JpdGljYWwiCiAgICAicmVjZWl2ZXIiOiAiQ3JpdGljYWwi

kind: Secret

metadata:

creationTimestamp: "2024-05-02T11:17:20Z"

labels:

app.kubernetes.io/component: alert-router

app.kubernetes.io/instance: main

app.kubernetes.io/name: alertmanager

app.kubernetes.io/part-of: kube-prometheus

app.kubernetes.io/version: 0.27.0

name: alertmanager-main

namespace: monitoring

resourceVersion: "880"

uid: 59ff212e-f2af-48eb-a19e-ba0f69246dce

type: Opaque

$ kubectl get secret -n monitoring alertmanager-main -o jsonpath='{.data.alertmanager\.yaml}'

Imdsb2JhbCI6CiAgInJlc29sdmVfdGltZW91dCI6ICI1bSIKImluaGliaXRfcnVsZXMiOgotICJlcXVhbCI6CiAgLSAibmFtZXNwYWNlIgogIC0gImFsZXJ0bmFtZSIKICAic291cmNlX21hdGNoZXJzIjoKICAtICJzZXZlcml0eSA9IGNyaXRpY2FsIgogICJ0YXJnZXRfbWF0Y2hlcnMiOgogIC0gInNldmVyaXR5ID1+IHdhcm5pbmd8aW5mbyIKLSAiZXF1YWwiOgogIC0gIm5hbWVzcGFjZSIKICAtICJhbGVydG5hbWUiCiAgInNvdXJjZV9tYXRjaGVycyI6CiAgLSAic2V2ZXJpdHkgPSB3YXJuaW5nIgogICJ0YXJnZXRfbWF0Y2hlcnMiOgogIC0gInNldmVyaXR5ID0gaW5mbyIKLSAiZXF1YWwiOgogIC0gIm5hbWVzcGFjZSIKICAic291cmNlX21hdGNoZXJzIjoKICAtICJhbGVydG5hbWUgPSBJbmZvSW5oaWJpdG9yIgogICJ0YXJnZXRfbWF0Y2hlcnMiOgogIC0gInNldmVyaXR5ID0gaW5mbyIKInJlY2VpdmVycyI6Ci0gIm5hbWUiOiAiRGVmYXVsdCIKLSAibmFtZSI6ICJXYXRjaGRvZyIKLSAibmFtZSI6ICJDcml0aWNhbCIKLSAibmFtZSI6ICJudWxsIgoicm91dGUiOgogICJncm91cF9ieSI6CiAgLSAibmFtZXNwYWNlIgogICJncm91cF9pbnRlcnZhbCI6ICI1bSIKICAiZ3JvdXBfd2FpdCI6ICIzMHMiCiAgInJlY2VpdmVyIjogIkRlZmF1bHQiCiAgInJlcGVhdF9pbnRlcnZhbCI6ICIxMmgiCiAgInJvdXRlcyI6CiAgLSAibWF0Y2hlcnMiOgogICAgLSAiYWxlcnRuYW1lID0gV2F0Y2hkb2ciCiAgICAicmVjZWl2ZXIiOiAiV2F0Y2hkb2ciCiAgLSAibWF0Y2hlcnMiOgogICAgLSAiYWxlcnRuYW1lID0gSW5mb0luaGliaXRvciIKICAgICJyZWNlaXZlciI6ICJudWxsIgogIC0gIm1hdGNoZXJzIjoKICAgIC0gInNldmVyaXR5ID0gY3JpdGljYWwiCiAgICAicmVjZWl2ZXIiOiAiQ3JpdGljYWwi

$ kubectl get secret -n monitoring alertmanager-main -o jsonpath='{.data.alertmanager\.yaml}' | base64 --decode

"global":
  "resolve_timeout": "5m"
"inhibit_rules":
- "equal":
  - "namespace"
  - "alertname"
  "source_matchers":
  - "severity = critical"
  "target_matchers":
  - "severity =~ warning|info"
- "equal":
  - "namespace"
  - "alertname"
  "source_matchers":
  - "severity = warning"
  "target_matchers":
  - "severity = info"
- "equal":
  - "namespace"
  "source_matchers":
  - "alertname = InfoInhibitor"
  "target_matchers":
  - "severity = info"
"receivers":
- "name": "Default"
- "name": "Watchdog"
- "name": "Critical"
- "name": "null"
"route":
  "group_by":
  - "namespace"
  "group_interval": "5m"
  "group_wait": "30s"
  "receiver": "Default"
  "repeat_interval": "12h"
  "routes":
  - "matchers":
    - "alertname = Watchdog"
    "receiver": "Watchdog"
  - "matchers":
    - "alertname = InfoInhibitor"
    "receiver": "null"
  - "matchers":
    - "severity = critical"
    "receiver": "Critical"

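So, that's the Alertmanager Configuration I found in the Kubernetes Secret. I have no idea how it got there as a Kubernetes Secret, what put it there, or why. The Secret carries the app.kubernetes.io/part-of: kube-prometheus label and has the same creationTimestamp as the Alertmanager custom resource, which hints that it was created along with the rest of the kube-prometheus manifests, but I haven't confirmed that. Something to read and learn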
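If you don't have the base64 command handy, the same decoding can be done with Python's standard library. This sketch decodes just the first 48 characters of the alertmanager.yaml value shown above, which is enough to see the start of the configuration. The function name is made up for illustration.

```python
import base64

# First 48 characters of the alertmanager.yaml value shown above
encoded_prefix = "Imdsb2JhbCI6CiAgInJlc29sdmVfdGltZW91dCI6ICI1bSIK"


def decode_secret_value(value):
    """Decode one base64-encoded value from a Secret's data map into text."""
    return base64.b64decode(value).decode("utf-8")


print(decode_secret_value(encoded_prefix))
```

This prints the "global" key with its "resolve_timeout": "5m" entry, matching the first two lines of the decoded output above. Keep in mind that the values in a Secret's data map are only base64-encoded, not encrypted.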

Anyways, that's all I had to talk about :) As a last note, you can check the full spec of Alertmanager custom resource definition and also AlertmanagerConfig custom resource definition. I'm attaching Alertmanager's custom resource definition's full spec below ⬇️👇

Full spec for Alertmanager as of this writing for the given version of Prometheus Operator and Alertmanager custom resource definition -

$ kubectl explain alertmanager.spec

GROUP: monitoring.coreos.com

KIND: Alertmanager

VERSION: v1

FIELD: spec <Object>

DESCRIPTION:

Specification of the desired behavior of the Alertmanager cluster. More

info:

https://github.com/kubernetes/community/blob/master/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

FIELDS:

additionalPeers <[]string>

AdditionalPeers allows injecting a set of additional Alertmanagers to peer

with to form a highly available cluster.

affinity <Object>

If specified, the pod's scheduling constraints.

alertmanagerConfigMatcherStrategy <Object>

The AlertmanagerConfigMatcherStrategy defines how AlertmanagerConfig objects

match the alerts.

In the future more options may be added.

alertmanagerConfigNamespaceSelector <Object>

Namespaces to be selected for AlertmanagerConfig discovery. If nil, only

check own namespace.

alertmanagerConfigSelector <Object>

AlertmanagerConfigs to be selected for to merge and configure Alertmanager

with.

alertmanagerConfiguration <Object>

alertmanagerConfiguration specifies the configuration of Alertmanager.

If defined, it takes precedence over the `configSecret` field.

This is an *experimental feature*, it may change in any upcoming release

in a breaking way.

automountServiceAccountToken <boolean>

AutomountServiceAccountToken indicates whether a service account token

should be automatically mounted in the pod.

If the service account has `automountServiceAccountToken: true`, set the

field to `false` to opt out of automounting API credentials.

baseImage <string>

Base image that is used to deploy pods, without tag.

Deprecated: use 'image' instead.

clusterAdvertiseAddress <string>

ClusterAdvertiseAddress is the explicit address to advertise in cluster.

Needs to be provided for non RFC1918 [1] (public) addresses.

[1] RFC1918: https://tools.ietf.org/html/rfc1918

clusterGossipInterval <string>

Interval between gossip attempts.

clusterLabel <string>

Defines the identifier that uniquely identifies the Alertmanager cluster.

You should only set it when the Alertmanager cluster includes Alertmanager

instances which are external to this Alertmanager resource. In practice, the

addresses of the external instances are provided via the

`.spec.additionalPeers` field.

clusterPeerTimeout <string>

Timeout for cluster peering.

clusterPushpullInterval <string>

Interval between pushpull attempts.

configMaps <[]string>

ConfigMaps is a list of ConfigMaps in the same namespace as the Alertmanager

object, which shall be mounted into the Alertmanager Pods.

Each ConfigMap is added to the StatefulSet definition as a volume named

`configmap-<configmap-name>`.

The ConfigMaps are mounted into

`/etc/alertmanager/configmaps/<configmap-name>` in the 'alertmanager'

container.

configSecret <string>

ConfigSecret is the name of a Kubernetes Secret in the same namespace as the

Alertmanager object, which contains the configuration for this Alertmanager

instance. If empty, it defaults to `alertmanager-<alertmanager-name>`.

The Alertmanager configuration should be available under the

`alertmanager.yaml` key. Additional keys from the original secret are

copied to the generated secret and mounted into the

`/etc/alertmanager/config` directory in the `alertmanager` container.

If either the secret or the `alertmanager.yaml` key is missing, the

operator provisions a minimal Alertmanager configuration with one empty

receiver (effectively dropping alert notifications).

containers <[]Object>

Containers allows injecting additional containers. This is meant to

allow adding an authentication proxy to an Alertmanager pod.

Containers described here modify an operator generated container if they

share the same name and modifications are done via a strategic merge

patch. The current container names are: `alertmanager` and

`config-reloader`. Overriding containers is entirely outside the scope

of what the maintainers will support and by doing so, you accept that

this behaviour may break at any time without notice.

enableFeatures <[]string>

Enable access to Alertmanager feature flags. By default, no features are

enabled.

Enabling features which are disabled by default is entirely outside the

scope of what the maintainers will support and by doing so, you accept

that this behaviour may break at any time without notice.

It requires Alertmanager >= 0.27.0.

externalUrl <string>

The external URL the Alertmanager instances will be available under. This is

necessary to generate correct URLs. This is necessary if Alertmanager is not

served from root of a DNS name.

forceEnableClusterMode <boolean>

ForceEnableClusterMode ensures Alertmanager does not deactivate the cluster

mode when running with a single replica.

Use case is e.g. spanning an Alertmanager cluster across Kubernetes clusters

with a single replica in each.

hostAliases <[]Object>

Pods' hostAliases configuration

image <string>

Image if specified has precedence over baseImage, tag and sha

combinations. Specifying the version is still necessary to ensure the

Prometheus Operator knows what version of Alertmanager is being

configured.

imagePullPolicy <string>

Image pull policy for the 'alertmanager', 'init-config-reloader' and

'config-reloader' containers.

See https://kubernetes.io/docs/concepts/containers/images/#image-pull-policy

for more details.

imagePullSecrets <[]Object>

An optional list of references to secrets in the same namespace

to use for pulling prometheus and alertmanager images from registries

see

http://kubernetes.io/docs/user-guide/images#specifying-imagepullsecrets-on-a-pod

initContainers <[]Object>

InitContainers allows adding initContainers to the pod definition. Those can

be used to e.g.

fetch secrets for injection into the Alertmanager configuration from

external sources. Any

errors during the execution of an initContainer will lead to a restart of

the Pod. More info:

https://kubernetes.io/docs/concepts/workloads/pods/init-containers/

InitContainers described here modify an operator

generated init containers if they share the same name and modifications are

done via a strategic merge patch. The current init container name is:

`init-config-reloader`. Overriding init containers is entirely outside the

scope of what the maintainers will support and by doing so, you accept that

this behaviour may break at any time without notice.

listenLocal <boolean>

ListenLocal makes the Alertmanager server listen on loopback, so that it

does not bind against the Pod IP. Note this is only for the Alertmanager

UI, not the gossip communication.

logFormat <string>

Log format for Alertmanager to be configured with.

logLevel <string>

Log level for Alertmanager to be configured with.

minReadySeconds <integer>

Minimum number of seconds for which a newly created pod should be ready

without any of its container crashing for it to be considered available.

Defaults to 0 (pod will be considered available as soon as it is ready)

This is an alpha field from kubernetes 1.22 until 1.24 which requires

enabling the StatefulSetMinReadySeconds feature gate.

nodeSelector <map[string]string>

Define which Nodes the Pods are scheduled on.

paused <boolean>

If set to true all actions on the underlying managed objects are not

goint to be performed, except for delete actions.

podMetadata <Object>

PodMetadata configures labels and annotations which are propagated to the

Alertmanager pods.

The following items are reserved and cannot be overridden:

* "alertmanager" label, set to the name of the Alertmanager instance.

* "app.kubernetes.io/instance" label, set to the name of the Alertmanager

instance.

* "app.kubernetes.io/managed-by" label, set to "prometheus-operator".

* "app.kubernetes.io/name" label, set to "alertmanager".

* "app.kubernetes.io/version" label, set to the Alertmanager version.

* "kubectl.kubernetes.io/default-container" annotation, set to

"alertmanager".

portName <string>

Port name used for the pods and governing service.

Defaults to `web`.

priorityClassName <string>

Priority class assigned to the Pods

replicas <integer>

Size is the expected size of the alertmanager cluster. The controller will

eventually make the size of the running cluster equal to the expected

size.

resources <Object>

Define resources requests and limits for single Pods.

retention <string>

Time duration Alertmanager shall retain data for. Default is '120h',

and must match the regular expression `[0-9]+(ms|s|m|h)` (milliseconds

seconds minutes hours).

routePrefix <string>

The route prefix Alertmanager registers HTTP handlers for. This is useful,

if using ExternalURL and a proxy is rewriting HTTP routes of a request,

and the actual ExternalURL is still true, but the server serves requests

under a different route prefix. For example for use with `kubectl proxy`.

secrets <[]string>

Secrets is a list of Secrets in the same namespace as the Alertmanager

object, which shall be mounted into the Alertmanager Pods.

Each Secret is added to the StatefulSet definition as a volume named

`secret-<secret-name>`.

The Secrets are mounted into `/etc/alertmanager/secrets/<secret-name>` in

the 'alertmanager' container.

securityContext <Object>

SecurityContext holds pod-level security attributes and common container

settings.

This defaults to the default PodSecurityContext.

serviceAccountName <string>

ServiceAccountName is the name of the ServiceAccount to use to run the

Prometheus Pods.

sha <string>

SHA of Alertmanager container image to be deployed. Defaults to the value of

`version`.

Similar to a tag, but the SHA explicitly deploys an immutable container

image.

Version and Tag are ignored if SHA is set.

Deprecated: use 'image' instead. The image digest can be specified as part

of the image URL.

storage <Object>

Storage is the definition of how storage will be used by the Alertmanager

instances.

tag <string>

Tag of Alertmanager container image to be deployed. Defaults to the value of

`version`.

Version is ignored if Tag is set.

Deprecated: use 'image' instead. The image tag can be specified as part of

the image URL.

tolerations <[]Object>

If specified, the pod's tolerations.

topologySpreadConstraints <[]Object>

If specified, the pod's topology spread constraints.

version <string>

Version the cluster should be on.

volumeMounts <[]Object>

VolumeMounts allows configuration of additional VolumeMounts on the output

StatefulSet definition.

VolumeMounts specified will be appended to other VolumeMounts in the

alertmanager container,

that are generated as a result of StorageSpec objects.

volumes <[]Object>

Volumes allows configuration of additional volumes on the output StatefulSet

definition.

Volumes specified will be appended to other volumes that are generated as a

result of

StorageSpec objects.

web <Object>

Defines the web command line flags when starting Alertmanager.
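
To tie a few of the fields above together, here is a minimal sketch of what an Alertmanager custom resource using them could look like. This is not the manifest that kube-prometheus ships. The resource name, the Secret and ConfigMap names and the resource requests are all hypothetical values picked for illustration, and only the field names, defaults and mount paths come from the `kubectl explain` output above.

```yaml
# alertmanager-example.yaml (hypothetical file name and values, for illustration only)
apiVersion: monitoring.coreos.com/v1
kind: Alertmanager
metadata:
  name: example                # hypothetical name
  namespace: monitoring
spec:
  version: 0.27.0              # the Alertmanager version used in this post
  replicas: 3                  # expected size of the Alertmanager cluster
  retention: 120h              # the documented default; must match [0-9]+(ms|s|m|h)
  logLevel: info
  logFormat: logfmt
  portName: web                # the documented default
  # If omitted, configSecret defaults to alertmanager-<alertmanager-name>.
  # The configuration is expected under the alertmanager.yaml key of this Secret.
  configSecret: alertmanager-example
  secrets:
    - example-tls              # hypothetical Secret, mounted at /etc/alertmanager/secrets/example-tls
  configMaps:
    - example-templates        # hypothetical ConfigMap, mounted at /etc/alertmanager/configmaps/example-templates
  nodeSelector:
    kubernetes.io/os: linux
  resources:
    requests:                  # hypothetical values, tune for your cluster
      cpu: 10m
      memory: 100Mi
```

If you try something like this, the Secret and ConfigMap it refers to would have to exist in the same namespace as the Alertmanager object, as the field descriptions above say.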

Annexure

Version Information of all software and source code used:

Prometheus Operator: v0.73.2

Alertmanager: 0.27.0

kube-prometheus git repo: commit SHA 71e8adada95be82c66af8262fb935346ecf27caa

$ kind version

kind v0.21.0 go1.21.6 darwin/amd64

$ docker version

Client:

Cloud integration: v1.0.35+desktop.5

Version: 24.0.7

API version: 1.43

Go version: go1.20.10

Git commit: afdd53b

Built: Thu Oct 26 09:04:20 2023

OS/Arch: darwin/amd64

Context: desktop-linux

Server: Docker Desktop 4.26.1 (131620)

Engine:

Version: 24.0.7

API version: 1.43 (minimum version 1.12)

Go version: go1.20.10

Git commit: 311b9ff

Built: Thu Oct 26 09:08:02 2023

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.25

GitCommit: d8f198a4ed8892c764191ef7b3b06d8a2eeb5c7f

runc:

Version: 1.1.10

GitCommit: v1.1.10-0-g18a0cb0

docker-init:

Version: 0.19.0

GitCommit: de40ad0

$ docker info

Client:

Version: 24.0.7

Context: desktop-linux

Debug Mode: false

Plugins:

buildx: Docker Buildx (Docker Inc.)

Version: v0.12.0-desktop.2

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-buildx

compose: Docker Compose (Docker Inc.)

Version: v2.23.3-desktop.2

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-compose

dev: Docker Dev Environments (Docker Inc.)

Version: v0.1.0

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-dev

extension: Manages Docker extensions (Docker Inc.)

Version: v0.2.21

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-extension

feedback: Provide feedback, right in your terminal! (Docker Inc.)

Version: 0.1

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-feedback

init: Creates Docker-related starter files for your project (Docker Inc.)

Version: v0.1.0-beta.10

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-init

sbom: View the packaged-based Software Bill Of Materials (SBOM) for an image (Anchore Inc.)

Version: 0.6.0

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-sbom

scan: Docker Scan (Docker Inc.)

Version: v0.26.0

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-scan

scout: Docker Scout (Docker Inc.)

Version: v1.2.0

Path: /Users/karuppiah.n/.docker/cli-plugins/docker-scout

Server:

Containers: 1

Running: 1

Paused: 0

Stopped: 0

Images: 39

Server Version: 24.0.7

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Using metacopy: false

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 2

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 runc

Default Runtime: runc

Init Binary: docker-init

containerd version: d8f198a4ed8892c764191ef7b3b06d8a2eeb5c7f

runc version: v1.1.10-0-g18a0cb0

init version: de40ad0

Security Options:

seccomp

Profile: unconfined

cgroupns

Kernel Version: 6.5.11-linuxkit

Operating System: Docker Desktop

OSType: linux

Architecture: x86_64

CPUs: 12

Total Memory: 7.665GiB

Name: docker-desktop

ID: 0eebd655-d584-445e-bdfe-76ec2911d485

Docker Root Dir: /var/lib/docker

Debug Mode: false

HTTP Proxy: http.docker.internal:3128

HTTPS Proxy: http.docker.internal:3128

No Proxy: hubproxy.docker.internal

Experimental: false

Insecure Registries:

core.harbor.domain

hubproxy.docker.internal:5555

127.0.0.0/8

Live Restore Enabled: false

WARNING: daemon is not using the default seccomp profile

More docs references:

https://prometheus-operator.dev/docs/operator/ has all the operator docs sections, starting with the design section at https://prometheus-operator.dev/docs/operator/design/

For alerting specifically, see https://prometheus-operator.dev/docs/user-guides/alerting/

Written by

Karuppiah Natarajan

I like learning new stuff - anything, including technology. I love tinkering with new tools, systems and services, especially open source projects