Quick and Easy Apache Airflow Setup Tutorial

Vipin

Apache Airflow is an open-source platform designed to manage workflows, specifically data pipelines. It was created by Airbnb to handle their increasingly complex workflows and allows users to:

Define workflows using Python code. This makes them easier to maintain, collaborate on, and test.

Schedule workflows to run at specific times (e.g., daily) or based on events (e.g., a new file being added).

Monitor workflows as they run to see their progress and identify any issues.

Hands-on Practice:

Prerequisite:

Docker Desktop should be installed.

Setup steps:

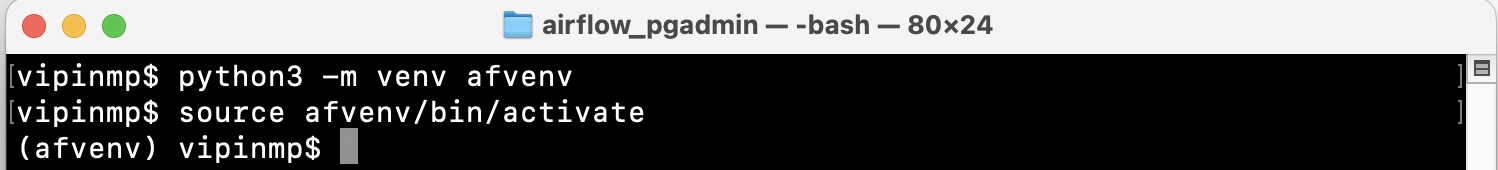

Open Visual Studio Code and create a virtual environment.

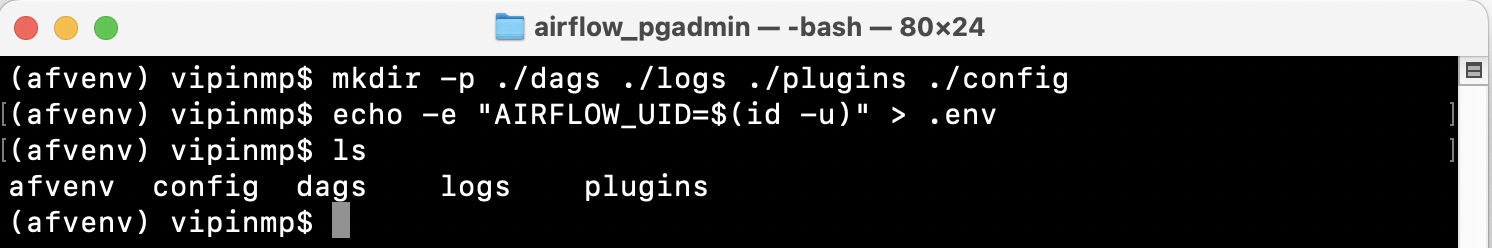

Create the dags, logs, plugins and config folders, then write your user ID to a .env file.

mkdir -p ./dags ./logs ./plugins ./config

echo -e "AIRFLOW_UID=$(id -u)" > .env
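On systems where `mkdir -p` or `id -u` is not available (for example a plain Windows shell), the same preparation can be sketched in Python. This is a minimal sketch, not part of the official setup: the function name `prepare_airflow_project` is made up for illustration, and the fallback value 50000 is an assumption based on the default UID suggested in the Airflow Docker documentation.

```python
import os
from pathlib import Path

# Assumed fallback UID for systems without `id -u` (taken from the Airflow Docker docs).
DEFAULT_AIRFLOW_UID = 50000


def prepare_airflow_project(base_dir: str = ".") -> Path:
    """Create the Airflow folders and write AIRFLOW_UID to .env under base_dir."""
    base = Path(base_dir)
    for name in ("dags", "logs", "plugins", "config"):
        # exist_ok mirrors `mkdir -p`: no error if the folder already exists
        (base / name).mkdir(parents=True, exist_ok=True)

    # os.getuid is only available on Unix-like systems
    uid = os.getuid() if hasattr(os, "getuid") else DEFAULT_AIRFLOW_UID
    env_file = base / ".env"
    env_file.write_text(f"AIRFLOW_UID={uid}\n")
    return env_file
```

Calling `prepare_airflow_project()` from the project folder should leave the same four folders and `.env` file as the two shell commands.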

Get docker-compose.yaml with the curl command below.

curl -LfO 'https://airflow.apache.org/docs/apache-airflow/2.10.4/docker-compose.yaml'

Initialize the database

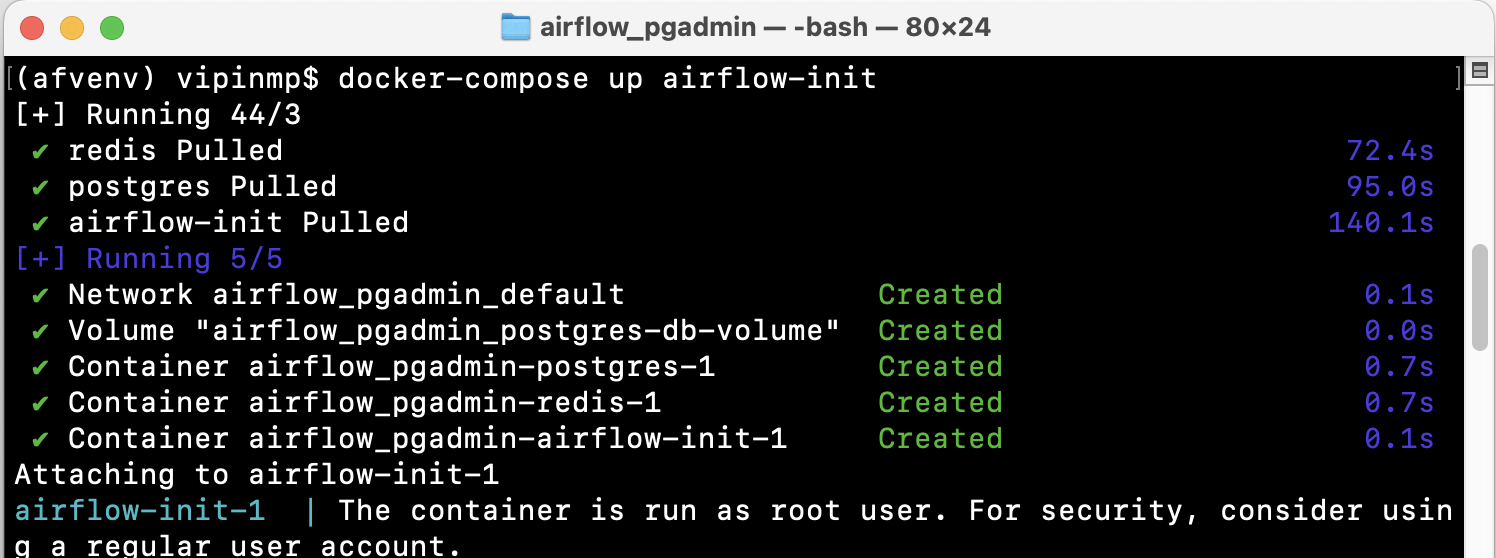

docker-compose up airflow-init

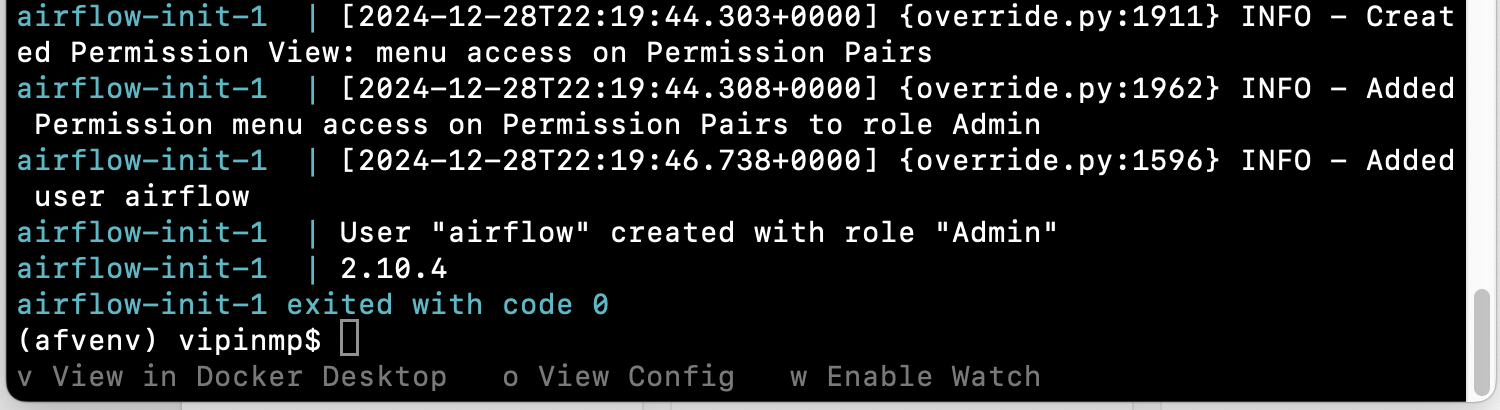

After initialization is complete, you should see a message like this:

The account created has the login airflow and the password airflow

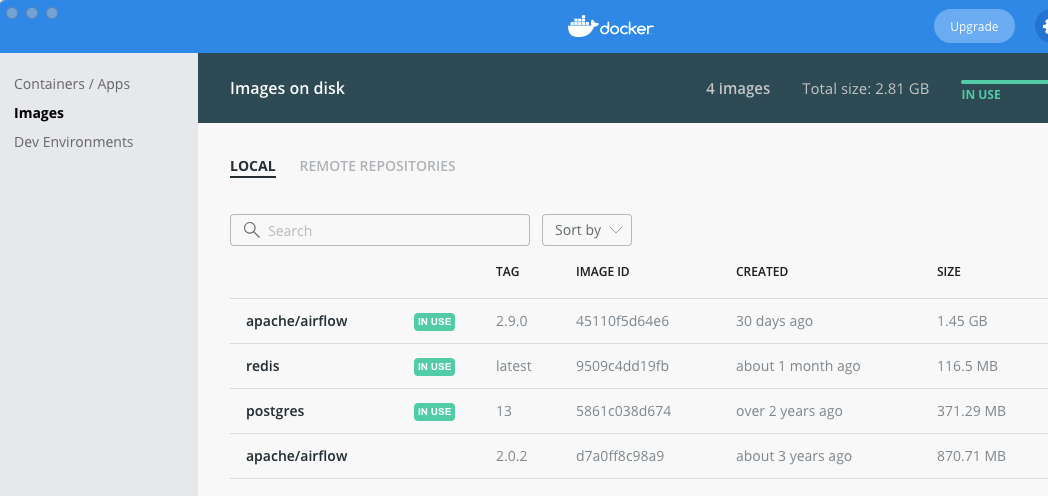

Once the process finishes successfully, Docker Desktop shows the images that were pulled.

Running Airflow

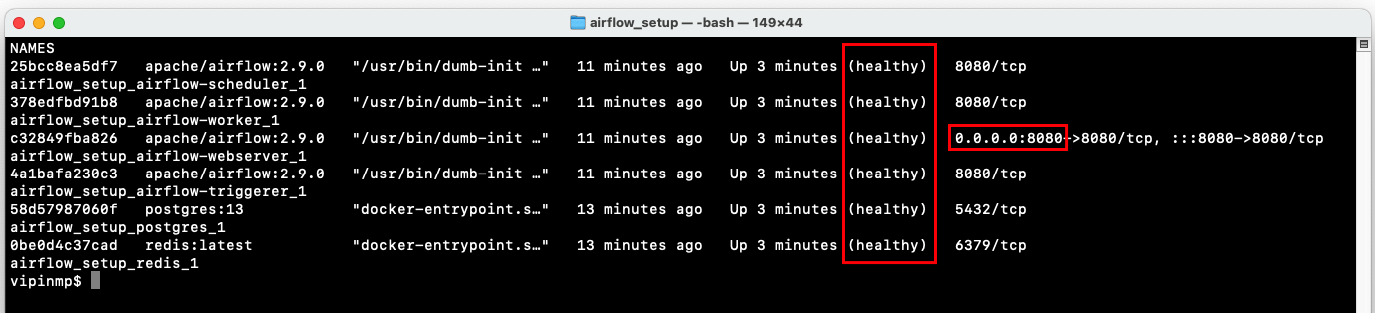

docker-compose up

In a second terminal, you can check the state of the containers and make sure that none of them is unhealthy:

docker ps
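If you would rather not scan the `docker ps` table by eye, the check can be scripted. The sketch below assumes Docker marks a failing health check with `(unhealthy)` in the status column, which is its usual behaviour. The function names and the container names in the usage note are hypothetical.

```python
import subprocess


def find_unhealthy_containers(ps_output: str) -> list[str]:
    """Return the names of containers whose status column contains '(unhealthy)'.

    Expects one 'name<TAB>status' line per container.
    """
    unhealthy = []
    for line in ps_output.splitlines():
        if not line.strip():
            continue
        name, _, status = line.partition("\t")
        if "(unhealthy)" in status:
            unhealthy.append(name)
    return unhealthy


def docker_ps_status() -> str:
    """Run `docker ps` and return 'name<TAB>status' lines for running containers."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}\t{{.Status}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

With Docker running, `find_unhealthy_containers(docker_ps_status())` should return an empty list once every container reports healthy. A line such as `airflow-worker-1<TAB>Up 2 minutes (unhealthy)` would put `airflow-worker-1` in the result.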

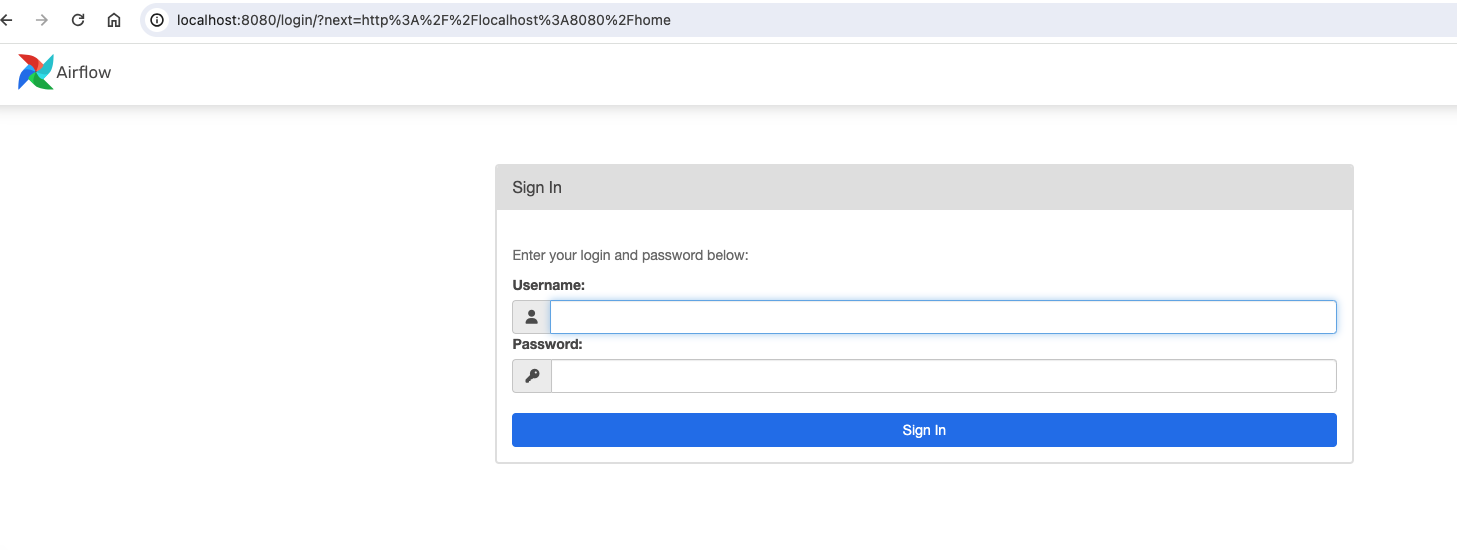

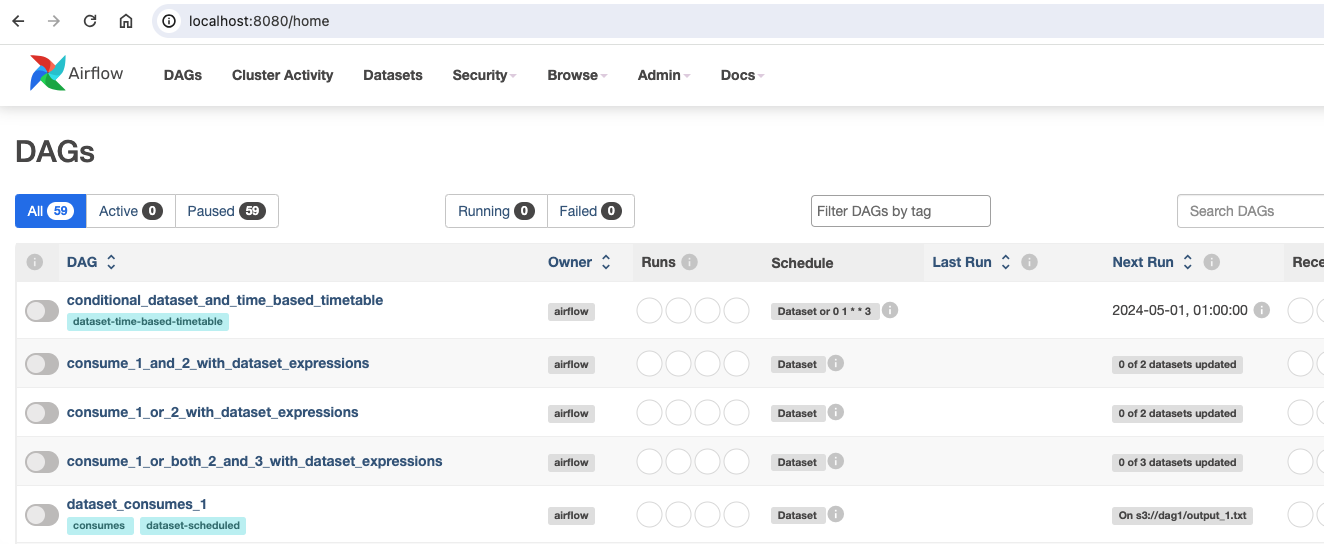

Accessing the environment

After starting Airflow, you can interact with it from a browser through the web interface.

Log in with username: airflow and password: airflow
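Before opening the browser, you can confirm that the webserver is up by querying its health endpoint. The sketch below assumes the webserver is published on `http://localhost:8080`, the port used by the official compose file as far as I know, and that `/health` returns a JSON object with one entry per component, as in Airflow 2.x. The function names are made up for illustration.

```python
import json
import urllib.request


def summarize_health(payload: dict) -> dict:
    """Map each component in a /health response to its status string."""
    return {
        component: details.get("status", "unknown")
        for component, details in payload.items()
        if isinstance(details, dict)
    }


def fetch_health(base_url: str = "http://localhost:8080") -> dict:
    """Fetch <base_url>/health and return a component -> status mapping."""
    # base_url is an assumption; change it if you mapped the webserver to another port
    with urllib.request.urlopen(f"{base_url}/health", timeout=10) as response:
        return summarize_health(json.load(response))
```

Once the stack is running, `fetch_health()` should report the status of components such as the metadata database and the scheduler. If the call raises a connection error, the webserver is most likely still starting.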

Troubleshooting tips:

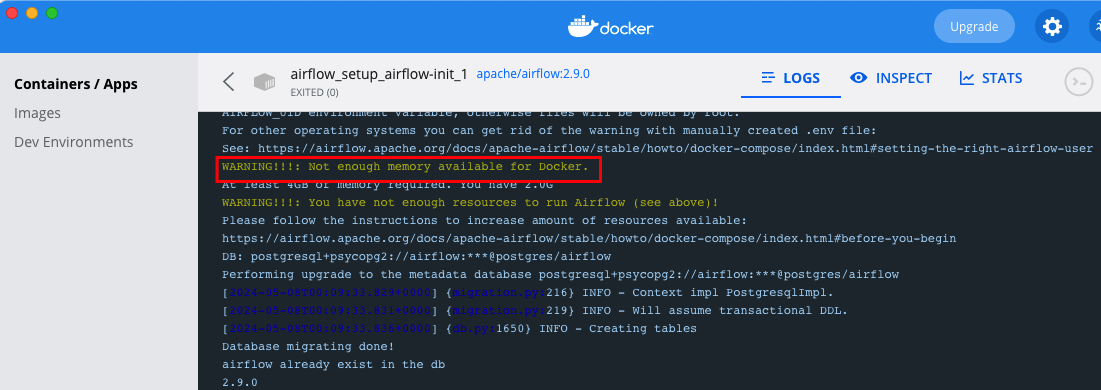

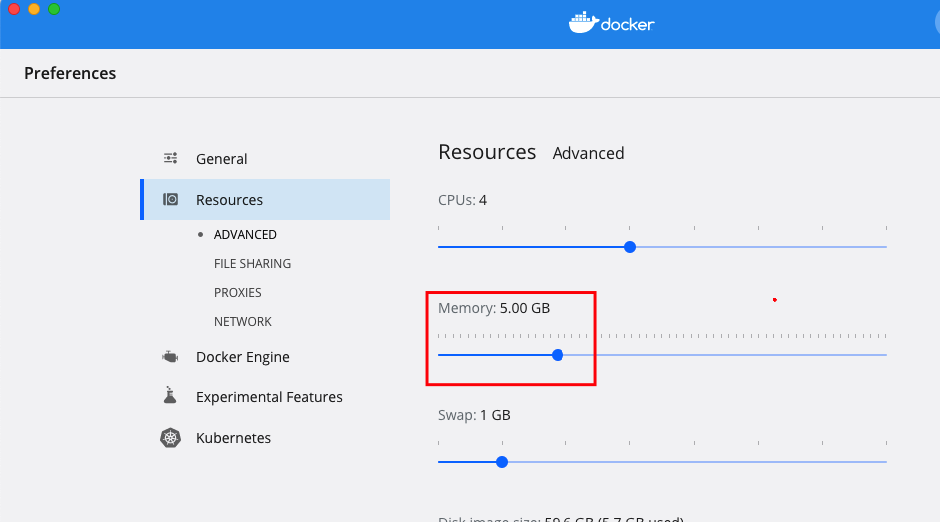

If you come across the error shown above, open the Resources section of the Docker Desktop settings and increase the memory size.
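To see how much memory Docker currently has before changing the setting, you can ask `docker info` for its `MemTotal` value, which is reported in bytes. This is a rough sketch: the 4 GiB threshold is an assumption based on the minimum recommended in the Airflow Docker guidance, and the function names are hypothetical. Docker Desktop often reports slightly less than the configured value, so treat a result near the threshold as borderline.

```python
import subprocess

# Assumed minimum: the Airflow Docker guidance recommends at least 4 GB for Docker.
RECOMMENDED_MIN_BYTES = 4 * 1024**3


def has_enough_memory(mem_total_bytes: int,
                      minimum_bytes: int = RECOMMENDED_MIN_BYTES) -> bool:
    """Return True if Docker's total memory meets the assumed minimum."""
    return mem_total_bytes >= minimum_bytes


def docker_memory_bytes() -> int:
    """Ask the Docker daemon how much memory it has, in bytes."""
    result = subprocess.run(
        ["docker", "info", "--format", "{{.MemTotal}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip())
```

With Docker running, `has_enough_memory(docker_memory_bytes())` returning `False` suggests raising the memory limit before running `docker-compose up` again.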

Written by

Vipin

Highly skilled Data Test Automation professional with over 10 years of experience in data quality assurance and software testing. Proven ability to design, execute, and automate testing across the entire SDLC (Software Development Life Cycle) utilizing Agile and Waterfall methodologies. Expertise in End-to-End DWBI project testing and experience working in GCP, AWS, and Azure cloud environments. Proficient in SQL and Python scripting for data test automation.