xLSTM better than Transformer models?

Tinz Twins

Hi AI Enthusiasts,

Welcome to this week's Magic AI, where we bring you exciting updates from the world of Artificial Intelligence (AI) and technology. A lot has happened in the last week.

This week's Magic AI tool is an automation powerhouse, perfect for meetings, sales calls, coaching sessions, and YouTube videos.

Let's explore this week's AI news together. Stay curious! 😎

In today's Magic AI:

xLSTM: Language model from Europe

Apple invests in AI infrastructure

DeepSeek unveils a new open-source LLM

Magic AI tool of the week

Hand-picked articles of the week

📚 Our Books and recommendations for you

Top AI news of the week

💬 xLSTM: Language model from Europe

The European AI startup NXAI, founded by AI pioneer Sepp Hochreiter, has presented a new architecture for language models in a paper. The new architecture is called xLSTM (short for Extended Long Short-Term Memory) and builds on the well-known LSTM architecture, which Hochreiter co-invented.

LSTMs are recurrent neural networks with a kind of built-in short-term memory. The xLSTM architecture brings techniques from modern transformer-based LLMs to the LSTM. The research question of the paper is:

"How far do we get in language modeling when scaling LSTMs to billions of parameters, leveraging the latest techniques from modern LLMs, but mitigating known limitations of LSTMs?"
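The "built-in memory" of a classic LSTM is its cell state, which three gates update at every time step: the forget gate decides how much of the old memory to keep, the input gate decides how much new information to write, and the output gate decides how much of the memory to expose as the hidden state. Here is a minimal sketch of one classic LSTM step for a single hidden unit in plain Python. The `ScalarLSTMWeights` values are hypothetical numbers chosen for illustration; a real LSTM learns weight matrices over vectors.

```python
import math
from dataclasses import dataclass


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


@dataclass(frozen=True)
class ScalarLSTMWeights:
    # Hypothetical weights for a 1-unit LSTM (illustration only).
    # Each tuple is (input weight, recurrent weight, bias) for one gate.
    w_i: tuple = (0.5, 0.1, 0.0)  # input gate
    w_f: tuple = (0.5, 0.1, 1.0)  # forget gate; positive bias keeps memory by default
    w_o: tuple = (0.5, 0.1, 0.0)  # output gate
    w_z: tuple = (1.0, 0.2, 0.0)  # candidate cell input


def preact(w: tuple, x: float, h: float) -> float:
    """Affine pre-activation: input term + recurrent term + bias."""
    return w[0] * x + w[1] * h + w[2]


def lstm_step(x: float, h_prev: float, c_prev: float, w: ScalarLSTMWeights):
    """One classic LSTM step; returns the new (hidden state, cell state)."""
    i = sigmoid(preact(w.w_i, x, h_prev))  # how much new information to write
    f = sigmoid(preact(w.w_f, x, h_prev))  # how much old memory to keep
    o = sigmoid(preact(w.w_o, x, h_prev))  # how much memory to expose
    z = math.tanh(preact(w.w_z, x, h_prev))  # candidate value to write
    c = f * c_prev + i * z  # the cell state is the LSTM's memory
    h = o * math.tanh(c)  # hidden state passed to the next step
    return h, c


if __name__ == "__main__":
    w = ScalarLSTMWeights()
    h, c = 0.0, 0.0
    for x in [1.0, 0.0, 0.0, 0.0]:  # one impulse, then silence
        h, c = lstm_step(x, h, c, w)
        print(f"x={x:.1f}  c={c:.4f}  h={h:.4f}")
```

With these weights, the single impulse is not forgotten at once: the cell state stays positive and shrinks step by step, at a rate set mainly by the forget gate.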

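According to the paper, larger xLSTM models could compete with current large language models. The researchers extend the LSTM in two ways: exponential gating with normalization and stabilization, and a modified memory structure. This results in two new cell types: sLSTM, with a scalar memory and a new form of memory mixing, and mLSTM, with a matrix memory that can be computed fully in parallel. For a more in-depth look at the new architecture, check out the full paper.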
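The exponential gating of the sLSTM cell can be sketched for a single scalar unit. In the paper's formulation, the input gate is exp(ĩ), a normalizer state n accumulates the gate weights so that h = o · c / n, and a stabilizer state m rescales everything so that no exponential can overflow. This is a simplified sketch under several assumptions: the gate pre-activations are passed in directly instead of being computed from learned weights, the forget gate is exponential (the paper also allows a sigmoid forget gate), memory mixing is omitted, and the function names `slstm_naive` and `slstm_stabilized` are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Each step is a tuple of gate pre-activations (i_pre, f_pre, z_pre, o_pre).
# In a real sLSTM these come from learned weights applied to x_t and h_{t-1}.


def slstm_naive(steps):
    """Exponential gating without stabilization (can overflow)."""
    c = n = 0.0
    hs = []
    for i_pre, f_pre, z_pre, o_pre in steps:
        i, f = math.exp(i_pre), math.exp(f_pre)  # exponential input and forget gates
        z, o = math.tanh(z_pre), sigmoid(o_pre)
        c = f * c + i * z  # cell state
        n = f * n + i  # normalizer state: running sum of gate weights
        hs.append(o * c / n)  # c / n is a weighted average of past z values
    return hs


def slstm_stabilized(steps):
    """Same recurrence, rescaled by exp(-m) so no exponential can overflow."""
    c = n = m = 0.0
    hs = []
    for i_pre, f_pre, z_pre, o_pre in steps:
        log_i, log_f = i_pre, f_pre  # log(exp(x)) = x for exponential gates
        m_new = max(log_f + m, log_i)  # stabilizer state
        i = math.exp(log_i - m_new)  # exponent is always <= 0
        f = math.exp(log_f + m - m_new)  # exponent is always <= 0
        z, o = math.tanh(z_pre), sigmoid(o_pre)
        c = f * c + i * z  # equals the naive c times exp(-m_new)
        n = f * n + i  # equals the naive n times exp(-m_new)
        m = m_new
        hs.append(o * c / n)  # the exp(-m) factors cancel in the ratio
    return hs


if __name__ == "__main__":
    small = [(0.5, 0.2, 1.0, 0.0), (1.0, -0.5, -0.5, 1.0), (-0.3, 0.8, 0.3, -1.0)]
    print(slstm_naive(small))
    print(slstm_stabilized(small))  # same values up to rounding
    big = [(800.0, 0.0, 1.0, 0.0), (805.0, 0.0, -1.0, 0.0)]
    print(slstm_stabilized(big))  # finite; slstm_naive(big) raises OverflowError
```

Because c / n is a weighted average of the tanh-squashed inputs, the output stays bounded even though the gates themselves are unbounded exponentials; the stabilizer only changes the scale of c and n, not their ratio.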

Our thoughts

We have often used the LSTM architecture in the past for time series forecasting and anomaly detection in quality control. The LSTM architecture is powerful, but classic LSTMs cannot compete with state-of-the-art LLMs like Llama 3.

xLSTM extends the LSTM architecture in a way that gives it the potential to become a real competitor to current transformer models like Llama 3. We find the approach very exciting and will continue to follow this topic.

✍🏽 What is your opinion on this new LSTM architecture?

📚 Book recommendation (it's free) to learn more about state-of-the-art Deep Learning models: Dive into Deep Learning

More information

🍎 Apple invests in AI infrastructure

Apple plans to use its own chips in servers. The demand for computing power for generative AI is increasing, and Apple is planning several generative AI features for its new operating systems. Such features require large data centers with a lot of computing power.

According to the well-known analyst Jeff Pu, Apple's manufacturing partner Foxconn is building "AI servers" based on Apple's M2 Ultra SoC. Pu expects the M4 chip to be integrated into these servers in 2025. A few days ago, Apple unveiled the new iPad Pro, which already uses the M4 chip. Apple calls the M4 an AI powerhouse.

Our thoughts

Apple has been using its own ARM-based chips in Macs since 2020. Apple develops hardware and software hand in hand, which is a major reason for the impressive performance of Apple computers.

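In addition, Apple has developed MLX, an efficient machine learning framework for Apple silicon. Its Python API closely follows NumPy, and its higher-level packages for building and training neural networks closely follow PyTorch. MLX is designed around the unified memory of Apple chips, so the CPU and GPU can work on the same arrays without copying data. MLX is open source and available on GitHub with many examples.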

✍🏽 What is your opinion on Apple hardware for machine learning?

More information

🤖 DeepSeek unveils a new open-source LLM

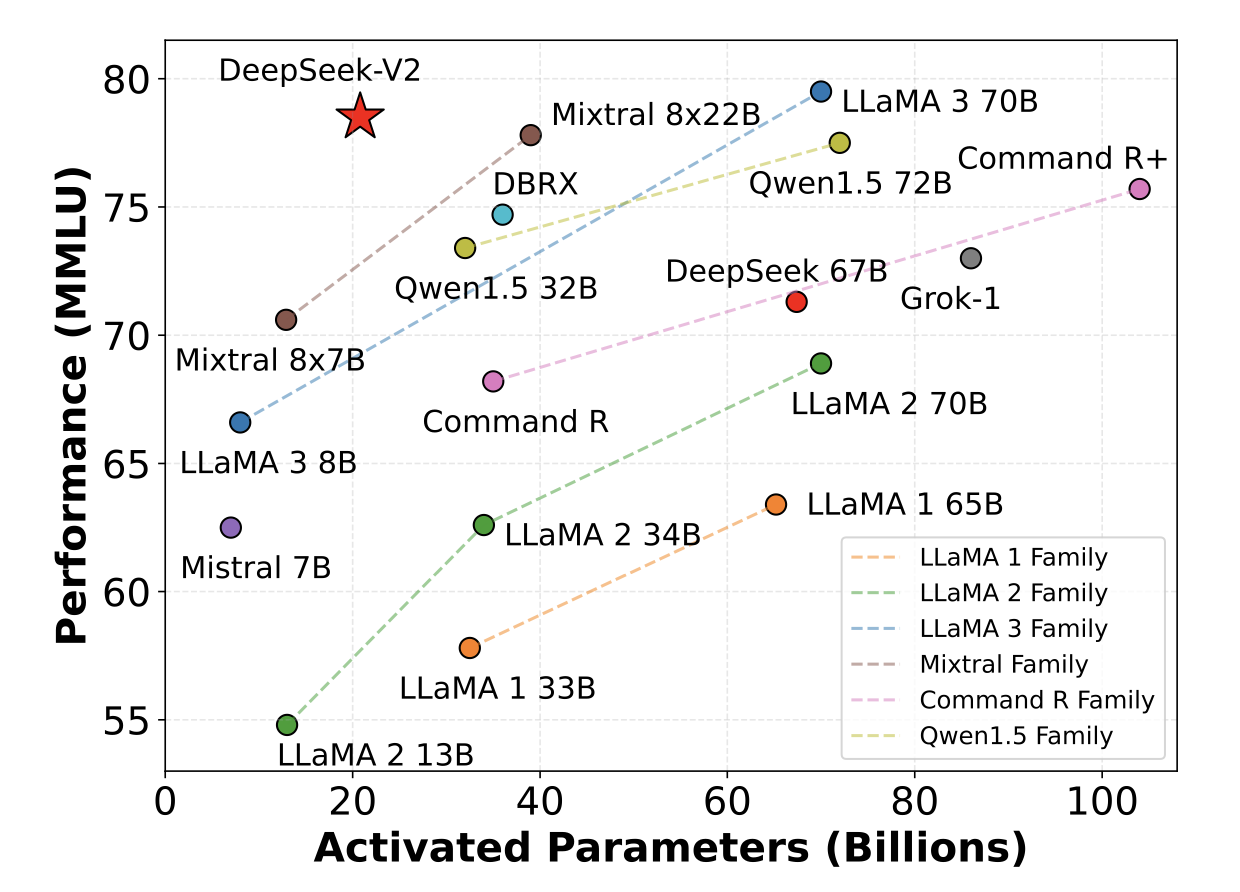

The AI startup DeepSeek just presented DeepSeek-V2, an open-source mixture-of-experts (MoE) large language model. This open-source model has 236 billion parameters and supports a context length of 128,000 tokens.

DeepSeek-V2 achieves strong results in benchmarks. DeepSeek has adapted the mixture-of-experts architecture: of the model's 236 billion parameters, only 21 billion are activated for each token. The model therefore needs significantly fewer active parameters per token than many of its competitors, and it shows the potential of large open-source models. You can check out the official paper to learn more about this new open-source model.

Image by DeepSeek
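The core idea of a mixture-of-experts layer like the one in DeepSeek-V2 can be sketched in a few lines: a router scores every expert for each token, and only the top-k experts are actually evaluated. The sketch below is a generic top-k MoE step with toy scalar "experts" and made-up router scores; it is not DeepSeek's implementation, whose DeepSeekMoE design adds refinements such as shared experts and finer-grained experts. The names `moe_forward` and the toy experts are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    router_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    k: int = 2,
) -> Tuple[float, List[int]]:
    """Route one token to its top-k experts and mix their outputs."""
    # Rank experts by router score and keep only the k best.
    top = sorted(range(len(experts)), key=lambda e: router_logits[e], reverse=True)[:k]
    # Softmax over the selected experts' scores gives the mixing weights.
    gates = softmax([router_logits[e] for e in top])
    # Only the selected experts are evaluated; the rest are skipped entirely.
    y = sum(g * experts[e](x) for g, e in zip(gates, top))
    return y, top


if __name__ == "__main__":
    # Hypothetical toy experts: each one just scales its input.
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
    router_logits = [0.1, 2.0, -1.0, 1.0]  # made-up router scores for one token
    y, chosen = moe_forward(1.0, router_logits, experts, k=2)
    print(chosen, round(y, 4))  # prints [1, 3] 2.5379
```

This sketch renormalizes the gate weights over the selected experts only; MoE designs differ in this detail. The same principle explains DeepSeek-V2's numbers: with 21 of 236 billion parameters active, only about 9% of the model does work for any given token.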

DeepSeek-V2 is available on Hugging Face. In addition, DeepSeek offers a cost-effective API for DeepSeek-V2.

Our thoughts

According to the benchmarks, DeepSeek-V2 appears to be a powerful open-source LLM, with particular strengths in math and coding tasks. It works especially well in Chinese because it was trained mainly on Chinese data. However, it also has weaknesses: the first tests show that the model answers questions from a Chinese perspective.

More information

DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model - arXiv

DeepSeek-V2 is a Chinese flagship open source Mixture-of-Experts model - The Decoder

Magic AI tool of the week

🪄 CastMagic* - Automate tedious tasks with ease

Do you find writing meeting summaries or follow-up e-mails tedious? Then you should check out CastMagic! This tool turns your audio recordings into written content.

Imagine you have just finished a sales call. Now you need a summary of the call, including the customer's name, the questions asked, the sentiment, the next steps, and so on. Sounds familiar?

CastMagic can do all of this for you. It handles the tedious work, so you can focus on the conversation with your customer. It's a win for you and your customers.

Articles of the week

📖 You might also be interested in these blog articles:

Understand and Implement an Artificial Neural Network from Scratch

Responsible Development of an LLM Application + Best Practices

Thanks for reading, and see you next time.

- Tinz Twins

P.S. Have a nice weekend! 😉😉

* Disclosure: Links marked with * are affiliate links, which means we receive a commission if you purchase through them. This comes at no additional cost to you.

Written by

Tinz Twins

Hey, we are the Tinz Twins! 👋🏽 👋🏽 We both have a Bachelor's degree in Computer Science and a Master's degree in Data Science. In our blog articles, we deal with topics around Data Science.