Sidecar Containers at work!

Rahul Poonia

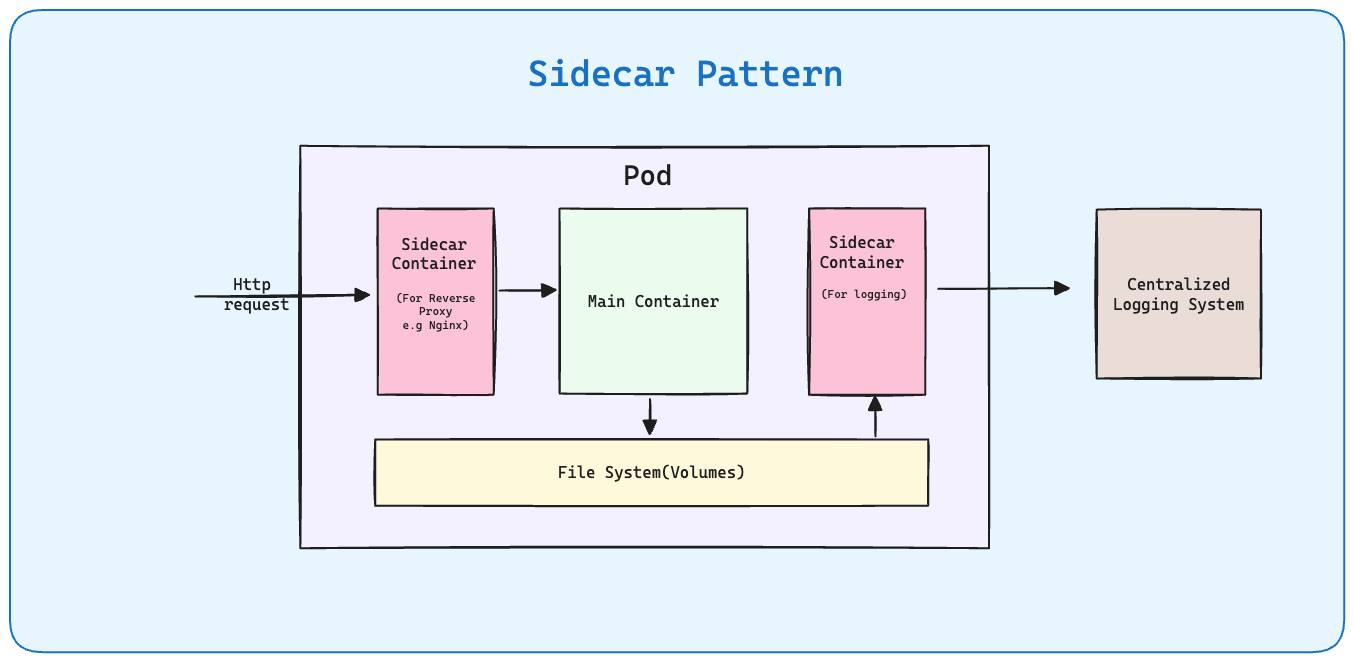

The sidecar pattern is a software architectural pattern in which an additional container, known as a "sidecar container," is deployed alongside the main application container within a pod in a containerized environment such as Kubernetes. The sidecar container plays a supporting role for the main application container.

The following are the key aspects of sidecar containers:

Separation of concerns : The main container deals with the core business functionality, while the additional container handles a supporting role such as logging.

Independent lifecycle : Sidecar containers can be started, stopped and restarted independently of the app containers.

Shared resources : The containers share the resources of the same pod, including its storage volumes and network namespace.

Latency : Communication between the main and sidecar containers has very low latency, as they share the same network namespace.

We will explore sidecar containers by building a real-world application.

Components of architecture

We will be creating a Flask application that returns a simple "Hello, World!" response to a GET API call. This application represents the main business logic. We will support it with two more pieces of functionality using the sidecar pattern: a reverse proxy and logging. The architecture would look something like this:

Here are the major components of the architecture:

Main container : This container runs the main business application.

Nginx sidecar container : Nginx is used for reverse proxying requests to the main container. In a real-world application, we need a web server like Nginx that can act as a reverse proxy for our main application. This helps in handling a large number of requests in a scalable and resilient way with minimal latency.

Sidecar container for logging : Something like logging can be outsourced to a separate container. This container can be tasked with forwarding the logs to a centralized logging system for further analysis.

File system : For sharing some data across containers.

All three containers run in a single pod of the Kubernetes cluster. They share the pod's resources in terms of CPU and memory.

Prerequisites : You have a Kubernetes cluster up and running, either on your local system using minikube or on a cloud platform. I will be using Azure Kubernetes Service (AKS). The commands remain exactly the same.

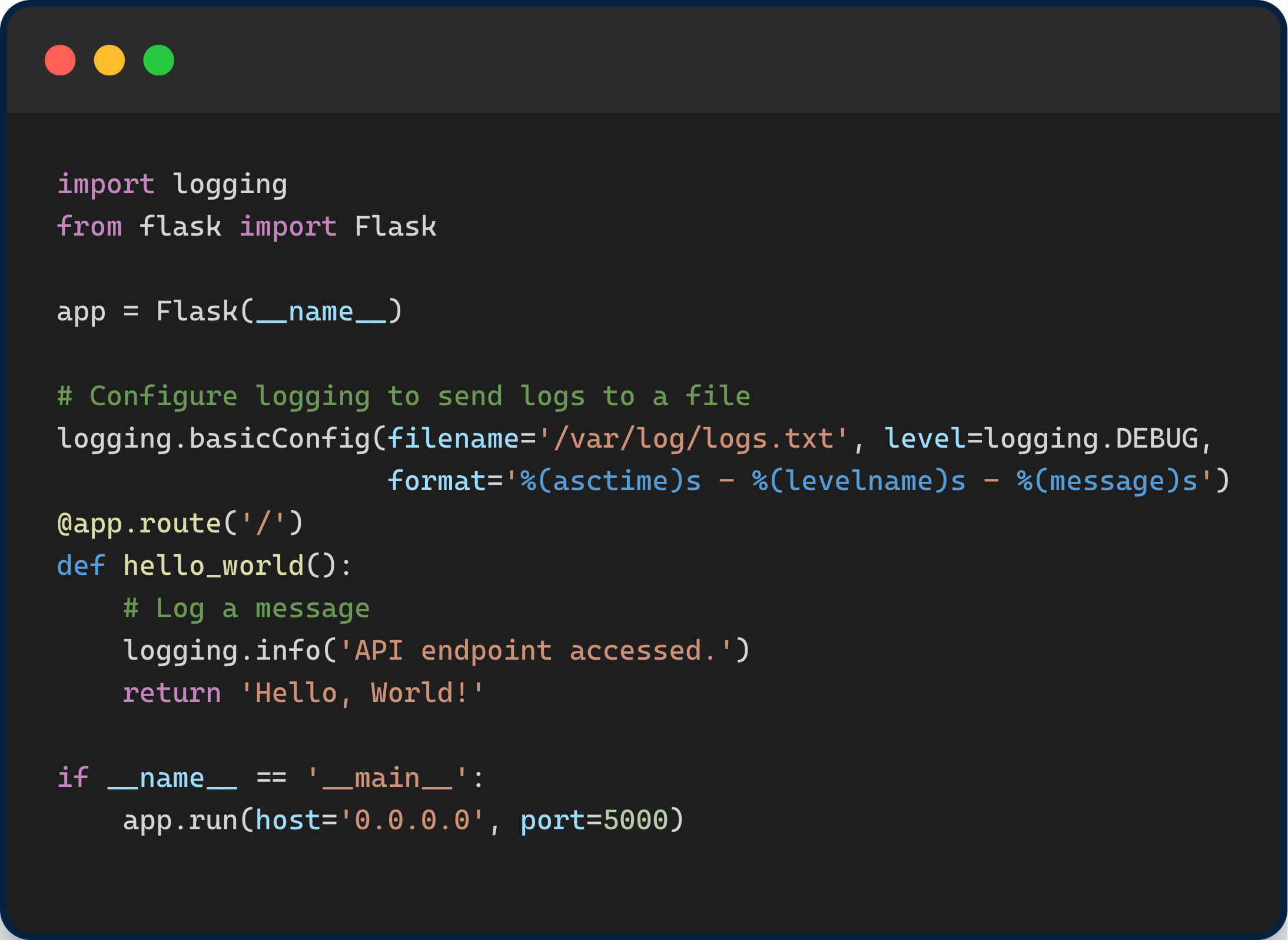

Flask Application

First of all, we will create a simple Flask application, app.py. This app has an endpoint that returns Hello, World! We will also configure logging so that every time we receive a request, we log it to a separate file at var/log/logs.txt.
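A minimal sketch of what such an app.py might look like is given below. It assumes Flask is installed and that var/log/logs.txt means the absolute path /var/log/logs.txt. The `create_app` factory and the `request-log` logger name are hypothetical names chosen for illustration, not necessarily what the original code uses.

```python
import logging
import os

from flask import Flask, request

# Assumption: the article's var/log/logs.txt is the absolute path below.
DEFAULT_LOG_PATH = "/var/log/logs.txt"


def create_app(log_path: str = DEFAULT_LOG_PATH) -> Flask:
    """Build the Flask app and attach a file logger for incoming requests."""
    app = Flask(__name__)

    # Make sure the log directory exists before opening the file.
    os.makedirs(os.path.dirname(log_path) or ".", exist_ok=True)

    handler = logging.FileHandler(log_path)
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(message)s")
    )

    # Hypothetical logger name; one line is written per request.
    request_logger = logging.getLogger("request-log")
    request_logger.setLevel(logging.INFO)
    request_logger.handlers = [handler]
    request_logger.propagate = False

    @app.route("/", methods=["GET"])
    def hello():
        request_logger.info("%s %s", request.method, request.path)
        return "Hello, World!"

    return app
```

With a recent Flask release, something like `flask --app app run --host 0.0.0.0 --port 5000` should start it on port 5000, the port the rest of this walkthrough expects.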

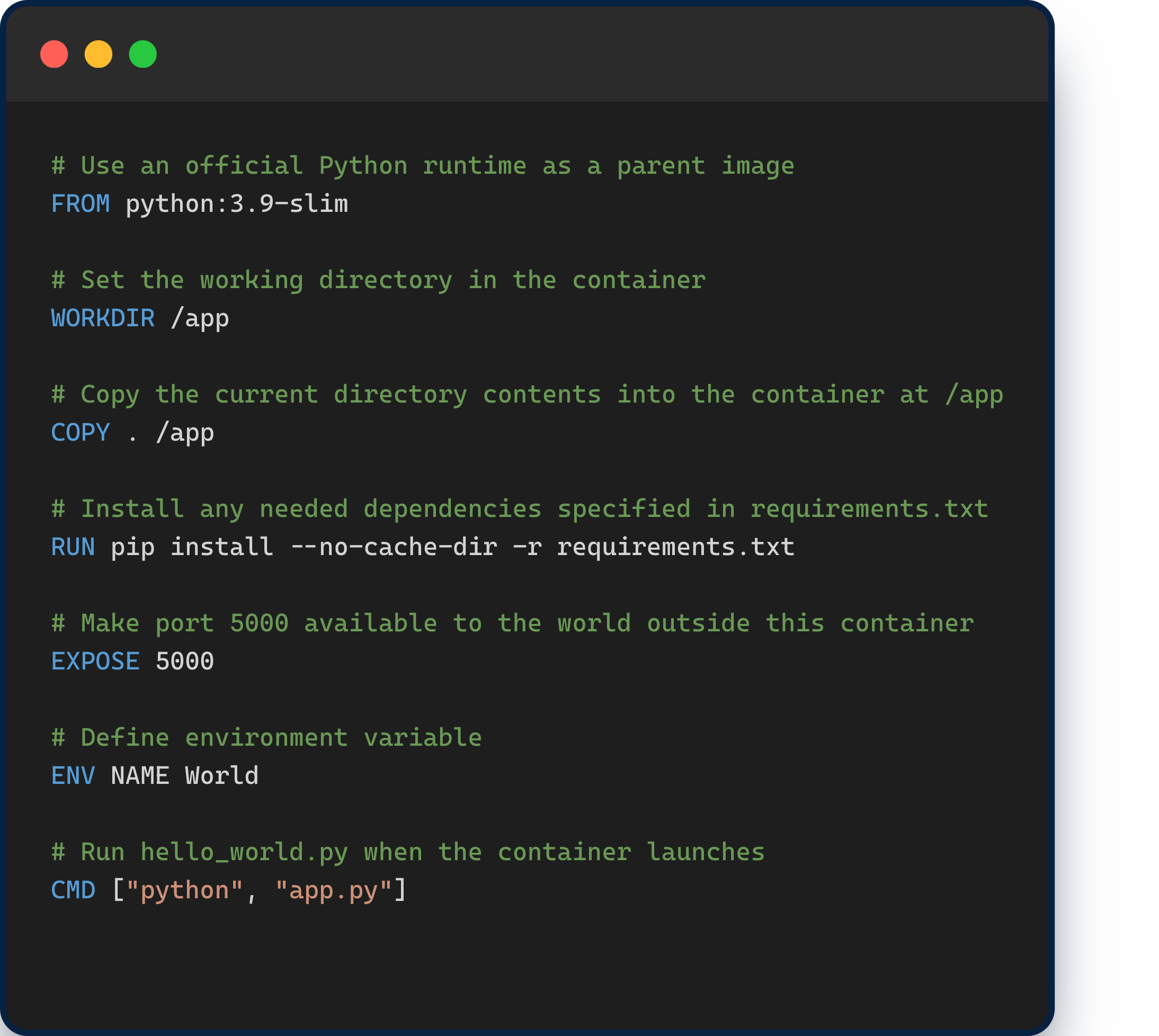

Dockerfile

To run the application in a container, we need to create a Dockerfile for it in the same directory. This will be a simple Dockerfile built on the Python base image.

Please see the code below, along with its comments, to understand how the Dockerfile is used to containerize this Flask application.

With this Dockerfile, we can build the image using the following command:

docker buildx build -t rahulpoonia20793/sidecarcontainers . --platform linux/amd64

Next, we can push the image to Docker Hub, so it can be pulled later when needed, using the following command:

docker push rahulpoonia20793/sidecarcontainers:latest

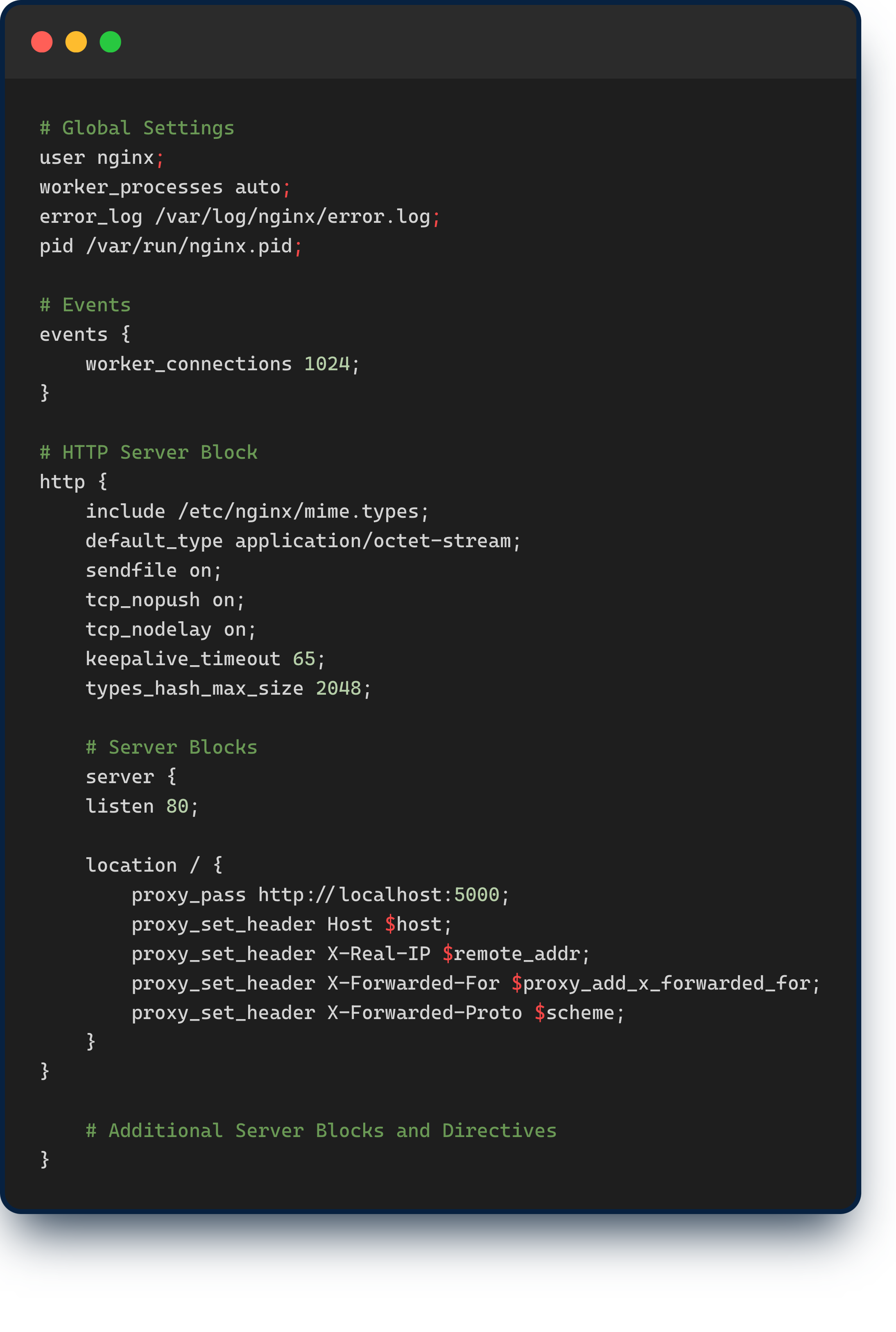

Sidecar container as a reverse proxy

Now that we have the image of the main application ready, we will work on setting up Nginx for reverse proxying. This Nginx server, running in its own container, will direct requests to our main application container.

We will be using the publicly available Nginx base image, nginx:latest, so we need not build an image for this part.

Nginx requires a config file to direct requests appropriately. Check out the following config file, nginx.conf, which needs to be added as well.

The most important thing to note here is that this server is configured to listen on port 80 and proxy_pass to localhost on port 5000. Localhost works because the Nginx container and the main application container are both part of the same network namespace within the same pod.
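To make the listen/proxy_pass relationship concrete, here is a small Python sketch that renders a config of that shape. It is only an illustration under a few assumptions: the file is used as the main nginx.conf (hence the `events` and `http` blocks), the two `proxy_set_header` lines are common additions rather than something taken from the original config, and `render_nginx_conf` is a hypothetical helper name.

```python
def render_nginx_conf(listen_port: int = 80, upstream_port: int = 5000) -> str:
    """Render an nginx.conf that proxies every request to the app on localhost."""
    # Doubled braces are literal braces inside an f-string.
    return f"""events {{}}

http {{
    server {{
        listen {listen_port};

        location / {{
            # Same pod, same network namespace, so localhost reaches the app.
            proxy_pass http://localhost:{upstream_port};
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
        }}
    }}
}}
"""


if __name__ == "__main__":
    # Redirect the output to nginx.conf to get a file for the config map.
    print(render_nginx_conf(), end="")
```

Keeping the two ports as parameters makes the coupling explicit: the upstream port must match whatever port the Flask container listens on.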

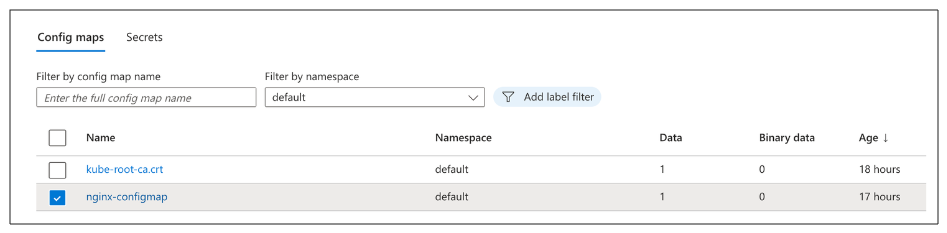

The Nginx server will read this config from a config map mounted at a volume path. We will create that config map in the Kubernetes cluster as follows:

kubectl create configmap nginx-configmap --from-file=nginx.conf

The config map will appear in the AKS cluster as follows:

Sidecar container as a log scraper

The third and last container that we need to set up is for logging purposes. We have configured the main application to write all logs to var/log/logs.txt, as shown above. This container will pick up those logs and send them to stdout. We could also configure it to send them to a centralized logging system.

To achieve this, we will use the alpine:latest base image, which is a lightweight Linux distribution. On top of this, we will just write a shell command that picks up logs from the above file and sends them to stdout.
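The article does this with a shell command on Alpine. Purely to show the behaviour we are after, here is a Python stand-in that follows a file and copies each completed line to stdout. The function names `drain` and `follow` are hypothetical, and this is a sketch of the idea rather than the command the sidecar actually runs.

```python
import sys
import time


def drain(handle) -> list:
    """Return all complete lines currently available on an open text file."""
    lines = []
    while True:
        position = handle.tell()
        line = handle.readline()
        if not line:
            break  # nothing new yet
        if not line.endswith("\n"):
            # Partial line: rewind and wait for the writer to finish it.
            handle.seek(position)
            break
        lines.append(line)
    return lines


def follow(path: str, poll_seconds: float = 1.0) -> None:
    """Copy lines appended to `path` to stdout forever, similar to tail -f."""
    while True:
        try:
            handle = open(path, "r")
            break
        except FileNotFoundError:
            # The app may not have written its first log line yet.
            time.sleep(poll_seconds)
    with handle:
        while True:
            for line in drain(handle):
                sys.stdout.write(line)
            sys.stdout.flush()
            time.sleep(poll_seconds)


# Example (runs until interrupted): follow("/var/log/logs.txt")
```

Because the sidecar only writes to stdout, `kubectl logs` on that container is enough to see the application's log lines.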

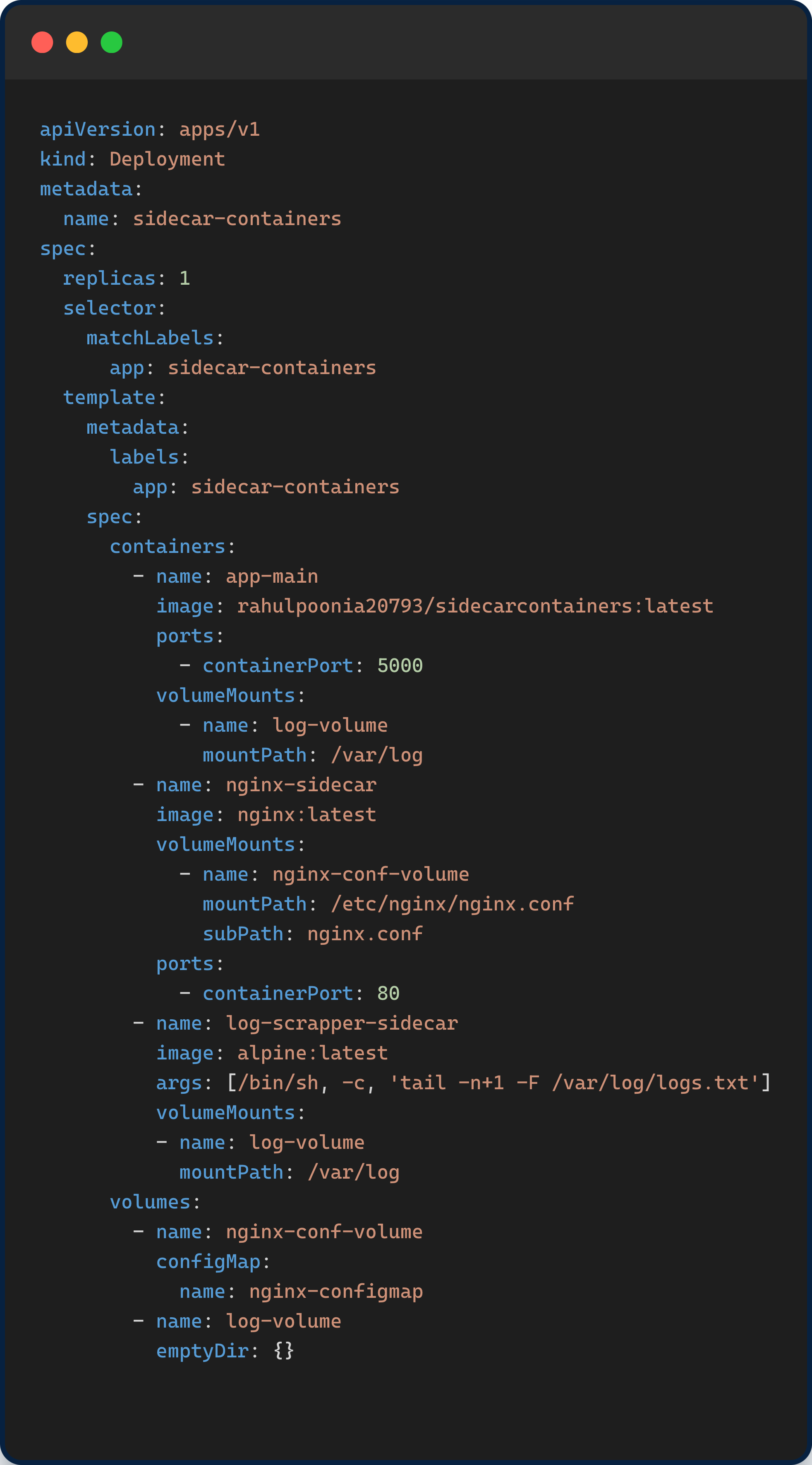

K8s Deployment Object

We need to create a deployment.yaml file that will be used to create the deployment in the Kubernetes cluster. Here is the YAML file to be used:

Let's understand this file by breaking it down into its elements.

volumes

In Kubernetes, a volume is a directory, possibly with some data in it, which is accessible to the containers in a Pod. When we define a volume in a Kubernetes Deployment object, we are specifying a volume that any container in the pods created by that deployment can mount. There are two volumes defined:

log-volume : To work with the logs generated by the app-main container.

nginx-conf-volume : To make the Nginx config map accessible to the nginx-sidecar container.

app-main container

This element has the following fields:

Docker image : rahulpoonia20793/sidecarcontainers:latest, the image published above, to be used for the deployment.

Port : Port 5000, where our application will be listening in the container.

Volume mount : log-volume, the directory where logs will be written.

nginx-sidecar container

This element has the following fields:

Docker image : nginx:latest, to be used for the deployment.

Port : Port 80, where this reverse proxy will be listening in the container.

Volume mount : nginx-conf-volume, the volume from which the nginx-sidecar container will get its config.

log-scrapper-sidecar container

This element has the following fields:

Docker image : alpine:latest, to be used for the deployment.

Args : A shell command that reads all the logs from the specified file.

Volume mount : log-volume, the directory from which this container will read logs.
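Putting the pieces described above together, the manifest could be sketched roughly as follows. It is written as a Python dict printed as JSON, which kubectl accepts just like YAML. Several details are assumptions: the deployment name is inferred from the pod name shown later in this article, the `app: sidecar-containers` label, the `emptyDir` volume type, the `/var/log` mount path, the `subPath` mount of nginx.conf and the `tail` command are all illustrative choices, and `build_deployment` is a hypothetical helper name.

```python
import json

APP_LABELS = {"app": "sidecar-containers"}  # assumed label
LOG_DIR = "/var/log"  # assumed mount path for log-volume


def build_deployment() -> dict:
    """Return a Deployment manifest with one pod running three containers."""
    log_mount = {"name": "log-volume", "mountPath": LOG_DIR}
    pod_spec = {
        "volumes": [
            # emptyDir lives as long as the pod; enough for sharing logs.
            {"name": "log-volume", "emptyDir": {}},
            {"name": "nginx-conf-volume",
             "configMap": {"name": "nginx-configmap"}},
        ],
        "containers": [
            {
                "name": "app-main",
                "image": "rahulpoonia20793/sidecarcontainers:latest",
                "ports": [{"containerPort": 5000}],
                "volumeMounts": [log_mount],
            },
            {
                "name": "nginx-sidecar",
                "image": "nginx:latest",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [{
                    "name": "nginx-conf-volume",
                    # Assumption: the config map key replaces the main config.
                    "mountPath": "/etc/nginx/nginx.conf",
                    "subPath": "nginx.conf",
                }],
            },
            {
                "name": "log-scrapper-sidecar",
                "image": "alpine:latest",
                # Assumed command: stream the shared log file to stdout.
                "args": ["/bin/sh", "-c",
                         f"tail -n+1 -F {LOG_DIR}/logs.txt"],
                "volumeMounts": [log_mount],
            },
        ],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "sidecar-containers"},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": APP_LABELS},
            "template": {
                "metadata": {"labels": APP_LABELS},
                "spec": pod_spec,
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_deployment(), indent=2))
```

Note that only the two containers that touch the log file mount log-volume, and only the Nginx container mounts the config map.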

We will use the following command to create the deployment:

kubectl apply -f deployment.yaml

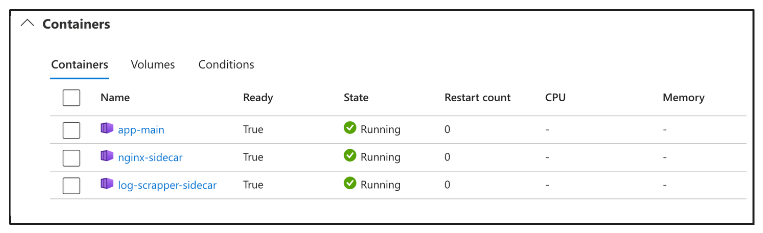

We shall see a single pod with three containers up and running as below:

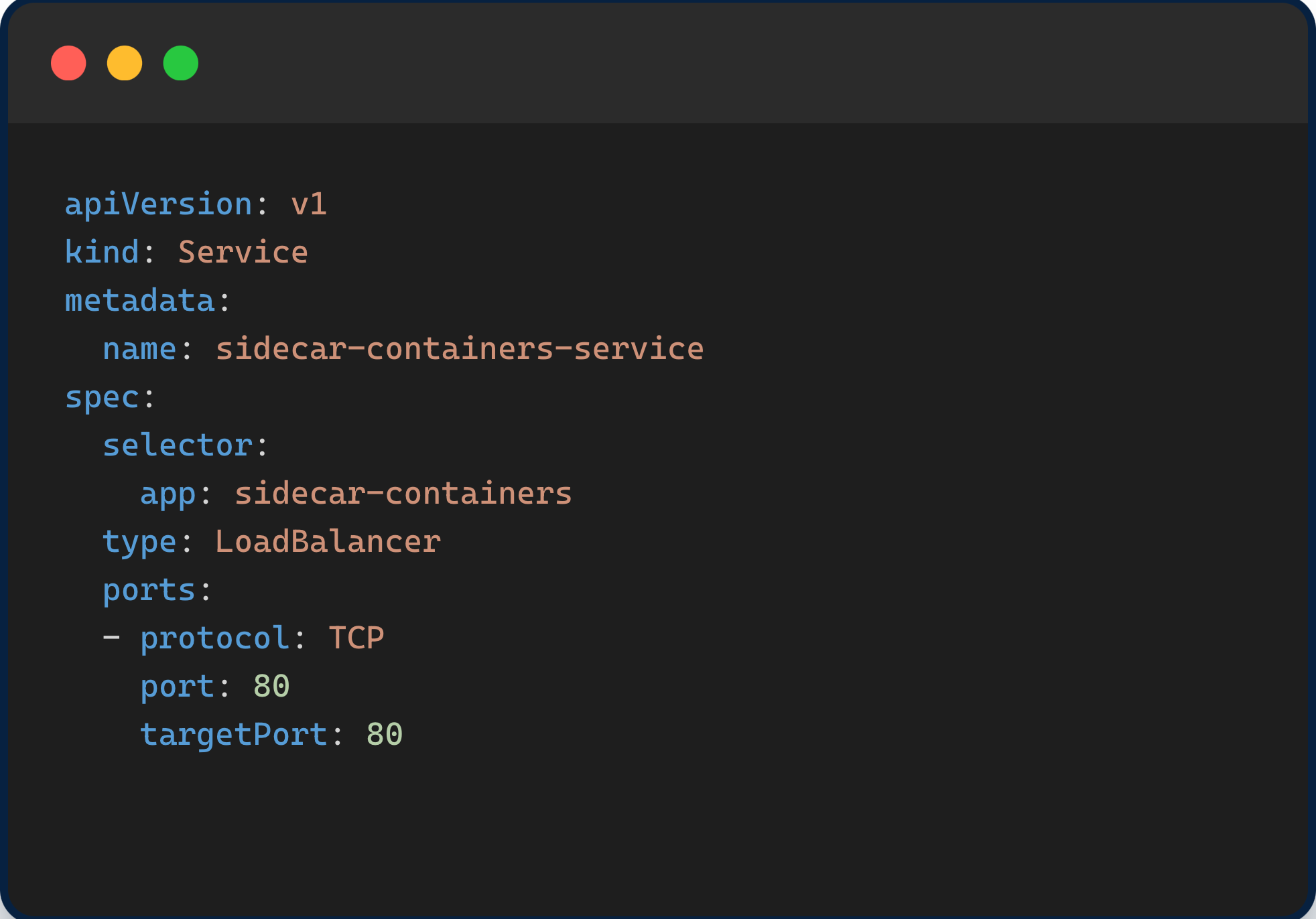

K8s Service Object

Finally, we need to make sure that this whole setup is accessible to the outside world over HTTP(S). The Nginx server receives requests from outside the Kubernetes cluster via a Service object. Pods are ephemeral: they are created and destroyed frequently. That's why we need a service layer that has a fixed IP address and is exposed to the world. The service.yaml file would look like this:
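As a rough sketch of such a Service, again expressed as a Python dict that prints JSON (which kubectl also accepts): the `LoadBalancer` type is assumed because the article later uses the service's external IP, while the service name, the `app: sidecar-containers` selector label and the `build_service` helper are hypothetical and must match whatever labels the pods actually carry.

```python
import json


def build_service() -> dict:
    """Return a Service manifest exposing the Nginx sidecar on port 80."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "sidecar-containers-service"},  # hypothetical name
        "spec": {
            # LoadBalancer asks the cloud provider for an external IP.
            "type": "LoadBalancer",
            # Assumed pod label; must equal the deployment's pod labels.
            "selector": {"app": "sidecar-containers"},
            "ports": [
                # Service port 80 forwards to Nginx's containerPort 80.
                {"protocol": "TCP", "port": 80, "targetPort": 80},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_service(), indent=2))
```

The service targets port 80, not 5000, so outside traffic always goes through the Nginx sidecar and never reaches the Flask container directly.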

We will use the following command to create the service object:

kubectl apply -f service.yaml

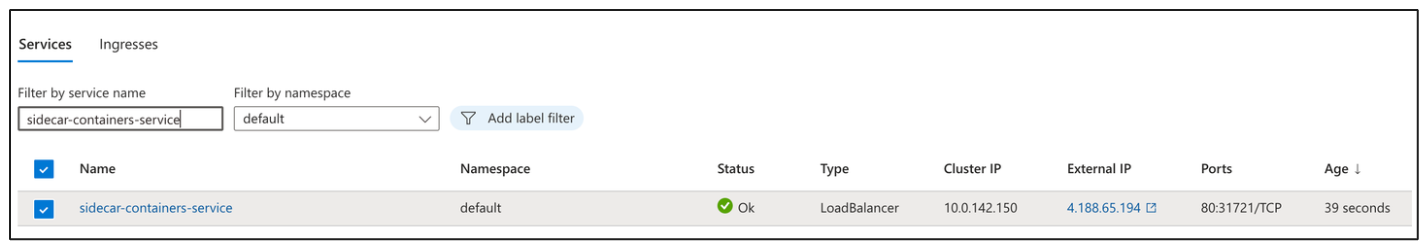

The service object looks like this.

Giving it a go!

Now that we have everything set up, we will test it out to see how things are working.

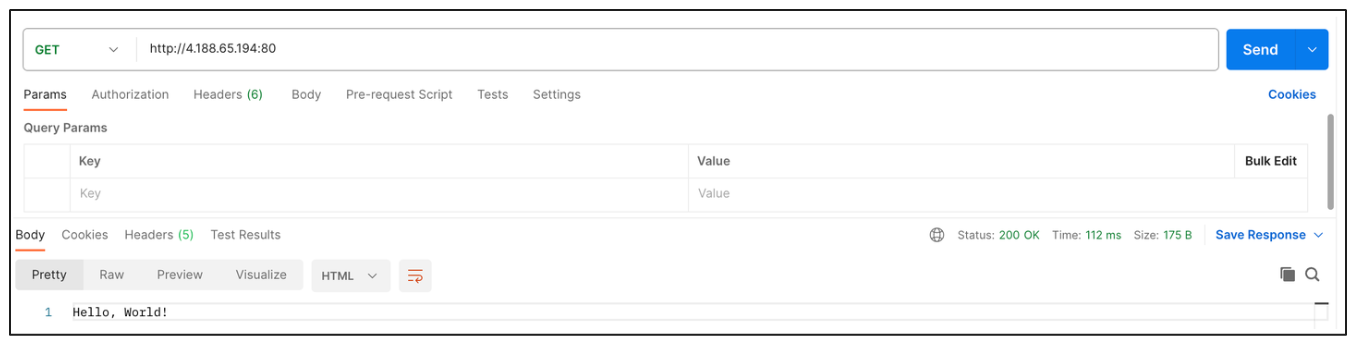

We will try out the hello world API using Service's External IP with port 80 as that is where the service is listening.

So we are receiving the correct response.

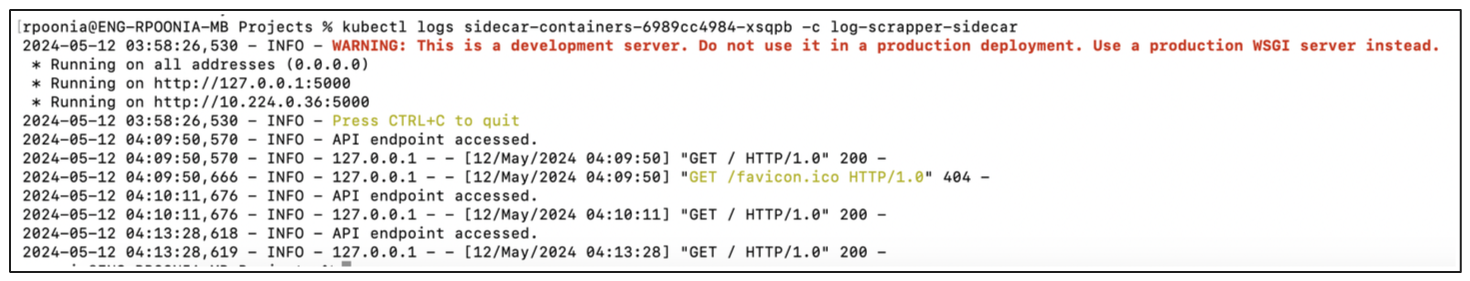

Let's check out the logs to verify everything. Commands to be used:

Get the pod first using command :

kubectl get pods

Get logs from pod name using command :

kubectl logs sidecar-containers-6989cc4984-xsqpb -c log-scrapper-sidecar

The logs we created using our application are visible on the stdout of the log-scrapper-sidecar container.

You can also check out the logs of nginx-sidecar similarly.

Conclusion

In conclusion, sidecar containers offer a powerful paradigm for enhancing the functionality, resilience, and manageability of applications deployed in Kubernetes and other container orchestration platforms. By running alongside primary application containers within the same Pod, sidecar containers extend the capabilities of the application without introducing complexity or dependencies into its core logic. As organizations continue to embrace microservices architectures and cloud-native technologies, sidecar containers emerge as indispensable components in the toolkit for building robust, flexible, and resilient distributed systems.

You can find the complete codebase here in my github account. Give it a star if you find it useful.

If you liked this blog, don't forget to give it a like. Also, follow my blog and subscribe to my newsletter. I share content related to software development and scalable application systems regularly on Twitter as well.

Do reach out to me!!

Cover image source : https://www.fermyon.com/blog/scaling-sidecars-to-zero-in-kubernetes
