Understanding Load Balancers: How They Work, Types, Algorithms, and Use Cases

Abhinav Singh

In web infrastructure, load balancers play a central role in performance, reliability, and scalability. As websites and applications handle growing traffic, incoming requests must be distributed efficiently. Load balancers act as traffic managers, spreading incoming requests across multiple servers so that no single server becomes overwhelmed. This guide explains how load balancers work, the main types, the algorithms that govern their operation, and their common use cases.

Introduction to Load Balancers

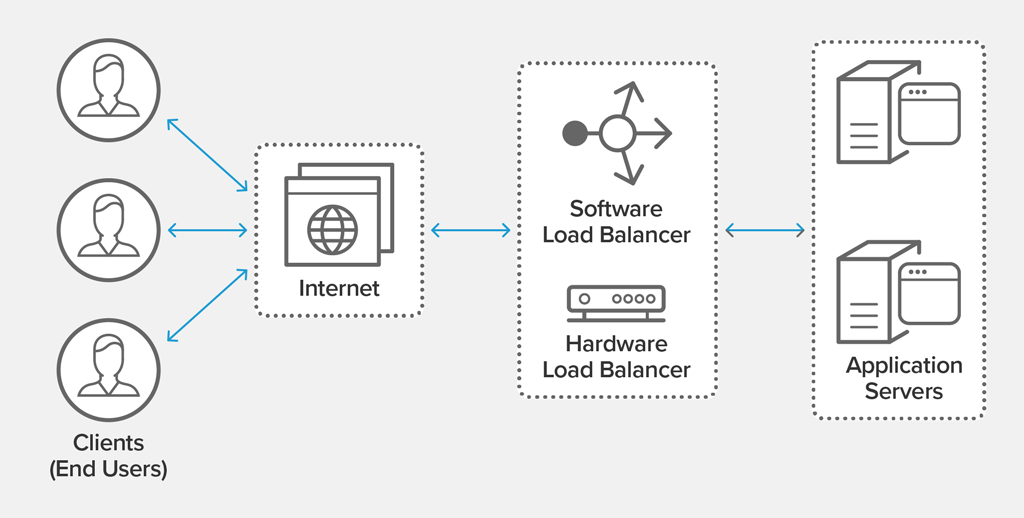

Load balancers serve as intermediaries between clients and servers, ensuring that incoming requests are distributed efficiently among multiple backend servers. By spreading the workload across servers, load balancers enhance the reliability, availability, and scalability of web applications.

How Load Balancers Work

Load balancers operate by receiving incoming traffic and distributing it across multiple servers based on predefined algorithms. They monitor the health and performance of backend servers, directing requests away from failed or overloaded servers. Load balancers can be hardware-based appliances or software-based solutions, often integrated into application delivery controllers (ADCs) or cloud-based services.
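The idea of steering requests away from failed servers can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the `Backend` and `LoadBalancer` classes, the `mark` method, and the server names are all hypothetical, and a real load balancer would update health status from periodic probes rather than manual calls.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    """A hypothetical backend server tracked by the load balancer."""
    name: str
    healthy: bool = True


class LoadBalancer:
    """Rotates through backends in order, skipping any marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._index = 0

    def mark(self, name, healthy):
        # In practice this would be driven by periodic health checks.
        for backend in self.backends:
            if backend.name == name:
                backend.healthy = healthy

    def pick(self):
        # Try each backend at most once per call, starting from the cursor.
        count = len(self.backends)
        for _ in range(count):
            backend = self.backends[self._index % count]
            self._index += 1
            if backend.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


lb = LoadBalancer([Backend("a"), Backend("b"), Backend("c")])
lb.mark("b", False)  # simulate a failed health check on server "b"
picks = [lb.pick().name for _ in range(4)]
print(picks)
```

With server `b` marked unhealthy, requests rotate between `a` and `c` only. Marking it healthy again returns it to the rotation.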

Types of Load Balancers

Layer 4 Load Balancers

Layer 4 load balancers operate at the transport layer of the OSI model, primarily focusing on routing decisions based on network and transport layer information such as IP addresses and TCP/UDP ports. They offer high-speed, low-latency traffic management, making them suitable for TCP and UDP-based protocols.
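As a rough illustration, a Layer 4 decision can be made from connection metadata alone, without reading the payload. The sketch below hashes the protocol, addresses, and ports to choose a backend. The function name `pick_backend_l4`, the addresses, and the backend names are assumptions made for this example, and real Layer 4 balancers may use other selection strategies.

```python
import hashlib


def pick_backend_l4(src_ip, src_port, dst_ip, dst_port, protocol, backends):
    """Choose a backend using only network and transport layer fields."""
    key = f"{protocol}:{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash onto the list of backends.
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


backends = ["s1", "s2", "s3"]
first = pick_backend_l4("203.0.113.7", 51000, "198.51.100.1", 443, "tcp", backends)
second = pick_backend_l4("203.0.113.7", 51000, "198.51.100.1", 443, "tcp", backends)
print(first == second)
```

Because the choice depends only on the connection's addresses and ports, every packet of the same connection maps to the same backend while the backend list is unchanged.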

Layer 7 Load Balancers

Layer 7 load balancers function at the application layer of the OSI model, providing advanced traffic management capabilities by inspecting application-level data such as HTTP headers, cookies, and content. They offer features like content-based routing, SSL termination, and session persistence, making them ideal for handling complex web applications and protocols.
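Content-based routing and cookie-based session persistence can be sketched as follows. This is a simplified example under stated assumptions: the `POOLS` mapping, the `backend` cookie name, the URL prefixes, and the `route_request` function are all hypothetical, and a real Layer 7 balancer would also balance within each pool rather than always returning its first server.

```python
from http.cookies import SimpleCookie

# Hypothetical server pools keyed by the kind of content they serve.
POOLS = {
    "api": ["api-1", "api-2"],
    "static": ["static-1"],
    "web": ["web-1", "web-2"],
}


def route_request(path, headers):
    """Pick a server from application-level data: cookie first, then URL path."""
    cookie = SimpleCookie()
    cookie.load(headers.get("Cookie", ""))
    pinned = cookie["backend"].value if "backend" in cookie else None

    # Session persistence: honor the pinned server if it is a known one.
    known_servers = {server for pool in POOLS.values() for server in pool}
    if pinned in known_servers:
        return pinned

    # Content-based routing on the request path.
    if path.startswith("/api/"):
        pool = POOLS["api"]
    elif path.startswith("/static/"):
        pool = POOLS["static"]
    else:
        pool = POOLS["web"]
    return pool[0]  # simplification: no balancing inside the pool


print(route_request("/api/users", {}))
print(route_request("/", {"Cookie": "backend=web-2"}))
```

The first call is routed by path to the API pool, while the second call follows the cookie to the server that handled the client's earlier requests.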

Load Balancing Algorithms

Load balancers employ various algorithms to distribute traffic effectively among backend servers. Some commonly used algorithms include:

Round Robin: Requests are distributed among servers sequentially in a circular order, so each server receives an equal share of requests.

Least Connections: Incoming requests are directed to the server with the fewest active connections, which helps balance the load when requests vary in duration.

IP Hash: The source IP address of the client is hashed to determine the destination server, ensuring session persistence for subsequent requests from the same client.

Least Response Time: Requests are routed to the server with the fastest response time, optimizing performance and user experience.

Weighted Round Robin: Servers are assigned weights based on their capacity or performance, allowing for a proportional distribution of traffic.
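The algorithms above can be sketched as small Python functions. These are minimal illustrations rather than production implementations: the function names and server names are made up for the example, connection counts and response times are passed in as plain dictionaries, and the weighted variant uses a naive expansion of the server list instead of the smoother schedules some load balancers use.

```python
import hashlib
from itertools import cycle


def round_robin(servers):
    """Return an iterator that yields servers in a repeating fixed order."""
    return cycle(servers)


def weighted_round_robin(weights):
    """Yield each server in proportion to its integer weight (naive expansion)."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


def least_connections(active_connections):
    """Return the server with the fewest active connections."""
    return min(active_connections, key=active_connections.get)


def least_response_time(average_ms):
    """Return the server with the lowest observed average response time."""
    return min(average_ms, key=average_ms.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server, stable while the server list is unchanged."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


rr = round_robin(["a", "b", "c"])
rr_picks = [next(rr) for _ in range(4)]

wrr = weighted_round_robin({"big": 2, "small": 1})
wrr_picks = [next(wrr) for _ in range(6)]

print(rr_picks)
print(wrr_picks)
print(least_connections({"a": 5, "b": 2, "c": 7}))
print(least_response_time({"a": 120.0, "b": 85.5, "c": 97.0}))
```

Note that `ip_hash` keeps a client on the same server only as long as the server list does not change. Adding or removing a server remaps many clients, which is one reason some systems use consistent hashing instead.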

Use Cases of Load Balancers

Load balancers find wide-ranging applications across various industries and environments. Some common use cases include:

High-traffic Websites: Load balancers help distribute incoming web traffic among multiple servers, ensuring optimal performance and availability for high-traffic websites.

Web Applications: Load balancers enable horizontal scaling of web applications by adding or removing servers dynamically based on demand, thus enhancing scalability and resource utilization.

Enterprise Networks: Load balancers are deployed in enterprise networks to improve application delivery, enhance security, and optimize resource utilization across data centers.

Cloud Infrastructure: Load balancers play a crucial role in cloud environments, facilitating dynamic scaling, fault tolerance, and efficient resource allocation across virtualized infrastructure.

Challenges and Considerations

While load balancers offer numerous benefits, they also pose certain challenges and considerations:

Complexity: Configuring and managing load balancers, especially in large-scale deployments, can be complex and require specialized expertise.

Performance Overhead: Load balancers introduce a certain level of performance overhead due to additional processing and routing logic.

Single Point of Failure: In some architectures, load balancers can become single points of failure, necessitating redundancy and failover mechanisms.

Security Concerns: Load balancers must be configured securely to prevent potential vulnerabilities and mitigate security risks.

Cost: Depending on the scale and features required, load balancers can incur significant costs, especially in cloud-based environments.

Conclusion

Load balancers are essential components of modern web infrastructure. By routing requests among multiple servers, they improve the reliability, scalability, and performance of web applications while making better use of available resources. Understanding the types of load balancers, the algorithms that govern them, and their use cases is essential for designing resilient and scalable web architectures. Their challenges, including complexity, performance overhead, single points of failure, security, and cost, must also be addressed to keep the infrastructure robust.
