What Happens When You Type 'google.com' in Your Browser and Press Enter?

Temitayo Daisi-Oso

Table of Contents:

- Introduction

- URL Parsing

- Domain Name System (DNS) Resolution

- Transmission Control Protocol/Internet Protocol (TCP/IP)

- Firewalls and Intrusion Detection Systems (IDS)

- Secure Sockets Layer (SSL)/Transport Layer Security (TLS) in HTTPS

- Load Balancing

- Web Servers

- Application Servers

- Database Servers

- Conclusion

Introduction

When users input "google.com" into their browsers and press Enter, a series of intricate operations ensues before content is rendered on their screens. This article walks through each phase of this process, explaining how the phases connect and what data is exchanged along the way, with Google's website serving as the running example.

URL Parsing

URL Parsing is the process of breaking down a URL (Uniform Resource Locator) into its component parts to understand its structure and extract relevant information. Let's explore this fundamental concept in web development, using Google as our case study.

Scheme

The first component of a URL is the scheme, which in this case is "https". The scheme indicates the protocol that will be used to access the resource, here Hypertext Transfer Protocol Secure (HTTPS). When a user types only "google.com", the browser supplies the scheme itself.

Domain

Following the scheme is the domain component. In our example, the domain is "www.google.com". This component identifies the specific location of the resource on the internet.

Path

The path specifies the specific resource or location on the server. For example, in "https://www.google.com/", the path is "/", indicating the root directory.

Query Parameters

Sometimes, URLs include query parameters, which are additional data appended to the URL to provide information to the server. For instance, in "https://www.google.com/search?q=url+parsing", the query parameter is "q=url+parsing", which indicates the search query.

Fragment

Fragments, also known as anchors, identify a particular section within a document. They are preceded by a hash (#) symbol. For example, in "https://www.google.com/#about", the fragment is "about", directing the browser to a specific section of the page. The browser handles the fragment locally and does not send it to the server.

Parsing and Resolution

When a user enters a URL into the browser, the browser parses the URL to extract its components. It then resolves the domain to an IP address using DNS (Domain Name System) lookup. After establishing a connection to the server, the browser sends an HTTP request, including any query parameters, to retrieve the requested resource. Finally, the server responds with the requested content, which the browser renders for the user to view.
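The component breakdown above can be reproduced with Python's standard `urllib.parse` module. This is a minimal sketch rather than a description of how any particular browser parses URLs; the helper name `parse_url` and the combined example URL are assumptions made for illustration.

```python
from urllib.parse import urlsplit, parse_qs


def parse_url(url: str) -> dict:
    """Split a URL into scheme, host, path, query parameters, and fragment."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "path": parts.path or "/",       # an empty path means the root
        "query": parse_qs(parts.query),  # "+" is decoded to a space
        "fragment": parts.fragment,
    }


# Hypothetical URL combining the article's query and fragment examples
info = parse_url("https://www.google.com/search?q=url+parsing#about")
for name, value in info.items():
    print(f"{name}: {value}")
```

Only the scheme, host, path, and query would travel to the server in a request; the fragment stays in the browser.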

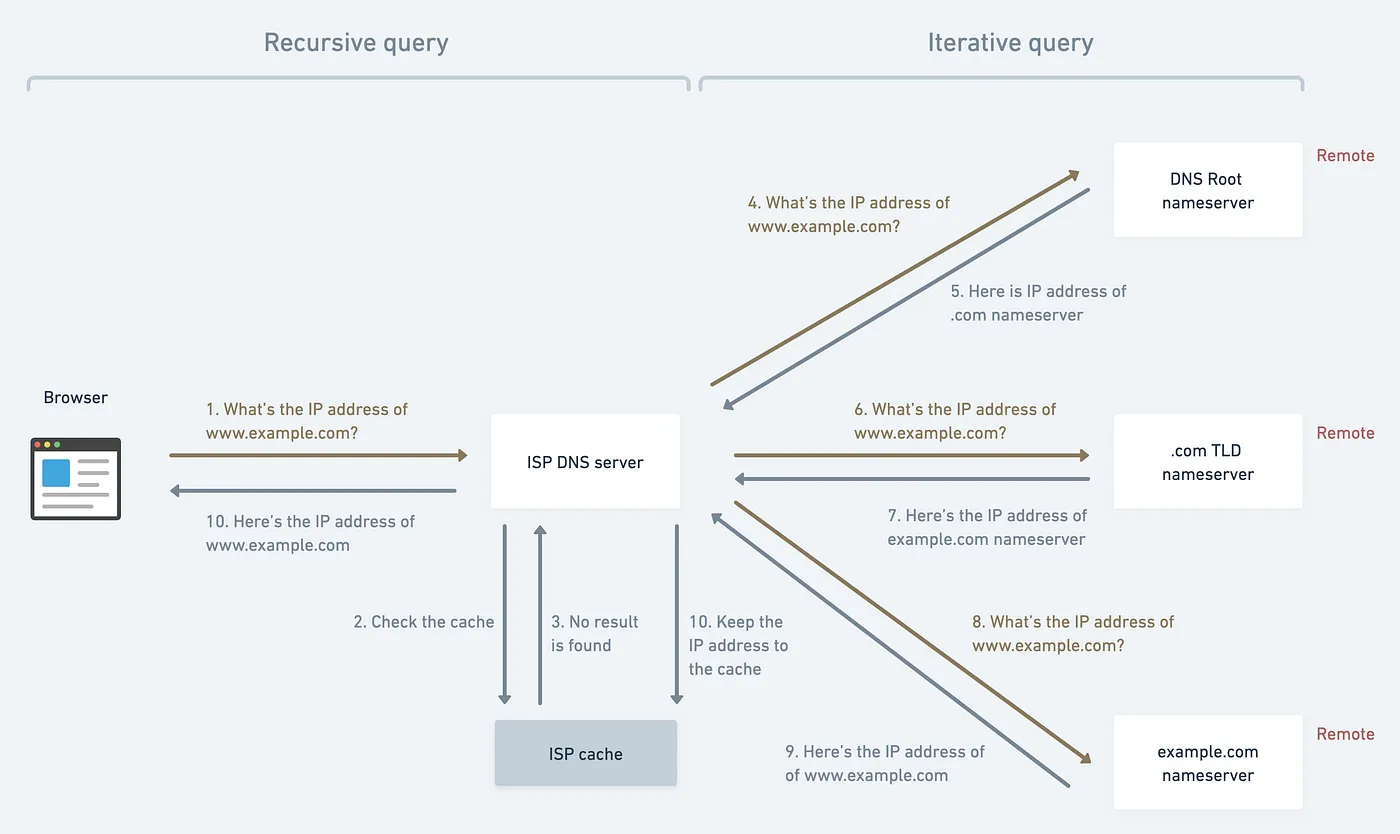

Domain Name System (DNS) Resolution

Following URL parsing, the browser initiates a DNS resolution process to map the domain name (e.g., google.com) to its corresponding IP address. This involves querying DNS servers, traversing the hierarchical domain structure, and caching results to expedite future lookups.

DNS Lookup

Upon receiving the URL request, the browser initiates a DNS (Domain Name System) lookup. It sends a query to a DNS resolver, typically provided by the Internet Service Provider (ISP) or configured in the network settings. A recursive query process starts.

Root Nameserver

If the DNS resolver doesn't have the IP address for "google.com" cached, it contacts a root name server. The root name server directs the resolver to the appropriate Top-Level Domain (TLD) server based on the domain extension (.com in this case).

TLD Nameserver

The resolver then contacts the TLD server responsible for the ".com" domain. The TLD server responds with a referral to the authoritative name servers for "google.com", typically along with their IP addresses.

Authoritative Nameserver

The resolver queries the authoritative name server for "google.com" to obtain the IP address associated with the domain.

IP Address Retrieval

The authoritative name server responds with the IP address of Google's servers (e.g., 172.217.3.78).

Connection

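Armed with the IP address, the browser can open a connection to Google's server and speak HTTP or HTTPS over it. The resolver, often operated by the ISP, also caches the answer for the duration of the record's time-to-live (TTL) so that later lookups can be answered without repeating the full process.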
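The lookup chain described in this section (cache, root, TLD, authoritative server) can be sketched as a small simulation. Nothing here touches the network: the dictionaries `ROOT`, `TLD`, and `AUTHORITATIVE`, the server labels, and the 300-second TTL are all hypothetical stand-ins, and the address is the example used in the article.

```python
import time

# Hypothetical in-memory "name servers"; real resolvers send network queries.
ROOT = {"com": "tld-com"}
TLD = {"tld-com": {"google.com": "ns-google"}}
AUTHORITATIVE = {"ns-google": {"google.com": ("172.217.3.78", 300)}}  # (address, TTL in seconds)


class Resolver:
    def __init__(self):
        self.cache = {}            # name -> (address, expiry time)
        self.upstream_lookups = 0  # how often the full chain was walked

    def resolve(self, name, now=None):
        now = time.monotonic() if now is None else now
        cached = self.cache.get(name)
        if cached and cached[1] > now:
            return cached[0]                             # answered from cache
        self.upstream_lookups += 1
        tld_server = ROOT[name.rsplit(".", 1)[-1]]       # root refers to the .com servers
        auth_server = TLD[tld_server][name]              # TLD refers to the authoritative server
        address, ttl = AUTHORITATIVE[auth_server][name]  # authoritative answer
        self.cache[name] = (address, now + ttl)
        return address


resolver = Resolver()
print(resolver.resolve("google.com", now=0))
print(resolver.resolve("google.com", now=100))  # still within the TTL, served from cache
print("full lookups so far:", resolver.upstream_lookups)
```

Passing `now` explicitly keeps the example deterministic; leaving it out uses the system's monotonic clock.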

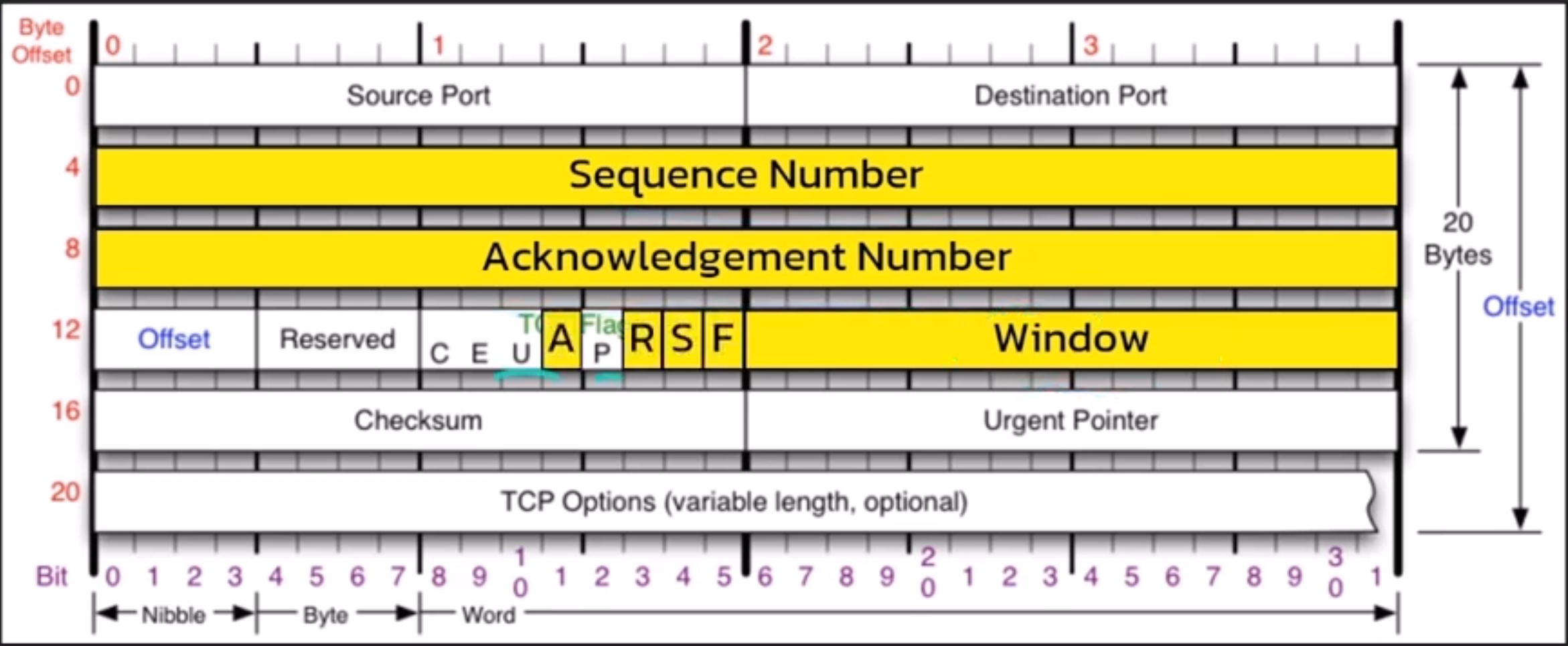

Transmission Control Protocol/Internet Protocol (TCP/IP)

Armed with the resolved IP address, the browser establishes a TCP/IP connection with the designated web server. TCP/IP governs the reliable transmission of data packets across networks, orchestrating processes such as packet encapsulation, routing, and error detection to ensure data integrity and delivery.

TCP/IP 3-Way Handshake

Critical to connection establishment is the TCP/IP 3-way handshake, a synchronized exchange of control messages between client and server.

Through an exchange of SYN, SYN-ACK, and ACK packets, both parties exchange initial sequence numbers, agree on options such as the maximum segment size, and confirm that each side can send and receive, laying the groundwork for subsequent data transmission.
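The sequence and acknowledgment numbers in the three segments follow a simple pattern, which the sketch below models. It is a pure simulation with no real sockets; the `Segment` class, the function name, and the initial sequence numbers 1000 and 5000 are assumptions chosen for readability (real stacks pick unpredictable initial values).

```python
from dataclasses import dataclass
from typing import List, Optional

MOD = 2**32  # TCP sequence numbers are 32-bit and wrap around


@dataclass
class Segment:
    flags: str
    seq: int
    ack: Optional[int] = None


def three_way_handshake(client_isn: int, server_isn: int) -> List[Segment]:
    """Return the three segments exchanged when a connection is opened."""
    syn = Segment("SYN", seq=client_isn)
    # The server acknowledges the client's SYN, which consumes one sequence number.
    syn_ack = Segment("SYN-ACK", seq=server_isn, ack=(syn.seq + 1) % MOD)
    # The client acknowledges the server's SYN in the same way.
    ack = Segment("ACK", seq=syn_ack.ack, ack=(syn_ack.seq + 1) % MOD)
    return [syn, syn_ack, ack]


for segment in three_way_handshake(client_isn=1000, server_isn=5000):
    print(segment)
```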

Firewalls and Intrusion Detection Systems (IDS)

To fortify network defences, firewalls and IDS come into play, enforcing access control policies and detecting anomalous activities. Firewalls act as a barrier between a trusted internal network and untrusted external networks, such as the Internet. They monitor and control incoming and outgoing network traffic based on predetermined security rules.

Stateful Inspection

One of the core techniques employed by firewalls is stateful inspection. Unlike traditional packet-filtering firewalls that examine individual packets in isolation, stateful inspection maintains context about active connections. It tracks the state of active connections and only permits packets that belong to established sessions, thus enhancing security by preventing unauthorized access.
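As a rough illustration of connection tracking, the sketch below remembers flows opened from the inside and admits inbound packets only when they are the reverse of a tracked flow. The class `StatefulFirewall` and the addresses are hypothetical, and real firewalls also track TCP state, timeouts, and connection teardown.

```python
class StatefulFirewall:
    def __init__(self):
        self.established = set()  # tracked (src, sport, dst, dport) flows

    def outbound(self, src, sport, dst, dport):
        """Record a connection initiated by an internal host."""
        self.established.add((src, sport, dst, dport))

    def allow_inbound(self, src, sport, dst, dport):
        """Permit an inbound packet only if it answers a tracked flow."""
        return (dst, dport, src, sport) in self.established


fw = StatefulFirewall()
fw.outbound("10.0.0.5", 50000, "172.217.3.78", 443)

print(fw.allow_inbound("172.217.3.78", 443, "10.0.0.5", 50000))  # reply to a tracked flow
print(fw.allow_inbound("203.0.113.9", 443, "10.0.0.5", 50000))   # unsolicited packet
```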

Packet Filtering

Packet-filtering firewalls inspect packets based on predefined criteria, such as source and destination IP addresses, ports, and protocol types. They compare packet headers against a set of rules to determine whether to allow or block the packets. By filtering packets at the network layer, these firewalls provide a basic level of protection against unauthorized access and network-based attacks.
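Header-based filtering amounts to checking each packet against an ordered rule list and applying the first match. The following sketch assumes a made-up rule set (`RULES`) and a simplified packet described only by source address, destination port, and protocol.

```python
from ipaddress import ip_address, ip_network
from typing import NamedTuple, Optional


class Rule(NamedTuple):
    action: str
    src_net: str
    dst_port: Optional[int]  # None matches any port
    protocol: str            # "any" matches any protocol


# Hypothetical policy: HTTPS from anywhere, SSH only from the internal network.
RULES = [
    Rule("allow", "0.0.0.0/0", 443, "tcp"),
    Rule("allow", "10.0.0.0/8", 22, "tcp"),
    Rule("deny", "0.0.0.0/0", None, "any"),
]


def decide(src_ip: str, dst_port: int, protocol: str) -> str:
    """Return the action of the first rule whose header fields match."""
    for rule in RULES:
        if (ip_address(src_ip) in ip_network(rule.src_net)
                and rule.dst_port in (None, dst_port)
                and rule.protocol in ("any", protocol)):
            return rule.action
    return "deny"  # default when no rule matches


print(decide("198.51.100.7", 443, "tcp"))
print(decide("198.51.100.7", 22, "tcp"))
```

Because each packet is judged on its own headers, this filter keeps no memory of earlier packets, which is the limitation stateful inspection addresses.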

Application Layer Proxies

Application layer proxies operate at the application layer of the OSI model, allowing them to inspect and filter traffic at a higher level of granularity. Instead of merely examining packet headers, application layer proxies analyze the contents of data packets, enabling more advanced filtering based on application-specific protocols and content types. This deep packet inspection capability enhances security by detecting and blocking malicious or unauthorized content before it reaches the internal network.

Anomaly Detection

IDS employ anomaly detection techniques to identify deviations from normal patterns of network traffic. By establishing baseline profiles of normal network behavior, IDS can detect and alert administrators to anomalous activities that may signify security incidents, such as unusual spikes in network traffic, unexpected changes in user behavior, or unauthorized access attempts.
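One simple way to express "deviation from a baseline" is a z-score test: flag a measurement that lies more than a chosen number of standard deviations from the baseline mean. The sketch below uses that technique as an assumption; the article does not say which statistical method an IDS uses, and the traffic figures and threshold of 3 are invented for the example.

```python
from statistics import mean, stdev


def is_anomalous(baseline, observed, threshold=3.0):
    """Flag a value that is far from the baseline mean, measured in standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical requests-per-minute samples collected during normal operation
baseline = [100, 104, 98, 101, 97, 103, 99, 102]

print(is_anomalous(baseline, 102))  # within normal variation
print(is_anomalous(baseline, 500))  # sudden spike
```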

Signature-Based Detection

In addition to anomaly detection, IDS utilize signature-based detection methods to identify known patterns of malicious activity, such as virus signatures, malware payloads, or attack signatures associated with specific exploits. IDS compare network traffic against a database of predefined signatures and generate alerts when a match is found, enabling rapid detection and response to known threats.
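At its simplest, signature matching is a search for known byte patterns in a payload. The signature names and patterns below are hypothetical examples, and production systems use far richer rule languages than a substring test.

```python
# Hypothetical signature database: name -> byte pattern
SIGNATURES = {
    "sql-injection-probe": b"' OR '1'='1",
    "path-traversal": b"../../",
}


def match_signatures(payload: bytes) -> list:
    """Return the names of all signatures found in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern in payload]


print(match_signatures(b"GET /index.html?file=../../etc/passwd HTTP/1.1"))
print(match_signatures(b"GET / HTTP/1.1"))
```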

Secure Sockets Layer (SSL)/Transport Layer Security (TLS) in HTTPS

HTTPS (Hypertext Transfer Protocol Secure) is the protocol used for secure communication on the web. It builds on HTTP by adding a layer of encryption, so that data transmitted between the client and server stays confidential and cannot be modified undetected. HTTPS relies on TLS (Transport Layer Security), the successor to the now-deprecated SSL (Secure Sockets Layer); the combined name SSL/TLS is still widely used and is kept in the rest of this section.

Encryption Mechanisms

SSL/TLS protocols employ various encryption mechanisms to protect data transmissions from eavesdropping and tampering attempts. This includes symmetric encryption, where data is encrypted and decrypted using the same key, and asymmetric encryption, where a pair of public and private keys are used for encryption and decryption. These encryption mechanisms ensure that even if intercepted, the data remains unreadable to unauthorized parties.

SSL/TLS Handshake

The SSL/TLS handshake is a crucial process that occurs when establishing a secure connection between the client and server. It involves several steps:

ClientHello: The client initiates the handshake by sending a "ClientHello" message to the server, indicating support for SSL/TLS and listing the cryptographic algorithms and protocols it can use.

ServerHello: The server responds with a "ServerHello" message, selecting the highest version of SSL/TLS supported by both the client and server and specifying the chosen cryptographic parameters.

Certificate Exchange: The server sends its digital certificate to the client, which contains the server's public key and other identifying information. The client verifies the authenticity of the certificate, ensuring that it was issued by a trusted Certificate Authority (CA) and has not been tampered with.

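Key Exchange: The client and server perform a key exchange to establish a shared secret key for symmetric encryption. In older RSA-based handshakes the client encrypts a secret with the public key from the server's certificate; in current versions of TLS the two sides typically use an ephemeral Diffie-Hellman exchange, and the certificate's key is used to authenticate the server. The resulting key will be used to encrypt and decrypt data transmitted between them.

Cipher Negotiation: The client and server agree on the encryption algorithm and parameters to be used for securing the connection by selecting a cipher suite that provides the desired level of security and performance. In practice this choice is made during the ClientHello and ServerHello exchange: the client offers a list and the server picks from it.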

Session Establishment: Once the key exchange and cipher negotiation are complete, the SSL/TLS handshake culminates in the establishment of a secure communication channel between the client and server. Subsequent data transmissions are encrypted using the agreed-upon encryption algorithms and keys, ensuring confidentiality and integrity.
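To make the key exchange step concrete, here is a toy Diffie-Hellman key agreement, the family of techniques used by modern TLS handshakes. It shows how two parties can derive the same symmetric key while exchanging only public values. The prime `P`, the generator `G`, and the helper names are assumptions for illustration; the parameters are not secure, and real TLS uses standardized groups or elliptic curves through a vetted library.

```python
import hashlib
import secrets

# Toy parameters only: far too small and unvetted for real use.
P = 2**127 - 1  # a known Mersenne prime
G = 3


def keypair():
    """Generate a private exponent and the matching public value."""
    private = secrets.randbelow(P - 3) + 2  # in the range [2, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_key(own_private, peer_public):
    """Derive a 32-byte symmetric key from the shared secret."""
    secret = pow(peer_public, own_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


client_private, client_public = keypair()
server_private, server_public = keypair()

# Each side combines its own private value with the other's public value.
client_key = shared_key(client_private, server_public)
server_key = shared_key(server_private, client_public)
print("keys match:", client_key == server_key)
```

Only the public values cross the network; the private exponents and the derived key never do.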

Certificate Validation

As part of the SSL/TLS handshake, the client verifies the authenticity of the server's digital certificate to ensure that it has been issued by a trusted CA and belongs to the intended server. This process helps prevent man-in-the-middle attacks and ensures the integrity of the communication channel.
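On the client side, Python's standard `ssl` module exposes these validation settings directly. The sketch below only builds a client context and inspects it; it makes no network connection. Raising the minimum version to TLS 1.2 is an assumption added for the example, not something the article prescribes.

```python
import ssl

# A default client context trusts the system's CA certificates
# and enables both certificate and hostname verification.
context = ssl.create_default_context()

# Assumption for this example: refuse protocol versions older than TLS 1.2.
context.minimum_version = ssl.TLSVersion.TLSv1_2

print("certificate required:", context.verify_mode == ssl.CERT_REQUIRED)
print("hostname checked:", context.check_hostname)
```

A socket wrapped with this context, using `context.wrap_socket(sock, server_hostname=...)`, would fail the handshake if the certificate chain or hostname did not validate.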

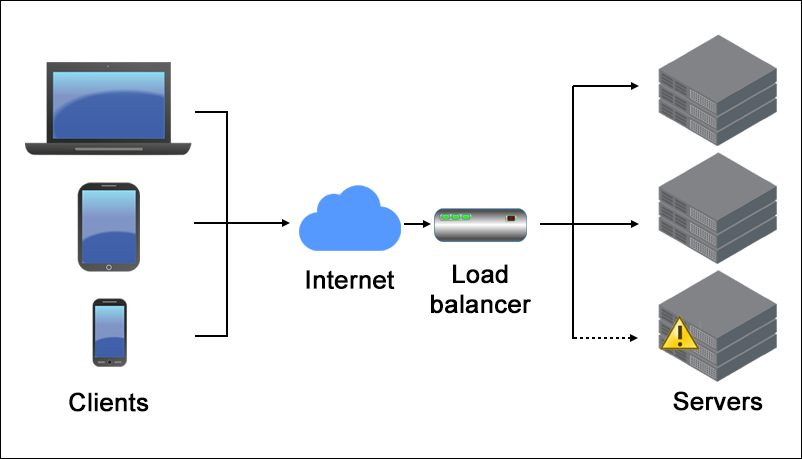

Load Balancing

Load balancers like HAProxy orchestrate traffic distribution across server clusters, optimizing resource utilization and ensuring fault tolerance. Employing algorithms such as round-robin and least connections, load balancers dynamically adapt to traffic patterns, enhancing scalability and availability.

When you type "google.com" into your browser, load balancing orchestrates traffic distribution across Google's server clusters to optimize resource utilization and ensure fault tolerance.

Incoming Request Handling

As soon as you hit Enter after typing "google.com", your browser sends an HTTP request to access the Google website. This request is received by a load balancer, which acts as the entry point to the server infrastructure. HAProxy is used throughout this section as a representative example of such a load balancer.

Traffic Distribution

HAProxy dynamically distributes incoming requests across multiple backend servers hosting the Google website. It employs algorithms like round-robin or least connections to evenly spread the workload and prevent any single server from becoming overwhelmed with traffic.
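The two algorithms named above are easy to sketch. The classes below are illustrative stand-ins rather than HAProxy's implementation, and the server names are hypothetical.

```python
from itertools import cycle


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self):
        server = min(self.active, key=self.active.get)  # ties go to the first listed
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1  # call when a connection closes


rr = RoundRobin(["web-1", "web-2", "web-3"])
print([rr.pick() for _ in range(4)])

lc = LeastConnections(["web-1", "web-2"])
print(lc.pick(), lc.pick())
lc.release("web-2")
print(lc.pick())
```

Round-robin ignores how busy each server is, while least connections favors servers whose requests finish quickly.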

Optimized Performance

By distributing traffic efficiently, HAProxy ensures that each backend server receives an appropriate share of requests, maximizing resource utilization and minimizing response times. This optimization enhances the overall performance of the Google website, allowing it to handle large volumes of traffic without experiencing slowdowns or downtime.

Fault Tolerance

HAProxy plays a crucial role in ensuring fault tolerance for the Google website. It continuously monitors the health and availability of backend servers, automatically removing any servers that may be experiencing issues or downtime from the load-balancing pool. This proactive approach helps maintain the availability and reliability of the Google website, even in the event of server failures or maintenance.
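Health-based pool management can be sketched as a failure counter per server: a server leaves the pool after several consecutive failed checks and returns once a check succeeds. The `Pool` class and the failure threshold are assumptions for illustration and do not reflect HAProxy's actual configuration options.

```python
class Pool:
    def __init__(self, servers, max_failures=3):
        self.failures = {server: 0 for server in servers}
        self.max_failures = max_failures

    def report(self, server, healthy):
        """Record the result of one health check."""
        self.failures[server] = 0 if healthy else self.failures[server] + 1

    def available(self):
        """Servers that have not reached the consecutive-failure limit."""
        return [s for s, count in self.failures.items() if count < self.max_failures]


pool = Pool(["web-1", "web-2"], max_failures=2)
pool.report("web-2", healthy=False)
pool.report("web-2", healthy=False)
print(pool.available())             # web-2 is out after two failed checks
pool.report("web-2", healthy=True)
print(pool.available())             # web-2 is back after a successful check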

Scalability

As the number of users accessing the Google website fluctuates throughout the day, the pool of backend servers has to change with it. HAProxy does not start or stop servers itself; an autoscaling or orchestration system adds or removes backends based on demand, and the load balancer spreads traffic across whichever servers are currently in the pool, so that the website remains responsive regardless of fluctuations in traffic volume.

Web Servers

Nginx, renowned for its lightweight and high-performance architecture, serves as the cornerstone of web server infrastructure. Configuration directives, server blocks, and caching mechanisms enable Nginx to efficiently handle HTTP/HTTPS requests, mitigate latency, and scale gracefully under heavy loads.

Browser Request Handling

Upon pressing Enter, your browser initiates an HTTP request to access the Google website. This request is received by a network of servers running web server software; Nginx is used here as a representative example of that software.

Nginx Configuration Directives

Nginx's configuration directives play a crucial role in determining how incoming requests are handled and served. These directives define various aspects of server behavior, such as listening ports, server names, request routing, and SSL/TLS encryption.

Server Blocks

Nginx utilizes server blocks, also known as virtual hosts, to host multiple websites or applications on a single server instance. Each server block contains configuration settings specific to a particular domain or subdomain, allowing Nginx to differentiate between incoming requests and route them to the appropriate website or application.
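The idea behind server blocks, choosing a site based on the requested host name, can be modelled in a few lines. This is a conceptual sketch in Python rather than Nginx configuration; the host names, handler functions, and the `SERVER_BLOCKS` table are hypothetical.

```python
def www_site(path):
    return f"www site serving {path}"


def mail_site(path):
    return f"mail site serving {path}"


def default_site(path):
    return f"default site serving {path}"


# Hypothetical mapping from host name to the site that should answer
SERVER_BLOCKS = {
    "www.example.com": www_site,
    "mail.example.com": mail_site,
}


def route(host_header, path):
    """Select a site from the Host header, falling back to a default."""
    host = host_header.split(":")[0].lower()  # drop any port, ignore case
    handler = SERVER_BLOCKS.get(host, default_site)
    return handler(path)


print(route("www.example.com", "/"))
print(route("MAIL.example.com:443", "/inbox"))
print(route("unknown.test", "/"))
```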

Handling HTTP/HTTPS Requests

Nginx efficiently handles both HTTP and HTTPS requests, leveraging its lightweight and high-performance architecture to process incoming traffic rapidly. By supporting secure connections through SSL/TLS encryption, Nginx ensures the confidentiality and integrity of data transmitted between your browser and the Google servers.

Caching Mechanisms

Nginx's caching mechanisms play a crucial role in mitigating latency and improving website performance. By caching frequently accessed resources, such as images, scripts, and static content, Nginx reduces the need to fetch these resources from the origin server repeatedly. This results in faster page load times and a smoother browsing experience for users accessing the Google website.

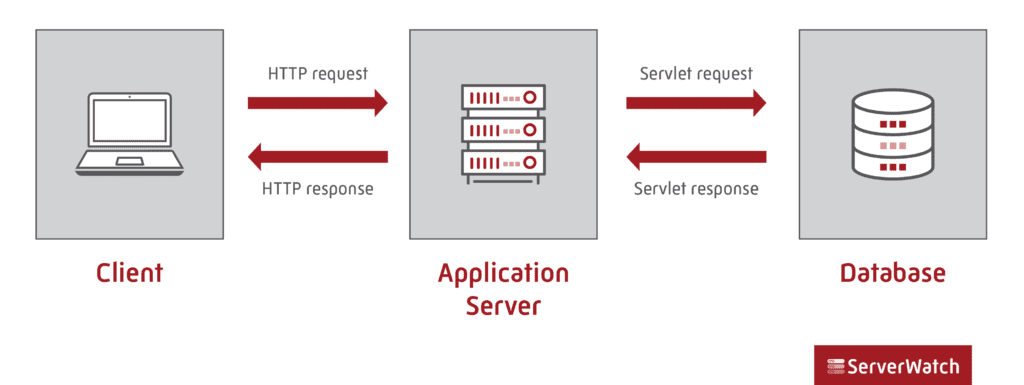

Application Servers

Application servers collaborate with web servers to process dynamic content, execute business logic, and deliver personalized experiences. Leveraging frameworks like Django or Node.js, application servers interface with databases, generate dynamic HTML, and handle user interactions, enriching web applications with responsiveness and interactivity.

Application Server Integration

Behind the scenes, application servers such as those powered by frameworks like Django or Node.js come into play. These application servers collaborate closely with web servers to handle dynamic content generation, execute complex business logic, and facilitate user interactions.

Execution of Business Logic

Upon receiving the HTTP request, the application server executes the necessary business logic to process the request. This may involve querying databases, retrieving relevant data, performing calculations, or accessing external services to fulfil the user's request.

Dynamic Content Generation

Application servers generate dynamic HTML content in real-time based on the user's request and the current state of the application. This dynamic content may include search results, personalized recommendations, or interactive elements tailored to the user's preferences and behavior.
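Dynamic generation means the HTML is assembled per request from data, not read from a static file. The sketch below builds a small results page from a query string and a list of titles; the function name and the sample data are invented, and frameworks such as Django would normally do this with a template engine.

```python
from html import escape


def render_results(query, results):
    """Build an HTML fragment for a search results page."""
    items = "\n".join(f"  <li>{escape(title)}</li>" for title in results)
    # User-supplied text is escaped so it cannot inject markup.
    return f"<h1>Results for {escape(query)}</h1>\n<ul>\n{items}\n</ul>"


page = render_results("url parsing", ["What is a URL?", "Parsing URLs in Python"])
print(page)
```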

Interface with Databases

Application servers interface with databases to retrieve and manipulate data as required by the user's request. This interaction may involve querying databases to retrieve search results, update user profiles, or fetch relevant information to be displayed on the webpage.

Enriching User Experience

By leveraging frameworks like Django or Node.js, application servers enrich web applications with responsiveness and interactivity. They facilitate seamless user interactions, such as form submissions, AJAX requests, or real-time updates, enhancing the overall user experience and engagement.

Personalized Experiences

Application servers play a crucial role in delivering personalized experiences to users by dynamically generating content tailored to their preferences, location, browsing history, and other relevant factors. This personalization adds value to the user experience and increases user satisfaction and retention.

Integration with Web Servers

Finally, application servers seamlessly integrate with web servers like Nginx to deliver the generated dynamic content to the user's browser. Web servers handle the transmission of data between the application server and the browser, ensuring efficient and reliable delivery of content to users worldwide.

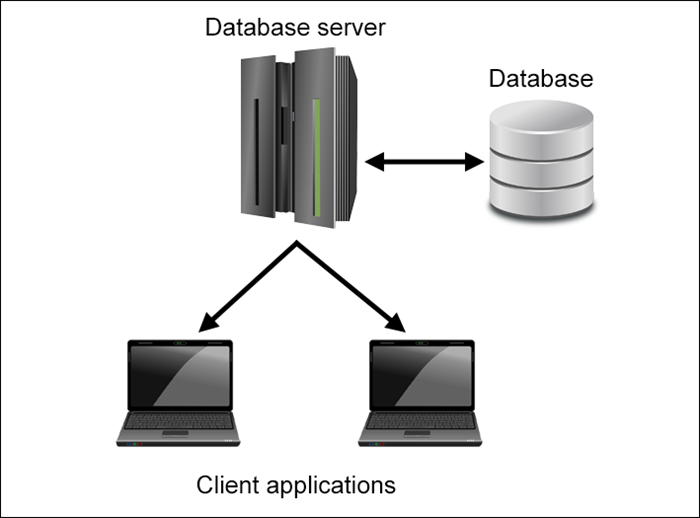

Database Servers

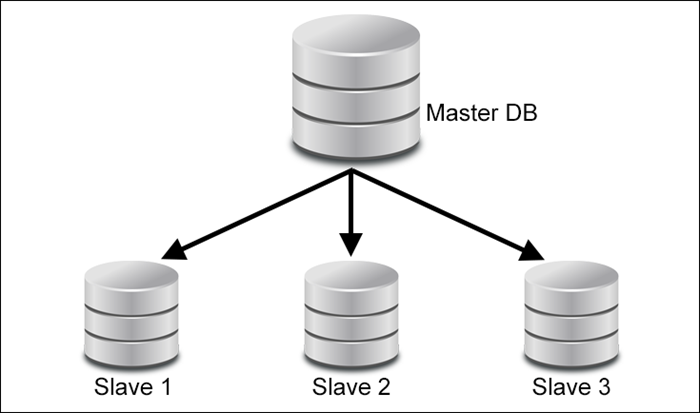

Database servers employ replication models such as Master-Slave (also called Primary-Replica) to ensure data redundancy and fault tolerance. By replicating data across multiple nodes, database systems enhance availability and resilience, safeguarding against hardware failures and data corruption.

Database Server Integration

Behind the scenes, database servers form an integral part of the infrastructure supporting the Google website. These servers employ sophisticated replication models, such as Master-Slave or Primary-Replica, to ensure data redundancy and fault tolerance.

Data Replication

Database servers replicate data across multiple nodes using the Master-Slave model, also called Primary-Replica. In this model, a master database server (or primary node) serves as the authoritative source of data, while one or more replica servers (or slave nodes) maintain copies of the data. Changes made to the master database are propagated to the replica servers, often asynchronously, in which case a replica may briefly lag behind the master before it catches up.
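A log-shipping model captures the essence of this arrangement: the primary appends each write to a log, and replicas apply the entries they have not yet seen. The classes below are a simplified sketch with hypothetical names; real databases add durability, ordering guarantees, and network transport.

```python
class Replica:
    def __init__(self):
        self.data = {}
        self.applied = 0  # number of log entries applied so far


class Primary:
    def __init__(self, replicas):
        self.data = {}
        self.log = []  # ordered record of every write
        self.replicas = replicas

    def write(self, key, value):
        """Apply a write locally and record it for later replication."""
        self.data[key] = value
        self.log.append((key, value))

    def replicate(self):
        """Ship outstanding log entries to every replica."""
        for replica in self.replicas:
            for key, value in self.log[replica.applied:]:
                replica.data[key] = value
            replica.applied = len(self.log)


replica = Replica()
primary = Primary([replica])

primary.write("user:1", "Ada")
print("before replication:", replica.data)  # the replica lags behind
primary.replicate()
print("after replication:", replica.data)
```

The gap between `write` and `replicate` is the replication lag that asynchronous setups have to tolerate.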

Enhanced Availability

By replicating data across multiple nodes, database systems enhance availability and resilience. In the event of a hardware failure or network outage affecting the master database server, one of the replica servers can seamlessly take over, ensuring uninterrupted access to data and minimizing downtime for users accessing the Google website.

Fault Tolerance

Database replication provides fault tolerance by safeguarding against hardware failures. In the event of a failure affecting the master database server, one of the replica servers can be promoted to the role of the master, either automatically or by an operator, ensuring continuity of service. With asynchronous replication, writes that had not yet reached the promoted replica can be lost, which is the trade-off for not waiting on replicas during each write.

Data Integrity

Database replication models also contribute to data integrity by ensuring that changes made to the master database are accurately and reliably replicated to the replica servers. This ensures that all nodes in the database cluster maintain consistent and synchronized copies of the data, minimizing the risk of discrepancies or inconsistencies.

Load Distribution

In addition to providing fault tolerance and data redundancy, database replication models can also contribute to load distribution and scalability. By distributing read-only queries across multiple replica servers, database systems can handle a higher volume of concurrent requests and improve overall system performance.
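Read distribution is often implemented as a small routing layer: writes go to the primary and reads rotate across replicas. The `Router` class, the node names, and the rule of treating statements that start with `SELECT` as reads are simplifying assumptions for this sketch.

```python
from itertools import cycle


class Router:
    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = cycle(replicas)

    def route(self, sql):
        """Send reads to the next replica and everything else to the primary."""
        is_read = sql.lstrip().upper().startswith("SELECT")
        return next(self._replicas) if is_read else self.primary


router = Router("primary", ["replica-1", "replica-2"])
print(router.route("SELECT * FROM pages WHERE id = 1"))
print(router.route("INSERT INTO pages VALUES (2, 'about')"))
print(router.route("SELECT count(*) FROM pages"))
```

Because replicas may lag, an application that must read its own latest write would send that particular read to the primary.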

Conclusion

From URL parsing to database replication, each facet of the process contributes to the seamless delivery of content when users navigate to "google.com" and trigger a request. By unravelling these intricacies, we gain deeper insights into the underlying mechanisms that power modern web communication and user experiences.

