Difference between RandomForestClassifier and RandomForestRegressor

Vanshika Kumar

Random Forest is widely recognized as a powerful ensemble learning algorithm in machine learning due to its ability to combine multiple decision trees for improved predictive accuracy and robustness.

In scikit-learn, the Random Forest algorithm comes in two implementations: RandomForestClassifier for classification tasks and RandomForestRegressor for regression tasks, each addressing a different type of problem.

Random Forest is a method that builds multiple decision trees during training and predicts the most common class (classification) or average prediction (regression) of the trees.

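Each tree is trained on a bootstrap sample of the training data (rows drawn at random with replacement), a technique known as bagging, which stands for "Bootstrap Aggregating." On top of bagging, Random Forest considers only a random subset of features when choosing each split, which further decorrelates the trees.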
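The bootstrap step behind bagging can be sketched in a few lines of NumPy. This is only an illustration of the sampling idea, not scikit-learn's internal code; the variable names and the sample size are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 1000  # hypothetical training-set size

# A bootstrap sample: n_samples row indices drawn with replacement
bootstrap_idx = rng.integers(0, n_samples, size=n_samples)

# Rows that were never drawn are "out-of-bag" for this particular tree
unique_fraction = np.unique(bootstrap_idx).size / n_samples
oob_fraction = 1.0 - unique_fraction

print(f"Unique rows in the bootstrap sample: {unique_fraction:.1%}")
print(f"Out-of-bag rows: {oob_fraction:.1%}")
```

Because sampling is done with replacement, each tree typically sees roughly two thirds of the distinct rows, and the remaining rows can serve as a built-in validation set (this is what the oob_score option in scikit-learn relies on).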

The Random Forest model makes its final prediction by combining the predictions of all the individual trees. For classification problems, the majority vote of the trees determines the final class prediction (scikit-learn implements this as a "soft" vote, averaging the class probabilities predicted by each tree and picking the most probable class). For regression problems, the mean of the individual tree predictions is used as the final prediction.

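The Random Forest algorithm is renowned for its robustness and versatility, as it can work with both categorical and numerical data. Note that scikit-learn's implementation expects numeric input, so categorical features need to be encoded (for example, with one-hot or ordinal encoding) before training.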

Key Features of the Random Forest Algorithm:

Ensemble Method: Combines multiple decision trees, primarily to reduce the variance of the individual trees.

Versatility: Random Forest can be utilized for both classification and regression tasks, showcasing its flexibility.

Robustness: Random Forest is less prone to overfitting compared to single decision trees, enhancing its stability in predictive modeling.

Parameter Tuning: Offers several hyperparameters for tuning, such as n_estimators, max_depth, and min_samples_split.
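As a rough sketch of what tuning these hyperparameters can look like, the snippet below runs a small grid search with scikit-learn's GridSearchCV on the iris dataset. The grid values are illustrative assumptions, not recommendations; sensible ranges depend on the dataset.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

X, y = load_iris(return_X_y=True)

# Illustrative grid only; these values are assumptions for the example
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [None, 3],
    "min_samples_split": [2, 5],
}

# 3-fold cross-validation over every combination in the grid
search = GridSearchCV(
    RandomForestClassifier(random_state=42),
    param_grid,
    cv=3,
    scoring="accuracy",
)
search.fit(X, y)

print("Best parameters:", search.best_params_)
print("Best cross-validated accuracy:", search.best_score_)
```

The same pattern should work for RandomForestRegressor by swapping the estimator and choosing a regression metric such as "neg_mean_absolute_error" for scoring.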

RandomForestClassifier:

The RandomForestClassifier is a version of the Random Forest algorithm designed explicitly for solving classification tasks. It is used when the target variable is categorical, representing distinct classes or labels.

In classification, the goal is to predict the class to which a set of input features belongs. For example, classifying whether an email is spam or not, determining the species of an iris flower based on its petal and sepal measurements, or identifying handwritten digits from image data.

The RandomForestClassifier builds multiple decision trees, each trained on a random subset of the training data and features. During prediction, each tree in the ensemble casts a vote for the class it predicts, and the final class prediction is determined by the majority vote of all the trees.

Key Characteristics:

Output: Predicts the class label for given input samples.

Mode of Operation: Outputs the class that is predicted most frequently by the individual trees in the ensemble.

Use Case: Ideal for binary and multiclass classification problems such as sentiment analysis, image recognition, and fraud detection.
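To make the voting step concrete, here is a minimal sketch that rebuilds the forest's prediction from its individual trees. It relies on the fitted estimators_ attribute and on the fact that scikit-learn averages per-tree class probabilities rather than counting hard votes; the dataset and the number of trees are arbitrary choices for the example.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
clf = RandomForestClassifier(n_estimators=25, random_state=0).fit(X, y)

# Collect each tree's class probabilities: shape (n_trees, n_samples, n_classes)
tree_probas = np.stack([tree.predict_proba(X) for tree in clf.estimators_])

# Average across trees ("soft" voting)
mean_proba = tree_probas.mean(axis=0)

# The forest's own probabilities should match the manual average
print("Probabilities match:", np.allclose(mean_proba, clf.predict_proba(X)))

# The predicted label is the class with the highest averaged probability
manual_pred = clf.classes_[np.argmax(mean_proba, axis=1)]
print("Labels match:", np.array_equal(manual_pred, clf.predict(X)))
```

With fully grown trees, most leaves are pure, so the averaged probabilities are close to the fraction of trees voting for each class, which is why this is usually described as a majority vote.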

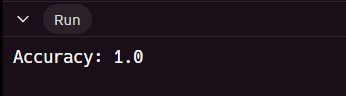

Here's an example code snippet for using RandomForestClassifier in Python with scikit-learn:

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

# Load dataset
iris = load_iris()
X = iris.data
y = iris.target

# Split dataset into training set and test set
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

# Create a RandomForestClassifier object
clf = RandomForestClassifier(n_estimators=100, random_state=42)

# Train the model using the training sets
clf.fit(X_train, y_train)

# Predict the response for test dataset
y_pred = clf.predict(X_test)

# Model Accuracy
print("Accuracy:", accuracy_score(y_test, y_pred))
```

RandomForestRegressor:

In contrast, the RandomForestRegressor is tailored for regression tasks, aiming to predict a numerical value when the target variable is continuous.

Regression problems involve predicting a quantitative value based on a set of input features. Examples include predicting house prices based on features like square footage, number of bedrooms, and location; forecasting stock prices based on historical data; or estimating the fuel efficiency of a car based on its engine specifications.

Like the RandomForestClassifier, the RandomForestRegressor builds multiple decision trees, but instead of predicting a class label, each tree predicts a numerical value. The final prediction is obtained by averaging the predictions from all the individual trees in the ensemble.

Key Characteristics:

Output: Predicts a continuous output value for given input samples.

Mean Prediction: Outputs the average prediction calculated from the individual tree predictions in the ensemble.

Use Case: Ideal for predicting continuous values like house prices, stock prices, and temperature forecasts.
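The averaging step can be checked directly against a fitted model. The sketch below, on a synthetic dataset chosen only for illustration, compares the forest's prediction with the mean of the per-tree predictions taken from the estimators_ attribute.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# Synthetic data; sizes and noise level are arbitrary for this example
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
regr = RandomForestRegressor(n_estimators=25, random_state=0).fit(X, y)

# One row of predictions per tree: shape (n_trees, n_samples)
tree_preds = np.stack([tree.predict(X) for tree in regr.estimators_])

# The forest's prediction is the mean over trees
manual_mean = tree_preds.mean(axis=0)
print("Mean matches predict():", np.allclose(manual_mean, regr.predict(X)))

# A median is not what predict() returns; it would have to be computed by hand
manual_median = np.median(tree_preds, axis=0)
```

Having the per-tree predictions available also makes it possible to look at their spread (for example, with tree_preds.std(axis=0)) as a rough indication of how much the trees disagree on a given sample.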

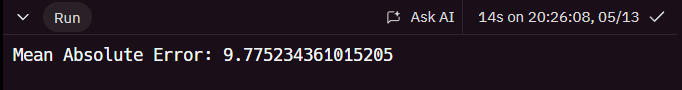

Here's an example code snippet for using RandomForestRegressor in Python with scikit-learn:

```python
from sklearn.ensemble import RandomForestRegressor
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_absolute_error

# Generate a random regression dataset
X, y = make_regression(
    n_samples=1000, n_features=10, n_informative=5, random_state=42
)

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Create a RandomForestRegressor instance
regr = RandomForestRegressor(n_estimators=100, random_state=42)

# Train the regressor
regr.fit(X_train, y_train)

# Make predictions on the test set
y_pred = regr.predict(X_test)

# Evaluate the model's performance using Mean Absolute Error (MAE)
mae = mean_absolute_error(y_test, y_pred)
print(f"Mean Absolute Error: {mae}")
```

Key Differences Between RandomForestClassifier and RandomForestRegressor:

While both RandomForestClassifier and RandomForestRegressor are based on the same Random Forest algorithm, they differ in their approaches to handling the target variable and making predictions.

1. Target Variable: The RandomForestClassifier is designed to handle categorical target variables, where the goal is to predict the class or label. In contrast, the RandomForestRegressor is designed to handle continuous target variables, where the goal is to predict a numerical value.

2. Prediction Method: In the RandomForestClassifier, the final prediction is determined by a vote among the individual trees: each tree contributes its prediction, and the class with the most support is chosen as the final prediction (in scikit-learn, support is measured by the class probabilities averaged over all trees). On the other hand, in the RandomForestRegressor, the final prediction is obtained by averaging the numerical predictions from all the individual trees.

3. Evaluation Metrics: Classification tasks are commonly evaluated with metrics like accuracy, precision, recall, and F1-score, while regression tasks rely on metrics such as mean squared error (MSE), mean absolute error (MAE), and R-squared.

4. Hyperparameters: Most hyperparameters, including n_estimators, max_depth, and max_features, are shared by both estimators. RandomForestClassifier additionally has a class_weight parameter for handling imbalanced datasets, and the two differ in their split criteria: the classifier uses impurity measures such as Gini or entropy, while the regressor uses error measures such as squared error or absolute error.
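The last two points can be explored with a short script. This is a sketch under assumed synthetic data: it compares the constructor parameters of the two estimators via get_params(), then fits each one and scores it with a task-appropriate metric. The dataset shapes and the 90/10 class imbalance are choices made for the example.

```python
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor
from sklearn.metrics import f1_score, r2_score
from sklearn.model_selection import train_test_split

# Compare the hyperparameters exposed by each estimator
clf_params = set(RandomForestClassifier().get_params())
reg_params = set(RandomForestRegressor().get_params())
print("Classifier-only parameters:", sorted(clf_params - reg_params))
print("max_features in both:", "max_features" in (clf_params & reg_params))

# Imbalanced binary classification (roughly 90% / 10%), scored with F1
Xc, yc = make_classification(n_samples=1000, weights=[0.9, 0.1], random_state=0)
Xc_tr, Xc_te, yc_tr, yc_te = train_test_split(
    Xc, yc, stratify=yc, random_state=0
)
clf = RandomForestClassifier(class_weight="balanced", random_state=0)
clf.fit(Xc_tr, yc_tr)
f1 = f1_score(yc_te, clf.predict(Xc_te))
print("F1-score (minority class):", f1)

# Regression on a continuous target, scored with R-squared
Xr, yr = make_regression(n_samples=1000, n_features=10, noise=10.0, random_state=0)
Xr_tr, Xr_te, yr_tr, yr_te = train_test_split(Xr, yr, random_state=0)
regr = RandomForestRegressor(random_state=0)
regr.fit(Xr_tr, yr_tr)
r2 = r2_score(yr_te, regr.predict(Xr_te))
print("R-squared:", r2)
```

Whether class_weight="balanced" actually helps depends on the data; it is shown here only to illustrate where the parameter is passed.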

| Feature | RandomForestClassifier | RandomForestRegressor |
| --- | --- | --- |
| Purpose | Designed for classification tasks; predicts discrete class labels. | Tailored for regression tasks; predicts continuous values. |
| Output Type | Predicts the class label for given input samples. | Predicts a continuous output value for given input samples. |
| Operation Mode | Outputs the class that is the mode of the classes predicted by the individual trees. | Outputs the mean prediction of the individual trees. |
| Use Case | Ideal for binary and multiclass classification problems. | Ideal for predicting continuous values, such as house prices or stock prices. |
| Example Code | `from sklearn.ensemble import RandomForestClassifier`<br>`clf = RandomForestClassifier(n_estimators=100)`<br>`clf.fit(X_train, y_train)`<br>`y_pred = clf.predict(X_test)` | `from sklearn.ensemble import RandomForestRegressor`<br>`reg = RandomForestRegressor(n_estimators=100)`<br>`reg.fit(X_train, y_train)`<br>`y_pred = reg.predict(X_test)` |

In summary, the RandomForestClassifier is tailored for classification problems where the target variable is categorical and the goal is to predict the class or label. On the other hand, the RandomForestRegressor is designed for regression problems, where the target variable is continuous and the goal is to predict a numerical value.

While both implementations build on the same Random Forest algorithm, they differ in the type of target variable they handle, how they combine tree predictions, which evaluation metrics apply, and a few estimator-specific hyperparameters.
