Our learnings on AWS Glue Jobs Billing

DataChef

On the surface, the billing of AWS Glue Spark Jobs seems quite straightforward.

$0.44 per DPU (Data Processing Unit) per hour. The number of DPUs is determined by the selected worker type and the number of workers. Clear, right?
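As a rough sketch of that pricing formula, the hypothetical helper below estimates the cost of a run from its worker type, worker count and duration. It assumes the $0.44 per DPU-hour rate quoted above (rates vary by region) and the DPU sizes of the G.1X, G.2X, G.4X and G.8X worker types. It ignores minimum billing durations.

```python
# Hypothetical helper: estimate the cost of a Glue Spark job run.
# Assumes the $0.44/DPU-hour rate quoted above; actual rates vary by region.
DPU_HOURLY_RATE_USD = 0.44

# DPUs provided by a single worker of each worker type.
DPUS_PER_WORKER = {"G.1X": 1, "G.2X": 2, "G.4X": 4, "G.8X": 8}


def glue_run_cost_usd(worker_type: str, number_of_workers: int, hours: float) -> float:
    """Return the estimated cost in USD of running a job for `hours` hours."""
    if worker_type not in DPUS_PER_WORKER:
        raise ValueError(f"unknown worker type: {worker_type}")
    dpus = DPUS_PER_WORKER[worker_type] * number_of_workers
    # Round to cents to hide floating-point noise.
    return round(dpus * hours * DPU_HOURLY_RATE_USD, 2)


# 10 G.1X workers for one hour = 10 DPU-hours
print(glue_run_cost_usd("G.1X", 10, 1))  # prints 4.4
```

Note that the job's duration is the only open-ended factor: for a job that never stops, the cost keeps growing for as long as it runs.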

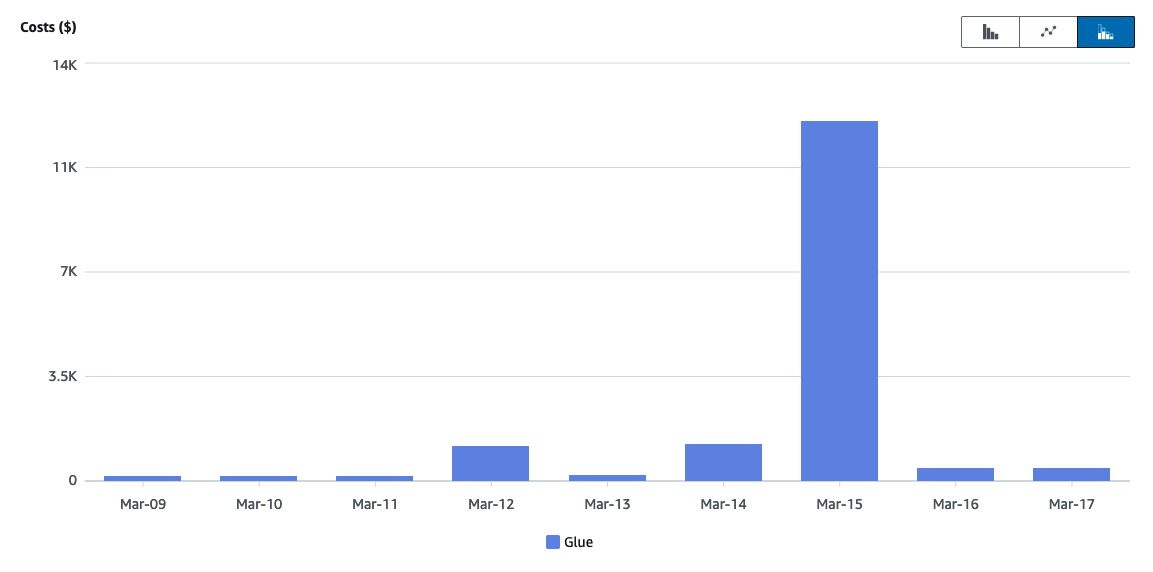

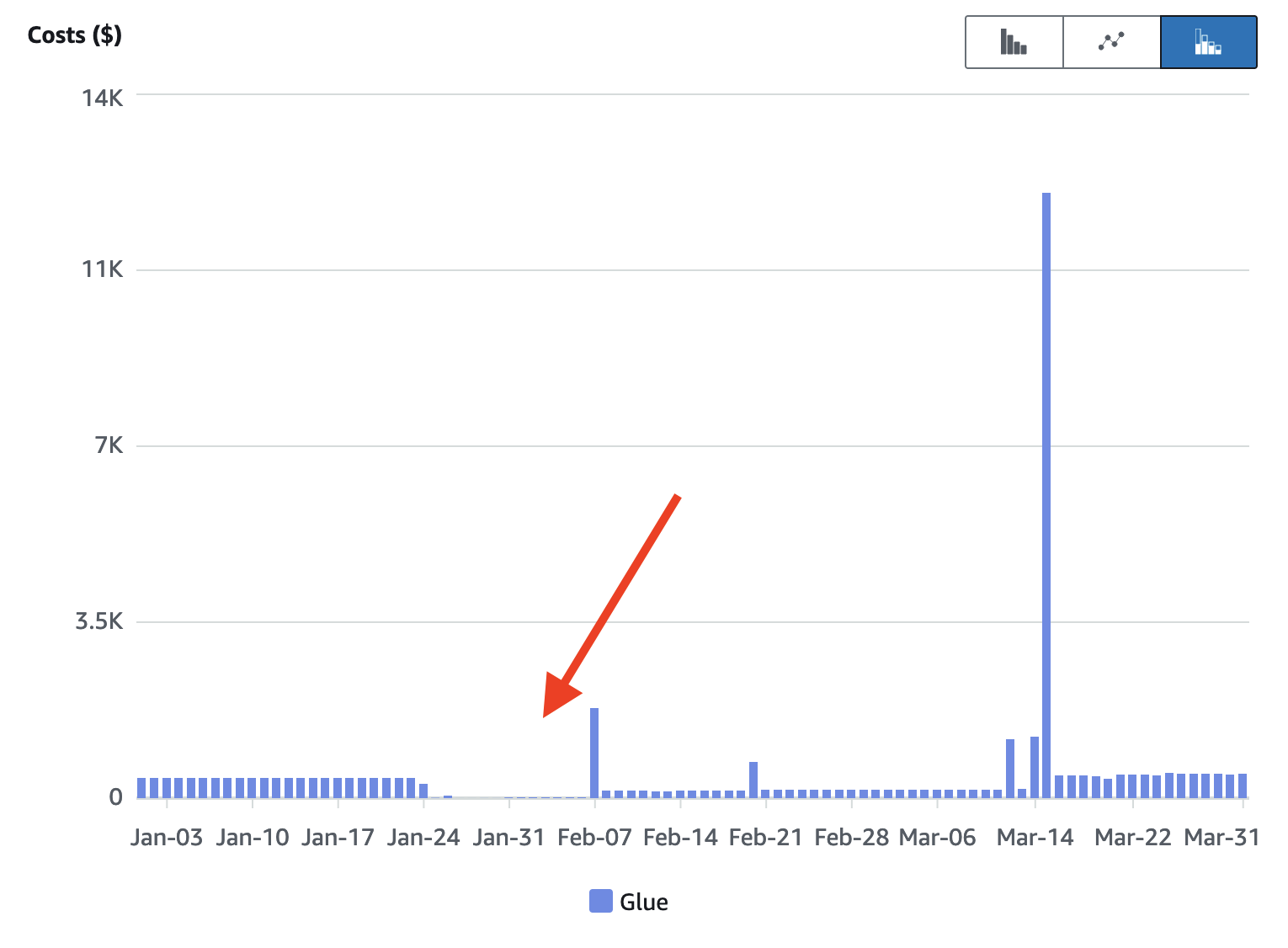

It seemed so, but after running several Spark Structured Streaming jobs on AWS Glue for a few months, we suddenly noticed unusually high Glue costs for the current month. Digging further, we discovered a cost spike of over ten thousand dollars in a single day!

Investigation

We immediately went hunting for the AWS Glue resources behind this huge cost spike, but we found nothing. What had been consuming over 25,000 DPU-hours in a single day?

Baffled, we contacted AWS Support right away. They replied that the charges were legitimate: the spike came from long-running batch jobs that were billed at termination on the 15th of March.
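A quick back-of-the-envelope check shows how these numbers fit together: 25,000 DPU-hours at $0.44 is $11,000, and a modest fleet of always-on jobs needs only a few weeks to accumulate that many DPU-hours. The fleet size below is a made-up example, not our actual setup.

```python
# Back-of-the-envelope check of the spike, at the $0.44/DPU-hour rate quoted above.
DPU_HOURLY_RATE_USD = 0.44

dpu_hours_billed = 25_000
cost_usd = dpu_hours_billed * DPU_HOURLY_RATE_USD
print(f"${cost_usd:,.0f}")  # $11,000

# Hypothetical fleet (assumed, not our real one): 5 jobs with 10 DPUs each.
total_dpus = 5 * 10
hours_needed = dpu_hours_billed / total_dpus
print(f"{hours_needed:.0f} hours = {hours_needed / 24:.1f} days")  # 500 hours = 20.8 days
```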

Looking back, we found long periods of low spending while all the streaming jobs were up and running, and a few smaller cost spikes on the days when we had to restart some of them to deploy changes.

This seemed like an odd way to bill streaming jobs: by definition, they are supposed to be always up and running. Billing them at termination is simply the wrong billing model for streaming workloads, and it can have serious repercussions for a company's budgeting.

AWS Support tried to reproduce the billing anomaly, but found that Glue Streaming jobs are billed daily. So what happened here?

Finding the Root Cause

AWS Glue offers two job types for Spark jobs:

Glue ETL: For running batch jobs, billed at termination

Glue Streaming: For running Spark Structured Streaming jobs, billed daily
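The difference between these two billing modes is easy to see in a toy model. The hypothetical function below charges the same job either day by day (as described for Glue Streaming) or all at once when it terminates (as described for Glue ETL). It assumes whole days of runtime and ignores everything else about Glue's actual billing.

```python
from collections import Counter


def billed_dpu_hours_per_day(
    dpus: float, start_day: int, end_day: int, billing: str
) -> Counter:
    """Return the DPU-hours charged on each day for a job that runs from
    start_day (inclusive) to end_day (exclusive), in whole days.

    billing="daily": each running day is charged for its own 24 hours.
    billing="at_termination": the whole runtime is charged on end_day.
    """
    charges = Counter()
    if billing == "daily":
        for day in range(start_day, end_day):
            charges[day] += dpus * 24
    elif billing == "at_termination":
        charges[end_day] += dpus * 24 * (end_day - start_day)
    else:
        raise ValueError(f"unknown billing mode: {billing}")
    return charges


# A hypothetical 10-DPU job running for 60 days.
daily = billed_dpu_hours_per_day(10, 0, 60, "daily")
at_end = billed_dpu_hours_per_day(10, 0, 60, "at_termination")
print(sum(daily.values()), max(daily.values()))    # 14400 240
print(sum(at_end.values()), max(at_end.values()))  # 14400 14400
```

The total is identical in both modes; only its timing changes. With termination billing, two quiet months are followed by a single day carrying the entire charge, which matches the pattern we saw.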

AWS Support confirmed that our job had been running as a Glue ETL job (unfortunately, we could not diagnose this ourselves, as users cannot see the Glue job type of a specific job run).

Digging into our CDK code, we found that:

A bug caused our Spark Streaming scripts to be deployed as Glue ETL jobs

The Glue ETL job properties were configured without a timeout (since the configuration was meant for a streaming job)
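For reference, the job type is selected by the command name in the job definition: `gluestreaming` for streaming and `glueetl` for batch. The sketch below builds keyword arguments for boto3's `glue.create_job` (CDK's `CfnJob` exposes the same properties). The helper name, Glue version, worker settings and 120-minute timeout are illustrative assumptions, not our actual CDK code. In these terms, our bug amounted to a streaming script deployed with `"Name": "glueetl"` and no `Timeout`.

```python
def glue_job_definition(
    name: str, role_arn: str, script_location: str, streaming: bool
) -> dict:
    """Build keyword arguments for boto3's glue.create_job (hypothetical helper).

    The Command "Name" selects the Glue job type:
    "gluestreaming" for Spark Structured Streaming, "glueetl" for batch.
    """
    definition = {
        "Name": name,
        "Role": role_arn,
        "Command": {
            "Name": "gluestreaming" if streaming else "glueetl",
            "ScriptLocation": script_location,
            "PythonVersion": "3",
        },
        "GlueVersion": "4.0",   # assumed
        "WorkerType": "G.1X",   # assumed
        "NumberOfWorkers": 10,  # assumed
    }
    if not streaming:
        # Batch jobs are expected to finish: cap them with a timeout (minutes).
        definition["Timeout"] = 120  # assumed value
    return definition
```

Usage would look like `boto3.client("glue").create_job(**glue_job_definition("my-job", role_arn, "s3://my-bucket/job.py", streaming=True))`, where the job name, role ARN and script path are placeholders.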

As it turns out, Glue will happily deploy and run a Spark Structured Streaming script on a Glue ETL job type, simply treating it as a very (very) long-running batch job.

As our story shows, this can lead to very misleading billing cycles.

Our Takeaways

When deploying Spark Streaming jobs on AWS Glue, it is up to you to ensure that the Glue job type and the Spark trigger type align, so make sure your build raises an exception if they don't.
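A minimal sketch of such a build-time check is shown below. It classifies Spark Structured Streaming triggers by whether the query stops by itself (`once`, `availableNow`) or runs indefinitely (`processingTime`, `continuous`), and rejects unbounded triggers on a `glueetl` job. Rejecting terminating triggers on a `gluestreaming` job is our own assumed policy. The exception and function names are hypothetical.

```python
# Spark Structured Streaming triggers that stop the query by themselves,
# versus those that keep it running indefinitely.
TERMINATING_TRIGGERS = {"once", "availableNow"}
UNBOUNDED_TRIGGERS = {"processingTime", "continuous"}
GLUE_SPARK_COMMANDS = {"glueetl", "gluestreaming"}


class GlueJobTypeMismatch(ValueError):
    """Raised at build time when the Glue job type and Spark trigger disagree."""


def validate_job_type(command_name: str, trigger: str) -> None:
    if command_name not in GLUE_SPARK_COMMANDS:
        raise ValueError(f"unknown Glue Spark command: {command_name}")
    if trigger not in TERMINATING_TRIGGERS | UNBOUNDED_TRIGGERS:
        raise ValueError(f"unknown Spark trigger: {trigger}")
    if command_name == "glueetl" and trigger in UNBOUNDED_TRIGGERS:
        raise GlueJobTypeMismatch(
            f"'{trigger}' never terminates: deploy it as 'gluestreaming', "
            "not as a 'glueetl' job billed at termination"
        )
    if command_name == "gluestreaming" and trigger in TERMINATING_TRIGGERS:
        # Assumed policy: self-terminating queries belong on batch jobs.
        raise GlueJobTypeMismatch(
            f"'{trigger}' stops by itself: deploy it as a 'glueetl' batch job"
        )


validate_job_type("gluestreaming", "processingTime")  # passes
validate_job_type("glueetl", "availableNow")          # passes
```

Calling `validate_job_type("glueetl", "processingTime")` raises `GlueJobTypeMismatch`, which is exactly the combination we had deployed. Run from the infrastructure code (for example, before the CDK stack is synthesized), it stops the deployment instead of surfacing as a bill.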

Monitor your spending

Most importantly, you should have AWS Budgets set up on your accounts to catch cost anomalies as soon as they occur.

The spike took us some time to notice, so we have since built and deployed a cost-monitoring construct that quickly provisions AWS Budgets in all our accounts right from the start.
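Our construct itself is CDK code and is not reproduced here. As a minimal sketch of the same idea, the hypothetical helper below builds the request for boto3's `budgets.create_budget`: a daily cost budget that emails an alert when actual spend exceeds the limit. The budget name, limit and address are placeholders.

```python
def daily_cost_budget_request(limit_usd: float, alert_email: str) -> dict:
    """Build keyword arguments for boto3's budgets.create_budget
    (hypothetical helper; the caller still has to pass AccountId)."""
    return {
        "Budget": {
            "BudgetName": "daily-cost-guardrail",  # placeholder name
            "BudgetLimit": {"Amount": f"{limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "DAILY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                # Alert once actual daily spend exceeds 100% of the limit.
                "Notification": {
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": 100.0,
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "EMAIL", "Address": alert_email}],
            }
        ],
    }
```

It would be used as `boto3.client("budgets").create_budget(AccountId="123456789012", **daily_cost_budget_request(500, "team@example.com"))`, with a placeholder account ID and address. A daily time unit suits the failure mode described above, where a whole run's cost lands on a single day.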

Written by

DataChef

DataChef, founded in 2019, is a boutique consultancy specializing in AI and data management. With a diverse team of 20, we focus on developing Data Mesh, Data Lake, and predictive analytics solutions via AWS, improving operational efficiency, and creating industry-specific machine learning applications.