How to: Build a custom job module on Lilypad

Steven DeveloperSteve Coochin


We are already in the grip of an AI revolution, and with it comes a growing demand for GPU-intensive processing. While AI adoption has brought a massive uptick in productivity, it has also increased the infrastructure complexity and the hardware required to run heavy computational workloads. Access to GPU compute is therefore becoming increasingly important for fields that depend on heavy computational power, such as data science and gaming.

At Lilypad, our mission is to democratise and decentralise access to compute, so that anyone can use the GPUs needed to run containerised, compute-heavy workloads. Building a custom job module for the Lilypad Network is the first step towards this goal; all it takes is a container that is deterministic and meets a few prerequisites. Running workloads this way helps optimise the use of computational resources, reduce processing times and improve scalability.

So, let's examine how to build a custom module for the Lilypad Network, starting with a quick introduction to Lilypad and its functions.

A look under the surface of Lilypad

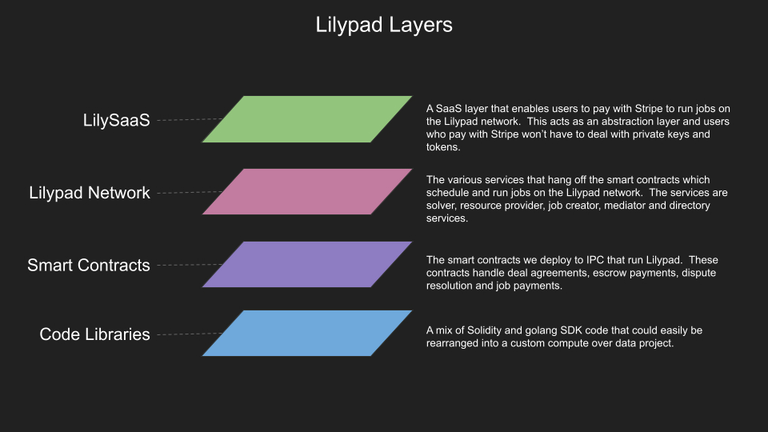

Lilypad is a distributed computational network that provides essential infrastructure for AI inference, machine learning training and decentralised science (DeSci), among other applications. Rather than directly addressing access to AI models themselves, it focuses on easing the difficulty of securing high-performance AI hardware by offering decentralised AI compute services. In doing so, Lilypad tackles the significant barriers teams face when integrating high-performance computing for AI into their workflows, setting itself apart from traditional centralised systems that often limit access to the necessary compute resources.

Lilypad democratises access to high-performance computing by establishing a verifiable, trustless and decentralised computational network. It leverages decentralised infrastructure to improve efficiency, transparency and accessibility, supporting a broad spectrum of applications from AI job inference to supply chain analysis. This open compute network lets users access and run AI models and jobs independently, promoting a scalable ecosystem that is interoperable across various blockchain environments. Lilypad's commitment is further reflected in its plans for an incentivised testnet and the decentralisation of mediators, which aim to strengthen the network's robustness and user engagement before its full-scale launch.

Getting started building a custom job module

Before we begin this adventure into the decentralised compute space, there are a few things to set up. First, install the Lilypad CLI; see the getting started and setup guide to learn more.

With all that in place, you will need a containerised application that adheres to deterministic behaviour. This means it should consistently produce the same output given the same initial state and inputs, regardless of the environment in which it runs. To achieve determinism, your containerised module must avoid elements that could lead to variability in its execution, such as timestamps, random number generators without fixed seeds, or reliance on local system configuration.
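A practical way to think about determinism is that two runs with the same input should produce byte-identical output, so hashes of that output match. The Go sketch below illustrates that check; renderBanner and fingerprint are hypothetical names, and comparing SHA-256 digests is just one simple way to compare outputs, not necessarily how Lilypad validates results.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"strings"
)

// renderBanner is a hypothetical stand-in for a module's work: its output
// depends only on its input, with no timestamps, unseeded randomness or
// host-specific state.
func renderBanner(message string) string {
	border := strings.Repeat("-", len(message)+2)
	return fmt.Sprintf(" %s\n< %s >\n %s\n", border, message, border)
}

// fingerprint returns the hex-encoded SHA-256 digest of a run's output, which
// could be compared across independent reruns of the same job.
func fingerprint(output string) string {
	sum := sha256.Sum256([]byte(output))
	return hex.EncodeToString(sum[:])
}

func main() {
	first := fingerprint(renderBanner("Hello World"))
	second := fingerprint(renderBanner("Hello World"))
	fmt.Println("reproducible:", first == second)
}
```

If renderBanner mixed in something like the current time, the two fingerprints would differ and a rerun could no longer confirm the original result.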

For example, if you're developing a machine learning model for image classification, make sure model initialisation always starts from the same random seed, passed in through an -i input variable. That way, every time the model is trained on the same data set under identical conditions, it produces the same model parameters, and a mediator can rerun the job to obtain the same output.

To better demonstrate how this works, we will take a containerised version of the classic cowsay cow ASCII art generation demo, and add in what we need for it to run as a module on the Lilypad Network.

Containerising and configuration

The first step is to containerise your application or codebase if it isn't already. Remember, the goal here is to create a deterministic app with repeatable execution so that its success can be validated. If you already have a containerised application in mind, you can skip ahead to the next part on the Lilypad template.

Containerising

Navigate to a working directory in your dev environment, then create a Dockerfile and open it in your code editor of choice. On the first line, use the standard containerised cowsay image as the base by adding FROM grycap/cowsay:latest.

This version of the cowsay demo takes the message for the ASCII art as a runtime argument, which would ordinarily be run using:

```shell
docker run --rm grycap/cowsay /usr/games/cowsay "Hello World"
```

We will tweak that slightly so the message comes from an environment variable at runtime, which makes it a little more dynamic. First, define a default value by adding ENV DEFAULT_MESSAGE="Hello World" as the next line of the Dockerfile. This serves as a catch-all in case no MESSAGE variable is defined at runtime.
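The fallback behaviour described above can be modelled in a few lines of Go. This is a loose sketch of the logic, not code that runs inside the container: resolveMessage is a hypothetical helper, and unlike Docker it treats an empty MESSAGE as unset.

```go
package main

import (
	"fmt"
	"os"
)

// resolveMessage roughly mirrors the Dockerfile's fallback: use MESSAGE when
// it is set at runtime, otherwise DEFAULT_MESSAGE, otherwise "Hello World".
// The lookup function is injected so the logic can be exercised without
// touching the real process environment.
func resolveMessage(lookup func(string) (string, bool)) string {
	if msg, ok := lookup("MESSAGE"); ok && msg != "" {
		return msg
	}
	if def, ok := lookup("DEFAULT_MESSAGE"); ok && def != "" {
		return def
	}
	return "Hello World"
}

func main() {
	// os.LookupEnv has the signature func(string) (string, bool).
	fmt.Println(resolveMessage(os.LookupEnv))
}
```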

This brings us to the most vital part of the Dockerfile: the ENTRYPOINT, which ties everything together and defines what runs when the container starts. Combining the command from the original container example with our more dynamic approach, the entrypoint runs /usr/games/cowsay \"$MESSAGE\" through /bin/sh -c, so that the shell expands $MESSAGE at runtime. A final ENV instruction after the entrypoint then sets MESSAGE to our default value, which docker run -e can override.
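Docker's exec-form ENTRYPOINT does not substitute environment variables on its own, which is why the command is wrapped in /bin/sh -c. As a rough model of the substitution step the shell performs, the Go sketch below uses the standard os.Expand function; expandEntrypoint is a hypothetical name, and this is only an approximation, since a real shell also handles quoting, escapes and word splitting.

```go
package main

import (
	"fmt"
	"os"
)

// expandEntrypoint approximates what /bin/sh -c does to the entrypoint string:
// it replaces $MESSAGE (or ${MESSAGE}) with the value from env. Unknown
// variables expand to an empty string, as they would in the shell.
func expandEntrypoint(cmd string, env map[string]string) string {
	return os.Expand(cmd, func(name string) string { return env[name] })
}

func main() {
	cmd := `/usr/games/cowsay "$MESSAGE"`
	env := map[string]string{"MESSAGE": "Hello Docker World"}
	// Without a shell, cowsay would receive the literal text "$MESSAGE".
	fmt.Println(expandEntrypoint(cmd, env))
}
```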

So the whole Dockerfile will look like this:

```dockerfile
FROM grycap/cowsay:latest

ENV DEFAULT_MESSAGE="Hello World"

ENTRYPOINT ["/bin/sh", "-c", "/usr/games/cowsay \"$MESSAGE\""]

ENV MESSAGE="$DEFAULT_MESSAGE"
```

Once the image is built, running it in a Docker container is as easy as passing a MESSAGE with -e:

```shell
docker build -t lilysay .
docker run -e MESSAGE="Hello Docker World" lilysay
```

This brings us to the next step: running the container on Lilypad.

Configuring the Lilypad Network template

For the module to run on the network, the container image must be published to a public, accessible location that is referenced in the template file. It can be hosted in any of several locations, provided it is publicly available so that the job can be rerun if needed to validate and replicate the outcome.

At its core, the Lilypad codebase is written largely in Golang, which brings the speed and extensibility required to run off-chain compute with on-chain guarantees. This carries over into the Lilypad module templates, which use Go templating to define how a job needs to be run, much as a Dockerfile does for a container. Once the image is published publicly, create a lilypad_module.json.tmpl file in the project root and populate it with the following:

```
{
  "machine": {
    "gpu": 0,
    "cpu": 1000,
    "ram": 100
  },
  "job": {
    "APIVersion": "V1beta1",
    "Spec": {
      "Deal": {
        "Concurrency": 1
      },
      "Docker": {
        "Entrypoint": [
          "/bin/sh",
          "-c",
          "/usr/games/cowsay \"$MESSAGE\""
        ],
        "EnvironmentVariables": [
          {{ if .Message }}"{{ subst "MESSAGE=%s" .Message }}"{{else}}"MESSAGE=Hello World"{{ end }}
        ],
        "Image": "lilypadnetwork/lilysay:0.0.6@sha256:9dea5ce25fce2cb0b53729b7aeabd139951a21356c63a11922a58b1d199050cd"
      },
      "Engine": "Docker",
      "Network": {
        "Type": "None"
      },
      "PublisherSpec": {
        "Type": "IPFS"
      },
      "Resources": {
        "GPU": ""
      },
      "Timeout": 1800,
      "Verifier": "Noop"
    }
  }
}
```
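To see what the EnvironmentVariables entry turns into, the Go sketch below renders it with the standard text/template package, once with a Message input and once without. The subst helper is an assumption: this sketch defines it as a thin wrapper around fmt.Sprintf, which may not match Lilypad's own implementation, and it spells the default as MESSAGE=Hello World so that it matches the variable the entrypoint reads.

```go
package main

import (
	"fmt"
	"os"
	"strings"
	"text/template"
)

// envTemplate mirrors the EnvironmentVariables entry of lilypad_module.json.tmpl.
const envTemplate = `{{ if .Message }}"{{ subst "MESSAGE=%s" .Message }}"{{else}}"MESSAGE=Hello World"{{ end }}`

// funcs provides a stand-in "subst" helper that simply formats its arguments.
var funcs = template.FuncMap{
	"subst": func(format string, args ...any) string {
		return fmt.Sprintf(format, args...)
	},
}

// render executes the template against the supplied inputs. A missing
// "Message" key makes the if-condition false, so the default branch is used.
func render(inputs map[string]string) (string, error) {
	tmpl, err := template.New("env").Funcs(funcs).Parse(envTemplate)
	if err != nil {
		return "", err
	}
	var out strings.Builder
	if err := tmpl.Execute(&out, inputs); err != nil {
		return "", err
	}
	return out.String(), nil
}

func main() {
	for _, inputs := range []map[string]string{
		{"Message": "Hello lilypad world"},
		{}, // no -i Message= supplied
	} {
		line, err := render(inputs)
		if err != nil {
			fmt.Fprintln(os.Stderr, err)
			os.Exit(1)
		}
		fmt.Println(line)
	}
}
```

Because this stand-in does no escaping, a message containing a double quote would produce invalid JSON; a helper used in practice would need to escape its inputs.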

Here's a breakdown of what each element of the Lilypad job spec does and why it's required:

APIVersion: Specifies the API version for the job.

Spec: Contains the detailed job specifications:

Deal: Sets the concurrency to 1, ensuring only one job instance runs at a time.

Docker: Configures the Docker container for the job.

Entrypoint: Defines the command to be executed in the container, which runs the cowsay command.

EnvironmentVariables: Sets environment variables for the container's runtime. In the example above, Go templating sets the MESSAGE variable dynamically from the CLI.

Image: Specifies the image to use (lilypadnetwork/lilysay:0.0.6). If you are using Docker, it is important to reference the image's SHA256 digest as well as its tag, so that exactly the same image is pulled every time the job runs.

Engine: In our example we are using Docker as the container runtime.

Network: Specifies that the container does not require networking (Type: "None").

PublisherSpec: Sets the method for publishing job results to IPFS.

Resources: Indicates that no additional GPU resources are needed (GPU: ""). This is a very light job, so it does not need intensive processing.

Timeout: Sets the maximum duration for the job to 1800 seconds (30 minutes).

Verifier: Specifies "Noop" as the verification method, meaning no verification is performed.
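The breakdown above maps naturally onto Go structs. The sketch below decodes a rendered copy of the spec into an illustrative subset of those fields and rejects images that are not pinned to a SHA256 digest, reflecting the note on Image. The jobSpec type, parseSpec and the digest check are hypothetical, not Lilypad's own type definitions or validation logic.

```go
package main

import (
	"encoding/json"
	"fmt"
	"regexp"
)

// jobSpec models only the fields discussed in the breakdown.
type jobSpec struct {
	Machine struct {
		GPU int `json:"gpu"`
		CPU int `json:"cpu"`
		RAM int `json:"ram"`
	} `json:"machine"`
	Job struct {
		APIVersion string `json:"APIVersion"`
		Spec       struct {
			Deal struct {
				Concurrency int `json:"Concurrency"`
			} `json:"Deal"`
			Docker struct {
				Entrypoint           []string `json:"Entrypoint"`
				EnvironmentVariables []string `json:"EnvironmentVariables"`
				Image                string   `json:"Image"`
			} `json:"Docker"`
			Engine   string `json:"Engine"`
			Timeout  int    `json:"Timeout"`
			Verifier string `json:"Verifier"`
		} `json:"Spec"`
	} `json:"job"`
}

// digestPinned matches image references ending in @sha256:<64 hex characters>.
var digestPinned = regexp.MustCompile(`@sha256:[0-9a-f]{64}$`)

// parseSpec decodes a rendered spec and checks that the image is digest-pinned.
func parseSpec(rendered []byte) (*jobSpec, error) {
	var spec jobSpec
	if err := json.Unmarshal(rendered, &spec); err != nil {
		return nil, err
	}
	if img := spec.Job.Spec.Docker.Image; !digestPinned.MatchString(img) {
		return nil, fmt.Errorf("image %q is not pinned to a sha256 digest", img)
	}
	return &spec, nil
}

// sampleSpec is the template after rendering with Message="Hello lilypad world"
// (Network, PublisherSpec and Resources omitted for brevity).
const sampleSpec = `{
  "machine": {"gpu": 0, "cpu": 1000, "ram": 100},
  "job": {
    "APIVersion": "V1beta1",
    "Spec": {
      "Deal": {"Concurrency": 1},
      "Docker": {
        "Entrypoint": ["/bin/sh", "-c", "/usr/games/cowsay \"$MESSAGE\""],
        "EnvironmentVariables": ["MESSAGE=Hello lilypad world"],
        "Image": "lilypadnetwork/lilysay:0.0.6@sha256:9dea5ce25fce2cb0b53729b7aeabd139951a21356c63a11922a58b1d199050cd"
      },
      "Engine": "Docker",
      "Timeout": 1800,
      "Verifier": "Noop"
    }
  }
}`

func main() {
	spec, err := parseSpec([]byte(sampleSpec))
	if err != nil {
		fmt.Println("invalid spec:", err)
		return
	}
	fmt.Println(spec.Job.Spec.Docker.Image, spec.Job.Spec.Timeout)
}
```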

Once all the Lilypad configuration is in place, we are ready to give it a run. Push this template to a GitHub repository, either alongside other components or as the repository's sole inhabitant. Then add a git tag; the Lilypad command line references the module by its repository and tag:

```shell
lilypad run github.com/lilypad-tech/lilypad-module-lilysay:0.1.0 -i Message='Hello lilypad world'
```
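The module reference combines the repository path with the git tag you pushed. Purely to illustrate that structure, the Go sketch below splits such a reference into its parts; moduleRef, parseModuleRef and CloneURL are hypothetical names, and this is not the Lilypad CLI's own parsing code.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// moduleRef holds the two parts of a reference such as
// github.com/lilypad-tech/lilypad-module-lilysay:0.1.0.
type moduleRef struct {
	Repo string // repository path, e.g. github.com/org/name
	Tag  string // git tag, e.g. 0.1.0
}

// parseModuleRef splits a reference on its last colon into repo and tag.
func parseModuleRef(ref string) (moduleRef, error) {
	i := strings.LastIndex(ref, ":")
	if i <= 0 || i == len(ref)-1 {
		return moduleRef{}, errors.New("expected <repo>:<tag>")
	}
	return moduleRef{Repo: ref[:i], Tag: ref[i+1:]}, nil
}

// CloneURL builds an HTTPS URL that git could clone for the repository.
func (m moduleRef) CloneURL() string {
	return "https://" + m.Repo + ".git"
}

func main() {
	ref, err := parseModuleRef("github.com/lilypad-tech/lilypad-module-lilysay:0.1.0")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(ref.CloneURL(), ref.Tag)
}
```

On the git side, creating and publishing the tag would typically be git tag 0.1.0 followed by git push origin 0.1.0.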

You'll also notice the input flag -i Message=. Its value is passed to the Go template as .Message and used in the EnvironmentVariables section, which is how the message can be set from the command line.
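One way to picture how -i inputs reach the template is as a map of key=value pairs used as the template's data. The Go sketch below assumes that model; parseInputs is a hypothetical helper, not the Lilypad CLI's implementation. By the time the CLI sees the argument, the shell has already removed the single quotes, so it receives Message=Hello lilypad world.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// parseInputs turns repeated -i key=value arguments into template data.
// Splitting on the first "=" keeps any "=" inside the value intact. Keys are
// case-sensitive, so Message maps to .Message in the template.
func parseInputs(args []string) (map[string]string, error) {
	inputs := make(map[string]string, len(args))
	for _, arg := range args {
		key, value, ok := strings.Cut(arg, "=")
		if !ok || key == "" {
			return nil, fmt.Errorf("input %q is not in key=value form", arg)
		}
		inputs[key] = value
	}
	return inputs, nil
}

func main() {
	inputs, err := parseInputs([]string{"Message=Hello lilypad world"})
	if err != nil {
		fmt.Println(err)
		return
	}
	tmpl := template.Must(template.New("env").Parse(`MESSAGE={{ .Message }}`))
	var out strings.Builder
	if err := tmpl.Execute(&out, inputs); err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(out.String())
}
```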

And the end result is…

I've added a few more features to the final version of this Lilypad module, which we call Lilysay and which is available on GitHub. The final version also adds an Image= input option that lets you change the ASCII image from the command line.

Set up Lilypad and test it out

That covers what you need to know about building a containerised module to run on Lilypad. We would love to hear about your projects and ideas. Join the growing Lilypad community on Discord, share your thoughts on the network, and discuss the exciting things you want to build.


Written by

Steven DeveloperSteve Coochin


Chief Innovation Officer, Lilypad.tech. 30+ years developer, 10+ years data analyst, 10+ years developer relations.