When to Use Random Forest: A Comprehensive Guide

Amey Pote

Amey Pote

Introduction

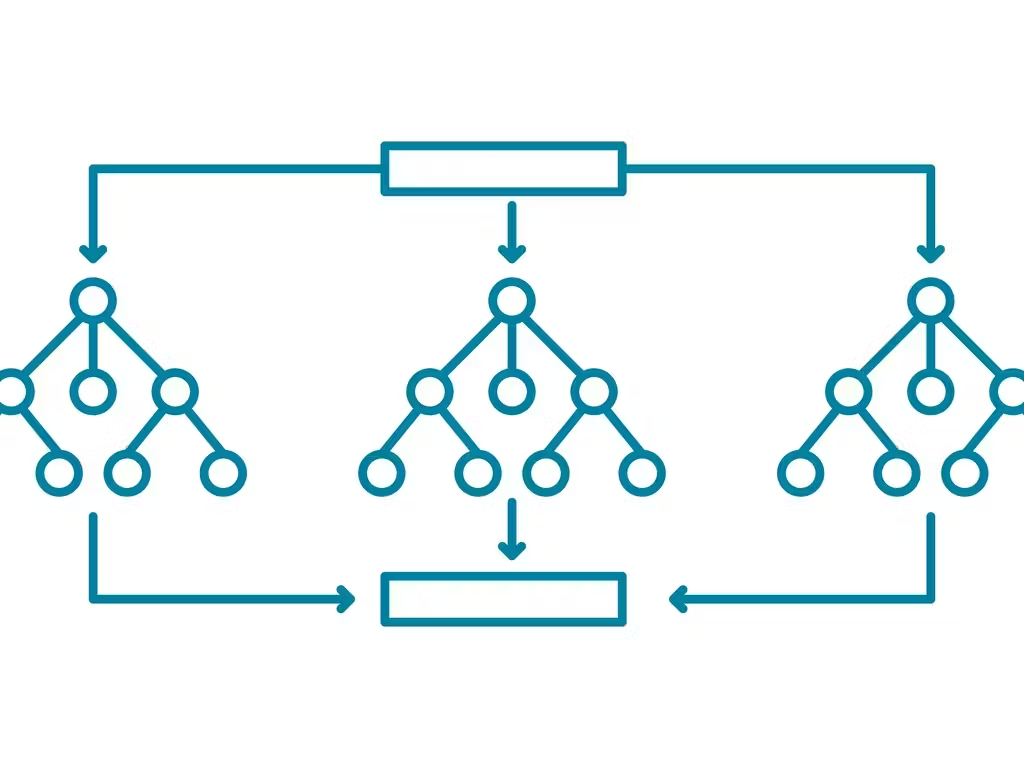

Random Forest is a powerful and versatile machine learning algorithm that excels in various scenarios, making it a popular choice among data scientists and machine learning practitioners. Here’s an in-depth look at when to use Random Forest, highlighting its strengths and limitations.

1. Handling Outliers

Random Forest, being an ensemble of decision trees, is inherently robust to outliers. Decision trees split the data into distinct regions and are less affected by extreme values compared to other algorithms like linear regression. This robustness makes Random Forest a suitable choice for datasets with outliers, as it reduces the impact of these anomalies on the model’s performance.

2. No Assumptions About Data Distribution

One of the key advantages of Random Forest is that it does not assume any specific underlying distribution for the data. This flexibility allows it to handle a wide variety of data types and distributions, making it a versatile tool for different kinds of predictive modelling tasks.

3. Handling Collinearity in Features

Random Forest can implicitly manage collinearity among features. When two or more features are highly correlated, the algorithm's information gain from splitting on one feature diminishes the predictive power of the others. Thus, Random Forest naturally accounts for feature collinearity, which can otherwise complicate model training and interpretation in linear models.

4. Feature Selection

Random Forest can be used effectively for feature selection. During training, it identifies and prioritizes the most important features by the number of times they are used for splitting or by their impact on reducing impurity. However, caution is needed when interpreting feature importance, especially in the presence of highly correlated features, as the importance scores might not accurately reflect the individual predictive power of each feature.

5. Interpreting Feature Relationships

If your primary goal is to understand the relationship between features and the target variable, Random Forest might not be the best choice. Algorithms like Logistic Regression or Lasso Regression are more suitable for understanding feature coefficients and their direct influence on the target variable. These models provide clearer insights into whether a feature has a positive or negative impact on the target and the magnitude of this effect.

6. Extrapolation Limitations

Random Forest is not ideal for extrapolation beyond the range of the training data. For example, if your training data includes house prices up to $700,000 and you need to predict the price of a house valued at $1,500,000, Random Forest will struggle as it has not seen similar data during training. Linear models, despite their simplicity, can extrapolate linearly beyond the training range, albeit with caution regarding accuracy.

7. Practical Applications

Here are some practical scenarios where Random Forest excels:

Classification Problems: Random Forest is particularly effective in classification tasks, such as spam detection, fraud detection, and medical diagnosis.

Regression Problems: It can also handle regression tasks, predicting continuous outcomes like house prices or temperature.

High-Dimensional Data: When working with datasets with a large number of features, Random Forest can effectively handle the complexity and reduce the risk of overfitting.

Conclusion

Random Forest is a versatile algorithm that offers robustness to outliers, flexibility in data distribution, and built-in mechanisms for handling collinearity and feature selection. However, it has limitations in interpretability and extrapolation. Understanding these strengths and weaknesses will help you determine when to use Random Forest and when to consider alternative models for your specific machine learning tasks.

Subscribe to my newsletter

Read articles from Amey Pote directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by