How to Choose an Activation Function

Sanika Nandpure

Now that we've discussed several activation functions, it is natural to ask: how do we choose which one to use? We will answer this question partially for now and fully in a future post, after introducing a few more concepts.

The modified question for this article is thus: how do we choose the activation function for the output layer?

There are three possibilities:

Binary classification: use sigmoid activation for neurons in the output layer

Regression (y can be positive or negative): use a linear activation, that is, no activation function (this applies whether the regression is univariate, multiple, or polynomial)

Regression (y can only be zero or positive): use ReLU

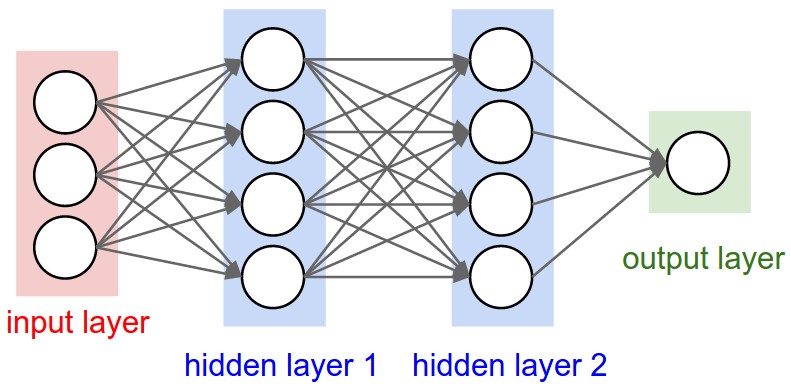
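As a rough illustration of the three cases above, here is a minimal sketch in plain Python. The task labels and the `output_activation` helper are hypothetical names chosen for this example, not part of any library.

```python
import math

def sigmoid(z):
    # Numerically stable sigmoid: squashes any real number into (0, 1).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def linear(z):
    # Linear activation: the output is passed through unchanged.
    return z

def relu(z):
    # ReLU: negative values become 0, so the output is never negative.
    return max(0.0, z)

# Hypothetical task labels mapped to the baseline output-layer activation.
OUTPUT_ACTIVATIONS = {
    "binary_classification": sigmoid,
    "regression_any_sign": linear,
    "regression_non_negative": relu,
}

def output_activation(task):
    # Look up the baseline activation for the output layer.
    return OUTPUT_ACTIVATIONS[task]
```

For instance, `output_activation("regression_non_negative")(-3.0)` goes through ReLU and can never come out negative, which matches a target such as a price or a count.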

Remember that for hidden layers, the activation function should be ReLU by default regardless of what the neural network does.

Note that there are other activation functions available, but these should work well as a baseline.
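To make the baseline concrete, the following sketch runs a forward pass through a tiny binary classifier with a ReLU hidden layer and a sigmoid output neuron. The weights, biases, and the `dense` and `predict` helpers are made-up values and names for illustration only; a real network would learn its weights during training.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    # Numerically stable sigmoid.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dense(inputs, weights, biases, activation):
    # One layer: each neuron computes activation(w . x + b).
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output neuron.
HIDDEN_W = [[0.5, -1.0], [1.5, 2.0]]
HIDDEN_B = [0.0, -1.0]
OUT_W = [[1.0, -1.0]]
OUT_B = [0.0]

def predict(x):
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)        # hidden layer: ReLU by default
    output = dense(hidden, OUT_W, OUT_B, sigmoid)      # output layer: sigmoid for binary classification
    return output[0]                                   # a value in (0, 1)

probability = predict([2.0, 1.0])
```

Swapping `sigmoid` for a linear or ReLU output in `predict` would turn the same network into a regression model for the other two cases.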

(?) QUESTION: Why can’t we just use linear activation for each neuron? What is the purpose of an activation function?

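If we use a linear activation (that is, no activation function) in every neuron, the whole network reduces to plain linear regression, as in traditional machine learning models, so there would be no advantage to using a neural network. More generally, using a linear activation in all the hidden layers makes the overall network equivalent to a single layer with the output layer's activation function: with a sigmoid output, for example, the network is just logistic regression. This happens because a linear function of a linear function is itself linear, so stacked linear layers collapse into one. Using nonlinear activation functions such as ReLU or sigmoid in the hidden layers prevents this collapse and lets each layer build on the features computed by the previous one, so the network can model nonlinear relationships.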
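The collapse of stacked linear layers can be checked numerically. The sketch below uses small made-up weight matrices (all values and helper names are assumptions for illustration) and shows that two linear layers give the same output as one combined linear layer, while a ReLU hidden layer does not.

```python
def matvec(W, x):
    # Matrix-vector product W @ x using plain lists.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    # Matrix-matrix product A @ B using plain lists.
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def relu_vec(v):
    return [max(0.0, a) for a in v]

# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output.
W1 = [[1.0, -2.0], [0.5, 3.0]]
b1 = [0.5, -1.0]
W2 = [[2.0, -1.0]]
b2 = [0.25]

def two_linear_layers(x):
    h = add(matvec(W1, x), b1)           # hidden layer with linear activation
    return add(matvec(W2, h), b2)        # output layer with linear activation

# W2 (W1 x + b1) + b2 = (W2 W1) x + (W2 b1 + b2): a single linear layer.
W_combined = matmul(W2, W1)
b_combined = add(matvec(W2, b1), b2)

def one_linear_layer(x):
    return add(matvec(W_combined, x), b_combined)

def relu_hidden_network(x):
    h = relu_vec(add(matvec(W1, x), b1)) # hidden layer with ReLU
    return add(matvec(W2, h), b2)

x = [1.0, 2.0]
same = abs(two_linear_layers(x)[0] - one_linear_layer(x)[0]) < 1e-9
different = abs(relu_hidden_network(x)[0] - one_linear_layer(x)[0]) > 1e-9
```

Here `same` confirms that the two-layer linear network is just one linear layer in disguise, and `different` confirms that inserting ReLU gives a function no single linear layer with these combined weights reproduces.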

Written by

Sanika Nandpure

I'm a second-year student at the University of Texas at Austin with an interest in engineering, math, and machine learning.