Multiclass Classification Using Softmax Activation

Sanika Nandpure


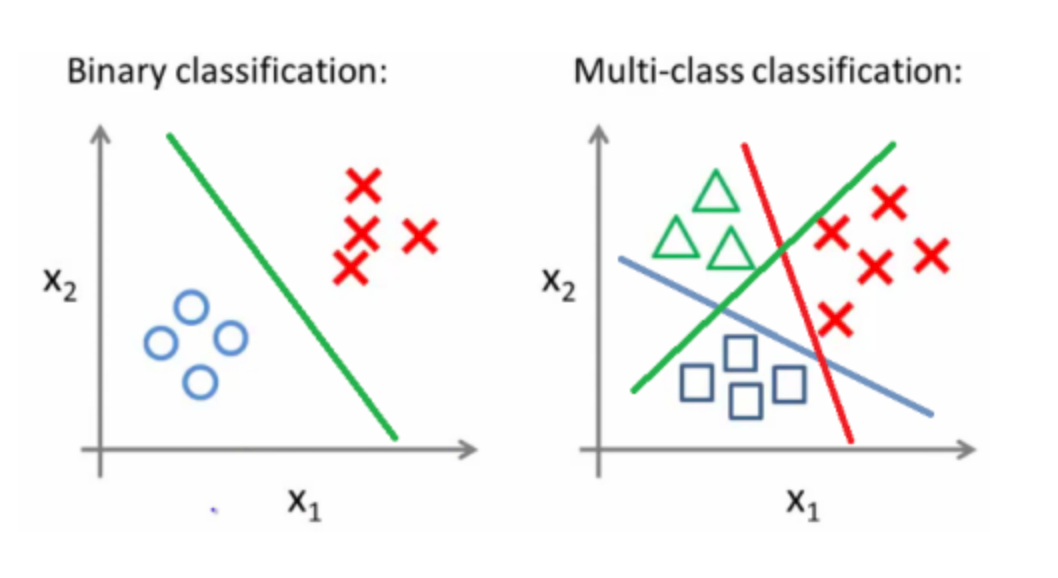

Multiclass classification is a problem in which the target y can take on more than two possible values. Just as we used logistic regression to sort data into two categories, we use softmax regression to sort data into more than two categories.

$$z_j = \mathbf{w}_j \cdot \mathbf{x} + b_j$$

where $j = 1, 2, \dots, N$ and $N$ is the number of categories.

In other words, each category j has its own weight vector $\mathbf{w}_j$ and its own bias $b_j$. The score $z_j$ computed from these parameters is then turned into the probability that the example belongs to category j, and the probabilities across all N categories must add up to 1.
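As a minimal sketch of the per-category scores, assuming plain Python lists for the parameters: `W[j]` holds the weight vector of category j and `b[j]` its bias. The function name `linear_scores` and the numbers are made up for illustration and do not come from any library.

```python
from typing import List


def linear_scores(x: List[float], W: List[List[float]], b: List[float]) -> List[float]:
    """Return [z_1, ..., z_N] with z_j = w_j . x + b_j.

    W[j] holds the weight vector of category j and b[j] its bias,
    so every category has its own parameters.
    """
    if len(W) != len(b):
        raise ValueError("need exactly one bias per weight vector")
    scores = []
    for w_j, b_j in zip(W, b):
        if len(w_j) != len(x):
            raise ValueError("each weight vector must match the input length")
        # Dot product of this category's weights with the input, plus its bias.
        scores.append(sum(w * x_i for w, x_i in zip(w_j, x)) + b_j)
    return scores


# Made-up parameters for N = 3 categories and 2 input features.
x = [1.0, 2.0]
W = [[0.5, -1.0], [1.0, 0.0], [-0.5, 0.5]]
b = [0.0, 1.0, 0.5]
print(linear_scores(x, W, b))  # [-1.5, 2.0, 1.0]
```

These raw scores can be any real numbers; softmax is what turns them into probabilities.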

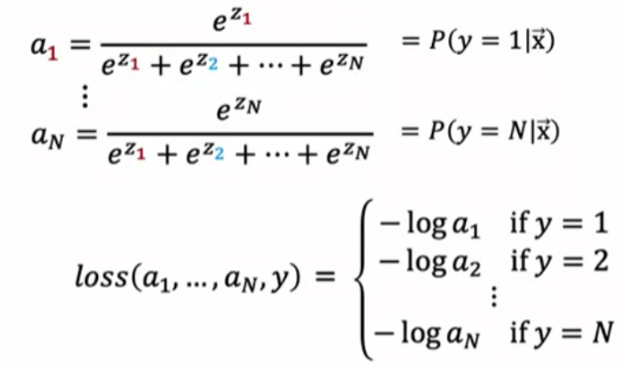

$$a_j = \frac{e^{z_j}}{\sum_{k=1}^{N} e^{z_k}} = P(y = j \mid \mathbf{x})$$

The probability $a_j$ that the output is category j is e raised to the computed $z_j$ for that category, divided by the sum of $e^{z_k}$ over all N categories (including category j itself).
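Here is a minimal pure-Python sketch of the softmax formula. Subtracting the largest score before exponentiating is a common numerical-stability trick, not something the formula itself requires: the shared factor $e^{-\max_k z_k}$ cancels between numerator and denominator, so the probabilities are unchanged, but `math.exp` no longer overflows for large scores.

```python
import math
from typing import List


def softmax(z: List[float]) -> List[float]:
    """Return [a_1, ..., a_N] with a_j = e^{z_j} / sum_k e^{z_k}."""
    m = max(z)
    # Shift every score by the maximum; the common factor cancels in the ratio.
    exps = [math.exp(z_j - m) for z_j in z]
    total = sum(exps)
    return [e / total for e in exps]


a = softmax([-1.5, 2.0, 1.0])
print([round(p, 3) for p in a])  # [0.022, 0.715, 0.263]
print(round(sum(a), 10))         # 1.0
```

The category with the largest score gets the largest probability, and the probabilities always sum to 1.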

Note that softmax regression with $N = 2$ categories reduces to logistic regression.
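One way to see this: dividing the numerator and denominator of $a_1$ by $e^{z_1}$ gives $a_1 = 1/(1 + e^{-(z_1 - z_2)})$, which is the sigmoid of $z_1 - z_2 = (\mathbf{w}_1 - \mathbf{w}_2) \cdot \mathbf{x} + (b_1 - b_2)$, that is, logistic regression with weights $\mathbf{w}_1 - \mathbf{w}_2$ and bias $b_1 - b_2$. The short check below compares the two numerically; the function names are illustrative.

```python
import math


def softmax_two_class(z1: float, z2: float) -> float:
    """Probability of category 1 when softmax is applied to two scores."""
    return math.exp(z1) / (math.exp(z1) + math.exp(z2))


def sigmoid(t: float) -> float:
    """The logistic function used by logistic regression."""
    return 1.0 / (1.0 + math.exp(-t))


# For each pair of scores, both columns should match (up to floating-point error).
for z1, z2 in [(2.0, -1.0), (0.3, 0.3), (-4.0, 1.5)]:
    print(f"{softmax_two_class(z1, z2):.6f}  {sigmoid(z1 - z2):.6f}")
```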

COST FUNCTION FOR SOFTMAX REGRESSION

For a single training example, the loss is:

$$\text{loss} = -\log(a_j) \quad \text{if } y = j$$

This is also known as cross-entropy loss; the version used for binary classification is called binary cross-entropy loss. Both follow the same idea: the smaller $a_j$ is (that is, the lower the predicted probability of the true category j), the higher the loss. Training therefore favors parameter values that maximize the predicted probability of the correct category for each training example.
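A minimal sketch of the loss and of the cost, assuming the cost is the average loss over the training set (the usual definition) and that categories are indexed from 0 in code rather than from 1 as in the math above. All function names are illustrative.

```python
import math
from typing import List, Sequence


def softmax(z: Sequence[float]) -> List[float]:
    """Softmax with the max subtracted for numerical stability."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_loss(z: Sequence[float], y: int) -> float:
    """loss = -log(a_y) with a = softmax(z); y is a 0-based category index."""
    return -math.log(softmax(z)[y])


def cost(Z: Sequence[Sequence[float]], Y: Sequence[int]) -> float:
    """Average loss over a training set: one score vector and one label per example."""
    return sum(cross_entropy_loss(z, y) for z, y in zip(Z, Y)) / len(Y)


scores = [-1.5, 2.0, 1.0]  # softmax(scores) is roughly [0.022, 0.715, 0.263]
for y in range(len(scores)):
    # The lower the predicted probability of the true category, the higher the loss.
    print(y, round(cross_entropy_loss(scores, y), 3))  # 3.835, 0.335, 1.335
print(round(cost([scores, scores], [1, 2]), 3))        # 0.835
```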

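Note that in TensorFlow, this multiclass cross-entropy loss is called SparseCategoricalCrossentropy when the labels are integer class indices (CategoricalCrossentropy computes the same loss for one-hot labels), and binary cross-entropy loss is called BinaryCrossentropy.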
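The two categorical names differ only in how the label is encoded. The plain-Python sketch below (not TensorFlow code; the helper names are hypothetical) shows that an integer label, the format SparseCategoricalCrossentropy expects, and the equivalent one-hot vector, the format CategoricalCrossentropy expects, give the same loss for the same predicted probabilities.

```python
import math
from typing import List, Sequence


def sparse_loss(probs: Sequence[float], label: int) -> float:
    """Integer label: take -log of the predicted probability of the true class."""
    return -math.log(probs[label])


def one_hot(label: int, num_classes: int) -> List[float]:
    """Encode an integer label as a vector with a single 1.0."""
    return [1.0 if k == label else 0.0 for k in range(num_classes)]


def categorical_loss(probs: Sequence[float], target: Sequence[float]) -> float:
    """One-hot target: -sum_k t_k * log(p_k), skipping the zero-weight terms."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)


probs = [0.1, 0.7, 0.2]  # made-up softmax output for 3 categories
label = 1
print(sparse_loss(probs, label), categorical_loss(probs, one_hot(label, 3)))
```

Because every entry of a one-hot vector except the true class is 0, only one term of the sum survives, which is exactly the single term the integer-label version computes.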

MULTI-LABEL CLASSIFICATION PROBLEM

This is a related but distinct problem. Given a picture, the model should label each object of interest that appears in the image, so a single input can have several labels at once.

One approach is to train a separate neural network for each object the model should detect. Alternatively, you can train a single neural network with multiple outputs (for example, three outputs for three kinds of objects), so that one model identifies all of them. In this case, the output layer has n neurons, one per object, where n is the number of different objects the model is expected to identify in the picture.
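A minimal sketch of the single-network approach, assuming (as is common for multi-label problems, though not stated above) that each of the n output neurons has its own sigmoid activation and that a probability of at least 0.5 counts as "object present". The scores and the object names in the comment are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(t: float) -> float:
    """Logistic function applied to one output neuron."""
    return 1.0 / (1.0 + math.exp(-t))


def multi_label_predict(z: Sequence[float], threshold: float = 0.5) -> List[bool]:
    """One independent yes/no decision per output neuron."""
    # Each output gets its own sigmoid, so the probabilities are independent
    # and do NOT have to sum to 1 (unlike softmax).
    return [sigmoid(z_k) >= threshold for z_k in z]


# Hypothetical output-layer scores for n = 3 objects, e.g. (car, bus, pedestrian).
z = [2.0, -1.0, 0.5]
probs = [sigmoid(v) for v in z]
print([round(p, 3) for p in probs])  # [0.881, 0.269, 0.622]
print(multi_label_predict(z))        # [True, False, True]
```

Here the three probabilities sum to about 1.77, which is expected: several objects can be present in the same picture.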



I'm a second-year student at the University of Texas at Austin with an interest in engineering, math, and machine learning.