other types of layers

Sanika Nandpure

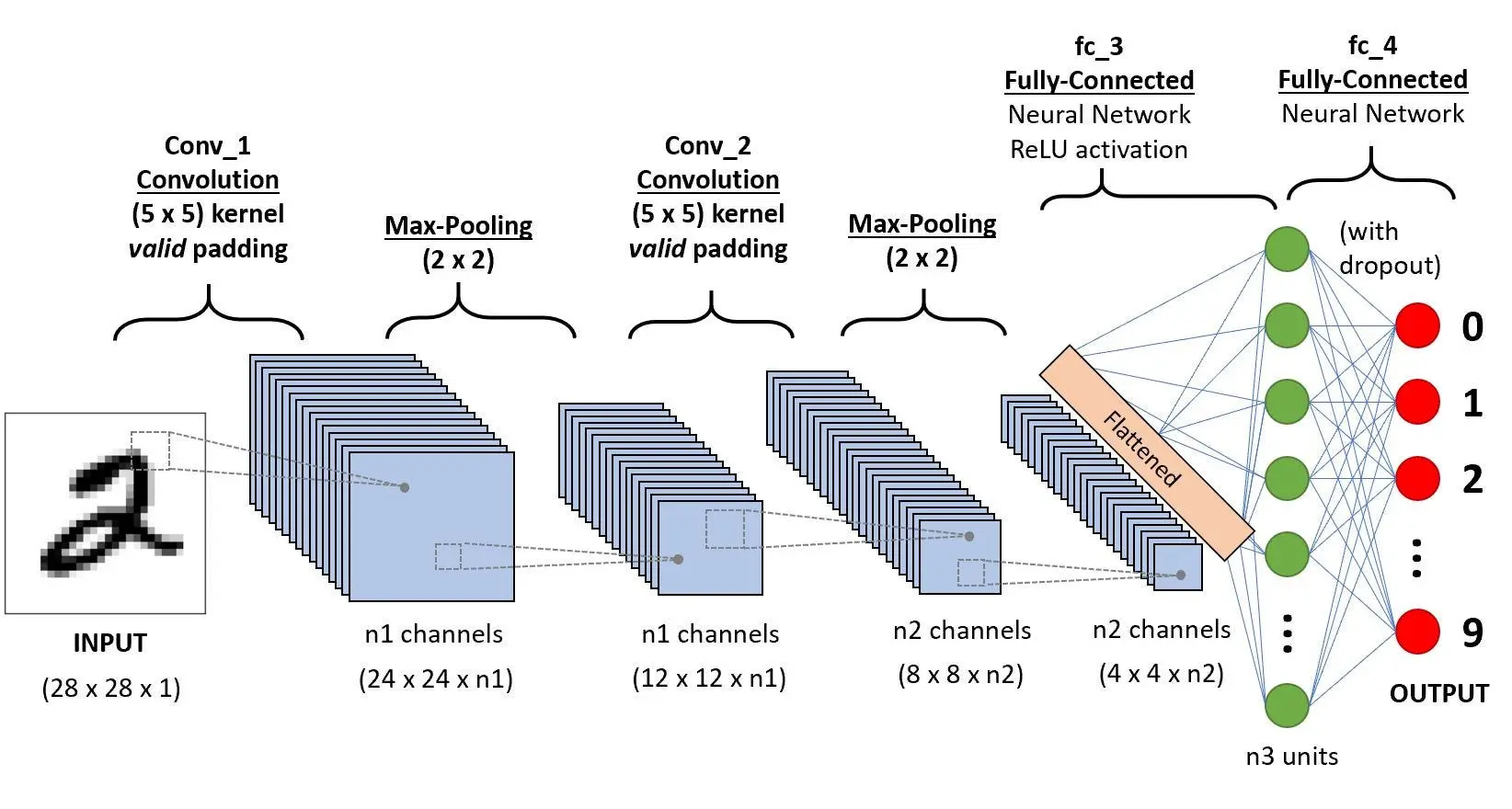

So far, we have looked at Dense layers, where the output of one Dense layer becomes the input of the next. In a Dense layer, each neuron's output is a function of all the activation outputs of the previous layer. The key word is "all". Not every type of layer works this way. Another type of layer you will commonly see is the convolutional layer. In a convolutional layer, each neuron looks at only a subset of the previous layer's outputs.
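To make the difference concrete, here is a minimal NumPy sketch comparing one Dense neuron with one convolutional neuron. The function names, the example values, and the all-ones weights are assumptions made for illustration, and the activation function is left out so the arithmetic stays easy to follow.

```python
import numpy as np

# Hypothetical activation outputs from the previous layer (8 values).
prev = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])

def dense_neuron(a, w, b):
    """Dense neuron: weighted sum over ALL previous activations."""
    return float(np.dot(w, a) + b)

def conv_neuron(a, w, b, start):
    """Convolutional neuron: weighted sum over one window of activations."""
    window = a[start:start + len(w)]
    return float(np.dot(w, window) + b)

dense_out = dense_neuron(prev, np.ones(8), 0.0)          # uses all 8 values
conv_out = conv_neuron(prev, np.ones(3), 0.0, start=2)   # uses only prev[2:5]

print(dense_out)  # 36.0
print(conv_out)   # 12.0
```

The Dense neuron needs 8 weights here, while the convolutional neuron needs only 3, one per value in its window.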

Why might this be useful?

It speeds up computation, because each neuron uses only some of the previous layer's outputs rather than all of them.

A convolutional neural network (CNN) can need less training data, because each neuron has fewer weights to learn.

For the same reason, a CNN is also less prone to overfitting.
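A rough way to see these benefits is to count the weights and biases a layer has to learn. The sketch below is only an illustration: the helper names and the sizes (100 inputs, 9 neurons, a window of 20) are assumptions, and it treats every neuron as having its own weights.

```python
def dense_param_count(n_inputs, n_units):
    """Each neuron has one weight per input, plus one bias."""
    return n_inputs * n_units + n_units

def windowed_param_count(window, n_units):
    """Each neuron has one weight per input in its window, plus one bias."""
    return window * n_units + n_units

dense_params = dense_param_count(n_inputs=100, n_units=9)
windowed_params = windowed_param_count(window=20, n_units=9)

print(dense_params)     # 909
print(windowed_params)  # 189
```

Fewer parameters means fewer multiplications per forward pass and less freedom for the model to memorize the training set. In practice, convolutional layers usually also share the same weights across windows, which lowers the count further.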

In CNNs, there are many design choices to make, such as how big a window of inputs each neuron looks at and how many neurons each layer should have. By making these choices wisely, we can build neural networks that are both powerful and efficient.
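These choices interact. As a sketch, suppose each neuron's window is shifted by a fixed step from the previous neuron's window. The step size (called `stride` here), the helper name, and the sizes are assumptions for illustration, not something fixed by the text above. The window size and stride then determine how many neurons fit in the layer.

```python
def window_ranges(n_inputs, window, stride):
    """Return (start, end) index pairs, end exclusive, one per neuron."""
    n_neurons = (n_inputs - window) // stride + 1
    return [(i * stride, i * stride + window) for i in range(n_neurons)]

# Hypothetical layer: 100 inputs, each neuron sees 20 of them,
# and consecutive windows are shifted by 10.
ranges = window_ranges(n_inputs=100, window=20, stride=10)

print(len(ranges))            # 9
print(ranges[0], ranges[-1])  # (0, 20) (80, 100)
```

A larger window or a smaller stride gives each input more chances to be seen, at the cost of more computation.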

Written by

Sanika Nandpure

I'm a second-year student at the University of Texas at Austin with an interest in engineering, math, and machine learning.