Istio v1.22: Trying latest Ambient mode

Srujan Reddy

Need for Istio

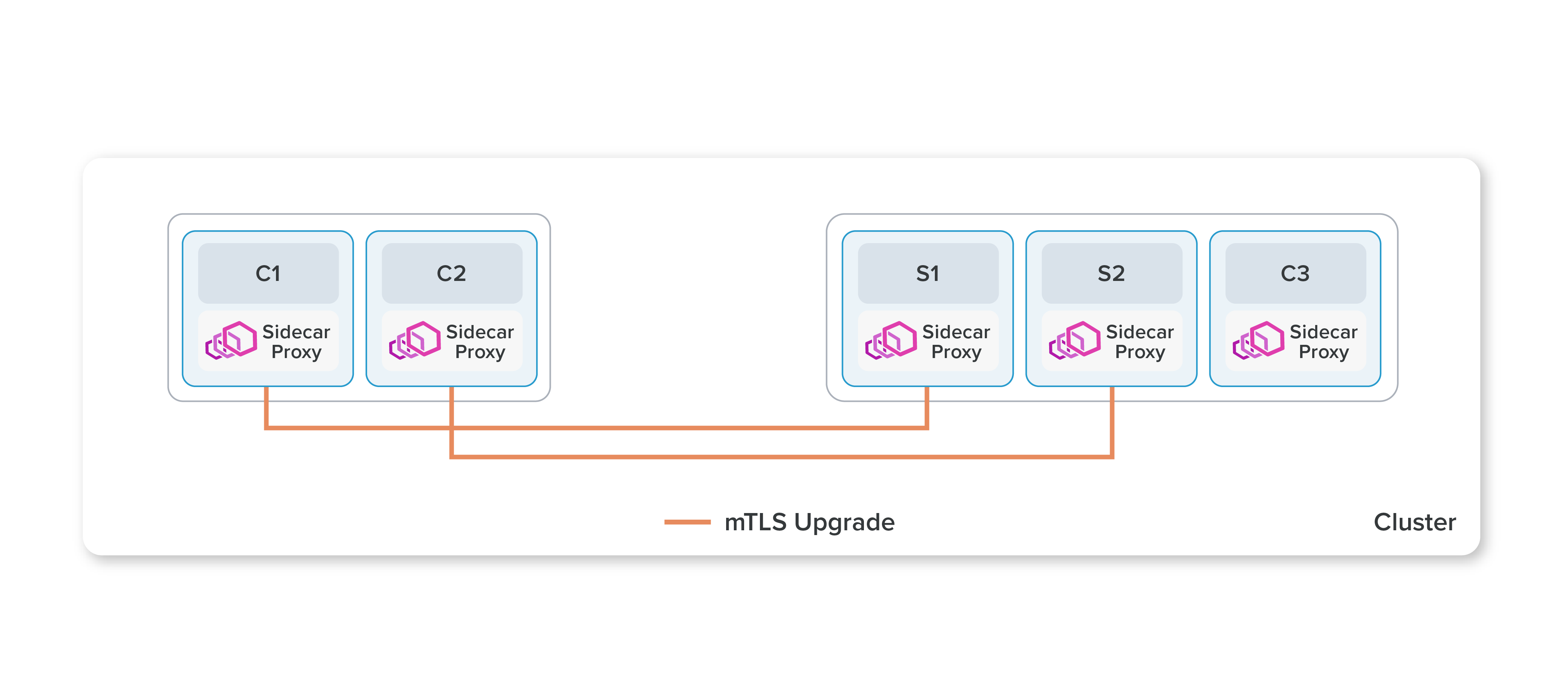

Istio is a service mesh for Kubernetes clusters. It offers features such as a zero-trust network using mTLS, traffic management, routing, and observability through Kiali. How does it do this? By injecting a lightweight sidecar container as a proxy into every pod, so that all traffic flows to and from these proxy containers. In layman's terms, if a Kubernetes cluster is a town with paved roads, Istio adds highways with traffic signals, CCTV cameras, and a centralized traffic HQ.

Limitations with Istio

The problem with Istio is that every sidecar container needs dedicated resources. Generally, 128Mi of memory and 100m of CPU are given to each Istio sidecar. Per pod that is minimal, but when you add it up across all the pods running in a cluster, it is a significant amount of resources.
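To put a number on that, here is a rough back-of-the-envelope sketch in shell. The cluster size of 1000 pods is a hypothetical figure, and the function name `sidecar_overhead` is made up for illustration; the per-sidecar values are the 128Mi and 100m mentioned above.

```shell
#!/usr/bin/env bash
# Rough estimate of the resources reserved by sidecars across a cluster.
# Assumption: every pod carries one sidecar with 128Mi memory and 100m CPU.
sidecar_overhead() {
  local pods="$1"
  local mem_mi=$(( pods * 128 ))   # total memory in Mi
  local cpu_m=$(( pods * 100 ))    # total CPU in millicores
  # Integer division, so the result is rounded down to whole units.
  echo "${pods} pods: $(( mem_mi / 1024 )) GiB memory, $(( cpu_m / 1000 )) CPU cores"
}

sidecar_overhead 1000   # 1000 is a hypothetical cluster size
```

With these assumptions, 1000 pods reserve about 125 GiB of memory and 100 CPU cores for proxies alone.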

The other problem is that traffic between pods has to make two extra hops, i.e. through the sidecar on the sending side and the sidecar on the receiving side, which adds latency. Yes, it is minimal (~10-20ms), but it still adds up. This article explores some more of it.

What is Istio Ambient Mesh

On the surface, it is just a new way of proxying without injecting sidecar containers. This ups Istio's game, bringing it on par with Cilium, which uses eBPF to manipulate traffic. Istio now separates the concerns of L4 and L7 proxying by using two different proxies. This revolutionises the way Istio is used.

For L4 proxying, Istio uses a node proxy called ztunnel. It is a DaemonSet deployed on every node that handles traffic in both directions and secures it using mTLS.

For L7 proxying, Istio uses waypoint proxy. It can be deployed as one for each service running in parallel with

Testing Istio in Ambient Mode

For this, I am following the test setup for ambient mode described in Istio's blog.

I have created a Kind cluster:

➜ terraform_projects git:(main) ✗ kind create cluster --config=- <<EOF

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

name: ambient

nodes:

- role: control-plane

- role: worker

- role: worker

EOF

Creating cluster "ambient" ...

✓ Ensuring node image (kindest/node:v1.29.2) 🖼

✓ Preparing nodes 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-ambient"

You can now use your cluster with:

kubectl cluster-info --context kind-ambient

Thanks for using kind! 😊

➜ terraform_projects git:(main) ✗ k get nodes

NAME STATUS ROLES AGE VERSION

ambient-control-plane Ready control-plane 5m58s v1.29.2

ambient-worker Ready <none> 5m34s v1.29.2

ambient-worker2 Ready <none> 5m34s v1.29.2

Now, to install Istio, I am using istioctl:

➜ ~ istioctl install --set profile=ambient

This will install the Istio 1.21.2 "ambient" profile (with components: Istio core, Istiod, CNI, and Ztunnel) into the cluster. Proceed? (y/N) y

✔ Istio core installed

✔ Istiod installed

✔ CNI installed

✔ Ztunnel installed

✔ Installation complete Made this installation the default for injection and validation.

If we check the pods installed in istio-system,

➜ ~ k get pods -n istio-system

NAME READY STATUS RESTARTS AGE

istio-cni-node-blxdv 1/1 Running 0 8m2s

istio-cni-node-k5q9p 1/1 Running 0 8m2s

istio-cni-node-nmg86 1/1 Running 0 8m2s

istiod-ff94b9d97-6tzfp 1/1 Running 0 8m17s

ztunnel-dsfdh 1/1 Running 0 7m28s

ztunnel-kwnz7 1/1 Running 0 7m28s

ztunnel-z78gf 1/1 Running 0 7m28s

Both ztunnel and the Istio CNI node agent are installed as DaemonSets. ztunnel proxies the traffic of the pods on its node, and the CNI node agent sets up the redirection so that traffic from pods in ambient-enabled namespaces goes through ztunnel, in effect forcing the traffic into ambient mode.

To test it, I installed the bookinfo application, along with the sleep and notsleep pods.

➜ ~ k apply -f https://raw.githubusercontent.com/istio/istio/release-1.22/samples/bookinfo/platform/kube/bookinfo.yaml

service/details created

serviceaccount/bookinfo-details created

deployment.apps/details-v1 created

service/ratings created

serviceaccount/bookinfo-ratings created

deployment.apps/ratings-v1 created

service/reviews created

serviceaccount/bookinfo-reviews created

deployment.apps/reviews-v1 created

deployment.apps/reviews-v2 created

deployment.apps/reviews-v3 created

service/productpage created

serviceaccount/bookinfo-productpage created

deployment.apps/productpage-v1 created

➜ ~ k get pods

NAME READY STATUS RESTARTS AGE

details-v1-cf74bb974-8bmhv 0/1 ContainerCreating 0 32s

productpage-v1-87d54dd59-2mxrt 1/1 Running 0 32s

ratings-v1-7c4bbf97db-cxmps 1/1 Running 0 32s

reviews-v1-5fd6d4f8f8-sfzv4 0/1 ContainerCreating 0 32s

reviews-v2-6f9b55c5db-9wh4k 0/1 ContainerCreating 0 32s

reviews-v3-7d99fd7978-jm5sr 0/1 ContainerCreating 0 32s

➜ ~ kubectl apply -f https://raw.githubusercontent.com/linsun/sample-apps/main/sleep/sleep.yaml

serviceaccount/sleep created

service/sleep created

deployment.apps/sleep created

➜ ~ kubectl apply -f https://raw.githubusercontent.com/linsun/sample-apps/main/sleep/notsleep.yaml

serviceaccount/notsleep created

service/notsleep created

deployment.apps/notsleep created

Ambient mode is enabled per namespace, and we haven't enabled it yet. At this point you can see that I can hit other pods from the sleep pod and get a response:

➜ ~ kubectl exec deploy/sleep -- curl -s http://productpage:9080/

<!DOCTYPE html>

<html>

<head>

<title>Simple Bookstore App</title>

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge">

<meta name="viewport" content="width=device-width, initial-scale=1">

After enabling ambient mode with kubectl label namespace default istio.io/dataplane-mode=ambient, I could see that the traffic now flows through ztunnel:

➜ ~ kubectl label namespace default istio.io/dataplane-mode=ambient

namespace/default labeled

➜ ~ kubectl exec deploy/sleep -- curl -s http://productpage:9080/ | head -n1

<!DOCTYPE html>

➜ ~ kubectl exec deploy/sleep -- curl -s http://productpage:9080/ | head -n5

<!DOCTYPE html>

<html>

<head>

<title>Simple Bookstore App</title>

<meta charset="utf-8">

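Here are the ztunnel logs for these requests. The destination port 15008 is ztunnel's HBONE tunnel port, and the SPIFFE identities on each side are the workload identities used for mTLS.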

2024-05-17T20:25:18.143503Z info access connection complete src.addr=10.244.1.4:42786 src.workload=sleep-97576b68f-ftqsh src.namespace=default src.identity="spiffe://cluster.local/ns/default/sa/sleep" dst.addr=10.244.2.8:15008 dst.hbone_addr=10.244.2.8:9080 dst.service=productpage.default.svc.cluster.local dst.workload=productpage-v1-87d54dd59-jvj79 dst.namespace=default dst.identity="spiffe://cluster.local/ns/default/sa/bookinfo-productpage" direction="outbound" bytes_sent=79 bytes_recv=1918 duration="128ms"

2024-05-17T20:25:19.755188Z info access connection complete src.addr=10.244.1.4:42796 src.workload=sleep-97576b68f-ftqsh src.namespace=default src.identity="spiffe://cluster.local/ns/default/sa/sleep" dst.addr=10.244.2.8:15008 dst.hbone_addr=10.244.2.8:9080 dst.service=productpage.default.svc.cluster.local dst.workload=productpage-v1-87d54dd59-jvj79 dst.namespace=default dst.identity="spiffe://cluster.local/ns/default/sa/bookinfo-productpage" direction="outbound" bytes_sent=79 bytes_recv=1918 duration="5ms"

2024-05-17T20:25:21.033103Z info access connection complete src.addr=10.244.1.4:42810 src.workload=sleep-97576b68f-ftqsh src.namespace=default src.identity="spiffe://cluster.local/ns/default/sa/sleep" dst.addr=10.244.2.8:15008 dst.hbone_addr=10.244.2.8:9080 dst.service=productpage.default.svc.cluster.local dst.workload=productpage-v1-87d54dd59-jvj79 dst.namespace=default dst.identity="spiffe://cluster.local/ns/default/sa/bookinfo-productpage" direction="outbound" bytes_sent=79 bytes_recv=1918 duration="7ms"
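These access log lines are long, so a small filter helps when scanning them. The sketch below assumes the `key=value` layout shown above and reads log lines from stdin; the function name `summarize_ztunnel_log` is made up for illustration.

```shell
#!/usr/bin/env bash
# Summarize ztunnel access log lines read from stdin.
# Prints: <source identity> -> <destination service> <duration>
# Assumption: fields are space-separated key=value pairs, as in the logs above.
summarize_ztunnel_log() {
  awk '
    /connection complete/ {
      src = dst = dur = ""
      for (i = 1; i <= NF; i++) {
        split($i, kv, "=")                      # kv[1] is the key
        val = substr($i, length(kv[1]) + 2)     # everything after "key="
        gsub(/"/, "", val)                      # drop surrounding quotes
        if (kv[1] == "src.identity") src = val
        if (kv[1] == "dst.service")  dst = val
        if (kv[1] == "duration")     dur = val
      }
      print src, "->", dst, dur
    }'
}

# Example usage (assumes the ztunnel pods carry the app=ztunnel label):
#   kubectl logs -n istio-system -l app=ztunnel | summarize_ztunnel_log
```

For the first log line above, this prints the sleep identity, the productpage service, and the 128ms duration on a single line.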

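Other than mTLS, you can set authorization policies for individual workloads. The policy below allows only the sleep and ingress gateway service accounts to reach productpage, so the same request from notsleep fails (curl exit code 56 means a failure while receiving network data).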

    apiVersion: security.istio.io/v1beta1
    kind: AuthorizationPolicy
    metadata:
      name: productpage-viewer
      namespace: default
    spec:
      selector:
        matchLabels:
          app: productpage
      action: ALLOW
      rules:
      - from:
        - source:
            principals: ["cluster.local/ns/default/sa/sleep", "cluster.local/ns/istio-system/sa/istio-ingressgateway-service-account"]

➜ ~ kubectl exec deploy/sleep -- curl -s http://productpage:9080/ | head -n1

<!DOCTYPE html>

➜ ~ kubectl exec deploy/notsleep -- curl -s http://productpage:9080/ | head -n1

command terminated with exit code 56
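The two checks above can be wrapped in a small helper when testing several clients. This is only a sketch: the function name `check_access` is made up, and it relies on kubectl exec returning the exit code of the command it ran. A non-zero code can also come from other failures (for example a missing deployment), so it is reported as "failed" rather than "denied".

```shell
#!/usr/bin/env bash
# Report whether a deployment in the current namespace can reach productpage.
# Assumption: kubectl exec exits with the exit code of the curl it runs.
check_access() {
  local deploy="$1"
  local rc=0
  kubectl exec "deploy/${deploy}" -- curl -s -o /dev/null http://productpage:9080/ || rc=$?
  if [ "$rc" -eq 0 ]; then
    echo "${deploy}: allowed"
  else
    echo "${deploy}: failed (exit ${rc})"
  fi
}

# Example usage:
#   check_access sleep
#   check_access notsleep
```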

By using an Istio gateway, you can bring up a waypoint proxy to do L7 load balancing. This creates waypoint proxy pods that are not attached to application pods but run as standalone pods.

Trying on EKS

I also tried the same demo on an EKS cluster, but the ztunnel DaemonSet did not come up.

➜ ~ k get pods -n istio-system

NAME READY STATUS RESTARTS AGE

istio-cni-node-26bn9 1/1 Running 0 5m27s

istio-cni-node-kzd82 1/1 Running 0 5m27s

istio-cni-node-x99n2 1/1 Running 0 5m27s

istiod-5888647857-d9vdh 0/1 Pending 0 5m22s

ztunnel-7qr8l 0/1 Pending 0 5m22s

ztunnel-pgpsn 0/1 Pending 0 5m22s

ztunnel-z2kvc 0/1 Running 0 5m22s
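In a longer listing it helps to filter out the healthy pods. The sketch below reads `kubectl get pods` output from stdin and assumes the default column layout shown above; the function name `not_ready_pods` is made up for illustration.

```shell
#!/usr/bin/env bash
# Print pods that are not fully ready, reading `kubectl get pods` output on stdin.
# Assumption: default columns NAME READY STATUS RESTARTS AGE, with a header row.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, r, "/")     # READY looks like "0/1"
    if ($3 != "Running" || r[1] != r[2]) print $1, $3, $2
  }'
}

# Example usage:
#   kubectl get pods -n istio-system | not_ready_pods
# For a Pending pod, the scheduling events are usually the next thing to check:
#   kubectl describe pod <pod-name> -n istio-system
```

Applied to the listing above, this would report istiod and all three ztunnel pods, including the one that is Running but not ready.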

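The ztunnel logs show that the pods are failing with an XDS client connection error. Note that istiod itself is Pending in the listing above, so ztunnel has no control plane to connect to, which would explain the refused connections.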

2024-05-17T17:33:58.934625Z warn xds::client:xds{id=29} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:34:13.939490Z warn xds::client:xds{id=30} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:34:28.943546Z warn xds::client:xds{id=31} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:34:43.947796Z warn xds::client:xds{id=32} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:34:58.950405Z warn xds::client:xds{id=33} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:35:13.955870Z warn xds::client:xds{id=34} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:35:28.960054Z warn xds::client:xds{id=35} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:35:43.964064Z warn xds::client:xds{id=36} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:35:58.968351Z warn xds::client:xds{id=37} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:36:13.972471Z warn xds::client:xds{id=38} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:36:28.975409Z warn xds::client:xds{id=39} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

2024-05-17T17:36:43.979856Z warn xds::client:xds{id=40} XDS client connection error: gRPC connection error:status: Unknown, message: "client error (Connect)", source: tcp connect error: Connection refused (os error 111), retrying in 15s

Conclusion

I believe this is Istio's attempt to stay relevant and address customer concerns, and it hits the nail on the head. I am looking forward to testing it further.

Written by

Srujan Reddy

I am a Kubernetes Engineer passionate about leveraging Cloud Native ecosystem to run secure, efficient and effective workloads.