Vext 1.9: Custom Input & Output Parameters, Slack Integration, OpenAI GPT-4o, and More

Edward Hu

We are excited to announce the release of Vext 1.9, packed with features that give you more control and flexibility over your AI projects (LLM pipelines). This update brings significant enhancements, including custom input and output parameters, Slack integration, an easier way to load a default system prompt, and support for OpenAI's GPT-4o. Let’s go through each of these new features and see how they can benefit your workflow.

Custom Input and Output Parameters

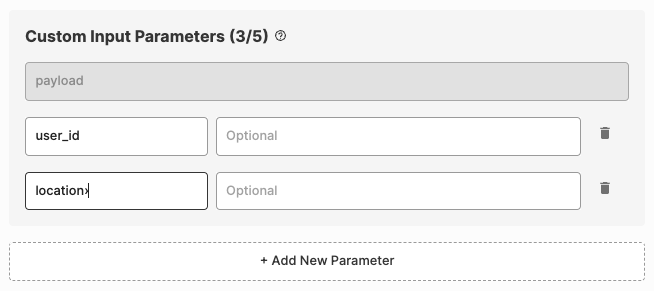

One of the headline features of Vext 1.9 is the ability to define your own custom input and output parameters. This lets you tailor your API calls to your specific needs, giving you more flexibility and precision in your AI interactions.

Custom Input Parameters

Previously, Vext only supported a single input parameter, "payload". Now, you can define up to four custom input parameters to bring more contextual information into your LLM pipeline. For instance, instead of just sending a payload like this:

{
  "payload": "Hello World!"
}

To send more context, open your project, click the "Input" action, and define your custom input parameters there. This example uses two: "user_id" and "location".

Note that you must configure these parameters before you can call the API with them.

Once they are configured, you can send a richer input with custom variables via an API call:

{
  "payload": "Hello World!",
  "custom_variables": {
    "user_id": "123456789",
    "location": "San Francisco, CA"
  }
}

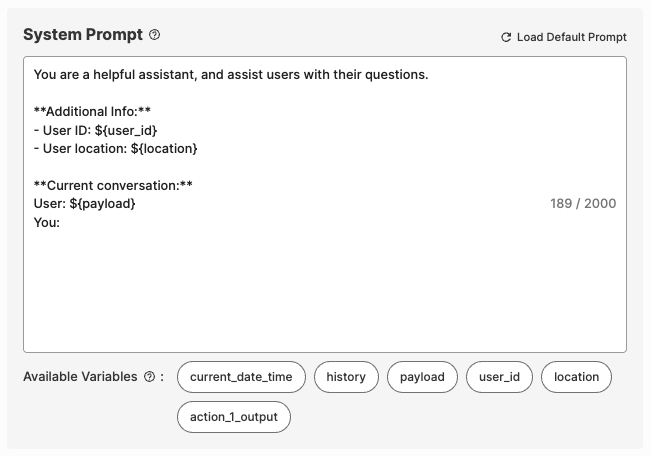
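Because custom variables have to be configured in the "Input" action before you call the API, it can help to check a request on the client side first. The sketch below is a hypothetical helper, not part of any Vext SDK: it assumes you keep your own set of configured parameter names, and it enforces the four-parameter limit described above. How Vext itself treats unconfigured variables is not covered in this post, so treat the check as a precaution rather than a mirror of server behavior.

```python
import json

# Vext 1.9 allows up to four custom input parameters per project.
MAX_CUSTOM_INPUTS = 4


def build_body(payload: str, custom_variables: dict[str, str], configured: set[str]) -> str:
    """Hypothetical helper: validate custom variables, then serialize the request body.

    `configured` is an assumption: the parameter names you defined in the
    project's "Input" action, tracked on your side.
    """
    if len(custom_variables) > MAX_CUSTOM_INPUTS:
        raise ValueError(f"at most {MAX_CUSTOM_INPUTS} custom variables are supported")
    unknown = set(custom_variables) - configured
    if unknown:
        raise ValueError(f"not configured in the Input action: {sorted(unknown)}")
    return json.dumps({"payload": payload, "custom_variables": custom_variables})


body = build_body(
    "Hello World!",
    {"user_id": "123456789", "location": "San Francisco, CA"},
    configured={"user_id", "location"},
)
print(body)
```

Catching the mismatch locally gives you a clear error message before any network call is made.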

You can then reference these variables in any action within the pipeline, such as the system prompt of an LLM action.

At execution time, the resulting system-level prompt will look something like this:

You are a helpful assistant, and assist users with their questions.

**Additional Info:**

- User ID: 123456789

- User location: San Francisco, CA

**Current conversation:**

User: Hello World!

You:
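To make the substitution step concrete, here is a minimal sketch of how custom variables could be filled into a system prompt template like the one above, using Python's standard string.Template. The "${...}" placeholder syntax is an assumption for illustration only; Vext's own variable syntax inside actions may differ, and the template text simply mirrors the example prompt.

```python
from string import Template

# The "${name}" placeholders are an assumption for illustration only;
# Vext's own variable syntax inside actions may differ.
SYSTEM_PROMPT = Template(
    "You are a helpful assistant, and assist users with their questions.\n"
    "\n"
    "**Additional Info:**\n"
    "- User ID: ${user_id}\n"
    "- User location: ${location}\n"
    "\n"
    "**Current conversation:**\n"
    "User: ${payload}\n"
    "You:"
)


def render_prompt(payload: str, custom_variables: dict[str, str]) -> str:
    """Fill the template from the request; substitute() raises KeyError if a variable is missing."""
    return SYSTEM_PROMPT.substitute(custom_variables, payload=payload)


print(render_prompt("Hello World!", {"user_id": "123456789", "location": "San Francisco, CA"}))
```

Using substitute() rather than safe_substitute() means a missing variable fails loudly instead of leaking a raw placeholder into the prompt.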

Here’s a cURL example of the API call with custom variables:

curl -X POST \
  -H 'Content-Type: application/json' \
  -H 'Apikey: Api-Key <API_KEY>' \
  -d '{
    "payload": "Hello World!",
    "custom_variables": {"user_id": "123456789", "location": "San Francisco, CA"}
  }' \
  'https://payload.vextapp.com/hook/$(endpoint_id)/catch/$(channel_token)'
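For reference, here is a rough Python equivalent of the cURL call using only the standard library. The function names are hypothetical, and endpoint_id, channel_token, and api_key stand in for the same placeholders as in the cURL command. build_vext_request only constructs the request, so you can inspect it without network access; send performs the actual POST and assumes the endpoint returns JSON.

```python
import json
import urllib.request


def build_vext_request(
    endpoint_id: str,
    channel_token: str,
    api_key: str,
    payload: str,
    custom_variables: dict[str, str],
) -> urllib.request.Request:
    """Hypothetical helper mirroring the cURL example; builds but does not send the request."""
    url = f"https://payload.vextapp.com/hook/{endpoint_id}/catch/{channel_token}"
    body = json.dumps({"payload": payload, "custom_variables": custom_variables}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "Apikey": f"Api-Key {api_key}"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """POST the request and decode the JSON response (assumes the endpoint returns JSON)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


request = build_vext_request(
    "ENDPOINT_ID", "CHANNEL_TOKEN", "API_KEY",
    "Hello World!", {"user_id": "123456789", "location": "San Francisco, CA"},
)
# result = send(request)  # uncomment with real credentials to call the live endpoint
```

Splitting construction from sending keeps the request easy to log or unit-test before it touches the live endpoint.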

This enhancement allows you to bring in additional data such as user location, preferences, or any other contextual information, making your AI interactions more robust and precise.

You can learn more about how to use this in the help center article.

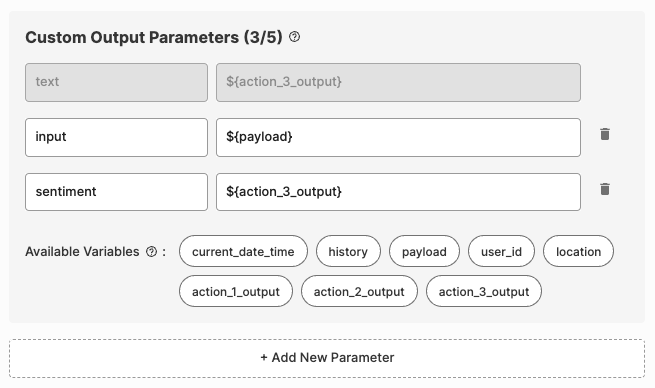

Custom Output Parameters

Custom output parameters let you define up to four custom output fields. Previously, the output was limited to a single "text" field. Now you can capture multiple pieces of information from different stages of your LLM pipeline, or even directly from the custom input parameters you defined earlier.

For example, if one of your project's actions (say, action #3) is an LLM configured to determine the sentiment of a user query, you can set up a custom output variable to capture its result.

To set this up, open your project, click the "Output" action, and add your custom output fields. In this example, "input" returns the original payload and "sentiment" returns the result of action #3.

When you then call this project's API, the response will look like this:

{
  "text": "Hello, how can I assist you today?",
  "input": "Hello World!",
  "sentiment": "happy"
}
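On the client side, one way to handle the new response shape is to keep "text" separate and treat every other key as a custom output. The sketch below assumes "text" is always present and that any remaining keys are the custom output fields you configured; VextResponse and parse_response are hypothetical names, not part of a Vext SDK.

```python
import json
from dataclasses import dataclass, field


@dataclass
class VextResponse:
    """Hypothetical client-side view of a Vext 1.9 response."""
    text: str
    custom_outputs: dict[str, object] = field(default_factory=dict)


def parse_response(raw: str) -> VextResponse:
    """Split the built-in "text" field from the custom output fields (assumes "text" is present)."""
    data = json.loads(raw)
    text = data.pop("text")
    return VextResponse(text=text, custom_outputs=data)


resp = parse_response(
    '{"text": "Hello, how can I assist you today?", "input": "Hello World!", "sentiment": "happy"}'
)
print(resp.custom_outputs.get("sentiment"))  # prints: happy
```

Keeping custom outputs in a dictionary means adding or renaming a field in the "Output" action does not require changing the parsing code.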

This feature allows you to output more granular and detailed information from your AI processes, enhancing your ability to analyze and respond to user interactions.

You can learn more about how to use this in the help center article.

Slack Integration

You can now connect your Vext projects directly to your Slack workspace. This integration allows you to add the Vext bot to selected channels, authenticate your account, and engage with your internal teams seamlessly.

By integrating Vext with Slack, you can streamline communication and ensure that your team can interact with your customized AI directly within their daily workflow. Whether it's for sending updates, receiving notifications, or querying your internal knowledge base, the Slack integration makes it easier than ever to stay connected and informed.

Load Default System Prompt

We have added a new feature that allows you to load a system prompt template with a single click. Instead of creating a new project from scratch or copying from the help center, you can now quickly load a default system prompt and start customizing it to fit your needs.

This feature is designed to save you time and help you get up and running faster, making it ideal for both new users and seasoned professionals looking to streamline their workflow.

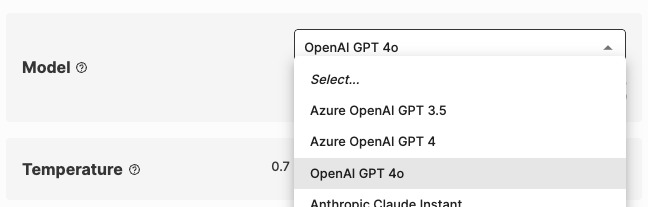

OpenAI GPT-4o

Another major highlight of Vext 1.9 is support for OpenAI's GPT-4o. This latest model from OpenAI is known for its speed, with performance rivaling GPT-4. You can now select GPT-4o directly within Vext to power your AI projects.

Note that GPT-4o in Vext is provided directly by OpenAI, not through Azure.

Written by

Edward Hu

I'm the cofounder and CEO of Vext Technologies, an out-of-the-box RAG & LLM platform that fast-tracks custom AI development with your data.