Using The Phi3 SLM Locally In Microsoft Fabric For GenAI

Sandeep Pawar

MS Build kicked off today and it was dominated by AI and Copilot (as expected), including the exciting AI Skills in Fabric. Microsoft also announced its small but very capable Phi-3 family of models. These "small" language models (3.8-14B parameters, 4K and 128K context lengths) punch above their weight, thanks to a focus on the quality of the data used to train them. The mini variant is only 2.2GB in size and is available for ONNX Runtime with CPU support, which means we can use it in a Fabric notebook!

In this blog I show how you can use the microsoft/Phi-3-mini-4k-instruct model locally in your notebook, without any extra setup or subscriptions. As you test this model, you will see how good it is. I also settle the debate on why pineapple on pizza is great (not sure why it's even a debate :P)

Steps

Install the ONNX Runtime GenAI package (onnxruntime-genai)

#install the onnxruntime-genai package (pre-release build)

!pip install --pre onnxruntime-genai --quiet
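Because `--pre` installs whichever pre-release is newest on the day you run it, it can help to record the version you ended up with. The snippet below is a minimal sketch using only the standard library; `installed_version` is a hypothetical helper name, not part of onnxruntime-genai.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Prints the version string, or None if the install step has not been run yet
print("onnxruntime-genai:", installed_version("onnxruntime-genai"))
```

If the rest of the notebook stops working after a later reinstall, comparing this value against the one from a working run is a quick first check.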

Create a directory to save the model. Be sure to mount a lakehouse first.

#create a directory for the model

import os

import shutil

import onnxruntime_genai as og

model_path = '/lakehouse/default/Files/phi3mini'

#Mount a lakehouse first, otherwise /lakehouse/default does not exist

if not os.path.exists(model_path):

    #makedirs also creates any missing parent folders

    os.makedirs(model_path)

    print(f"model will be downloaded to {model_path}")

else:

    print(f"{model_path} exists")
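If no lakehouse is attached, the directory creation above fails with a fairly generic error. A minimal sketch of a more explicit check follows; `ensure_model_dir` and its `mount_root` parameter are hypothetical names introduced here for illustration, and the default mount path is assumed to be the same `/lakehouse/default` used in this post.

```python
import os


def ensure_model_dir(model_path: str, mount_root: str = "/lakehouse/default") -> bool:
    """Create model_path if needed.

    Returns True if the directory was created, False if it already existed.
    Raises FileNotFoundError if mount_root is missing (no lakehouse attached).
    """
    if not os.path.isdir(mount_root):
        raise FileNotFoundError(
            f"{mount_root} not found; attach a default lakehouse to the notebook first"
        )
    already_there = os.path.isdir(model_path)
    os.makedirs(model_path, exist_ok=True)
    return not already_there


# Usage in a Fabric notebook (commented out so the sketch runs anywhere):
# ensure_model_dir("/lakehouse/default/Files/phi3mini")
```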

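Download the model from Hugging Face. This will take ~30 seconds. Note that --local-dir is a relative path (it starts with ./), so the files first land in a temporary folder under the notebook's working directory, not in the lakehouse itself. The copy step that follows moves them into the lakehouse.

!huggingface-cli download microsoft/Phi-3-mini-4k-instruct-onnx --include "cpu_and_mobile/cpu-int4-rtn-block-32-acc-level-4/*" --local-dir ./lakehouse/default/Files/phi3mini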

Copy the downloaded files to a directory in the lakehouse

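#copy files to a lakehouse from the temp directory

#source is <working directory>/lakehouse/default/Files/phi3mini (where huggingface-cli saved the files)

source_dir = os.path.abspath(".") + model_path

#destination is the mounted lakehouse path

destination_dir = model_path

try:

    shutil.copytree(source_dir, destination_dir, dirs_exist_ok=True)

    print("Directory copied successfully.")

except Exception as e:

    print(f"Error occurred: {e}")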

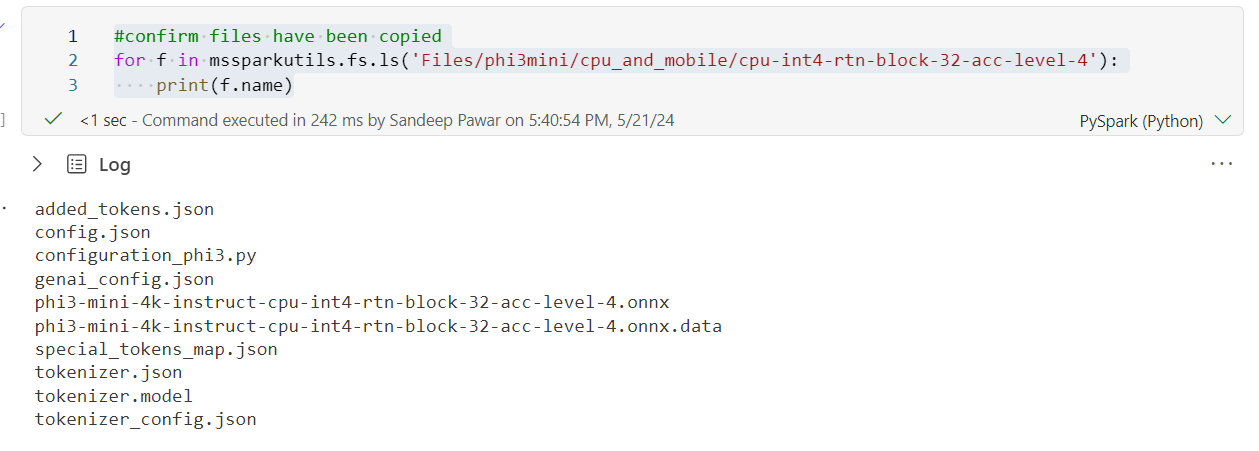
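The source path in the copy step is built with plain string concatenation, and that is deliberate: `model_path` is absolute, so `os.path.join` would throw away the working directory. The sketch below illustrates the difference; `/tmp/work` is a made-up working directory, and `posixpath` is used so the result is the same on any machine (it is what `os.path` resolves to on Linux).

```python
import posixpath

model_path = "/lakehouse/default/Files/phi3mini"  # absolute path, as in this post
cwd = "/tmp/work"  # hypothetical working directory, for illustration only

# String concatenation keeps both parts, which is what the copy step relies on
concatenated = cwd + model_path

# join discards everything before an absolute component
joined = posixpath.join(cwd, model_path)

# Stripping the leading slash makes join behave like the concatenation
joined_relative = posixpath.join(cwd, model_path.lstrip("/"))

print(concatenated)     # /tmp/work/lakehouse/default/Files/phi3mini
print(joined)           # /lakehouse/default/Files/phi3mini
print(joined_relative)  # /tmp/work/lakehouse/default/Files/phi3mini
```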

Confirm the model files have been downloaded

#confirm files have been copied

for f in mssparkutils.fs.ls('Files/phi3mini/cpu_and_mobile/cpu-int4-rtn-block-32-acc-level-4'):

    print(f.name)
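The same check can be done with plain Python against the mounted path, which also shows file sizes so a truncated download is easy to spot. This is a minimal sketch, not the method used in the notebook; `list_files_with_sizes` and `print_listing` are hypothetical helper names.

```python
import os
from typing import List, Tuple


def list_files_with_sizes(root: str) -> List[Tuple[str, int]]:
    """Return (relative_path, size_in_bytes) for every file under root, sorted by path."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            found.append((os.path.relpath(full, root), os.path.getsize(full)))
    return sorted(found)


def print_listing(root: str) -> None:
    """Print one line per file with its size in megabytes."""
    for rel_path, size in list_files_with_sizes(root):
        print(f"{size / 1e6:10.1f} MB  {rel_path}")


# Usage in a Fabric notebook (commented out so the sketch runs anywhere):
# print_listing("/lakehouse/default/Files/phi3mini")
```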

Now initialize the model, provide a system instruction and a prompt, and watch how well this little model performs.

#initialize the model

model = og.Model(model_path+'/cpu_and_mobile/cpu-int4-rtn-block-32-acc-level-4')

#tokenize

tokenizer = og.Tokenizer(model)

tokenizer_stream = tokenizer.create_stream()

#set params

search_options = {"max_length": 1024,"temperature":0.6}

params = og.GeneratorParams(model)

params.try_graph_capture_with_max_batch_size(1)

params.set_search_options(**search_options)

#provide instructions and prompts

instruction = "You are a renowned poet known for his playful poems"

prompt = " Write a poem to convince someone why pineapple on pizza is awesome"

prompt = f"<|system|>{instruction}<|end|><|user|>{prompt}<|end|><|assistant|>"

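#tokenize prompt

input_tokens = tokenizer.encode(prompt)

params.input_ids = input_tokens

#generate response one token at a time and stream it to the output

#note: these calls match the pre-release onnxruntime-genai API available when this was written; newer releases may have changed them

generator = og.Generator(model, params)

while not generator.is_done():

    generator.compute_logits()

    generator.generate_next_token()

    #batch size is 1, so take the first (only) sequence's new token

    new_token = generator.get_next_tokens()[0]

    print(tokenizer_stream.decode(new_token), end='', flush=True)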

Here is the response I got:

In a land where flavors collide, A culinary adventure, side by side, A tale of tastes, a sweet and savory ride, A pineapple on pizza, a delightful stride.

A golden crown of fruit, a tropical sight, Glistening under the kitchen's warm light, A slice of paradise, a flavor so bright, Pineapple on pizza, a taste bud' fearsome fight.

Sweet and tangy, a flavor explosion, A symphony of tastes, a flavor fusion, A pizza slice, a pineapple's illusion, A culinary masterpiece, a gastronomic revolution.

Crispy crust, a foundation so firm, A bed for the fruit, a taste to affirm, A pineapple's sweetness, a flavor to confirm, A pizza slice, a culinary term.

Skeptics may scoff, critics may jeer, But taste buds will sing, and hearts will cheer, For the pineapple on pizza, a flavor so clear, A delicious delight, a culinary pioneer.

So here's to the pineapple, a fruit so bold, On a pizza slice, a story untold, A taste so unique, a flavor to behold, Pineapple on pizza, a culinary gold.

So come, take a bite, let your taste buds dance, In this flavorful world, give it a chance, Pineapple on pizza, a culinary romance, A taste so divine, a flavor so grand

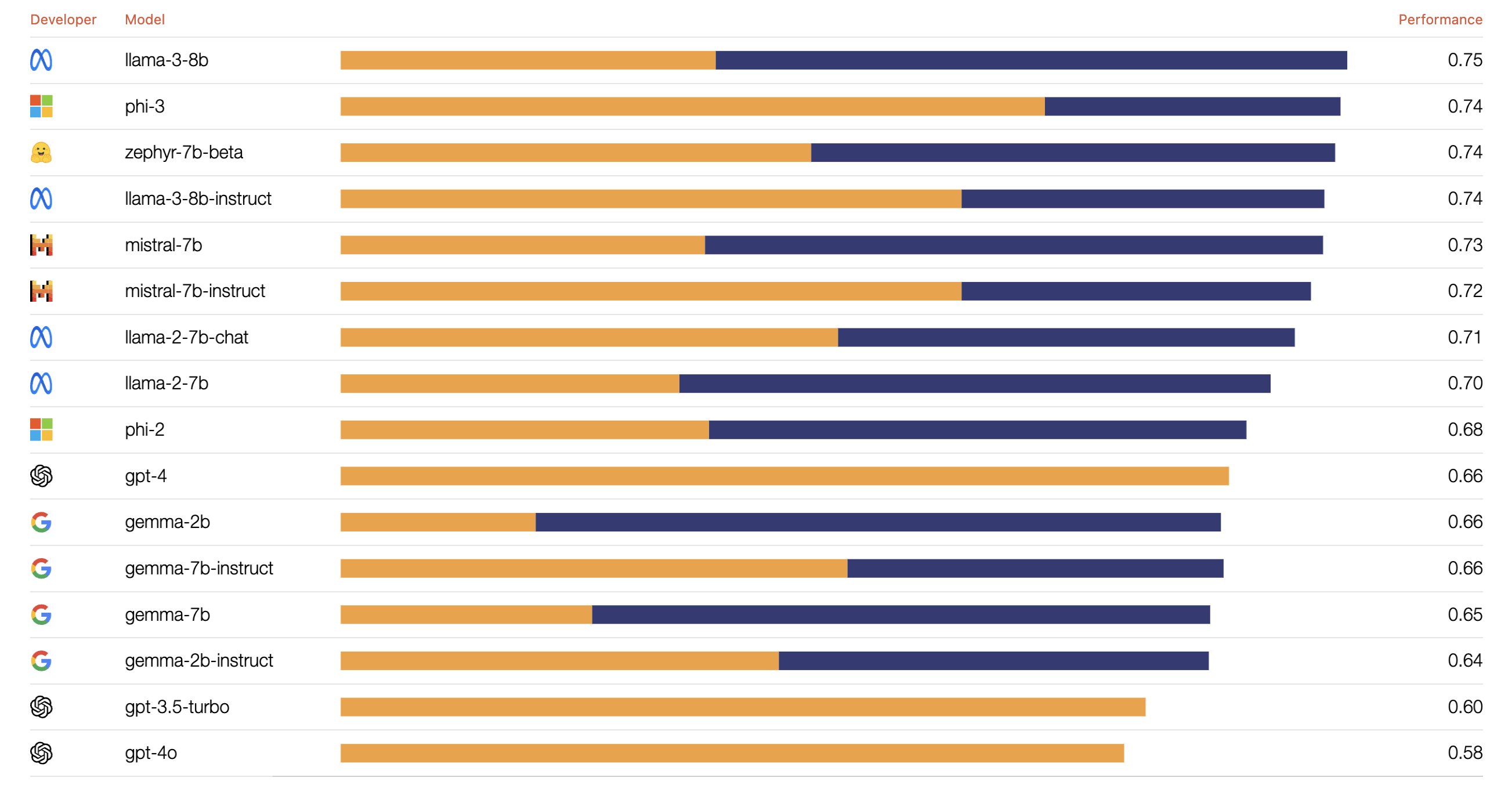
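The prompt string in the generation cell follows the Phi-3 chat layout: an optional `<|system|>` turn, a `<|user|>` turn, each closed with `<|end|>`, and a trailing `<|assistant|>` tag that tells the model to start answering. If you plan to try many prompts, a small helper keeps the tags consistent. This is a minimal sketch; `build_phi3_prompt` and its `sep` parameter are hypothetical names, and `sep` exists only because some published Phi-3 examples put a newline after each tag (an assumption worth checking against the model card).

```python
from typing import Optional


def build_phi3_prompt(
    user_message: str,
    system_message: Optional[str] = None,
    sep: str = "",
) -> str:
    """Assemble a single-turn Phi-3 style prompt.

    With the default sep="" the output matches the f-string used in this post.
    """
    parts = []
    if system_message:
        parts.append(f"<|system|>{sep}{system_message}<|end|>{sep}")
    parts.append(f"<|user|>{sep}{user_message}<|end|>{sep}")
    parts.append(f"<|assistant|>{sep}")
    return "".join(parts)


prompt = build_phi3_prompt(
    "Write a poem to convince someone why pineapple on pizza is awesome",
    system_message="You are a renowned poet known for his playful poems",
)
print(prompt)
```

The returned string can be passed to `tokenizer.encode` exactly like the hand-written prompt.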

You are certainly not going to use these models in Fabric to write poems, so what are the applications? These small models are great for fine-tuning on industry-, company- or task-specific data. Since they are so small, you can use them in Fabric easily. Predibase ranks the Phi-3 models higher than most other base models in their class.

I will write a blog on fine-tuning! Stay tuned.

You can download the notebook from here.

Written by

Sandeep Pawar

Microsoft MVP with expertise in data analytics, data science and generative AI using Microsoft data platform.