How to Deploy a .NET 8 API Using Docker, AWS EC2, and NGINX

Alex Taveras-Crespo

Alex Taveras-CrespoIn this article, I will guide you through deploying a .NET 8 Web API using Docker on an AWS EC2 instance. We'll cover the prerequisites, setting up the EC2 instance, installing necessary dependencies, running the Docker container, configuring a domain/subdomain, setting up NGINX as a reverse proxy, and enabling HTTPS with Certbot. By the end, you'll have a fully deployed API with no monthly hosting costs.

This is Part 2 of an ongoing series I'm writing regarding .NET 8 deployment. Part 1 can be accessed here, or on my profile.

If you followed along with Part 1, you have a working .NET 8 API with a Dockerfile in your repository. You can use that Dockerfile to create an image and run a Docker container, alongside the configuration variables. In this part, we will configure the EC2 instance that will run our dockerized API.

Prerequisites

To begin this article, there are some things you should have:

A .NET 8 Web API, with a Dockerfile in the root of the repo, checked into GitHub.

A working Docker installation

A way to SSH into the EC2 instance. I use the terminal, but an SSH client such as PuTTY or SmarTTY works, same with the EC2 instance connect in the AWS portal.

An AWS account, preferably new so that your free tier lasts a full year

A new domain name, or subdomain of an existing domain. You can buy them extremely cheap off of Porkbun or NameCheap.

Getting Started

What's EC2? Why are we using it?

EC2, formally known as Elastic Compute Cloud, is a cloud computing platform that allows us to rent virtual machines (VMs) on which to run anything from web servers to websites!

The reason why I'm using AWS EC2 over something like Lambda, Fargate, API Gateway, or Elastic Beanstalk is because of the perfect pairing between EC2 and Docker. This pairing makes the deployment process so much easier than other solutions - plus, you get to have full control over your resources.

Creating our EC2 Instance

Now, let's begin configuring our EC2 instance. You can pick whichever region you'd like - personally I am going to select us-east-2.

Navigate to the EC2 console, then select the Launch instance button. Give your instance a unique name.

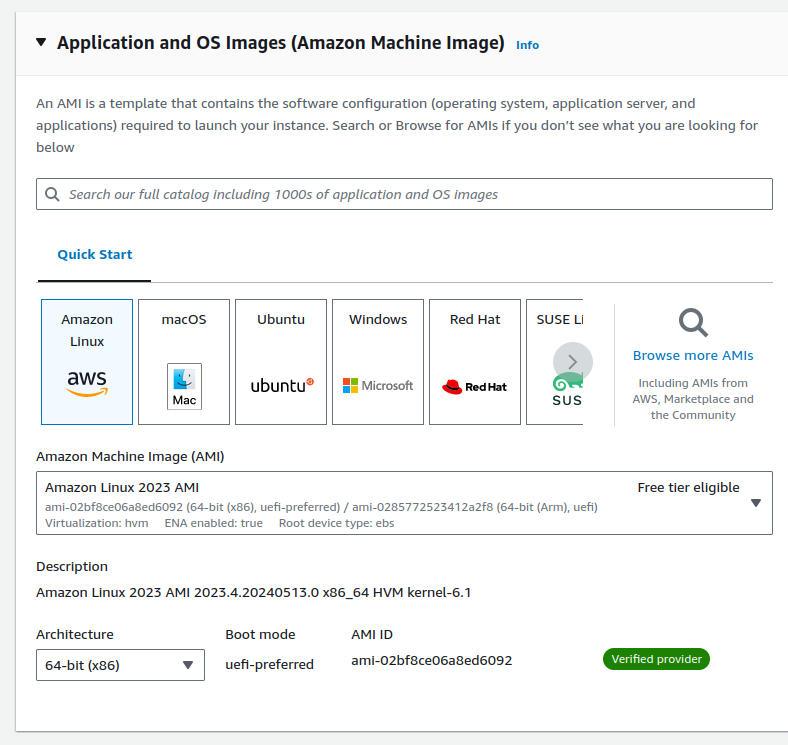

Select Amazon Linux 2023. It's based on Fedora, and I find it's the most reliable of the options here.

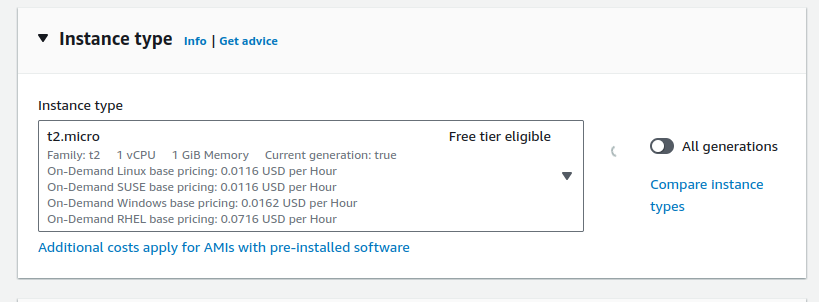

Ensure that you're using a t2.micro instance. Anything else is not free tier eligible, and will incur charges on your account.

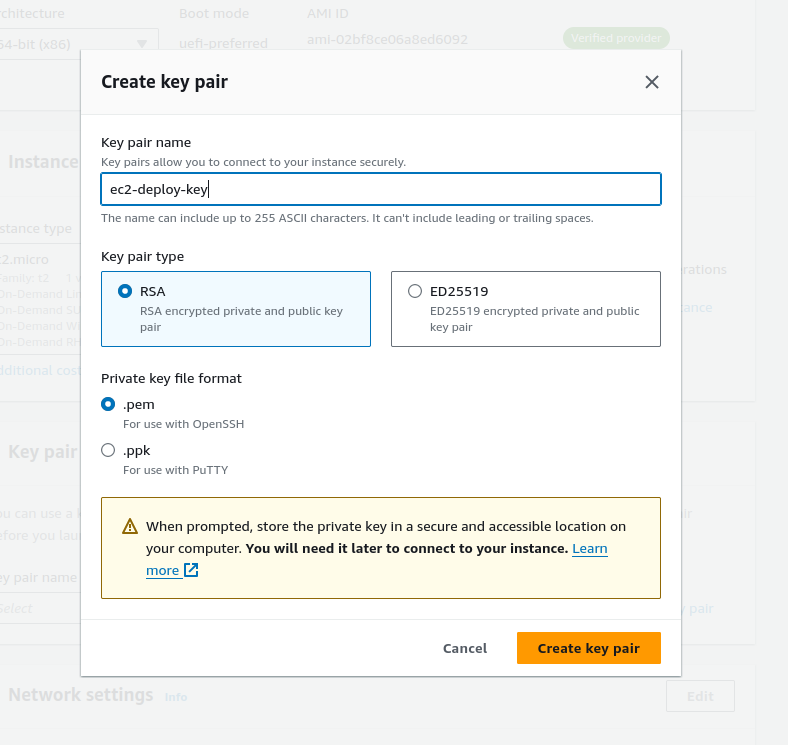

Make a key-pair for connecting to the EC2 instance via SSH. I'm on Linux, so I'm creating a .pem file. If you're using PuTTY or SmarTTY on Windows, make sure to create a .ppk file instead. Save the resulting file in a secure, easy to access location (I store mine in ~/dev/keys)

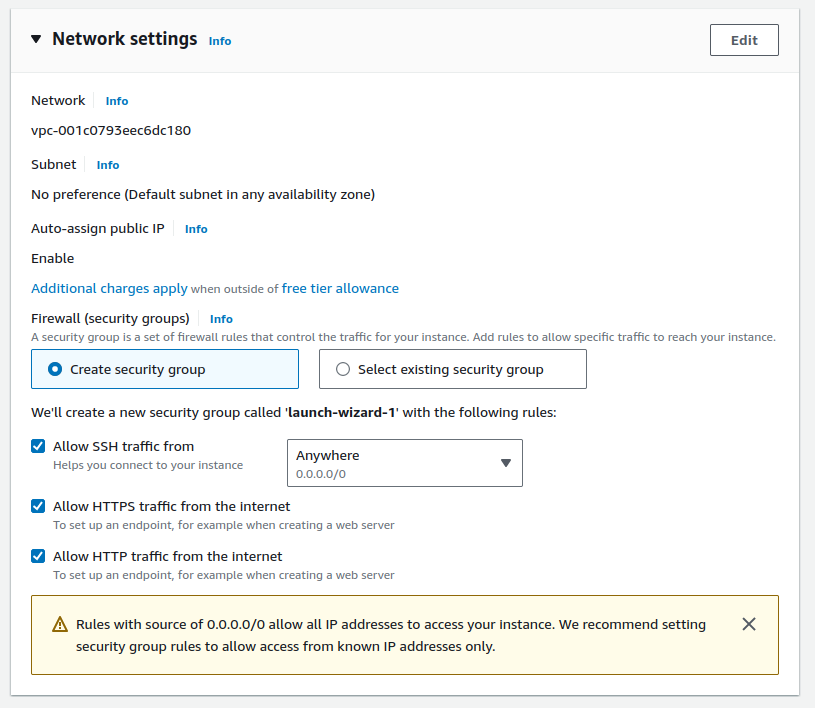

We'll let the instance setup a Virtual Private Cloud, or a VPC, which lets us launch AWS resources in an isolated virtual network. This will also setup a new Security Group. For now, set Allow SSH traffic from to be Anywhere, and enable the Allow HTTPS traffic from the internet and Allow HTTP traffic from the internet options. Leaving the SSH connection open to the entire internet is not the best idea, but we can fix that at the end. Finish the setup via the wizard.

Configuring our EC2 Instance

Now that our instance has been created, let's launch the instance by going to the EC2 console, navigate to the Instances tab on the left navbar, select Instances, then select our instance. Here, grab the public IPv4 address for SSH use.

Note: I will not be associating an Elastic IP with this EC2 instance. As of 2024, Elastic IPs incur a small charge (roughly $2.00 a month).

Earlier, we configured a key-value pair for SSH connection earlier. We're now going to use that to connect to our EC2 instance. I'm using Linux, so that's what I'll cover, but there are several tutorials on how to do this specifically on Windows, either with Powershell or an SSH client. Alternatively, you could just connect via the EC2 Instance Connect portal, and skip all of this.

SSH into EC2 Instance

First, I'll set the proper permissions on the key file.

chmod 400 /path/to/your/key/name.pem

Once those are set, we can then go ahead and SSH into our EC2 instance.

ssh -i /path/to/your/key/name.pem ec2-user@<public IP address of EC2 instance>

For reference, here's what my command looks like:

ssh -i ~/dev/keys/ec2-deploy-key.pem ec2-user@3.22.98.244

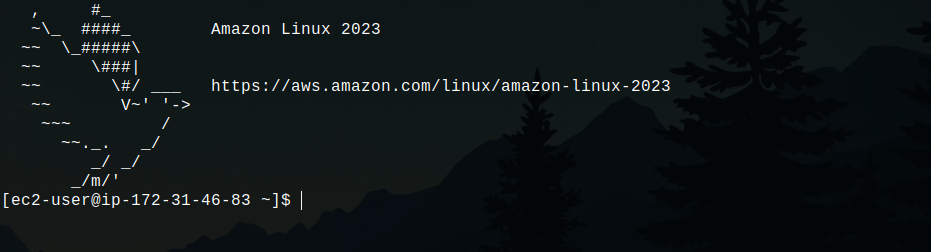

Downloading EC2 Dependencies

If everything went well, you should find yourself connected to your EC2 instance! Now there are a few important things we need to install here.

First, we'll install Docker which will allow us to run containers on our EC2 instance:

sudo yum update

sudo yum install docker -y

sudo systemctl start docker

#verify installation

docker --version

Once that's installed, we'll move onto installing Git so that we can clone the API repository with your code and Dockerfile:

sudo yum install git -y

# verify installation

git --version

git config --global user.name <Your Name>

git config --global user.email <your_email@example.com>

# verify config

git config user.name

git config user.email

Running the Docker Container

Now that we've installed Docker and Git, we're ready to get this container up and running!

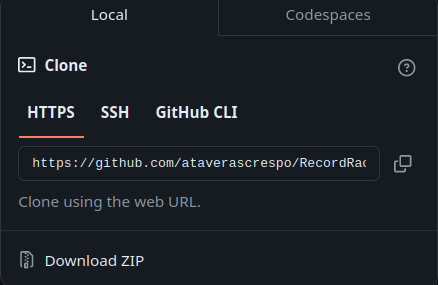

First, let's pull our repository down into our EC2 instance. You can put this in any path you like, I'll put it in ~/repos.

Get the clone HTTPS url from your GitHub repo, then run:

git clone <url>

This will pull the repository into your EC2 instance. cd into the new repository folder, then run:

sudo su(this switches you to the root user, makes it easier to run commands)

docker build -t your-image -f Dockerfile .

Once the image is build, let's create your container:

docker run -d -p 5184:5184 --name container_name your-image -e <your variables>

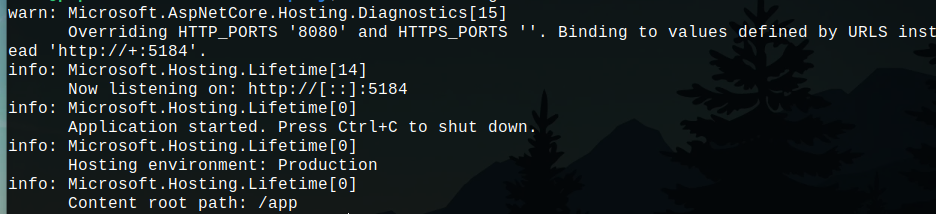

Now, if you followed the steps in Part 1 of this series, this Docker container should be running with no issue. You can verify that by running:

docker ps to see all running containers, then run:

docker logs container_name to see the logs of your container. For a .NET 8 API, you should see something like:

This means the dockerized app is running properly!

Using a Domain (or subdomain)

As mentioned in the prerequisites, I'm assuming you have access to either a domain or subdomain. For this, I'll be using a subdomain of my personal domain name, which is registered through NameCheap. Steps may vary for other platforms.

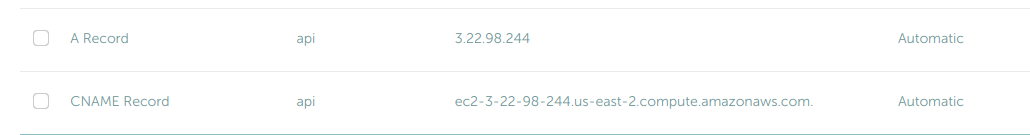

In your domain settings, create a new A Record, with the host pointing to either the root of the domain (@) or a valid subdomain (i.e api). The value will be the Public IPv4 address in your EC2 instance's details tab.

Then create a new CNAME Record, again with the host with the host pointing to either the root of the domain (@) or a valid subdomain (i.e api). The value will be the Public IPv4 DNS in your EC2 instance's details tab.

Whatever domain/subdomain you choose to use, make sure you select the same one for both the A and CNAME records.

Installing NGINX as a Reverse Proxy

Now that we have our EC2 instance pointing to a valid domain or subdomain, we are going to install NGINX as a reverse proxy.

A reverse proxy is a server that sits between client devices and web servers, forwarding client requests to the appropriate backend server and then delivering the server's response back to the clients.

NGINX will listen on a specific port (80 for HTTP, 443 for HTTPS) and forward the request to our dockerized API. Let's get that installed now.

SSH back into your EC2 instance, and run the following commands:

sudo yum update -y

sudo yum install nginx -y

sudo systemctl start nginx

This installs NGINX onto our EC2 instance and starts the service. Now, we'll modify the configuration file for NGINX to setup reverse proxying.

sudo nano /etc/nginx/nginx.conf

We'll use nano to modify the configuration file. Modify the server block to add the following reverse proxy settings:

server {

listen 80;

listen [::]:80;

server_name <YOUR_DOMAIN>;

location / {

proxy_pass http://<EC2_PRIVATE_IPV4_ADDRESS>:5184;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection 'upgrade';

proxy_set_header Host $host;

proxy_cache_bypass $http_upgrade;

}

}

This allows us to listen on port 80 for IPv4 and IPv6 addresses. Replace <YOUR_DOMAIN> with your full domain/subdomain url (i.e api.domain.com), and <EC2_PRIVATE_IPV4_ADDRESS> with the private IPv4 address found in the EC2 instance details tab.

sudo nginx -t

sudo systemctl restart nginx

Now we have the HTTP protocol working, let's enable the HTTPS protocol.

SSL Certification with Certbot

SSL certificates are what allow websites to use the HTTPS protocol, which encrypts data between the browser and server. We want to use the HTTPS protocol for security practices, so let's enable it for our API:

sudo python3 -m venv /opt/certbot

sudo /opt/certbot/bin/pip install --upgrade pip

This uses a Python virtual environment to upgrade our pip version and prepare Certbot for installation. Certbot is a tool that assigns our server an SSL certificate via LetsEncrypt, which sets it up an HTTPS server.

Now, let's install Certbot, and verify the installation:

sudo /opt/certbot/bin/pip install certbot certbot-nginx

sudo ln -s /opt/certbot/bin/certbot /usr/bin/certbot

Now, run the following command to obtain a certificate:

sudo certbot --nginx

Go through all of the steps provided by the setup wizard, and eventually Certbot will provision a certificate and modify the NGINX configuration. And we now have HTTPS enabled! Try reaching your API at https:// then the name of your domain. You could also do some Postman testing to ensure your endpoints can be reached.

Updating the Container

To update the instance whenever you make changes to the codebase - you'll have to:

SSH into the EC2 instance

Navigate to the API repo path

Pull the latest changes

Build a new Docker image

Stop the current container

Start the container again with the new Docker image

EC2 Security Group Changes

Earlier, we set up the EC2 instance to accept SSH traffic from all IPs. This is not great security practice, so let's tighten that up.

Go to the EC2 console, select your instance then select the Security tab. This will show you all of your inbound and outbound rules. Select your group under Security groups, then in the new page, navigate to the Inbound rules tab and select Edit inbound rules.

Update the SSH inbound rule to My IP. That means that SSH traffic can only come from local machines at your IP address, rather than the entirety of the internet.

Conclusion

After following all of the steps, you now have a fully deployed .NET 8 Web API. Congrats!

Next Steps

The two next steps of this series are more or less optional.

Part 3 is a guide on implementing CI/CD for a .NET 8 API. It's optional because not everybody needs CI/CD on their project - maybe you're deploying a project or assignment you've finished. If your use case supports it, I would recommend following part 3.

Part 4 is a guide on setting up a database on AWS, for your API to save some data into. This one is also 'optional' - you are probably storing data in some database, and it doesn't have to be hosted through AWS. However, if you're looking to find a simple, free and effective solution, it's a great guide to follow.

Subscribe to my newsletter

Read articles from Alex Taveras-Crespo directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by