Deploying React App on K8s

Devarsh

Introduction

Hello Everyone,

To give a little context: we've been working on this series, and so far we've dockerized the React application using multi-stage Dockerfiles and Docker Compose.

Today, we're going to deploy this React website on Kubernetes. So if, fortunately, the traffic increases in production, we can scale up the React application by increasing the number of pods.

What should you expect from this blog?

You'll get your hands dirty with Pods, Deployments, and Services.

In this blog, we're using the ClusterIP and NodePort Service types of Kubernetes.

We will be leveraging AWS EKS in the next blog, and at that time we will use a LoadBalancer Service to access our application from anywhere we want.

Let's start

Creating Manifest files

We will directly create Deployment files, which will ultimately create the pods.

Why aren't we creating the pod directly? Or, why are we creating a Deployment?

Suppose the pod crashes due to heavy traffic or gets deleted by human error. The React application will go down.

So, to prevent this from happening, we'll create a Deployment, which comes with many benefits. For example, we can scale it if the traffic on our website increases.

If any pod goes down, the Deployment auto-heals: it recreates the pod and keeps the desired number of pods alive.
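To watch both behaviours in action, here is a minimal sketch. It assumes the frontend Deployment named ocean-web-deployment (with the label app=ocean-web, as defined in the manifest later in this blog) is already applied and that kubectl points at our minikube cluster. The function names are our own helpers, not part of Kubernetes.

```shell
# Scale the frontend Deployment to the given number of replicas.
scale_frontend() {
  kubectl scale deployment/ocean-web-deployment --replicas="$1"
}

# Delete every frontend pod; the Deployment should recreate them.
delete_frontend_pods() {
  kubectl delete pod -l app=ocean-web
}

# List the frontend pods so we can watch them come back.
list_frontend_pods() {
  kubectl get pods -l app=ocean-web
}
```

After loading these functions into a shell, running `scale_frontend 3` followed by `list_frontend_pods` should show three pods. After `delete_frontend_pods`, the Deployment should bring up replacements on its own.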

As we have a three-tier architecture (frontend, backend, and database), we'll be creating three separate Deployment and Service files.

Configuring Frontend

Using ClusterIP Service

Let's create a Deployment file for Frontend using the Docker Image (which is public): devarsh10/ocean-web

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ocean-web-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ocean-web
  template:
    metadata:
      labels:
        app: ocean-web
    spec:
      containers:
        - name: ocean-web
          image: devarsh10/ocean-web:0.1
          ports:
            - containerPort: 3000
          command: ["npm","start"]
---
apiVersion: v1
kind: Service
metadata:
  name: ocean-web-service
spec:
  selector:
    app: ocean-web
  ports:
    - protocol: TCP
      port: 3000
      targetPort: 3000
```

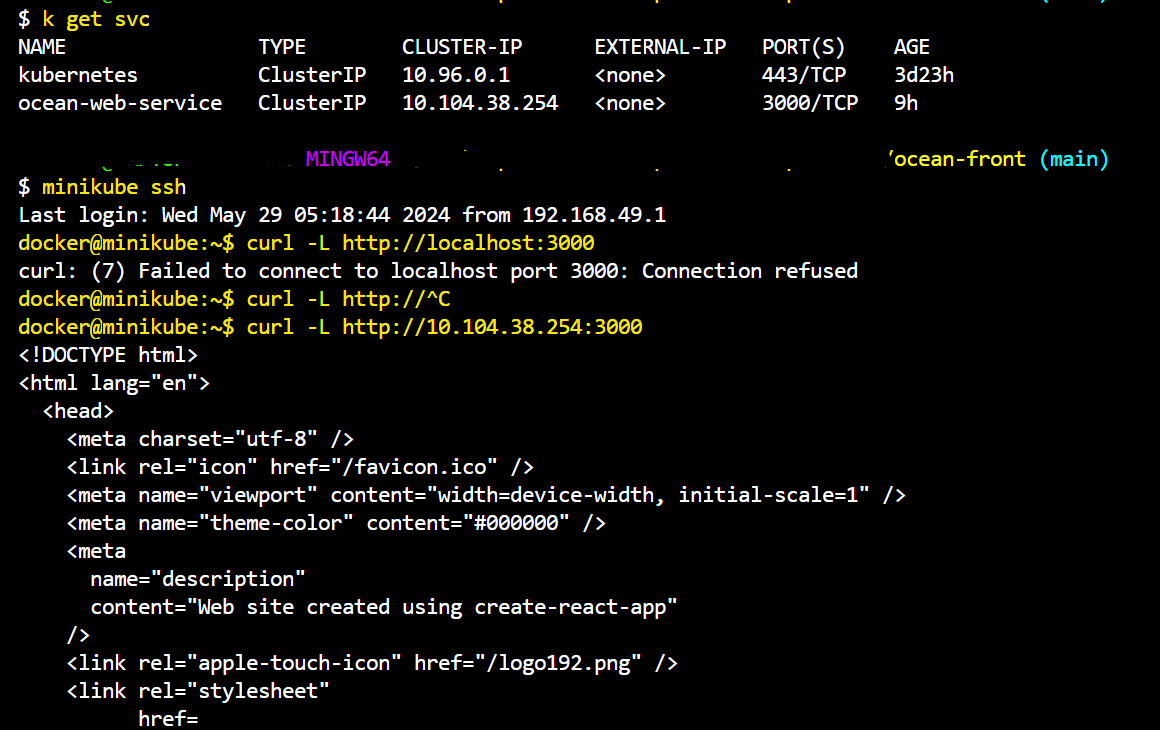

Here, we're using a ClusterIP Service. As this React application is running on the minikube cluster, we have to forward the port to access the React app from our PC.
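A minimal sketch of that step, assuming the manifest above was saved as frontend.yaml (a file name we picked for illustration) and that the helper names are our own:

```shell
# Apply a manifest file to the current cluster.
apply_manifest() {
  kubectl apply -f "$1"
}

# Forward local port 3000 to port 3000 of the ClusterIP Service.
# This blocks until we stop it with Ctrl+C.
forward_frontend() {
  kubectl port-forward svc/ocean-web-service 3000:3000
}
```

With these loaded, `apply_manifest frontend.yaml` creates the Deployment and Service, and `forward_frontend` should make the app reachable at http://localhost:3000 for as long as the command keeps running.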

But when we try to reach the React app through the minikube node's IP, we will not be able to access it, because we're not using NodePort.

NodePort forwards external traffic arriving on a node's port to the Service. In the case of ClusterIP, the Service is accessible only from within the cluster.

So, we'll be using NodePort, so that we can access the app through the minikube node.

Change in code:

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: ocean-web-service
spec:
  selector:
    app: ocean-web
  ports:
    - protocol: TCP
      port: 3000
      targetPort: 3000
      nodePort: 30007 # we're just adding these two lines
  type: NodePort # This one too.
```
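One thing to keep in mind when picking a nodePort: by default, Kubernetes only accepts values from 30000 to 32767 (the range can be changed by the cluster administrator). A small helper like the one below, which is our own illustration and not a Kubernetes tool, can sanity-check a value before we put it in the manifest.

```shell
# Succeeds (exit status 0) if the argument is a number inside the
# default NodePort range 30000-32767, fails otherwise.
is_default_node_port() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```

For example, `is_default_node_port 30007 && echo ok` prints ok, while the same check for 3000 prints nothing, which is why we cannot simply reuse port 3000 as the nodePort.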

Let's try.

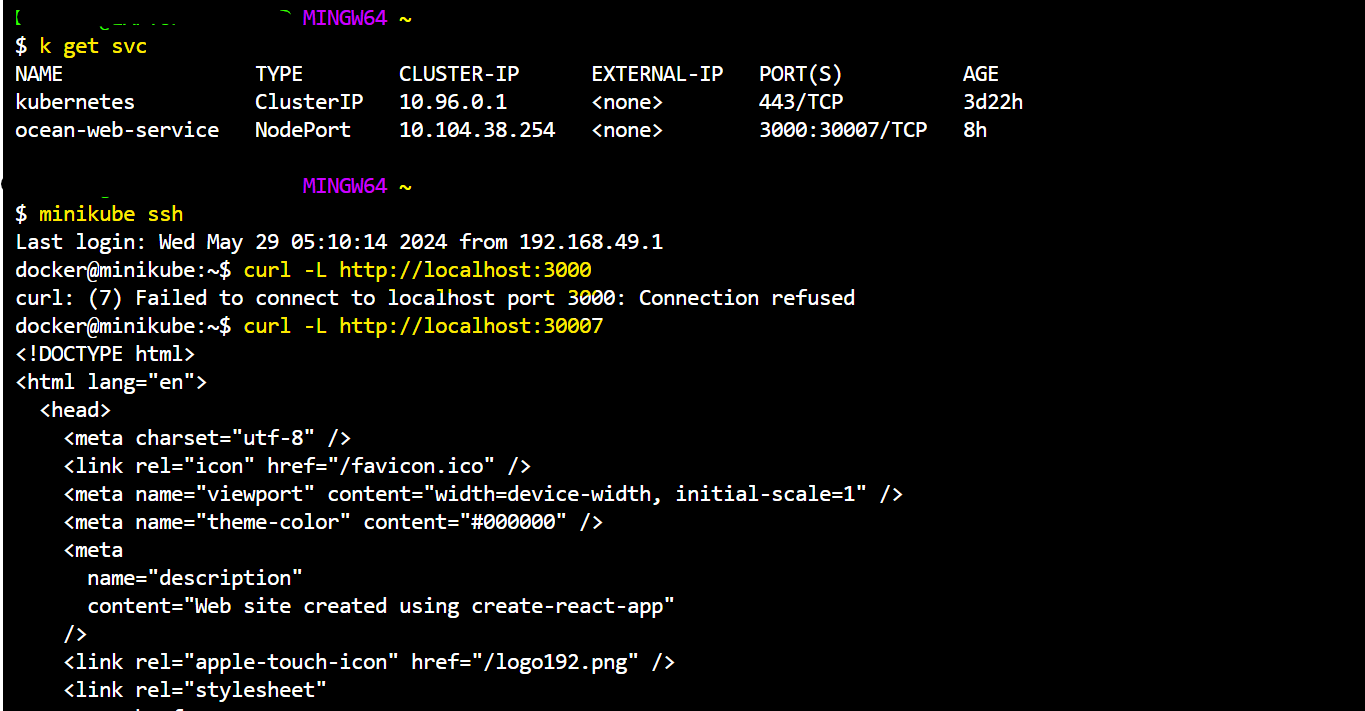

As you can see, when we try to access the React web app on port 3000 of the node, it fails to connect.

But when we connect on port 30007, we get a response. That's the use case of NodePort.
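To reproduce this from the terminal, here is a small sketch. It assumes the minikube node IP is reachable from our PC, which depends on the minikube driver in use. node_port_url is a helper name we made up.

```shell
# Build the URL for a given NodePort on the minikube node.
node_port_url() {
  echo "http://$(minikube ip):$1"
}
```

Running `curl -I "$(node_port_url 30007)"` should return a response, while the same request for port 3000 should fail to connect. If the node IP is not reachable from the host (this can happen with the Docker driver on macOS or Windows), `minikube service ocean-web-service --url` can be used instead to get a working URL.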

Checkpoint

So far, we've leveraged Services and made the React app accessible using NodePort.

Now, we will create the deployment files for the Database.

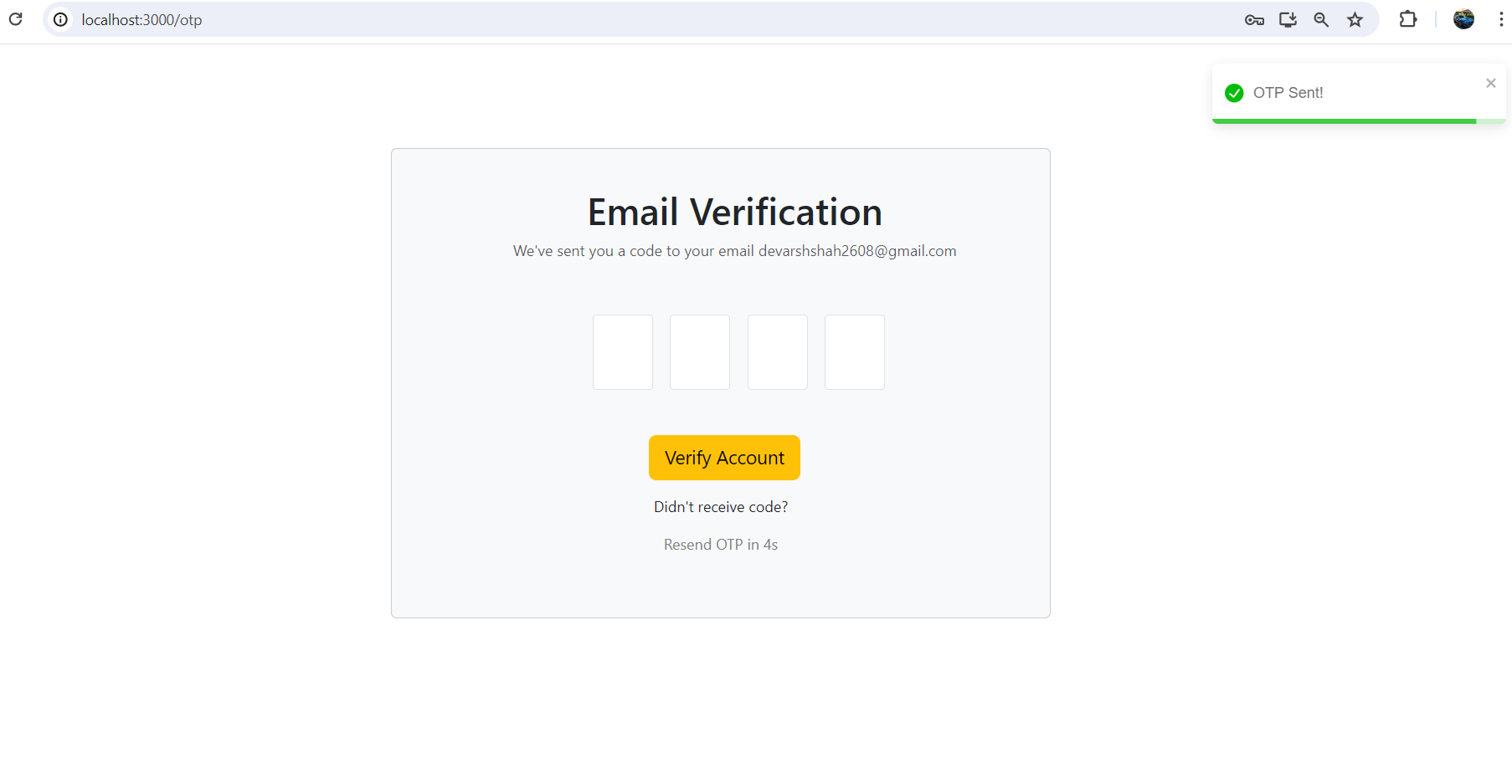

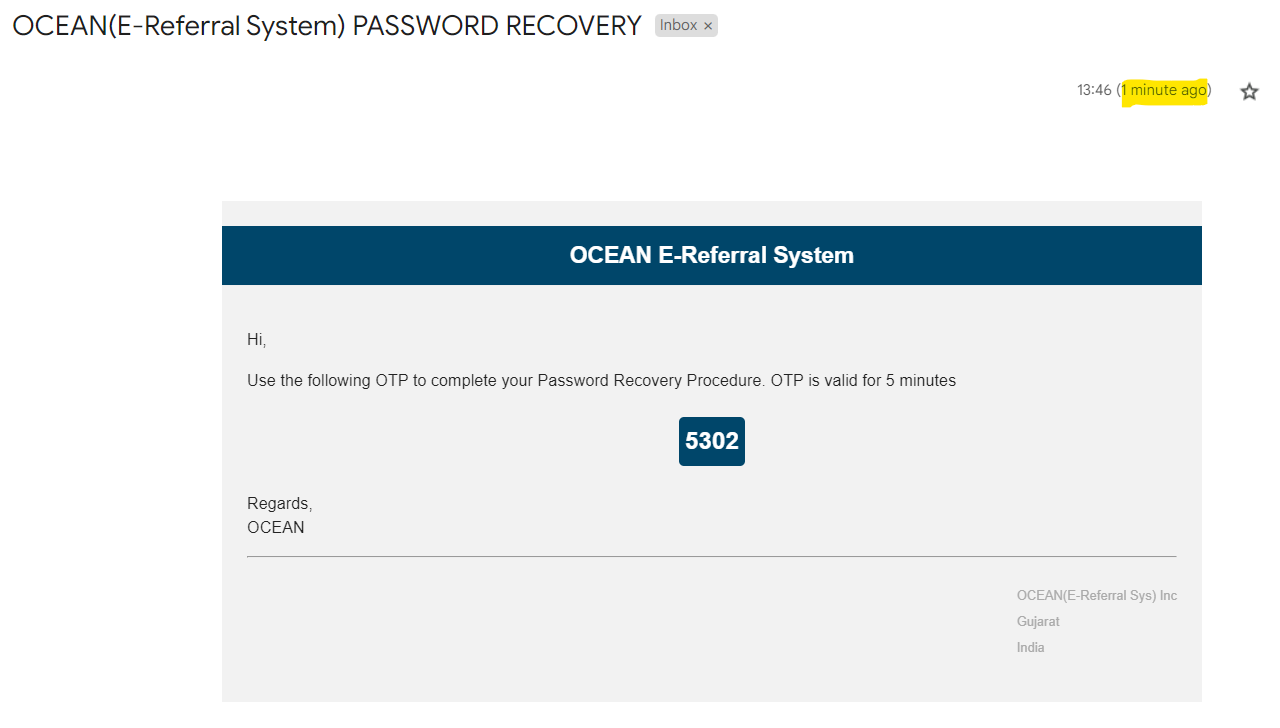

And for the Backend, where Nodemailer is configured, so that we can send the OTP to reset the password.

Let's continue

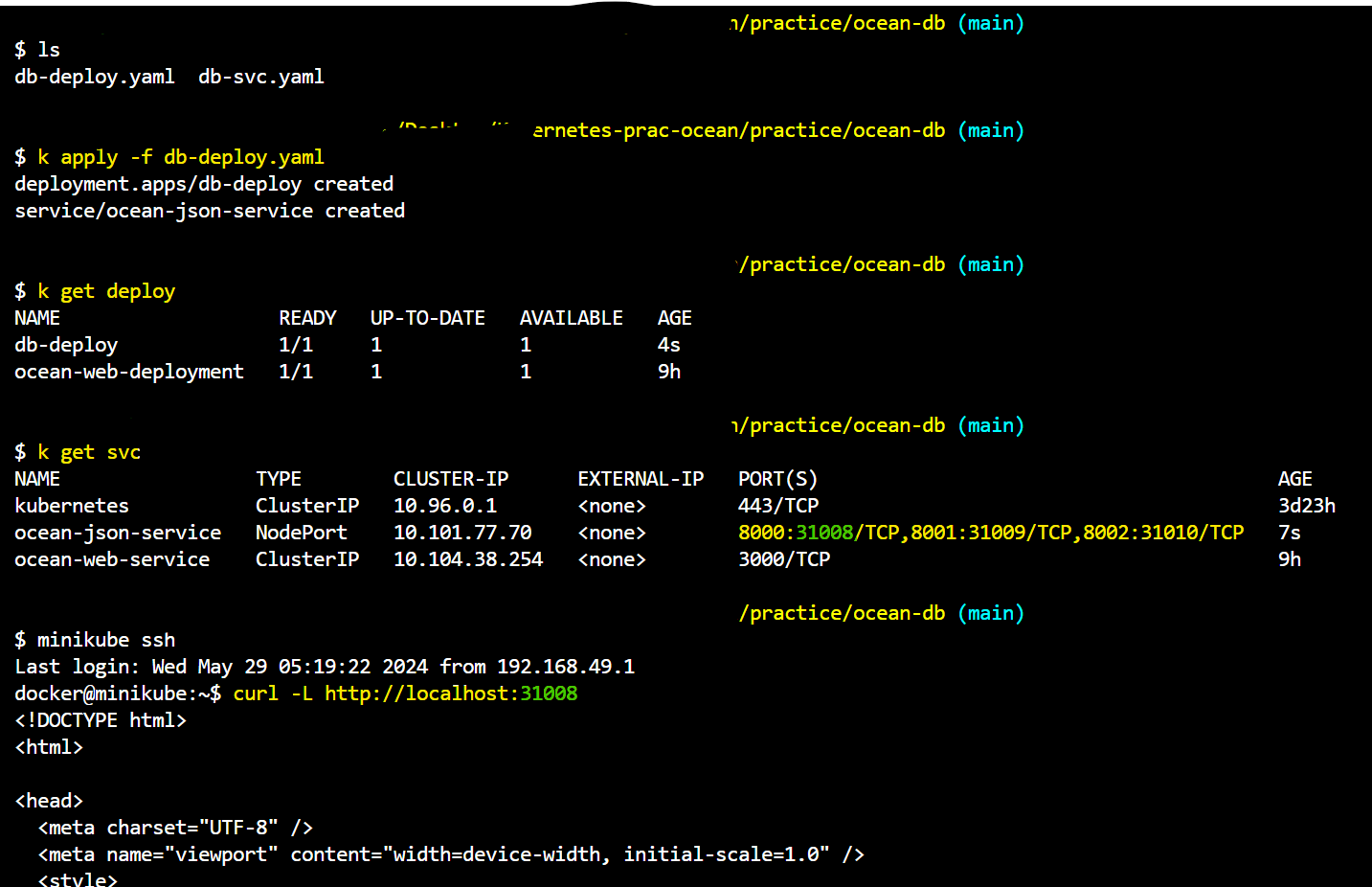

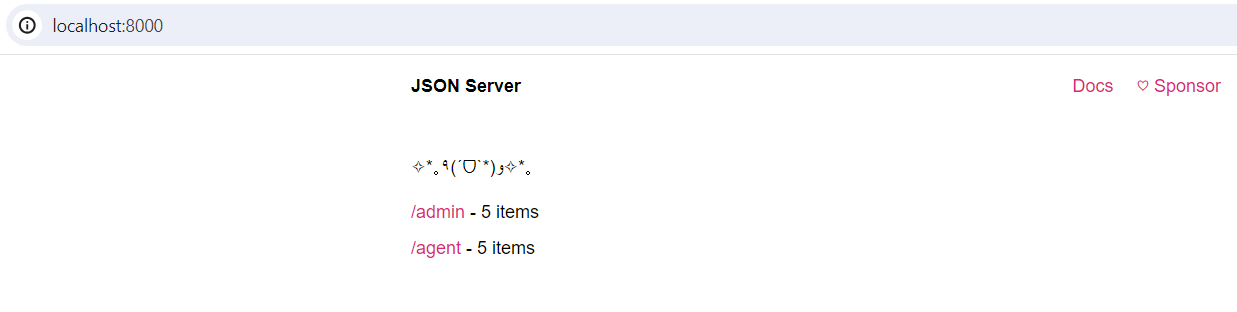

Configuring Database

Here, the challenge is that we have multiple databases running on different ports. So, let's create a Deployment manifest keeping that in mind.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: db-deploy
  name: db-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: db-deploy
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: db-deploy
    spec:
      containers:
        - image: devarsh10/ocean-json:0.1
          name: ocean-json
          ports:
            # one port per json-server instance started in args below
            - containerPort: 8000
            - containerPort: 8001
            - containerPort: 8002
          resources: {}
          command: ["/bin/sh"]
          args: ["-c","json-server --watch db.json --port 8000 --host 0.0.0.0 & json-server --watch db2.json --port 8001 --host 0.0.0.0 & json-server --watch db3.json --port 8002 --host 0.0.0.0"]
status: {}
---
apiVersion: v1
kind: Service
metadata:
  name: ocean-json-service
spec:
  selector:
    app: db-deploy
  ports:
    - name: port-8000
      protocol: TCP
      port: 8000
      targetPort: 8000
      nodePort: 31008
    - name: port-8001
      protocol: TCP
      port: 8001
      targetPort: 8001
      nodePort: 31009
    - name: port-8002
      protocol: TCP
      port: 8002
      targetPort: 8002
      nodePort: 31010
  type: NodePort
```

The first database is now reachable on node port 31008. Similarly, we can access ports 31009 and 31010.
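To check all three node ports in one go, a sketch like the following could help. The node ports come from the Service manifest above; db_urls is our own helper name, and the node IP is passed in as an argument.

```shell
# Print the NodePort URL of each json-server instance for a given node IP.
db_urls() {
  db_node_ip="$1"
  for db_port in 31008 31009 31010; do
    echo "http://${db_node_ip}:${db_port}"
  done
}
```

For instance, `for url in $(db_urls "$(minikube ip)"); do curl -s -o /dev/null -w "%{http_code} $url\n" "$url"; done` should print one status line per database, assuming the node IP is reachable from our PC.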

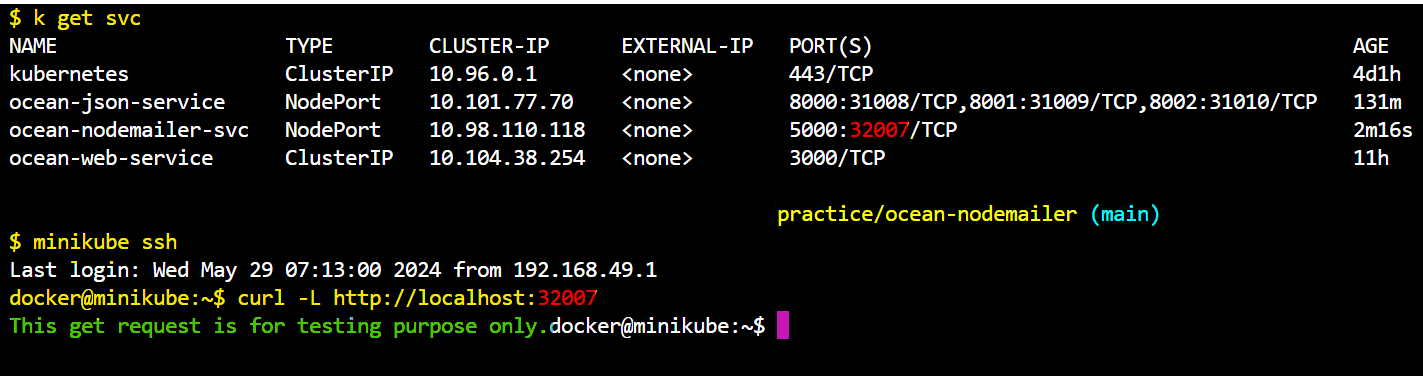

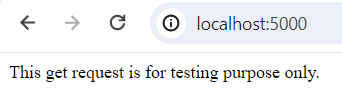

Now, let's configure the Backend, where Nodemailer is set up.
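As a rough sketch of what such a manifest could look like, the snippet below writes one to backend.yaml. Only the Service name ocean-nodemailer-svc and port 5000 are taken from the port-forward script later in this blog. The Deployment name, the label, the file name, and especially the image are placeholders we made up, so replace them with the real values.

```shell
# Write a hypothetical backend manifest to backend.yaml.
# Everything marked "assumed" or "placeholder" is an illustration only.
cat > backend.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ocean-nodemailer-deploy      # assumed name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ocean-nodemailer          # assumed label
  template:
    metadata:
      labels:
        app: ocean-nodemailer
    spec:
      containers:
        - name: ocean-nodemailer
          image: your-registry/your-backend-image:tag   # placeholder image
          ports:
            - containerPort: 5000
---
apiVersion: v1
kind: Service
metadata:
  name: ocean-nodemailer-svc         # name used by the port-forward script
spec:
  selector:
    app: ocean-nodemailer
  ports:
    - protocol: TCP
      port: 5000
      targetPort: 5000
EOF

# Once the placeholders are filled in, apply it with:
#   kubectl apply -f backend.yaml
```

The Service here is left as the default ClusterIP type, which is enough because we reach the backend through port-forward.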

Checkpoint

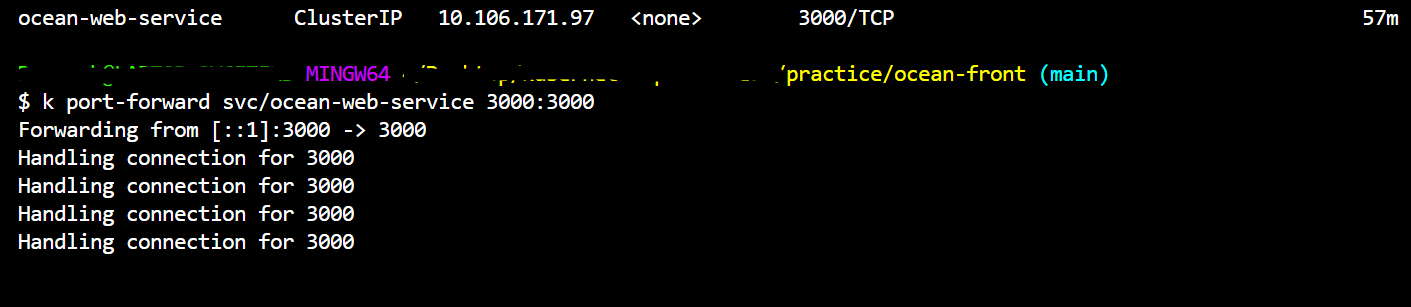

All three tiers are now configured. It's time to access them from our PC.

This is possible using port-forward. As of now, we're using neither a LoadBalancer nor Ingress, so we have to use port-forward.

We will surely write a blog on Ingress and Load Balancer on Cloud (most probably AWS).

Port Forwarding

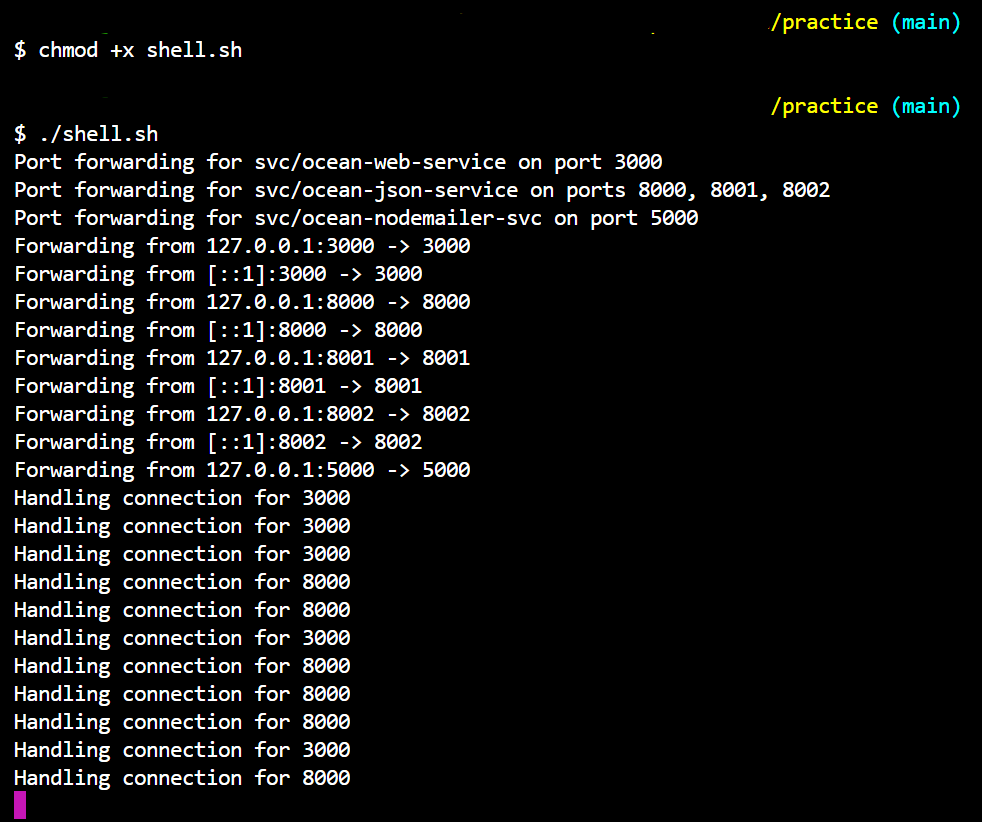

I have written a shell script so that we do not have to write the same command multiple times.

```shell
#!/bin/bash

# Port forward for ocean-web-service
kubectl port-forward svc/ocean-web-service 3000:3000 &
echo "Port forwarding for svc/ocean-web-service on port 3000"

# Port forward for ocean-json-service on multiple ports
kubectl port-forward svc/ocean-json-service 8000:8000 8001:8001 8002:8002 &
echo "Port forwarding for svc/ocean-json-service on ports 8000, 8001, 8002"

# Port forward for ocean-nodemailer-svc
kubectl port-forward svc/ocean-nodemailer-svc 5000:5000 &
echo "Port forwarding for svc/ocean-nodemailer-svc on port 5000"

# Wait for all background processes to finish
wait
```

To execute it, first give it execute permission using the command `chmod +x shell.sh`, and then run it using `./shell.sh`.

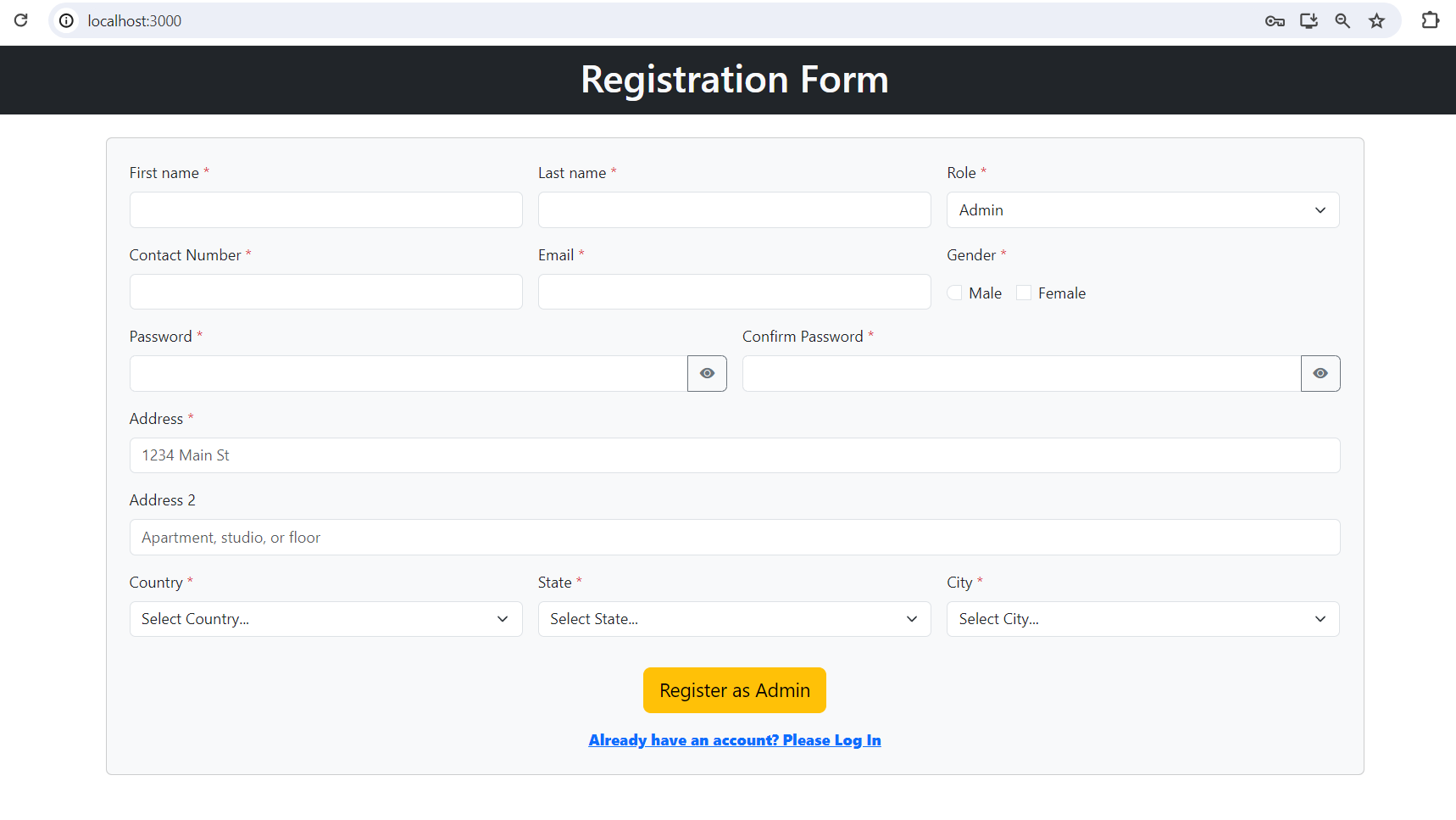

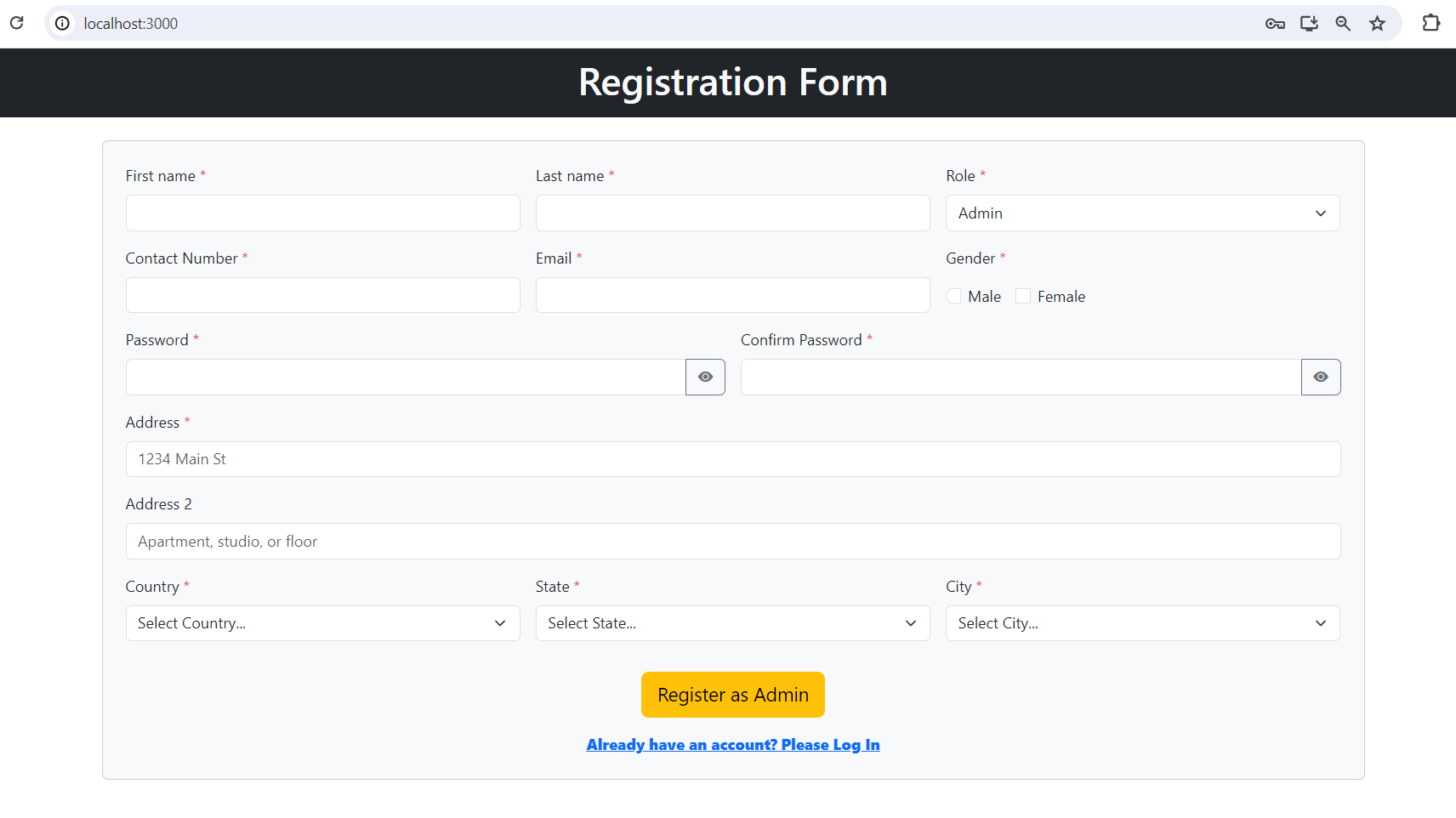

Moment of Truth (Execution)

- http://localhost:3000

- http://localhost:8000

- http://localhost:5000
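Besides opening these in the browser, we can do a quick check from the terminal. The sketch below assumes the port-forward script is still running and that curl is installed. http_status and check_forwards are helper names of our own.

```shell
# Print the HTTP status code for a URL (curl reports 000 if it cannot connect).
http_status() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# Check the three forwarded endpoints and print one line per URL.
check_forwards() {
  for fwd_url in http://localhost:3000 http://localhost:8000 http://localhost:5000; do
    echo "$fwd_url -> $(http_status "$fwd_url")"
  done
}
```

Calling `check_forwards` prints a status code next to each URL. Any code other than 000 means something answered on that port, even if the path itself returns an error such as 404.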

Let's try resetting the password and see if the OTP is getting sent or not.

- Yes, OTP was sent.

- Let's check the mail

Success.

Terminal

Outro

So, that's it for the day everyone.

Now, you have hands-on experience deploying a complex three-tier application on Kubernetes.

Next, we will be using a Load Balancer and Ingress so that we can access our application from anywhere. Stay tuned. Happy coding.

