Log Parsing Tools Compared: Choosing Between Grep, Awk, and Sed

Prakhar tripathi

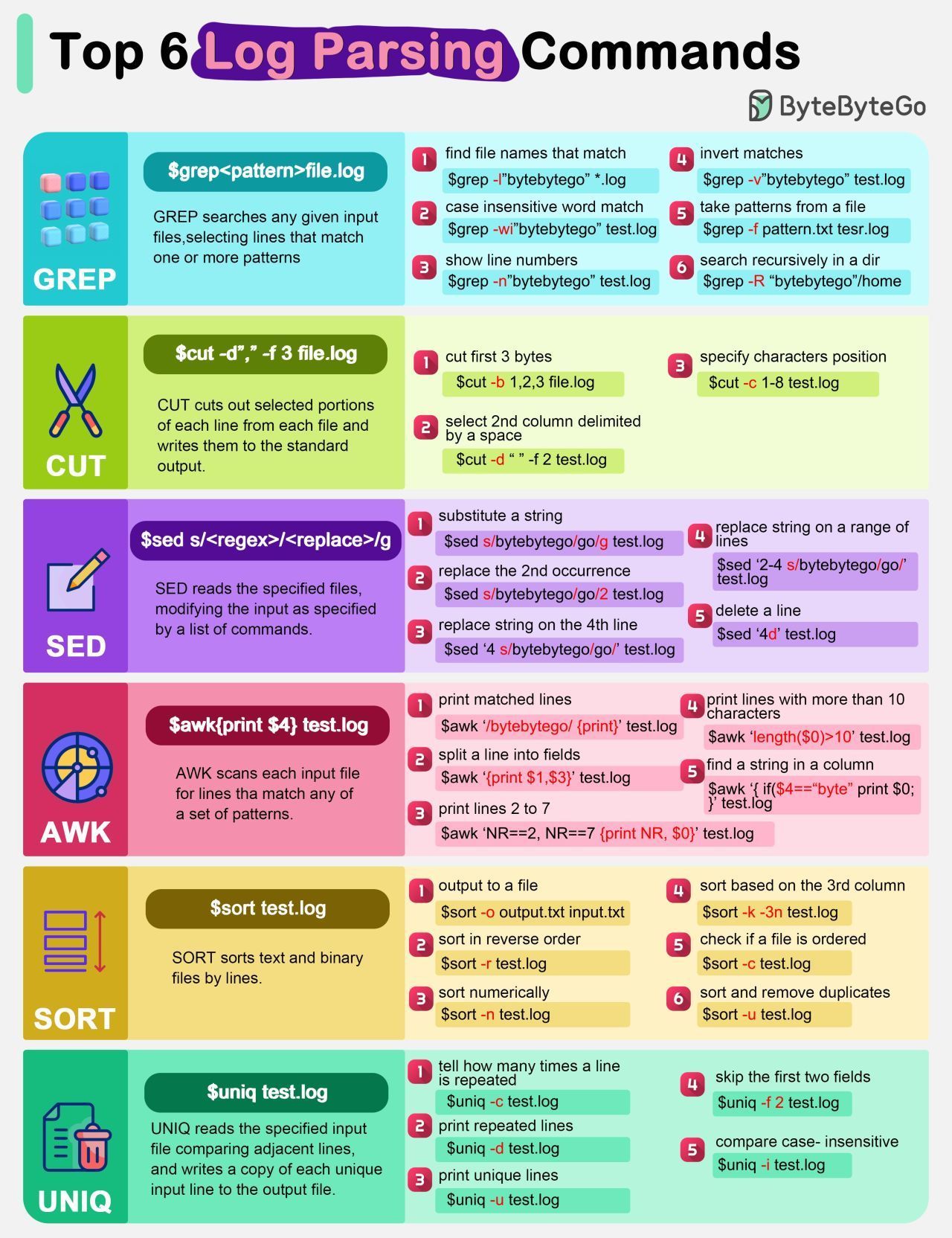

Log parsing is an essential task in system administration, monitoring, and data analysis. It helps in identifying issues, understanding system behavior, and gaining insights from log files.

In this blog, we will explore the popular log parsing commands - grep, awk, and sed, and discuss how to choose the right tool based on your specific requirements.

Types of Commands

Grep

grep (named after the ed command g/re/p, "globally search for a regular expression and print") is a search utility that finds lines matching a pattern in text files.

Awk

awk is a versatile programming language designed for text processing and data extraction.

Sed

sed (Stream Editor) is used for parsing and transforming text streams.

Key Considerations for Choosing the Right Log Parsing Tool:

Size of the Dataset: Large datasets require efficient tools to minimize processing time and resource usage.

Complexity of Parsing: Choose a tool that matches the complexity of the parsing and extraction tasks.

Infrastructure Available: Ensure the tool can run efficiently on your available infrastructure and within any resource constraints.

Performance Metrics: Evaluate execution time, CPU usage, memory usage, I/O performance, and scalability.

When to Use Grep, Awk, and Sed

Grep

Best For: Simple text searches and filtering lines based on patterns.

Performance: Typically the fastest of the three for simple searches, with low CPU and memory usage.

Awk

Best For: Complex pattern matching, data extraction, text manipulation, and calculations.

Performance: Efficient for advanced text processing but slightly slower than grep for basic tasks.

Sed

Best For: Stream editing and simple text transformations.

Performance: Similar to grep for simple tasks, but less intuitive for complex parsing compared to awk.

Usage of Grep, Awk, and Sed Commands

Grep

# Find all lines containing "ERROR"

grep "ERROR" application.log
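A few common variations are sketched below. The sample log is created on the fly and its contents are invented for illustration; only the standard grep options -c, -i, -v, and -n are used.

```shell
# Build a small sample log (contents are illustrative assumptions, not real output)
workdir=$(mktemp -d)
log="$workdir/application.log"
cat > "$log" <<'EOF'
2023-03-12 14:21:14 INFO Service started
2023-03-12 14:22:01 ERROR Database connection failed
2023-03-12 14:22:05 error retrying connection
2023-03-12 14:23:08 ERROR Timeout while reading response
EOF

# Count matching lines instead of printing them
error_count=$(grep -c "ERROR" "$log")

# Case-insensitive match also catches the lowercase "error" line
any_case_count=$(grep -ci "error" "$log")

# Invert the match: count lines that do NOT contain "ERROR"
non_error_count=$(grep -vc "ERROR" "$log")

# Prefix each matching line with its line number
numbered=$(grep -n "ERROR" "$log")

echo "ERROR lines: $error_count"
echo "error lines (any case): $any_case_count"
echo "non-ERROR lines: $non_error_count"
echo "$numbered"
```

Note that grep is case-sensitive by default, which is why the lowercase "error" line only shows up once -i is added.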

Awk

# Extract and print the second field from lines containing "ERROR"

awk '/ERROR/ {print $2}' application.log
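One subtlety worth seeing in action: /ERROR/ matches anywhere in the line, not just in the log-level column. The sketch below assumes a hypothetical log layout of date, time, level, and message; the sample lines are made up for illustration.

```shell
# Build a small sample log (layout and contents are illustrative assumptions)
workdir=$(mktemp -d)
log="$workdir/application.log"
cat > "$log" <<'EOF'
2023-03-12 14:21:14 INFO Service started
2023-03-12 14:22:01 ERROR Database connection failed
2023-03-12 14:22:30 INFO Handled ERROR page request
2023-03-12 14:23:08 ERROR Timeout while reading response
EOF

# Regex match on the whole line: also picks up the INFO line that mentions "ERROR"
regex_times=$(awk '/ERROR/ {print $2}' "$log")

# Comparing the third field restricts the match to the log-level column
level_times=$(awk '$3 == "ERROR" {print $2}' "$log")

echo "regex match:"
echo "$regex_times"
echo "field match:"
echo "$level_times"
```

When the log has a fixed column for the level, the field comparison is usually the safer filter.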

Sed

# Replace all occurrences of "ERROR" with "WARNING" (the result is printed to stdout; the file itself is not modified)

sed 's/ERROR/WARNING/g' application.log
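Since sed writes its result to standard output, the original file stays untouched unless we redirect the output somewhere. Here is a minimal sketch of that behavior, using a made-up sample log and a hypothetical output file name (relabelled.log). In-place editing flags differ between sed implementations, so this sketch sticks to redirection.

```shell
# Build a small sample log (contents are illustrative assumptions)
workdir=$(mktemp -d)
log="$workdir/application.log"
cat > "$log" <<'EOF'
2023-03-12 14:21:14 INFO Service started
2023-03-12 14:22:01 ERROR Database connection failed
2023-03-12 14:23:08 ERROR Timeout while reading response
EOF

# The substitution goes to stdout; redirect it to keep the result
sed 's/ERROR/WARNING/g' "$log" > "$workdir/relabelled.log"

# -n suppresses the default output; the p flag prints only lines where a substitution happened
changed_only=$(sed -n 's/ERROR/WARNING/p' "$log")

# The original file still contains its ERROR lines
remaining_errors=$(grep -c "ERROR" "$log")

echo "$changed_only"
echo "ERROR lines left in original: $remaining_errors"
```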

Practical Example: Using Awk vs Grep

Scenario: Extracting Fields from a Log File

Log File Example:

192.168.1.1 - - [12/Mar/2023:14:21:14 -0700] "GET /index.html HTTP/1.1" 200 2326

192.168.1.2 - - [12/Mar/2023:14:22:01 -0700] "POST /login HTTP/1.1" 403 534

192.168.1.3 - - [12/Mar/2023:14:23:08 -0700] "GET /images/logo.png HTTP/1.1" 200 456

Objective: Extract the IP address and the status code from each log entry.

Using Grep

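# Grep can only select whole lines; extracting fields needs an additional tool such as cut or awk.
# The sample log has no "ERROR" lines, so we match on "HTTP/" to select every request line.

grep "HTTP/" access.log | awk '{print $1, $9}'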

Using Awk

# Extract and print the first (IP address) and ninth (status code) fields

awk '{print $1, $9}' access.log
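The same field positions also let awk do the calculations mentioned earlier. The sketch below recreates the three sample lines from this post in a temporary access.log, then counts requests per status code, sums the response sizes (assumed here to be the tenth field), and lists the IPs of failed requests.

```shell
# Recreate the sample access log from this post in a temporary directory
workdir=$(mktemp -d)
log="$workdir/access.log"
cat > "$log" <<'EOF'
192.168.1.1 - - [12/Mar/2023:14:21:14 -0700] "GET /index.html HTTP/1.1" 200 2326
192.168.1.2 - - [12/Mar/2023:14:22:01 -0700] "POST /login HTTP/1.1" 403 534
192.168.1.3 - - [12/Mar/2023:14:23:08 -0700] "GET /images/logo.png HTTP/1.1" 200 456
EOF

# Count requests per status code; sort makes the output order deterministic
status_counts=$(awk '{count[$9]++} END {for (code in count) print code, count[code]}' "$log" | sort)

# Sum the response size (tenth field) across all requests
total_bytes=$(awk '{sum += $10} END {print sum}' "$log")

# Print the IPs of requests with a status code of 400 or above
failed_ips=$(awk '$9 >= 400 {print $1}' "$log")

echo "$status_counts"
echo "total bytes: $total_bytes"
echo "failed requests from: $failed_ips"
```

Doing this with grep alone would need several extra pipeline stages, which is where awk starts to pay off.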

Conclusion

Choosing the right log parsing tool depends on your specific needs and the complexity of the tasks at hand. grep is ideal for simple searches, awk excels in complex data extraction and manipulation, and sed is powerful for stream editing and transformations.

Additionally, tools like Logstash, Splunk, and Elasticsearch offer high scalability and performance for handling large datasets (tens of gigabytes to terabytes). However, organizations need to account for the associated infrastructure and resource requirements as part of their deployment strategy.

Happy log parsing!

Credits: Bytebytego
