Deploying your favourite IPL Team's Applications as Microservices with Azure Kubernetes Service (AKS)

ferozekhan

What is Ingress?

Ingress in Kubernetes is an API object that manages external access to the applications and services in a cluster, typically over HTTP. In most implementations, Ingress is used for load balancing and name-based virtual hosting.

Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined in the Ingress resource.

Here is a simple example where an Ingress sends all its traffic to one Service.
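As a minimal sketch of that single-Service case, the snippet below prints an Ingress manifest whose default backend is one Service. The names `example-ingress` and `example-service` and the port number are hypothetical placeholders, and `ingressClassName: nginx` assumes an NGINX-based controller like the one used later in this article.

```shell
# Print a minimal Ingress manifest that sends all traffic to a single Service.
# "example-ingress" and "example-service" are hypothetical names for illustration.
single_service_ingress() {
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: example-service
      port:
        number: 80
EOF
}

single_service_ingress
```

Once a cluster and an Ingress controller are available, the output could be piped straight into `kubectl apply -f -`.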

An Ingress may be configured to give Services externally-reachable URLs, load balance traffic, terminate SSL / TLS, and offer name-based virtual hosting. An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure your edge router or additional frontends to help handle the traffic.

An Ingress does not expose arbitrary ports or protocols. Exposing services other than HTTP and HTTPS to the internet typically uses a service of type Service.Type=NodePort or Service.Type=LoadBalancer.

ClusterIP vs Ingress vs NodePort vs LoadBalancer

Kubernetes provides several ways to manage communication between the different parts of your application and the outside world. ClusterIP, NodePort, and LoadBalancer are Service types, while Ingress is a separate API object that routes external HTTP and HTTPS traffic to Services. Here's a simplified explanation of each:

ClusterIP: It provides an internal IP address that can be used by other services within the same Kubernetes cluster to communicate with each other.

Use Case: Use ClusterIP for communication between different components of your application that are all inside the Kubernetes cluster.

NodePort: NodePort exposes a service on a static port on each worker node. This allows external access to the service from outside the cluster.

Use Case: Use NodePort when you need to access a service externally, but keep in mind that it exposes your service on every node's IP address.

LoadBalancer: LoadBalancer automatically provisions an external load balancer (if supported by your cloud provider) and assigns a unique external IP. It directs traffic to your service from outside the cluster.

Use Case: Use LoadBalancer when you want to expose your service to the internet and need automatic load balancing.

Ingress: An Ingress resource defines the routing rules for external traffic, and an Ingress controller enforces them by acting as a reverse proxy and load balancer inside the Kubernetes cluster. Without an Ingress controller, Ingress resources have no effect. A Kubernetes cluster does not run an Ingress controller automatically, so you need to deploy one yourself.

Use Case: We will be using ingress-nginx, the NGINX-based Ingress controller from the Kubernetes project, for this article. The Ingress controller reads the Ingress resource, which holds the desired routing rules, and forwards user requests to your microservices through the Services deployed in the cluster.
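To make the three Service types concrete, here is a small sketch that prints a Service manifest for whichever type you ask for. The function name `service_manifest`, the `app` label, and the port numbers are assumptions made for illustration; only the `type` field differs between the three variants.

```shell
# Print a Service manifest of the requested type.
# Usage: service_manifest NAME TYPE   (TYPE: ClusterIP, NodePort, or LoadBalancer)
# The app label and the port numbers are hypothetical placeholders.
service_manifest() {
  local name="$1" svc_type="$2"
  case "$svc_type" in
    ClusterIP|NodePort|LoadBalancer) ;;
    *) echo "unsupported Service type: $svc_type" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: ${svc_type}
  selector:
    app: ${name}
  ports:
    - port: 80
      targetPort: 8080
EOF
}

service_manifest demo-app NodePort
```

Ingress is deliberately rejected here because it is not a Service type; it is a separate resource that sits in front of Services.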

Deployment Steps

Prerequisites

Azure subscription: If you don't have an Azure subscription, create a free account before you begin.

Sample application code can be found in my GitHub repository: https://github.com/mfkhan267/ingress2024

Optionally, you have already created your Docker image and pushed it to the Docker Hub registry. Otherwise, you may use the sample code and deployment manifest files as is.

Deploy your Kubernetes Cluster in Azure Kubernetes Service (AKS)

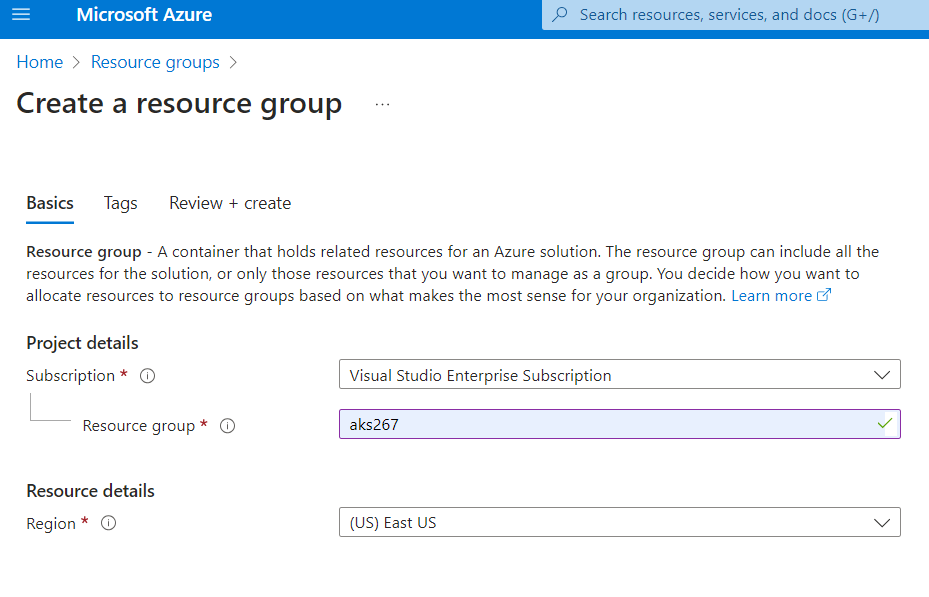

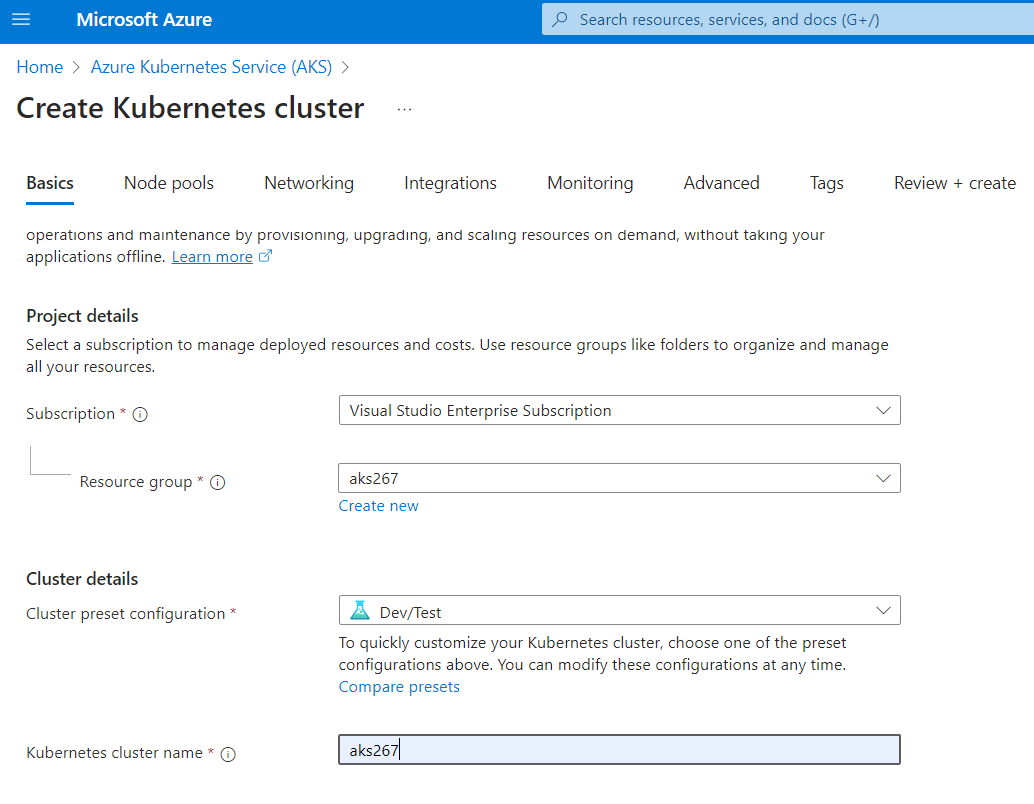

Let us log in to the Azure Portal >> Search >> Azure Kubernetes Services >> Create Kubernetes Cluster with the configuration shown below

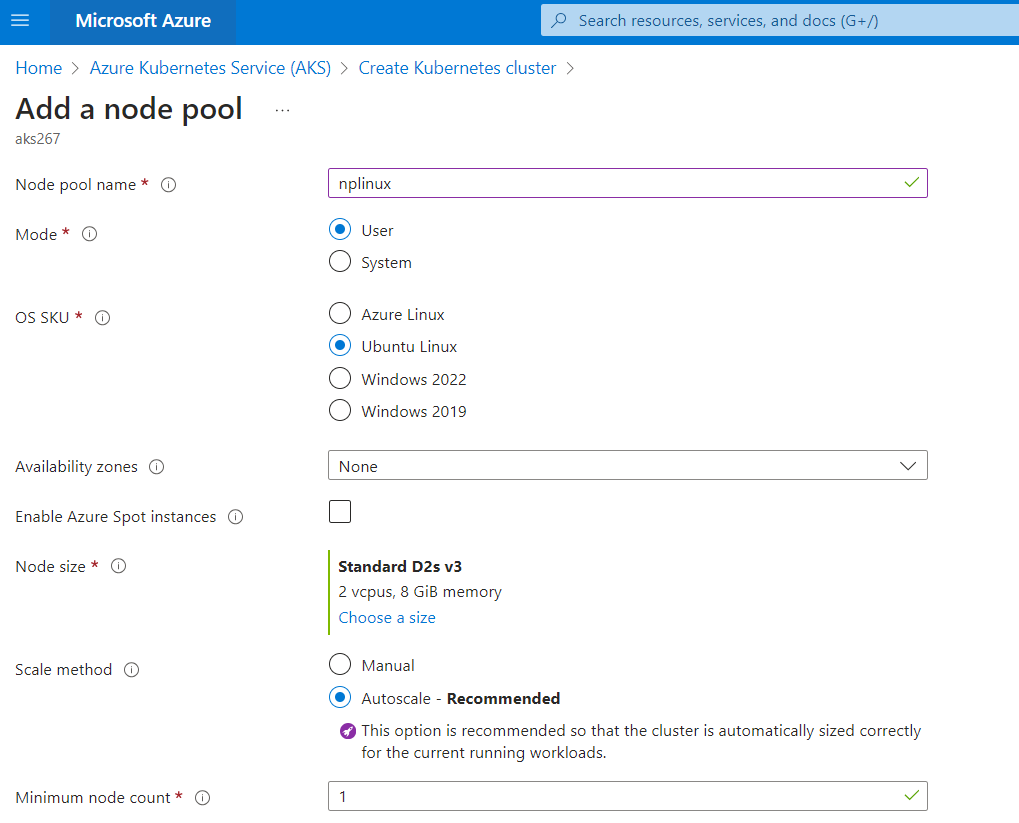

Click Add node pool >> Give the pool a name (nplinux) with User Mode and Node Size as D2s_v3 >> Add >> Review and Create

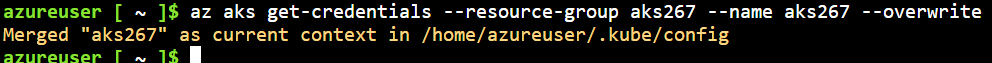

Connecting to the AKS Cluster

Let us now launch the Azure Cloud Shell. Cloud Shell has kubectl pre-installed.

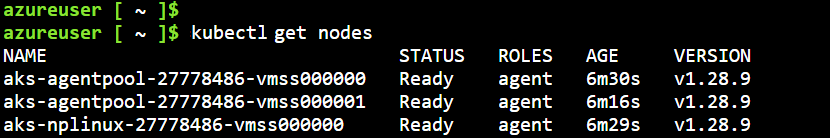

az aks get-credentials --resource-group aks267 --name aks267 --overwrite-existing

This command downloads the AKS credentials and configures the Kubernetes CLI to use them when communicating with the AKS cluster. To verify our connection to the cluster, let us run the following command:

kubectl get nodes

1 Deploying your Ingress Controller

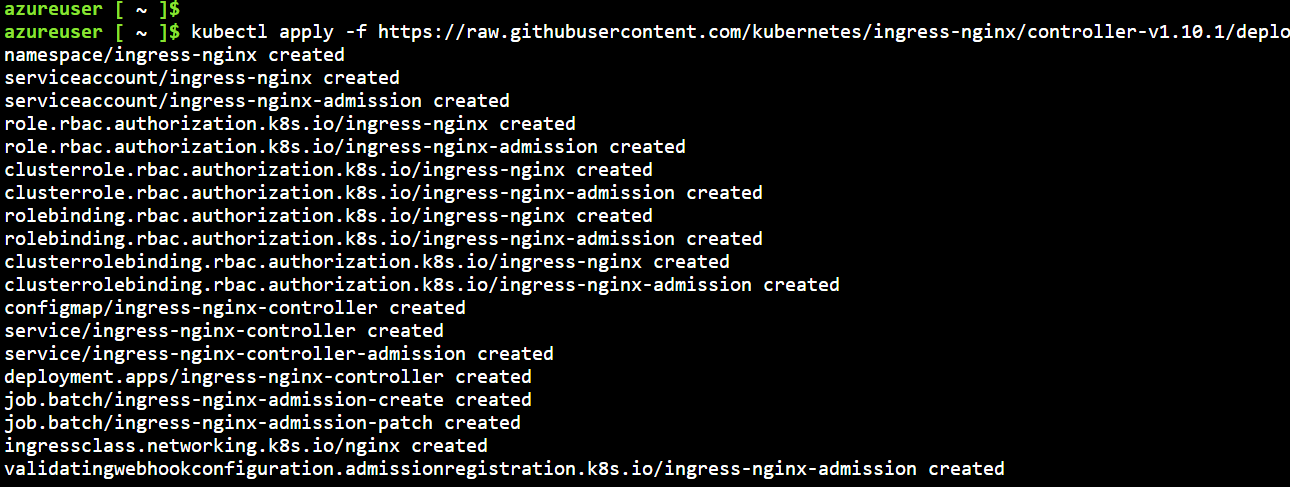

Let us first deploy an Ingress controller, because an Ingress resource has no effect until a controller is running to fulfil it.

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.10.1/deploy/static/provider/cloud/deploy.yaml

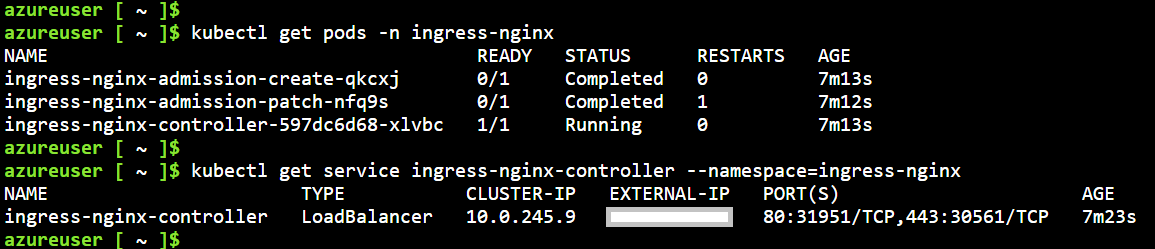
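The controller takes a little while to start. One way to pause until it is ready is the `kubectl wait` call below, wrapped in a small helper. The helper name `wait_for_ingress_controller` and the default timeout are assumptions; the label selector is the one the ingress-nginx project uses for its controller pods.

```shell
# Block until the ingress-nginx controller pod reports Ready, or give up
# after the timeout (default 120s). Requires kubectl access to the cluster.
wait_for_ingress_controller() {
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout="${1:-120s}"
}

# Usage against a live cluster:
#   wait_for_ingress_controller 180s
```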

Let us check the Ingress controller Service and the pods backing it

kubectl get pods --namespace=ingress-nginx

kubectl get service ingress-nginx-controller --namespace=ingress-nginx

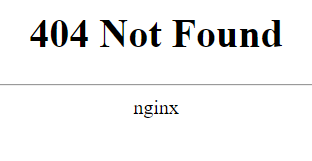

Browsing to the external IP address of this load balancer shows an NGINX 404 Not Found page. This is because we have not set up any routing rules for our services yet.
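If you prefer to capture that address in a variable rather than copy it from the table, a sketch along these lines may help. The helper name `ingress_external_ip` is hypothetical; the Service name and namespace are the ones used in the commands above.

```shell
# Print the external IP of the ingress-nginx controller Service.
# Prints nothing while the cloud load balancer is still being provisioned.
ingress_external_ip() {
  kubectl get service ingress-nginx-controller \
    --namespace=ingress-nginx \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage against a live cluster:
#   EXTERNAL_IP="$(ingress_external_ip)"
#   curl --silent --output /dev/null --write-out '%{http_code}\n' "http://$EXTERNAL_IP/"
```

At this stage the `curl` call should report 404, matching what the browser shows.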

2 Deploy your GoLang Applications to the AKS cluster

Let us now deploy our three GoLang web applications and route traffic between them using NGINX ingress. We will use the GitHub repository at https://github.com/mfkhan267/ingress2024.

To deploy the applications, we use the respective manifest files to create all the objects required to run the GoLang web applications on AKS. A Kubernetes manifest file defines a cluster's desired state, such as which container images to run. The manifests define the Kubernetes Deployments and Services for the applications.
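The real manifests live in the repository and remain the authoritative version. Purely as an illustration of what a Deployment plus Service pair for one team might look like, here is a hedged sketch. Every name in it (`team_manifest`, the `-deployment` and `-service` suffixes, the image reference, the replica count, and port 8080) is an assumption, not taken from the repository.

```shell
# Print a Deployment plus a ClusterIP Service for one team application.
# Usage: team_manifest TEAM IMAGE
# Resource names, labels, replica count, and port 8080 are assumptions.
team_manifest() {
  local team="$1" image="$2"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${team}-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ${team}
  template:
    metadata:
      labels:
        app: ${team}
    spec:
      containers:
        - name: ${team}
          image: ${image}
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: ${team}-service
spec:
  type: ClusterIP
  selector:
    app: ${team}
  ports:
    - port: 80
      targetPort: 8080
EOF
}

# The image reference below is a placeholder, not a published image.
team_manifest kkr example/kkr-app:latest
```

A ClusterIP Service is enough in this sketch because external traffic is meant to arrive through the Ingress controller rather than directly at each application.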

In the Cloud Shell, let us download the sample repository files

git clone https://github.com/mfkhan267/ingress2024.git

cd ingress2024/k8s

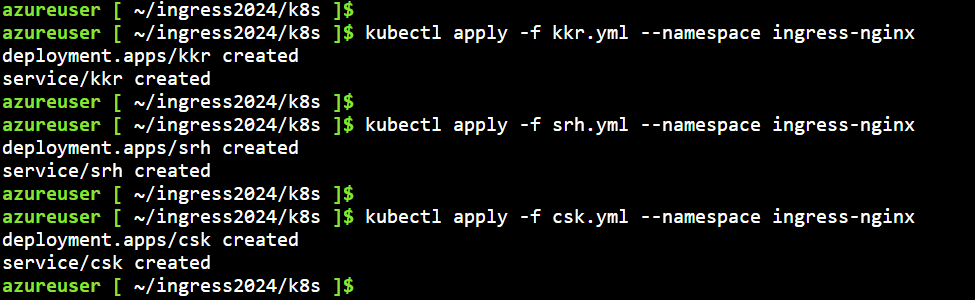

kubectl apply -f kkr.yml --namespace ingress-nginx

kubectl apply -f srh.yml --namespace ingress-nginx

kubectl apply -f csk.yml --namespace ingress-nginx

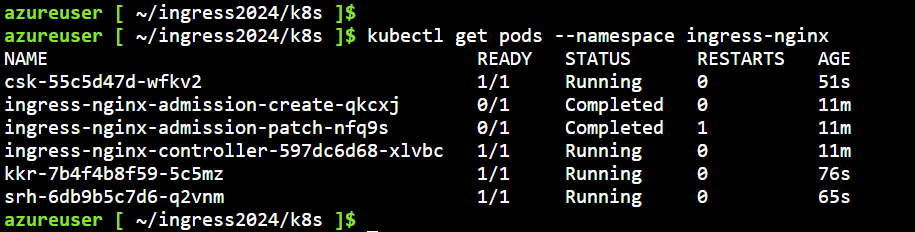

Check for new pods running your THREE GoLang web applications
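Running `kubectl get pods --namespace ingress-nginx` lists the pods. To spot problems quickly, a small filter such as the one below can print only the pods that are not healthy. The helper name `pods_not_ready` and the sample pod names are illustrative assumptions, not output from a real run.

```shell
# Print the names of pods that are neither Running nor Completed.
# Reads `kubectl get pods --no-headers` output on stdin (NAME READY STATUS ...).
# Completed is allowed because the ingress-nginx admission Jobs finish and stay listed.
pods_not_ready() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Example with hand-written sample lines (pod names are illustrative):
printf '%s\n' \
  'csk-deployment-abc                   1/1   Running            0   2m' \
  'kkr-deployment-def                   0/1   ImagePullBackOff   0   2m' \
  'ingress-nginx-admission-create-xyz   0/1   Completed          0   9m' \
  | pods_not_ready

# Against a live cluster:
#   kubectl get pods --namespace ingress-nginx --no-headers | pods_not_ready
```

An empty result means every pod in the namespace is either running or has completed its job.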

3 Deploy your Ingress Resource Configuration

The Ingress Resource Configuration is what allows the nginx controller to route traffic to your microservices applications running in the Kubernetes cluster.

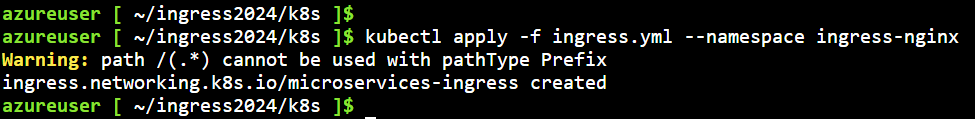

kubectl apply -f ingress.yml --namespace ingress-nginx
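The repository's `ingress.yml` is the file that is actually applied. As a rough sketch of the kind of routing rules it needs to express (one path per team, with everything else falling through to KKR), the snippet below generates a comparable manifest. The Ingress name `ipl-ingress`, the `-service` names, and port 80 are hypothetical and may differ from the repository.

```shell
# Print an Ingress manifest with one path per team and a catch-all to KKR.
# The Ingress name, Service names, and port number are hypothetical.
ingress_manifest() {
  local route path team
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ipl-ingress
spec:
  ingressClassName: nginx
  rules:
    - http:
        paths:
EOF
  # Each entry is PATH:TEAM; the final "/" entry is the default route.
  for route in /kkr:kkr /srh:srh /csk:csk /:kkr; do
    path="${route%%:*}"
    team="${route##*:}"
    cat <<EOF
          - path: ${path}
            pathType: Prefix
            backend:
              service:
                name: ${team}-service
                port:
                  number: 80
EOF
  done
}

ingress_manifest
```

With `pathType: Prefix`, the `/` entry matches any request that no longer prefix claims, which is one way to get the default-to-KKR behaviour described in the testing step.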

4 Testing your Web Applications that should now be running as part of your Kubernetes Deployment

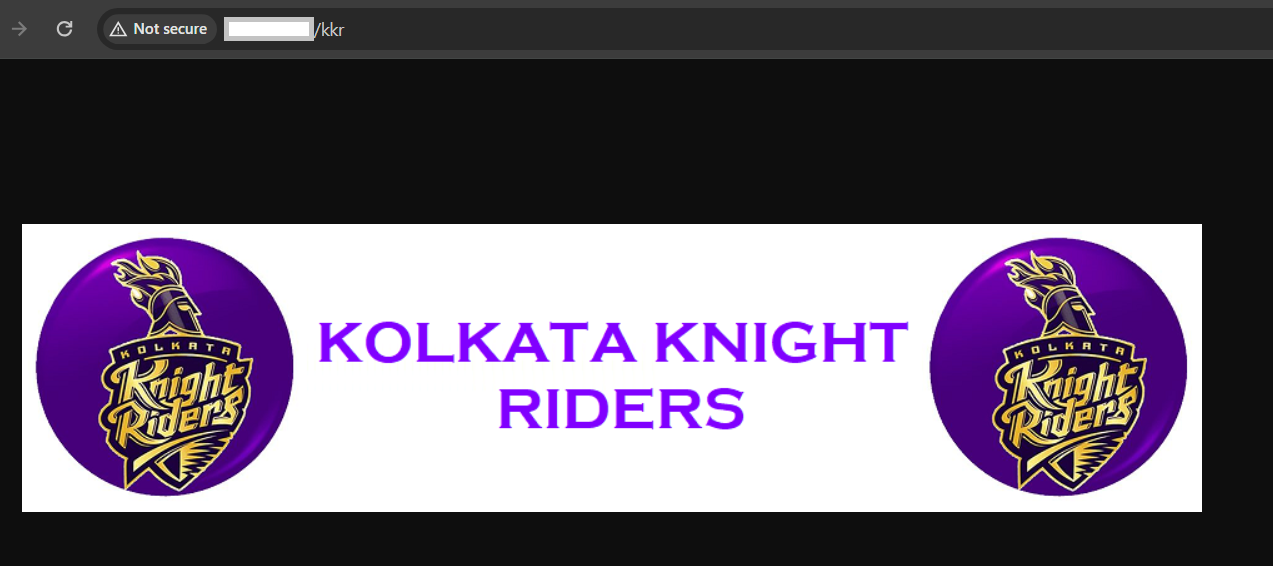

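To access the KKR Team Application, let us browse to http://EXTERNAL_IP/kkr, where EXTERNAL_IP is the external IP address of the ingress-nginx-controller service shown earlier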

When you open the EXTERNAL_IP/kkr you should see the screen below:

To access the SRH Team Application, let us browse to the EXTERNAL_IP/srh

When you open the EXTERNAL_IP/srh you should see the screen below:

To access the CSK Team Application, let us browse to the EXTERNAL_IP/csk

When you open the EXTERNAL_IP/csk you should see the screen below:

For all the other routes like EXTERNAL_IP/xyz or EXTERNAL_IP/c123, the NGINX Controller should route traffic to the KKR Application by default.
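The same checks can be run from the Cloud Shell instead of a browser. The sketch below prints the HTTP status code for each route, including one unknown path to exercise the default route. The helper name `check_routes` is hypothetical.

```shell
# Print the HTTP status code returned for each route behind the Ingress.
# Usage: check_routes http://EXTERNAL_IP
check_routes() {
  local base_url="$1" path code
  for path in /kkr /srh /csk /xyz; do
    code="$(curl --silent --output /dev/null --write-out '%{http_code}' "${base_url}${path}")"
    printf '%s %s\n' "$path" "$code"
  done
}

# Usage against a live cluster, with EXTERNAL_IP set to the load balancer address:
#   check_routes "http://$EXTERNAL_IP"
```

A status code alone does not show which application answered, so for `/xyz` it is still worth opening the page once to confirm that the KKR application is served.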

That's all folks. Hope you enjoyed the end-to-end deployment of your containerised web applications, exposed to the outside world using the NGINX-based ingress. You should now be comfortable running your containerised applications inside a Kubernetes cluster on Azure Kubernetes Service.

Kindly share with the community. Until I see you next time. Cheers!
