AWS Cloud Resume Challenge

Santosh Adhikari

1. Introduction

The AWS Cloud Resume Challenge is a practical project where we build and deploy a personal resume website using Amazon Web Services (AWS). It involves using AWS services like S3 for hosting, Lambda for serverless functions, and DynamoDB for data storage. The challenge also includes setting up a CI/CD pipeline and implementing Infrastructure as Code. This project helps us learn and showcase our cloud computing skills, making it a great addition to a professional portfolio.

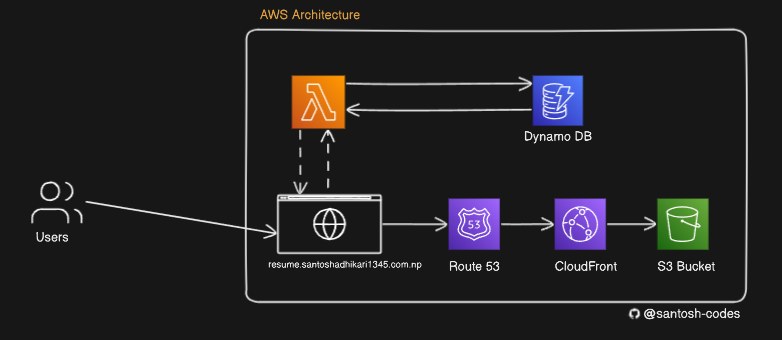

2. Architecture Overview

The architecture of my AWS Cloud Resume Challenge project involves several AWS services working together to deliver a seamless and performant resume website. Below is a diagram that illustrates the overall architecture:

Explanation of the Architecture

Users: Visitors access the resume website through their browsers.

Route 53: Manages DNS settings and directs traffic to the CloudFront distribution.

CloudFront: Acts as a content delivery network (CDN) to serve the website content from the S3 bucket, reducing latency and improving load times.

S3 Bucket: Stores the static website files (HTML, CSS, JavaScript).

Lambda Function: Executes serverless code to track visitor counts, interacting with DynamoDB.

DynamoDB: A NoSQL database that stores the visitor count data.

This architecture ensures high availability, scalability, and security while delivering a responsive and efficient user experience.

3. Services

As mentioned above, this project uses various services for cloud hosting, CI/CD, and Infrastructure as Code (IaC). The detailed list includes:

- AWS Services
  - S3
  - CloudFront
  - AWS Certificate Manager
  - Route 53
  - Lambda
  - DynamoDB
- CI/CD
  - GitHub Actions
- Infrastructure as Code
  - Terraform
- Programming Language
  - Python

4. Setting Up the Project

Creating the Resume

First, the project needs a static website to host. I built one for my portfolio using HTML, CSS, and JavaScript. It is a simple site with some personal information about me, and I used a minimalist design to keep the content clear and easy to read.

5. Static Website Hosting with S3

Creating an S3 Bucket

With my resume ready, the next step was to host it on AWS. I started by creating an S3 bucket in the AWS Management Console. S3 (Simple Storage Service) is ideal for hosting static websites. I named my bucket according to AWS best practices, ensuring it was globally unique. After setting up the bucket and policies, I uploaded my HTML, CSS, and any other necessary files to the S3 bucket.
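The upload step can also be scripted instead of done through the console. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured. The bucket name and the site directory are placeholders, not the names used in this project.

```python
import mimetypes
from pathlib import Path


def plan_uploads(site_dir):
    """Return (local_path, s3_key, content_type) for every file under site_dir."""
    root = Path(site_dir)
    plan = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # The S3 key mirrors the path relative to the site root.
            key = path.relative_to(root).as_posix()
            content_type, _ = mimetypes.guess_type(path.name)
            plan.append((path, key, content_type or "application/octet-stream"))
    return plan


def upload_site(site_dir, bucket, s3_client=None):
    """Upload every planned file to the given bucket (placeholder name)."""
    if s3_client is None:
        import boto3  # imported lazily so plan_uploads works without boto3

        s3_client = boto3.client("s3")
    for path, key, content_type in plan_uploads(site_dir):
        # Setting ContentType lets browsers render HTML and CSS correctly.
        s3_client.upload_file(
            str(path), bucket, key, ExtraArgs={"ContentType": content_type}
        )
```

A call such as `upload_site("site", "example-resume-bucket")` would push the whole directory. Setting the content type explicitly matters because a file served with a generic type may be downloaded rather than displayed.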

Creating a CloudFront Distribution

To make my resume website publicly accessible, I used Amazon CloudFront. CloudFront is a content delivery network (CDN) service that provides a fast and secure way to deliver my website to users around the world. I created a CloudFront distribution and configured it to use my S3 bucket as the origin. This setup allowed CloudFront to make my website public and cache my website content at edge locations globally, reducing latency and improving load times. I tested the URL provided by CloudFront to ensure that my site was accessible and displayed correctly.

6. Storing Data with DynamoDB

Setting Up DynamoDB Table

To add some dynamic functionality, I decided to store the visitor count data for my resume website. I used Amazon DynamoDB, a fully managed NoSQL database service, and created a table configured to store the visitor count, with fields for the Id and the count value.
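A table like this could be defined as follows. This is only a sketch: the table name `visitor-count`, the key name `id`, and on-demand billing are assumptions for illustration, and boto3 is assumed to be installed.

```python
def build_table_definition(table_name="visitor-count"):
    """Build keyword arguments for DynamoDB's create_table call.

    Only the key attribute is declared; the count attribute needs no
    schema entry because DynamoDB is schemaless for non-key attributes.
    """
    return {
        "TableName": table_name,
        "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],
        "BillingMode": "PAY_PER_REQUEST",  # assumed: on-demand capacity
    }


def create_table(definition, dynamodb_client=None):
    """Create the table using a boto3 DynamoDB client."""
    if dynamodb_client is None:
        import boto3  # imported lazily so the definition can be built without it

        dynamodb_client = boto3.client("dynamodb")
    return dynamodb_client.create_table(**definition)
```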

Writing Python Code

I wrote a simple Python function that increments a counter each time the resume page is loaded. The function interacts with DynamoDB to store and retrieve the visitor count.
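The exact script is in the repository. As an illustration, a counter of this kind might look like the sketch below. It assumes a boto3 `Table` resource, a string key named `id`, and a numeric attribute named `count`; these names are hypothetical.

```python
def increment_visitor_count(table, item_id="resume"):
    """Atomically add 1 to the stored count and return the new value.

    `table` is expected to behave like a boto3 DynamoDB Table resource.
    """
    response = table.update_item(
        Key={"id": item_id},
        # "count" is a DynamoDB reserved word, so it is aliased as #c.
        UpdateExpression="ADD #c :one",
        ExpressionAttributeNames={"#c": "count"},
        ExpressionAttributeValues={":one": 1},
        ReturnValues="UPDATED_NEW",
    )
    return int(response["Attributes"]["count"])
```

Using a single `update_item` call with `ADD` avoids a separate read followed by a write, so two visitors arriving at the same moment cannot overwrite each other's increment. `ADD` also creates the attribute on first use, so the item does not need to be seeded.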

7. Adding Serverless Backend with AWS Lambda

Creating Lambda Functions

Lambda allows you to run code without provisioning or managing servers, making it perfect for serverless applications. Since the website does not call the database directly, I used Lambda as the API through which the website reads and updates the value in DynamoDB. I created a Lambda function and used the Python script mentioned above inside it. While creating the function, I enabled the Function URL option, which gave me a public URL that I can use to invoke the Lambda function from anywhere. I integrated the URL into my resume code base, so now whenever the Lambda function is triggered (each time someone visits my resume), it updates the visitor count in the DynamoDB table.
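A handler behind a Function URL returns a dictionary with a status code, headers, and a string body. The sketch below shows that shape. The `make_handler` helper and the `count` field in the JSON body are illustrative assumptions, not the author's code.

```python
import json


def make_handler(increment):
    """Wrap a counter function in a Lambda handler suited to a Function URL.

    `increment` is any zero-argument callable that returns the new count,
    for example a function that updates the DynamoDB item.
    """

    def lambda_handler(event, context):
        count = increment()
        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({"count": count}),
        }

    return lambda_handler
```

Passing the counter in as an argument keeps the handler easy to test without AWS access. For the browser to read the response, cross-origin requests from the site's domain must be allowed, which can be configured on the Function URL itself.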

8. Setting Up a Custom Domain with Route 53 and AWS Certificate Manager

Registering a Domain with Route 53

To make my resume website more professional, I registered a custom domain using Amazon Route 53. I chose the domain www.santoshadhikari1345.com.np and created a subdomain resume.santoshadhikari1345.com.np specifically for the resume site. Route 53 made it easy to manage DNS settings and link my domain to AWS resources.

Obtaining an SSL Certificate with AWS Certificate Manager

To secure my website with HTTPS, I used AWS Certificate Manager (ACM) to obtain an SSL certificate. Since ACM certificates must be generated in the N. Virginia region to be used with CloudFront, I ensured my certificate was created in the correct region. This certificate was then associated with my CloudFront distribution to provide secure access to my resume site.

Configuring Route 53 to Use the CloudFront URL

I updated the DNS settings in Route 53 to point the resume.santoshadhikari1345.com.np subdomain to the CloudFront distribution URL. This involved creating an alias record (A record) in Route 53 that mapped the subdomain to the CloudFront distribution, ensuring that visitors to my custom domain would be served the content from CloudFront.
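An alias record of this kind can be expressed as a Route 53 change batch. The following is a sketch, assuming boto3 would be used to submit it. The CloudFront domain in the usage note is a placeholder, while `Z2FDTNDATAQYW2` is the fixed hosted zone ID that AWS documents for CloudFront alias targets.

```python
# Fixed hosted zone ID used for every CloudFront alias target.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def build_alias_change(record_name, cloudfront_domain):
    """Build a ChangeBatch that points record_name at a CloudFront distribution."""
    return {
        "Changes": [
            {
                "Action": "UPSERT",  # create the record, or update it if it exists
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "AliasTarget": {
                        "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                        "DNSName": cloudfront_domain,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ]
    }
```

The result would be passed as the `ChangeBatch` argument of the Route 53 client's `change_resource_record_sets` call, together with the hosted zone ID of the domain, for example `build_alias_change("resume.santoshadhikari1345.com.np", "d111111abcdef8.cloudfront.net")`.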

9. Implementing CI/CD Pipeline

Setting Up GitHub Actions

For the continuous integration and continuous deployment (CI/CD) pipeline, I chose GitHub Actions instead of AWS CodeBuild. I already had plenty of experience with CodeBuild and wanted to learn something new, and GitHub Actions provided a flexible and integrated way to automate my deployment process directly from my GitHub repository.

Integrating with GitHub

I created a GitHub Actions workflow file in my repository. This workflow was triggered on every push to the main branch, building and deploying my resume website automatically. The workflow included steps to validate my code, upload the files to the S3 bucket, and invalidate the CloudFront cache to ensure the latest version was served to users. Learning GitHub Actions was a rewarding experience, as it broadened my CI/CD toolset and streamlined my deployment process.
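The workflow file itself is not reproduced here. To illustrate the cache invalidation step, the sketch below shows how it could be done from a Python deploy script, assuming boto3 is installed. The distribution ID is a placeholder supplied by the caller, and invalidating every path with `/*` is an assumption.

```python
import time


def build_invalidation(paths=("/*",), caller_reference=None):
    """Build the InvalidationBatch argument for CloudFront's create_invalidation."""
    items = list(paths)
    return {
        "Paths": {"Quantity": len(items), "Items": items},
        # CallerReference must be unique per request; a timestamp is enough here.
        "CallerReference": caller_reference or str(time.time()),
    }


def invalidate_cache(distribution_id, cloudfront_client=None):
    """Ask CloudFront to drop its cached copies so the next request hits S3."""
    if cloudfront_client is None:
        import boto3  # imported lazily so the batch can be built without it

        cloudfront_client = boto3.client("cloudfront")
    return cloudfront_client.create_invalidation(
        DistributionId=distribution_id,
        InvalidationBatch=build_invalidation(),
    )
```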

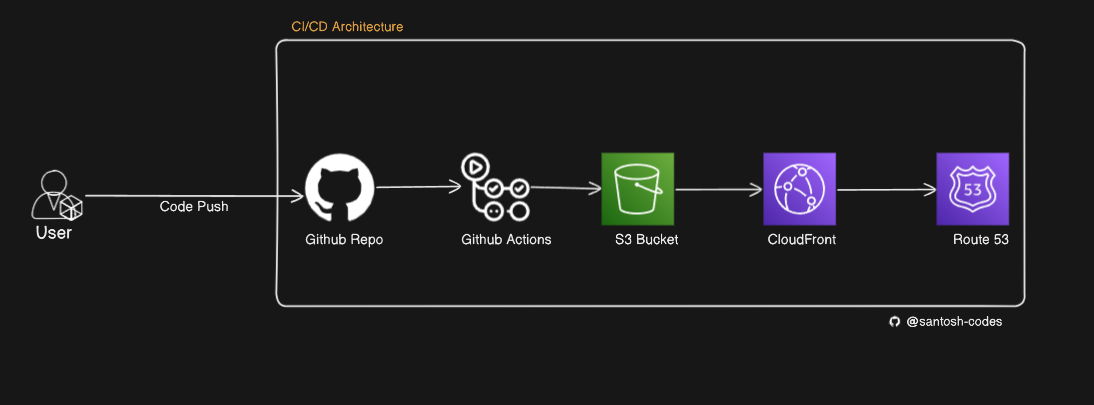

CI/CD Architecture Overview

The CI/CD pipeline for my resume website involves automating the deployment process using GitHub Actions. The architecture ensures that any changes pushed to the main branch of the GitHub repository are automatically deployed to the S3 bucket, and the CloudFront distribution is updated accordingly.

Explanation of the CI/CD Flow

User Pushes Code: The process begins when a user (developer) pushes changes to the main branch of the GitHub repository.

GitHub Actions: GitHub Actions, a flexible and integrated CI/CD tool, detects the push event and triggers the workflow defined in the repository.

Update S3 Bucket: The workflow includes steps to upload the updated website files (HTML, CSS, JavaScript) to the S3 bucket. This ensures that the S3 bucket always contains the latest version of the resume website.

Invalidate CloudFront Cache: After the S3 bucket is updated, the workflow includes a step to invalidate the CloudFront cache. This ensures that CloudFront serves the most recent version of the website to users, bypassing any cached content.

Route 53 Redirection: With the CloudFront cache invalidated, Route 53 directs requests to the CloudFront distribution as before, ensuring that users accessing resume.santoshadhikari1345.com.np receive the fresh, updated content.

Benefits of the CI/CD Pipeline

Automation: The entire deployment process is automated, reducing manual intervention and minimizing the risk of errors.

Consistency: Every push to the main branch triggers the same set of actions, ensuring consistent deployments.

Efficiency: The pipeline ensures that updates are quickly and efficiently propagated to the live website, providing users with the latest content without delay.

10. Infrastructure as Code with Terraform

Writing Terraform Configurations

Instead of using AWS CloudFormation, I opted for Terraform for managing my infrastructure as code (IaC). This was my first time using Terraform, and I was excited to learn this powerful tool. Terraform allowed me to define my AWS resources, such as the S3 bucket, Lambda functions, DynamoDB table, and CloudFront distribution, in a declarative configuration file.

Deploying Resources

With my Terraform configuration files ready, I used the terraform apply command to provision the resources. Terraform handled the creation and configuration of all required AWS services, ensuring a consistent and repeatable infrastructure setup. This experience gave me valuable insights into Terraform's capabilities and how it compares to CloudFormation, enriching my overall cloud management skills.

11. Future Improvements

I have tried to incorporate all the necessary features to complete this challenge successfully, but there are still certain aspects missing in this project that I would love to enhance in the future.

Testing

Manual Testing: Before automating tests, it is crucial to perform thorough manual testing. This involves verifying that each feature of the resume website functions as expected. Manual tests should cover various scenarios, including different browsers and devices, to ensure compatibility and responsiveness.

Automated Testing with Selenium: To streamline the testing process and ensure ongoing reliability, automated tests can be implemented using Selenium. Selenium allows us to write scripts in Python to automatically perform actions on the website, such as navigating pages, filling out forms, and verifying content. This approach ensures that new changes do not introduce bugs and that the website continues to function correctly over time.
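Such a test might look like the following sketch. It assumes Selenium and a Chrome driver are installed, and that the page shows the count in an element with the ID `visitor-count`. That ID is hypothetical and would need to match the real markup.

```python
def parse_visitor_count(text):
    """Return the displayed count as an int, or None if it is not a number."""
    cleaned = text.strip().replace(",", "")
    return int(cleaned) if cleaned.isdigit() else None


def check_resume_page(url, element_id="visitor-count", timeout=10):
    """Open the page in a browser and return the visitor count it displays."""
    # Selenium is imported here so the parsing helper can be used without it.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait

    driver = webdriver.Chrome()
    try:
        driver.get(url)
        # The counter is filled in by JavaScript, so wait until it holds a number.
        WebDriverWait(driver, timeout).until(
            lambda d: parse_visitor_count(d.find_element(By.ID, element_id).text)
            is not None
        )
        return parse_visitor_count(driver.find_element(By.ID, element_id).text)
    finally:
        driver.quit()
```

Waiting for a numeric value, rather than only for the element to exist, catches the case where the page loads but the call to the Lambda function fails.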

Performance Optimization

Further optimization of the website's performance can be achieved by enabling compression on CloudFront, optimizing images and assets, and using server-side rendering for dynamic content.

Security Enhancements

Security can be strengthened by implementing AWS WAF (Web Application Firewall) to protect against common web exploits and by configuring IAM roles with least-privilege principles to secure access to AWS resources.
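For the Lambda function's role, least privilege could mean allowing only the single DynamoDB action the counter needs, on the single table it touches. The sketch below builds such a policy document. The table ARN in the usage note is a placeholder, and the choice of `dynamodb:UpdateItem` assumes the counter uses one update call.

```python
import json


def build_counter_policy(table_arn):
    """Build an IAM policy allowing only item updates on one DynamoDB table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:UpdateItem"],
                "Resource": table_arn,  # scoped to one table, not "*"
            }
        ],
    }


def policy_as_json(table_arn):
    """Serialize the policy for use in the console, the CLI, or an IaC tool."""
    return json.dumps(build_counter_policy(table_arn), indent=2)
```

For example, `policy_as_json("arn:aws:dynamodb:us-east-1:123456789012:table/visitor-count")` produces a document that could be attached to the function's execution role.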

Feature Expansion

Adding new features to the resume website, such as a contact form, a blog section, or integration with the LinkedIn API to fetch real-time data, can make the website more interactive and engaging.

Conclusion

The AWS Cloud Resume Challenge provided me with a comprehensive learning experience, covering a wide range of AWS services and best practices for deploying a web application in the cloud. By building and deploying my personal resume website, I gained hands-on experience with S3, CloudFront, Lambda, DynamoDB, and more. Implementing a CI/CD pipeline with GitHub Actions and managing infrastructure as code with Terraform enhanced my understanding of modern DevOps practices.

This resume website project will remain a dynamic and evolving part of my professional portfolio, demonstrating my commitment to learning and excellence in cloud architecture and development.

Access the Code

If you're interested in exploring the code behind this project, you can find all the files and configurations in my GitHub repository. This includes the HTML, CSS, and JavaScript for the website, as well as the Python scripts for Lambda functions, Terraform configurations for infrastructure, and GitHub Actions workflows for CI/CD.

For code access, visit my GitHub at: github.com/santosh-codes

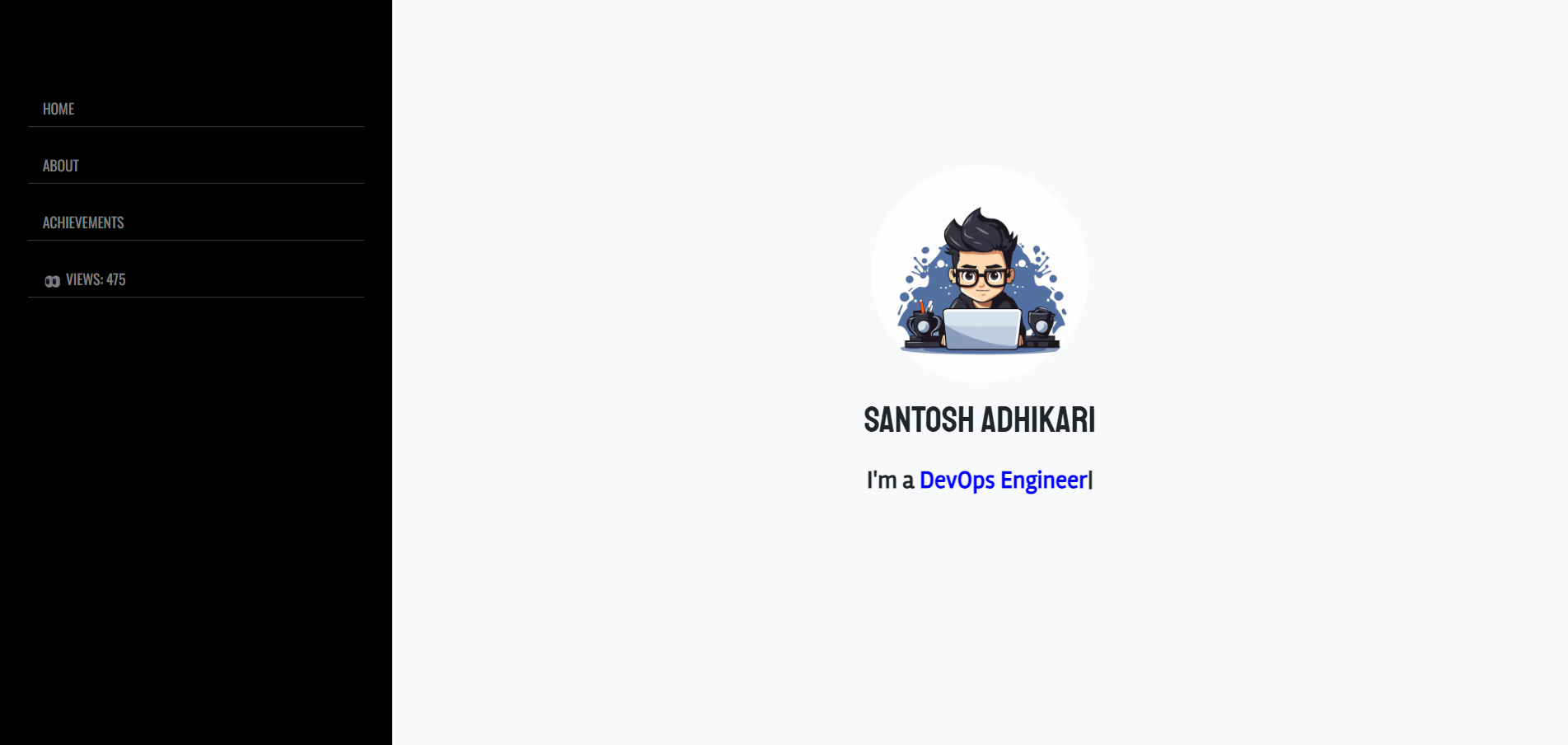

Website Preview

Website Link: resume.santoshadhikari1345.com.np

Written by

Santosh Adhikari

A DevOps Engineer with a deep passion for AWS and Cloud Computing.