Network Observability using Hubble

Umair Khan

Hubble

Hubble is a fully distributed networking observability platform.

It's built on top of Cilium and eBPF.

Hubble was created and specifically designed to make the best use of the new capabilities that eBPF provides.

Hubble Insights

Hubble can provide insights in the following areas:

Service dependencies & communication map

What services are communicating with each other? How frequently? What does the service dependency graph look like?

What HTTP calls are being made? What Kafka topics does a service consume from or produce to?

Network monitoring & alerting

Is any network communication failing? Why is communication failing? Is it DNS? Is it an application or network problem? Is the communication broken on layer 4 (TCP) or layer 7 (HTTP)?

Which services have experienced a DNS resolution problem in the last 5 minutes? Which services have experienced an interrupted TCP connection recently or have seen connections timing out? What is the rate of unanswered TCP SYN requests?

Application monitoring

What is the rate of 5xx or 4xx HTTP response codes for a particular service or across all clusters?

What are the 95th and 99th percentile latencies between HTTP requests and responses in my cluster? Which services are performing the worst? What is the latency between services?
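To make the application-monitoring questions concrete, here is a minimal Python sketch of the arithmetic behind them. It computes 4xx/5xx error rates and nearest-rank p95/p99 latencies from a list of L7 HTTP response flows. It assumes each response flow exposes `l7.type`, `l7.latency_ns` and `l7.http.code` fields in Hubble's JSON output. The sample flows and the `http_stats` helper are hypothetical. In a real cluster you would more likely rely on Hubble's built-in metrics than on hand-rolled code like this.

```python
import math

# Hypothetical L7 response flows shaped like Hubble JSON output.
# Assumed fields: l7.type, l7.latency_ns, l7.http.code.
CODES = [200, 200, 404, 200, 200, 503, 200, 200, 200, 200]
SAMPLE_FLOWS = [
    {"l7": {"type": "RESPONSE", "latency_ns": (i + 1) * 1_000_000, "http": {"code": code}}}
    for i, code in enumerate(CODES)
]
# Requests carry no status code or latency, so they must be skipped.
SAMPLE_FLOWS.append({"l7": {"type": "REQUEST", "http": {"method": "GET"}}})


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def http_stats(flows):
    """Error rates and latency percentiles over HTTP response flows."""
    responses = [
        f["l7"] for f in flows
        if f.get("l7", {}).get("type") == "RESPONSE" and "http" in f["l7"]
    ]
    if not responses:
        return None
    codes = [r["http"]["code"] for r in responses]
    latencies_ms = [r.get("latency_ns", 0) / 1e6 for r in responses]
    return {
        "responses": len(codes),
        "error_4xx_rate": sum(400 <= c < 500 for c in codes) / len(codes),
        "error_5xx_rate": sum(500 <= c < 600 for c in codes) / len(codes),
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
    }


if __name__ == "__main__":
    print(http_stats(SAMPLE_FLOWS))
```

With only ten samples, both percentiles land on the slowest response (10 ms). Meaningful tail percentiles need many more observations.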

Security observability

- Which services had connections blocked due to network policy? What services have been accessed from outside the cluster? Which services have resolved a particular DNS name?

Operational Components

Hubble Server

Runs on each node as part of the Cilium agent.

Implements the gRPC Observer service, which provides access to the network flows on that node.

Implements the gRPC Peer service, which Hubble Relay uses to discover peer Hubble servers.

Hubble Peer Service

- Used by Hubble Relay to discover available Hubble servers in the cluster.

Hubble Relay Deployment

Communicates with the cluster-wide Hubble Peer service to discover Hubble servers in the cluster.

Keeps persistent connections to the gRPC API of each Hubble server.

Exposes an API for cluster-wide observability.
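Conceptually, the relay combines several time-ordered per-node streams into one cluster-wide stream, which resembles a k-way merge. The Python sketch below illustrates only that idea, using `heapq.merge` over hypothetical in-memory per-node lists. It is not Hubble Relay's actual implementation, which talks to each Hubble server over gRPC. The `time_key` and `merge_node_streams` helpers and the second node name are hypothetical.

```python
import heapq
from datetime import datetime, timezone


def time_key(ts):
    """Sortable key for an RFC 3339 UTC timestamp ending in 'Z' with 0-9 fractional digits."""
    base, _, frac = ts.rstrip("Z").partition(".")
    seconds = datetime.strptime(base, "%Y-%m-%dT%H:%M:%S").replace(tzinfo=timezone.utc)
    return (seconds, int(frac.ljust(9, "0")))  # pad the fraction to nanoseconds


def merge_node_streams(streams):
    """K-way merge of per-node flow lists, each already sorted by time."""
    return list(heapq.merge(*streams, key=lambda flow: time_key(flow["time"])))


# Hypothetical per-node flow buffers (only the fields this sketch needs).
worker1 = [
    {"time": "2023-03-23T22:35:16.1Z", "node_name": "kind-kind/kind-worker"},
    {"time": "2023-03-23T22:35:18.25Z", "node_name": "kind-kind/kind-worker"},
]
worker2 = [
    {"time": "2023-03-23T22:35:16Z", "node_name": "kind-kind/kind-worker2"},
    {"time": "2023-03-23T22:35:16.365272245Z", "node_name": "kind-kind/kind-worker2"},
]

for flow in merge_node_streams([worker1, worker2]):
    print(flow["time"], flow["node_name"])
```

Parsing the fractional seconds matters here. Compared as plain strings, `...:16Z` would sort after `...:16.1Z`, because `.` comes before `Z` in ASCII.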

Hubble Relay Service

Used by Hubble UI.

Can be exposed for use by the Hubble CLI tool.

Hubble UI Deployment

- Acts as the service backend for the Hubble UI service.

Hubble UI

- Used for cluster-wide network visualization.

Network Flows

Network flows are a key concept in Hubble.

Flows are like packet captures, but instead of focusing on the content of packets, flows focus on how packets flow through your Cilium-managed Kubernetes cluster.

Flows include contextual information that helps us figure out where in the cluster a network packet is coming from, where it’s going, and whether it was dropped or forwarded, without having to rely on knowing the source and destination IP addresses of the packet.

Below is an example of a flow captured using the Hubble CLI tool. It includes contextual metadata about the packet.

```json
{
  "time": "2023-03-23T22:35:16.365272245Z",
  "verdict": "DROPPED",
  "Type": "L7",
  "node_name": "kind-kind/kind-worker",
  "event_type": {
    "type": 129
  },
  "traffic_direction": "INGRESS",
  "is_reply": false,
  "Summary": "HTTP/1.1 PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port",
  "IP": {
    "source": "10.244.2.73",
    "destination": "10.244.2.157",
    "ipVersion": "IPv4"
  },
  "source": {
    "ID": 62,
    "identity": 63675,
    "namespace": "default",
    "labels": [...],
    "pod_name": "tiefighter"
  },
  "destination": {
    "ID": 601,
    "identity": 25788,
    "namespace": "default",
    "labels": [...],
    "pod_name": "deathstar-54bb8475cc-d8ww7",
    "workloads": [...]
  },
  "l4": {
    "TCP": {
      "source_port": 45676,
      "destination_port": 80
    }
  },
  "l7": {
    "type": "REQUEST",
    "http": {
      "method": "PUT",
      "Url": "http://deathstar.default.svc.cluster.local/v1/exhaust-port",
      ...
    }
  }
}
```
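This metadata answers "who talked to whom" without relying on IP addresses. The following minimal Python sketch shows that by parsing a trimmed copy of the flow above and building a one-line summary from the pod names, port, verdict and HTTP details. It uses only field names visible in the example. The `summarize_flow` and `endpoint_name` helpers are hypothetical.

```python
import json

# Trimmed copy of the example flow above (elided and unused fields omitted).
FLOW_JSON = """
{
  "time": "2023-03-23T22:35:16.365272245Z",
  "verdict": "DROPPED",
  "Type": "L7",
  "node_name": "kind-kind/kind-worker",
  "traffic_direction": "INGRESS",
  "IP": {"source": "10.244.2.73", "destination": "10.244.2.157", "ipVersion": "IPv4"},
  "source": {"ID": 62, "identity": 63675, "namespace": "default", "pod_name": "tiefighter"},
  "destination": {"ID": 601, "identity": 25788, "namespace": "default",
                  "pod_name": "deathstar-54bb8475cc-d8ww7"},
  "l4": {"TCP": {"source_port": 45676, "destination_port": 80}},
  "l7": {"type": "REQUEST",
         "http": {"method": "PUT",
                  "Url": "http://deathstar.default.svc.cluster.local/v1/exhaust-port"}}
}
"""


def endpoint_name(ep):
    """Return namespace/pod for an endpoint, or '?' if either is missing."""
    ns, pod = ep.get("namespace"), ep.get("pod_name")
    return f"{ns}/{pod}" if ns and pod else "?"


def summarize_flow(flow):
    """Build a one-line summary from identity metadata, never from the IP section."""
    src = endpoint_name(flow.get("source", {}))
    dst = endpoint_name(flow.get("destination", {}))
    port = flow.get("l4", {}).get("TCP", {}).get("destination_port", "?")
    http = flow.get("l7", {}).get("http", {})
    detail = f" {http.get('method')} {http.get('Url')}" if http else ""
    return f"{src} -> {dst}:{port} {flow['verdict']}{detail}"


flow = json.loads(FLOW_JSON)
print(summarize_flow(flow))
```

Running it prints `default/tiefighter -> default/deathstar-54bb8475cc-d8ww7:80 DROPPED PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port`.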

Filtering the Flow

To see the flow of events on a specific Kubernetes node, run the following from the Cilium agent pod on that node:

```shell
hubble observe --follow
```

To filter the flows and obtain the TIE fighter flow shown above:

```shell
kubectl -n kube-system exec -ti pod/cilium-w7r54 -c cilium-agent -- hubble observe --from-label "class=tiefighter" --to-label "class=deathstar" --verdict DROPPED --last 1 -o json
```

The above command uses the `--from-label` filter to make sure only flows with TIE fighter pods as the source are considered. The `--to-label` filter makes sure only flows with the Death Star endpoint as the destination are considered. The `--verdict` filter ensures only flows for `DROPPED` packets are considered.

To get cluster-wide observability, we need to enable the Hubble Relay service and expose it to our local workstation:

```shell
cilium hubble port-forward &
hubble status
hubble observe --to-label "class=deathstar" --verdict DROPPED --all
```

The output of the `hubble observe` command includes flows from both Death Star service backend pods. You don't need to do anything but filter on the `class=deathstar` label. The Hubble Relay service makes the necessary requests to the Hubble server running on each node and aggregates the flow information.

To open the Hubble UI for visualization:

```shell
cilium hubble ui
```
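The filters used in this section narrow the flows down, and a flow is shown only if it passes all of them. The Python sketch below applies `--from-label`, `--to-label` and `--verdict`-style checks to a list of flow dicts. It is a mental model only, not how the Hubble CLI implements filtering. It assumes labels appear in flows as strings like `k8s:class=tiefighter` and handles only exact `key=value` selectors. The helpers and sample flows are hypothetical.

```python
def has_label(endpoint, selector):
    """True if the endpoint has label `key=value`, ignoring a source prefix such as 'k8s:'."""
    for label in endpoint.get("labels", []):
        key_part, _, value = label.partition("=")
        key = key_part.split(":", 1)[-1]  # drop the assumed "k8s:" source prefix
        if f"{key}={value}" == selector:
            return True
    return False


def matches(flow, from_label=None, to_label=None, verdict=None):
    """A flow matches only if it satisfies every filter that was supplied (logical AND)."""
    if from_label and not has_label(flow.get("source", {}), from_label):
        return False
    if to_label and not has_label(flow.get("destination", {}), to_label):
        return False
    if verdict and flow.get("verdict") != verdict:
        return False
    return True


# Hypothetical flows reduced to the fields the filters inspect.
FLOWS = [
    {"verdict": "DROPPED",
     "source": {"pod_name": "tiefighter", "labels": ["k8s:class=tiefighter"]},
     "destination": {"pod_name": "deathstar-54bb8475cc-d8ww7", "labels": ["k8s:class=deathstar"]}},
    {"verdict": "FORWARDED",
     "source": {"pod_name": "tiefighter", "labels": ["k8s:class=tiefighter"]},
     "destination": {"pod_name": "deathstar-54bb8475cc-d8ww7", "labels": ["k8s:class=deathstar"]}},
    {"verdict": "DROPPED",
     "source": {"pod_name": "xwing", "labels": ["k8s:class=xwing"]},
     "destination": {"pod_name": "deathstar-54bb8475cc-d8ww7", "labels": ["k8s:class=deathstar"]}},
]

# Rough analogue of: --from-label "class=tiefighter" --to-label "class=deathstar" --verdict DROPPED
hits = [f for f in FLOWS
        if matches(f, from_label="class=tiefighter", to_label="class=deathstar", verdict="DROPPED")]
print([f["source"]["pod_name"] for f in hits])
```

Dropping the `from_label` argument mirrors the relay-backed `--to-label "class=deathstar" --verdict DROPPED` query. That query would also return the dropped Xwing flow.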

Network Observability Example

We will use Hubble to observe our cluster's network traffic and then update our CiliumNetworkPolicy accordingly.

First, we need to install the Hubble CLI locally and check that it works:

```shell
hubble version
```

We will establish a local port-forward to the Hubble Relay service using the Cilium CLI tool:

```shell
cilium hubble port-forward &
```

Check the status of Hubble:

```shell
hubble status
```

Now let's make some requests from Xwing to Deathstar, which will be denied, and then observe them using the Hubble CLI:

```shell
kubectl exec xwing -- curl --connect-timeout 2 -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
hubble observe --label "class=xwing" --last 10
```
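You may prefer to post-process these flows instead of reading them by eye. One option is to request JSON output with `-o json`, as in the earlier TIE fighter example, and tally the records by destination and verdict. The following Python sketch does that with `collections.Counter`, under several assumptions:
- The sample lines are hypothetical and heavily abbreviated, and the pod names are made up.
- A destination's `workloads` entries are assumed to carry a `name`.
- The JSON layout may differ between CLI versions, so the code accepts each record either as a bare flow or wrapped in a `flow` key.

```python
import json
from collections import Counter

# Hypothetical, abbreviated JSON lines for flows originating from the Xwing pod.
JSON_LINES = """\
{"verdict": "FORWARDED", "destination": {"namespace": "kube-system", "pod_name": "coredns-565d847f94-abcde", "workloads": [{"name": "coredns"}]}}
{"verdict": "FORWARDED", "destination": {"namespace": "kube-system", "pod_name": "coredns-565d847f94-fghij", "workloads": [{"name": "coredns"}]}}
{"verdict": "DROPPED", "destination": {"namespace": "default", "pod_name": "deathstar-54bb8475cc-d8ww7"}}
{"verdict": "DROPPED", "destination": {"namespace": "default", "pod_name": "deathstar-54bb8475cc-d8ww7"}}
"""


def destination_key(flow):
    """namespace/name of the destination, preferring the workload name over the pod name."""
    dst = flow.get("destination", {})
    workloads = dst.get("workloads") or [{}]
    name = workloads[0].get("name") or dst.get("pod_name", "?")
    return f"{dst.get('namespace', '?')}/{name}"


def tally(json_lines):
    """Count flows per (destination, verdict) pair."""
    counts = Counter()
    for line in json_lines.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        flow = record.get("flow", record)  # accept both bare and wrapped records
        counts[(destination_key(flow), flow.get("verdict", "UNKNOWN"))] += 1
    return counts


for (dst, verdict), n in sorted(tally(JSON_LINES).items()):
    print(f"{n:3d}  {verdict:<10} {dst}")
```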

We can see flow output that

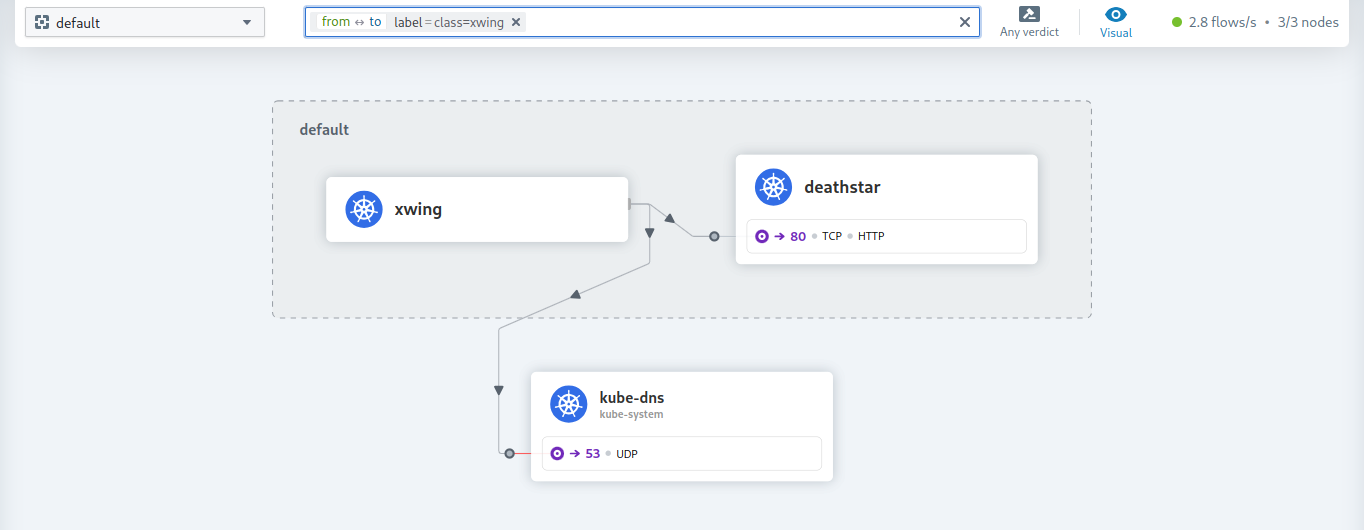

default/deathstar-*have droped but requests tokube-system/coredns-*are being forwared beacusecurlcommand used from the Xwing pod made DNS lookup requests to the clusterwide DNS service.Let’s use the Hubble UI and get a service-map view of the situation

```shell
cilium hubble ui
```

By selecting the default namespace and applying the `class=xwing` filter, we can see the following service map:

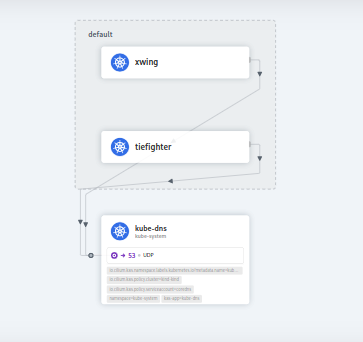

- By clicking on kube-dns, we can see that both Xwing and Tiefighter are communicating with it.

- We need to explicitly deny Xwing access to the DNS service using a network policy.

Save the following policy as `xwing-dns-deny-policy.yaml`:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "xwing-dns-deny"
spec:
  endpointSelector:
    matchLabels:
      class: xwing
  egressDeny:
  - toEndpoints:
    - matchLabels:
        namespace: kube-system
        k8s-app: kube-dns
```

The policy applies to all pods labeled

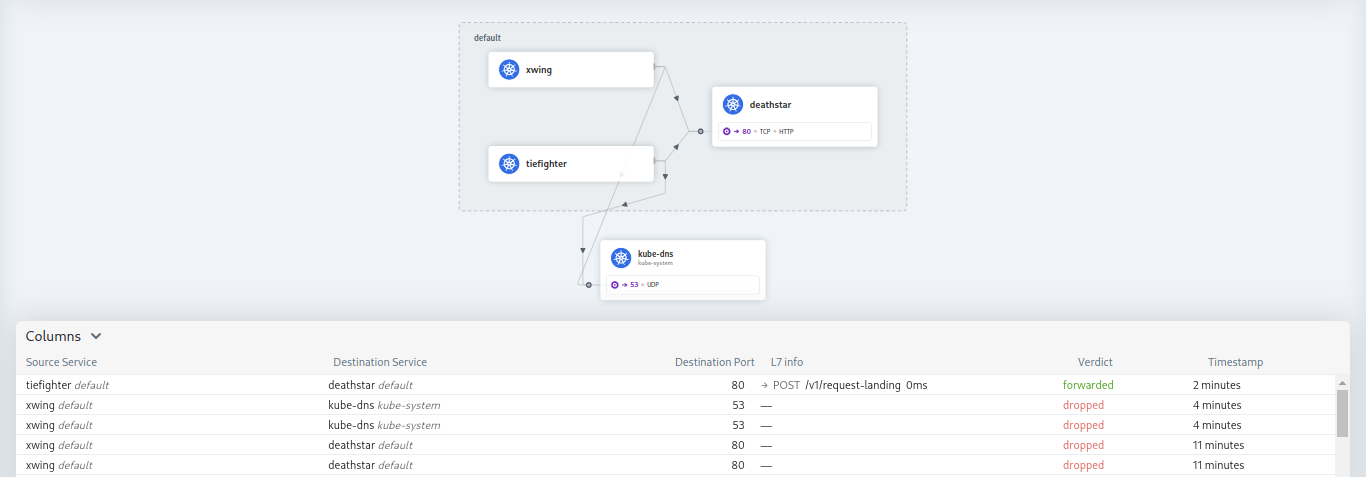

class=xwingand will restrict packet egress to any endpoint labeled ask8s-app=kube-dnsin the kube-system namespace.kubectl apply -f xwing-dns-deny-policy.yamlNow if we make requests from Xwing to Deathstar we will be able to see that flows between Xwing and DNS are being dropped.

```shell
kubectl exec xwing -- curl --connect-timeout 2 -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
hubble observe --label="class=xwing" --to-namespace "kube-system"
```

We can also see that requests from Tiefighter to DNS are still being forwarded:

```shell
kubectl exec tiefighter -- curl --connect-timeout 2 -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
hubble observe --label="class=tiefighter" --to-namespace "kube-system" --last 1
```

We can see these flows in the Hubble UI as well.
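The outcome above follows from the policy's two selectors: Xwing's DNS flows are dropped while Tiefighter's are forwarded. The Python sketch below is a deliberately simplified model of `xwing-dns-deny`, not Cilium's policy engine. It treats every `matchLabels` key, including `namespace`, as a plain label lookup. It also ignores allow rules and rule precedence. The `selects` and `egress_denied` helpers and the label sets are hypothetical.

```python
# Simplified model of the xwing-dns-deny policy; NOT Cilium's policy engine.
POLICY = {
    "endpointSelector": {"matchLabels": {"class": "xwing"}},
    "egressDeny": [
        {"toEndpoints": [{"matchLabels": {"namespace": "kube-system", "k8s-app": "kube-dns"}}]},
    ],
}


def selects(selector, labels):
    """matchLabels semantics: every key/value pair must be present on the endpoint."""
    return all(labels.get(k) == v for k, v in selector["matchLabels"].items())


def egress_denied(policy, src_labels, dst_labels):
    """True if the policy selects the source and a deny rule selects the destination."""
    if not selects(policy["endpointSelector"], src_labels):
        return False  # the policy does not apply to this source endpoint
    return any(
        selects(peer, dst_labels)
        for rule in policy["egressDeny"]
        for peer in rule["toEndpoints"]
    )


# Hypothetical label sets for the endpoints in this walkthrough.
xwing = {"class": "xwing"}
tiefighter = {"class": "tiefighter"}
kube_dns = {"namespace": "kube-system", "k8s-app": "kube-dns"}

print("xwing -> kube-dns denied:", egress_denied(POLICY, xwing, kube_dns))            # True
print("tiefighter -> kube-dns denied:", egress_denied(POLICY, tiefighter, kube_dns))  # False
```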
