An Agnostic Approach to LLM, Vector DB, and Embedding for Semantic Search

Leandro Martins

With so many LLM and vector database options on the market, it is increasingly important to design an approach that abstracts these solutions, so that future maintenance, or even a switch of provider, stays manageable. With that in mind, this article presents an agnostic approach to LLMs and vector databases for semantic search.

What is a Vector DB?

The term "Vector Database" refers to a type of database management system specifically designed to store, index, and query vectors. These vectors are numerical representations of data in a multidimensional space, commonly used in machine learning and natural language processing (NLP) applications such as semantic search, recommendation systems, and anomaly detection.

In the image below, we can see a visual example of vectorized data in a vector space, as stored in a vector database. The closer the words are to each other, the more similar their context.
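To make the idea of "closeness" concrete, here is a minimal sketch that compares words by cosine similarity. The two-dimensional vectors are invented by hand for illustration; real embeddings have hundreds or thousands of dimensions and come from a model, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hand-picked 2-D vectors, invented for this example (not produced by a model).
WORD_VECTORS = {
    "cat": [0.9, 0.1],
    "dog": [0.8, 0.2],
    "car": [0.1, 0.9],
}


def nearest(word):
    """Return the other word whose vector is most similar to the given word."""
    query = WORD_VECTORS[word]
    candidates = [
        (other, cosine_similarity(query, vector))
        for other, vector in WORD_VECTORS.items()
        if other != word
    ]
    return max(candidates, key=lambda pair: pair[1])[0]
```

With these made-up vectors, `nearest("cat")` picks "dog" rather than "car", because the "cat" and "dog" vectors point in nearly the same direction.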

What is Embedding?

The term “embedding” refers to a fundamental technique in semantic search using vectors and many other machine learning and natural language processing (NLP) applications. Embedding transforms raw data, such as words, phrases, paragraphs, images, or any other type of information, into vectors of numbers. These vectors represent the data in a way that machines can understand and process.
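As a rough illustration of "text in, vector of numbers out", the sketch below builds a fixed-size vector with a simple hashing trick. This is a deliberately naive stand-in and an assumption of this example: it only reflects which tokens appear, not their meaning, whereas a real embedding model is trained so that semantically similar texts land close together. The name `toy_embed` and the default of 16 dimensions are hypothetical.

```python
import hashlib
import math
import re


def toy_embed(text, dimensions=16):
    """Map text to a fixed-size, unit-length vector using token hashing.

    Not a real embedding model: it counts tokens per hash bucket only.
    """
    vector = [0.0] * dimensions
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # A stable hash keeps the mapping identical across runs and machines.
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % dimensions
        vector[index] += 1.0
    norm = math.sqrt(sum(value * value for value in vector))
    if norm == 0:
        return vector  # empty input: all zeros
    return [value / norm for value in vector]
```

The important property it shares with real embeddings is the shape of the contract: any text, of any length, becomes a vector of the same size that can be stored and compared.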

Semantic Search

Semantic search using vectors is an advanced approach that goes beyond simple keyword matching, allowing the system to understand the meaning, or semantics, of what is being searched. This approach uses deep learning techniques and natural language processing (NLP) to interpret and find relevant content based on the actual meaning of the query, even if the exact words are not present in the documents or data items.

Proposed Approach

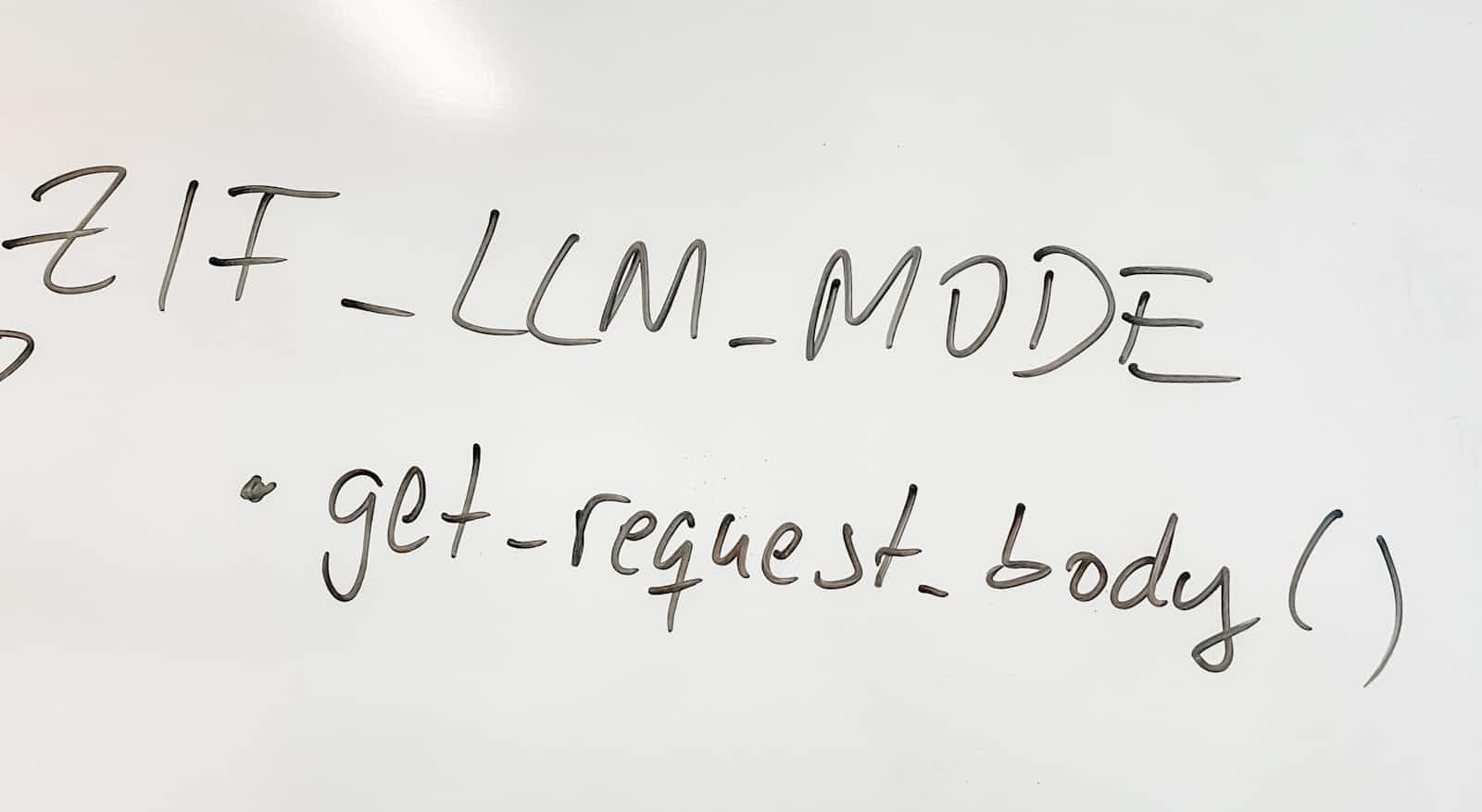

The image above shows an agnostic approach to LLM and Vector DB for semantic search using embeddings. I will detail each stage of the flow below.

Data Layer

- Database / AWS S3: The source data is loaded and stored in a database or a cloud storage service, such as AWS S3, known for its durability and scalability.
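One way to keep this layer swappable is to hide the storage behind a small interface. The sketch below is an assumption of this example, not part of the original diagram: `DocumentSource`, `LocalFolderSource`, and `load_all` are hypothetical names, and a local folder stands in for a database or an S3 bucket. An S3-backed class would implement the same `load` method.

```python
from pathlib import Path
from typing import Dict, Iterator, Protocol, Tuple


class DocumentSource(Protocol):
    """Anything that can yield (document_id, text) pairs."""

    def load(self) -> Iterator[Tuple[str, str]]:
        ...


class LocalFolderSource:
    """Reads text files from a folder; a stand-in for a database or S3."""

    def __init__(self, folder: str, pattern: str = "*.txt") -> None:
        self.folder = Path(folder)
        self.pattern = pattern

    def load(self) -> Iterator[Tuple[str, str]]:
        # Sorted so the order is stable between runs.
        for path in sorted(self.folder.glob(self.pattern)):
            yield path.name, path.read_text(encoding="utf-8")


def load_all(source: DocumentSource) -> Dict[str, str]:
    """Collect every document from any source that follows the interface."""
    return dict(source.load())
```

The rest of the pipeline only calls `load_all`, so replacing the folder with another backend does not touch the later stages.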

Data Splitting

- Divide data (chunked): The data is divided into smaller parts, or "chunks," to facilitate processing. This is useful when dealing with large volumes of data that cannot be processed at once due to memory constraints or to optimize parallelism.
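A minimal sketch of chunking by character count follows. The overlap between consecutive chunks is an assumption added here (it is a common way to avoid cutting a sentence's context at a boundary, but the article does not prescribe it), and the function name and default sizes are hypothetical.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap
    chunks: List[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks
```

For example, `chunk_text("abcdefghij", chunk_size=4, overlap=1)` returns `["abcd", "defg", "ghij"]`. Splitting on sentence or paragraph boundaries, or by token count, are alternatives worth considering for real data.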

LLM Layer

- Embed Text: At this stage, the text data is converted into numerical vectors using a large language model (LLM), such as those provided by OpenAI (GPT-3, for example) or Amazon Bedrock.
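To keep this stage provider-agnostic, the calling code can depend on a small interface instead of a specific SDK. In the sketch below, `Embedder`, `LengthEmbedder`, and `embed_chunks` are hypothetical names; `LengthEmbedder` is a fake used only to show the shape of the contract, and a real implementation would wrap the OpenAI or Amazon Bedrock client behind the same `embed` method.

```python
from typing import List, Protocol, Sequence, Tuple


class Embedder(Protocol):
    """Contract every embedding provider adapter is expected to follow."""

    def embed(self, texts: Sequence[str]) -> List[List[float]]:
        ...


class LengthEmbedder:
    """Fake provider for illustration: [character count, space count]."""

    def embed(self, texts: Sequence[str]) -> List[List[float]]:
        return [[float(len(text)), float(text.count(" "))] for text in texts]


def embed_chunks(
    embedder: Embedder, chunks: Sequence[str]
) -> List[Tuple[str, List[float]]]:
    """Pair each chunk with its vector, whatever provider is plugged in."""
    vectors = embedder.embed(chunks)
    return list(zip(chunks, vectors))
```

Switching providers then means writing one new adapter class; `embed_chunks` and everything downstream stay unchanged. Note that vectors from different models are not comparable, so changing the model usually requires re-embedding the stored data.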

DB Layer

- Save embed text: After converting the texts into embeddings, these vectors are saved in a vector database. Here we have options like MongoDB, Pinecone, and Supabase. Each of these systems can be used to efficiently store and retrieve vectors.
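The same idea applies to storage. The sketch below defines a hypothetical `VectorStore` interface with an in-memory implementation that ranks by cosine similarity. It is only a stand-in for illustration: an adapter for MongoDB, Pinecone, or Supabase would expose the same two methods and delegate to that product's own client, which is not shown here.

```python
import math
from typing import Dict, List, Protocol, Sequence, Tuple


class VectorStore(Protocol):
    """Contract every vector database adapter is expected to follow."""

    def upsert(self, item_id: str, vector: Sequence[float], text: str) -> None:
        ...

    def query(self, vector: Sequence[float], top_k: int = 3) -> List[Tuple[str, float]]:
        ...


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Keeps vectors in a dict and scans all of them on every query."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[List[float], str]] = {}

    def upsert(self, item_id: str, vector: Sequence[float], text: str) -> None:
        # Writing the same id again replaces the previous entry.
        self._items[item_id] = (list(vector), text)

    def query(self, vector: Sequence[float], top_k: int = 3) -> List[Tuple[str, float]]:
        scored = [
            (text, _cosine(vector, stored))
            for stored, text in self._items.values()
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]
```

A full scan like this is fine for tests and small datasets; dedicated vector databases use approximate nearest-neighbor indexes to stay fast at scale.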

User Input and Search

- Search embed text: The user's query is converted into an embedding with the same model used for the stored texts, and the vector database is then searched for the texts that are semantically closest to that query.
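Putting the stages together, a search service can receive its embedding function and its store from the outside, so neither is hard-coded. Everything in this sketch is hypothetical and simplified: `vocabulary_embed` just counts words from a tiny fixed vocabulary (a real model would capture meaning, not word overlap), and `ListStore` stands in for a real vector database client.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

# Tiny fixed vocabulary, invented for this example.
VOCABULARY = ("cat", "dog", "pet", "car", "engine", "road")


def vocabulary_embed(text: str) -> Vector:
    """Stand-in embedding: one count per vocabulary word."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCABULARY]


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ListStore:
    """Stand-in for a vector database client."""

    def __init__(self) -> None:
        self.rows: List[Tuple[str, Vector]] = []

    def save(self, text: str, vector: Vector) -> None:
        self.rows.append((text, vector))

    def nearest(self, vector: Vector, top_k: int) -> List[str]:
        ranked = sorted(
            self.rows, key=lambda row: _cosine(vector, row[1]), reverse=True
        )
        return [text for text, _ in ranked[:top_k]]


class SemanticSearch:
    """Depends only on an embed function and a store passed in by the caller."""

    def __init__(self, embed: Callable[[str], Vector], store: ListStore) -> None:
        self.embed = embed
        self.store = store

    def index(self, texts: Sequence[str]) -> None:
        for text in texts:
            self.store.save(text, self.embed(text))

    def search(self, query: str, top_k: int = 1) -> List[str]:
        # The query goes through the same embed function as the indexed texts.
        return self.store.nearest(self.embed(query), top_k)
```

A short usage example under the same assumptions:

```python
search = SemanticSearch(vocabulary_embed, ListStore())
search.index(["my cat is a pet", "the car engine is loud"])
print(search.search("dog pet"))
```

The query shares no exact sentence with the first document, yet it is returned because their vectors overlap on "pet" while the second document's vector does not overlap at all.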

This approach aims to reduce dependency on any single solution. It can certainly be improved and refined by consulting the documentation of the chosen LLM and Vector DB in more depth.

Written by

Leandro Martins

I work as a software architect and developer, I'm curious and I like trying new things. I like writing and helping people.