Caching: So common, yet so impactful

Vishwajeet Singh

What is a Cache?

When you're fixing something with a screwdriver at home or anywhere else, you don't put the screwdriver back in its place every time you use it. Instead, you keep it near your work area to save time and avoid going back and forth.

Similarly, computers save frequently used data temporarily in a fast storage space called cache memory, and this process is known as caching.

Now that you understand what caching is, let's see how it is used on the modern internet. From your DNS queries to watching live TV shows, caching is used almost everywhere.

Caching reduces the overall load on the main database, resulting in faster performance and lower latency.

Caching at different layers

• Client-side cache

• DNS cache

• Web server cache

• Application cache

• Database cache

• CDN (Content Delivery Network) cache

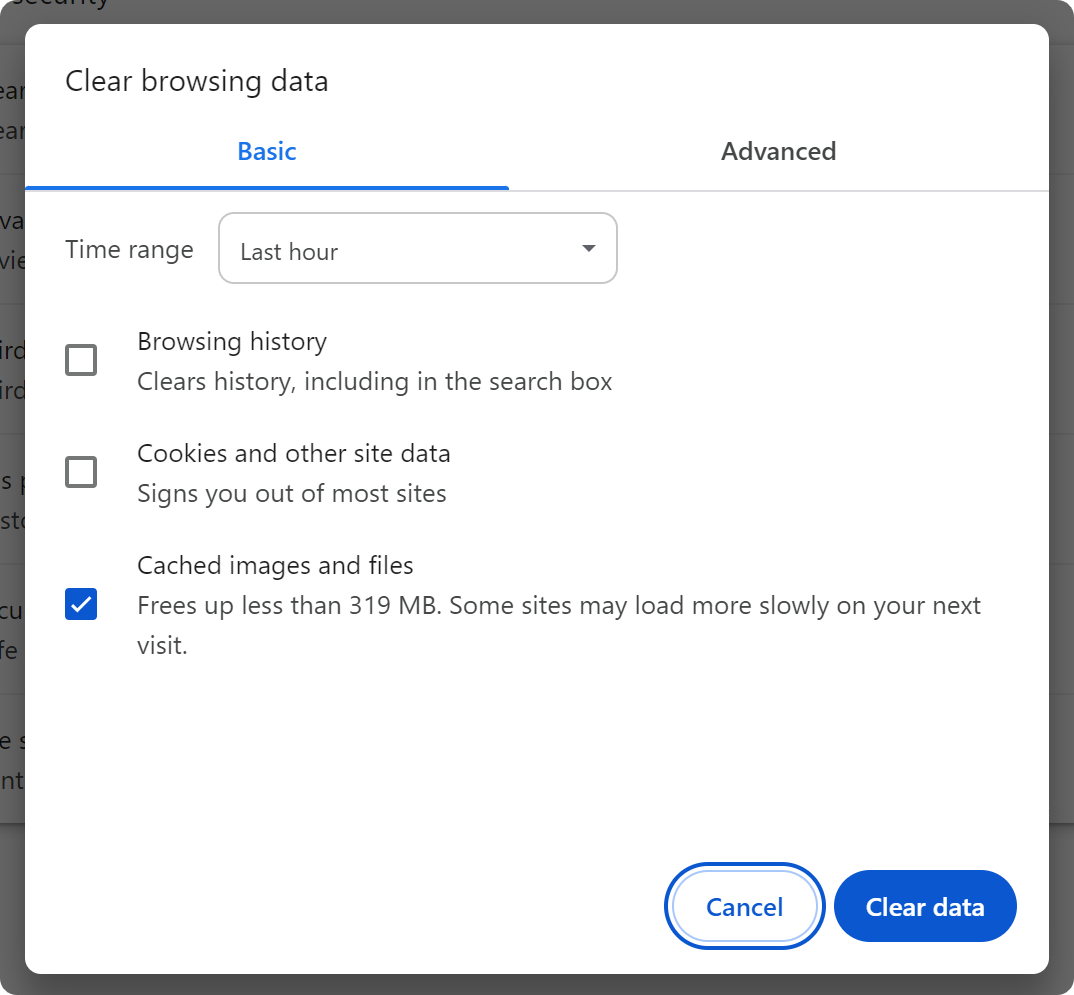

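• Client-side cache

Client-side cache is used to speed up access to web content. It lives in the browser itself: when a user visits a web page, the browser stores resources such as HTML, CSS, JavaScript, and images in its cache, so that on the next visit it can load them locally instead of downloading them again.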
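A minimal sketch of how a browser might decide whether a cached response is still fresh, assuming the server sent a `Cache-Control` header. Real browsers implement far more of the HTTP caching rules (revalidation with `ETag`, `no-cache`, heuristic freshness); this sketch only honors `max-age` and `no-store`. The `BrowserCache` class and the explicit `now` timestamps (in seconds) are hypothetical, chosen to keep the example deterministic.

```python
def parse_directives(cache_control):
    """Split a Cache-Control header such as 'public, max-age=3600' into a dict."""
    directives = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        if name:
            directives[name.lower()] = value
    return directives


class BrowserCache:
    """Hypothetical, simplified browser cache honoring only max-age and no-store."""

    def __init__(self):
        self.entries = {}  # url -> (body, stored_at, max_age_seconds)

    def store(self, url, body, cache_control, now):
        directives = parse_directives(cache_control)
        if "no-store" in directives:
            return  # the server asked for this response not to be cached
        max_age = directives.get("max-age", "")
        if max_age.isdigit():
            self.entries[url] = (body, now, int(max_age))

    def lookup(self, url, now):
        entry = self.entries.get(url)
        if entry is None:
            return None  # never cached: must go to the network
        body, stored_at, max_age = entry
        if now - stored_at < max_age:
            return body  # still fresh: served from the local cache
        return None  # expired: must be fetched (or revalidated) again


cache = BrowserCache()
cache.store("/logo.png", b"<png bytes>", "public, max-age=3600", now=0)
print(cache.lookup("/logo.png", now=60))    # b'<png bytes>'
print(cache.lookup("/logo.png", now=7200))  # None
```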

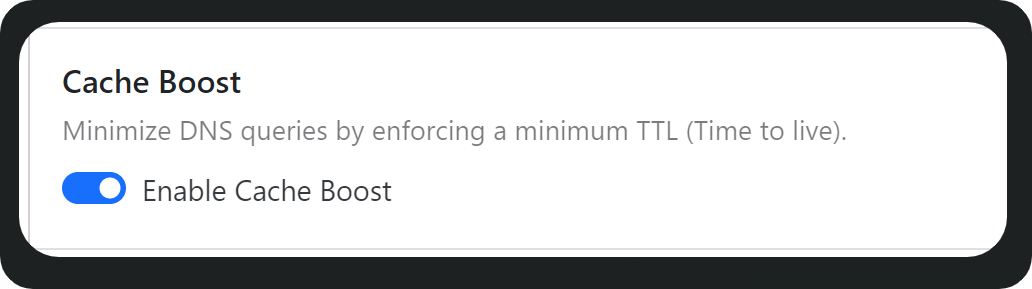

• DNS cache

Whenever we request something on the internet by its domain name, the request first goes to a DNS server to resolve that name to an IP address. The DNS cache stores that IP address for a limited time (the record's TTL), so the next time you visit the same site, a full DNS query isn't needed. Instead, the cached answer is used, resulting in lower latency and faster load times.
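Cached DNS records are not kept forever: each one carries a TTL (time to live), and once it expires a fresh query is needed. The sketch below assumes a hypothetical `resolver` function that returns an `(ip, ttl)` pair; in practice this caching happens in the operating system, the browser, or a recursive resolver rather than in application code. `203.0.113.10` is a documentation-only address.

```python
import time


class DnsCache:
    """Caches hostname -> IP mappings until each record's TTL expires."""

    def __init__(self, resolver, clock=time.monotonic):
        self.resolver = resolver  # hypothetical: performs the full DNS query, returns (ip, ttl)
        self.clock = clock        # injectable clock so expiry can be demonstrated
        self.records = {}         # hostname -> (ip, expires_at)

    def resolve(self, hostname):
        now = self.clock()
        record = self.records.get(hostname)
        if record is not None and now < record[1]:
            return record[0]  # cache hit: no DNS query needed
        ip, ttl = self.resolver(hostname)  # miss or expired: ask the DNS server
        self.records[hostname] = (ip, now + ttl)
        return ip


queries = []


def fake_resolver(hostname):
    queries.append(hostname)
    return "203.0.113.10", 300  # documentation IP with a 5-minute TTL


current_time = [0.0]
dns = DnsCache(fake_resolver, clock=lambda: current_time[0])
dns.resolve("example.com")
dns.resolve("example.com")  # answered from the cache
current_time[0] = 301.0     # the TTL has expired
dns.resolve("example.com")  # triggers a new query
print(len(queries))  # 2
```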

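• Web server cache

A web server cache stores generated responses (for example, rendered pages or static files) on the web or application server side, so repeated requests can be answered without regenerating the content. It can also be used to manage server-side web sessions.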

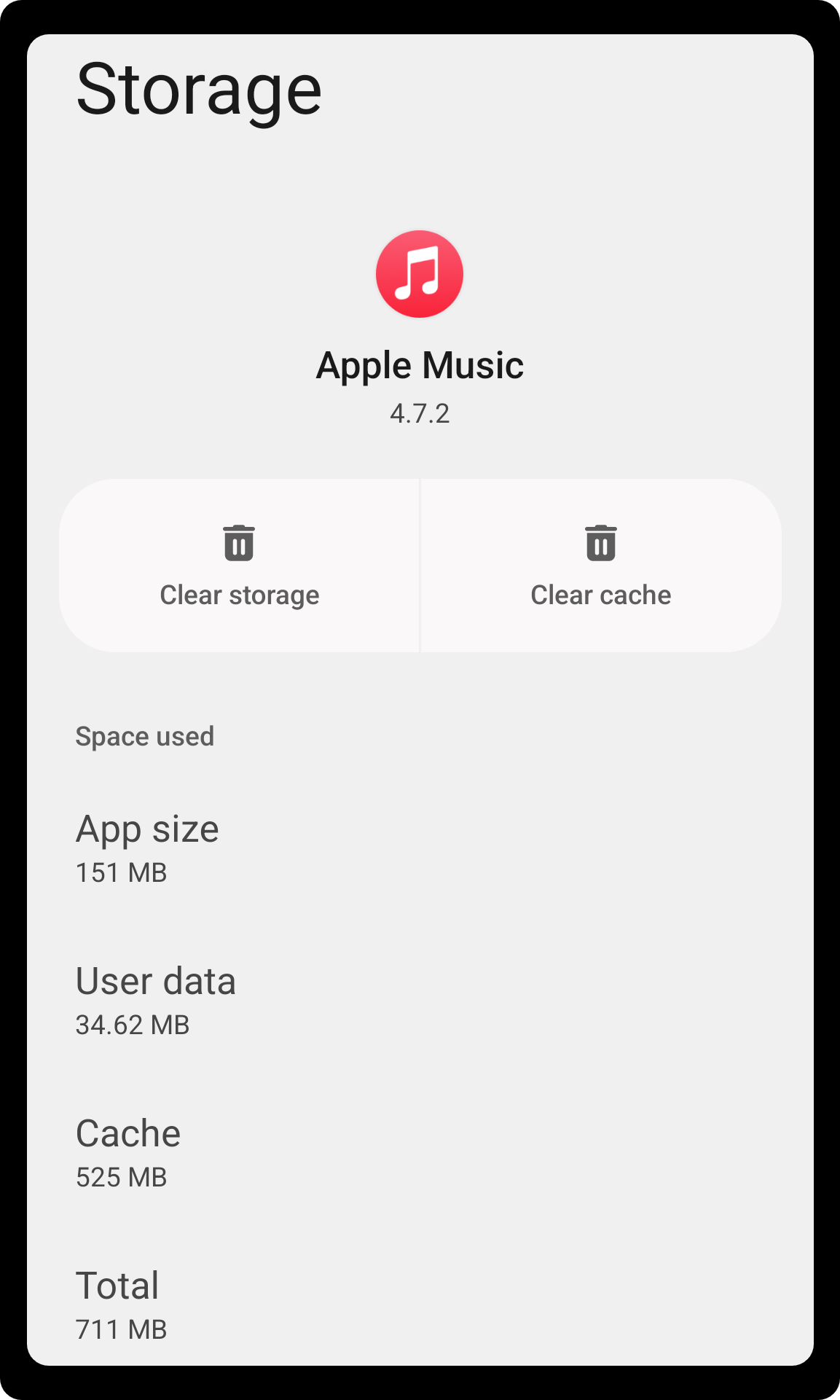

• Application cache

Applications cache frequently used data to improve performance and loading times. In this approach, the cache lives directly on the application server, typically in its memory or local storage. Each time a request reaches the service, the server quickly returns the locally cached data if it exists, and only fetches it from the underlying source otherwise.
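In Python, one simple way to keep an in-process application cache is `functools.lru_cache` from the standard library. The `get_product_price` function below is hypothetical and stands in for any expensive lookup, such as a call to another service.

```python
from functools import lru_cache

lookups = 0  # counts how often the expensive path actually runs


@lru_cache(maxsize=1024)  # keep up to 1024 results in this process's memory
def get_product_price(product_id: int) -> float:
    """Hypothetical expensive lookup, e.g. a call to a pricing service."""
    global lookups
    lookups += 1
    return product_id * 1.5


get_product_price(42)  # miss: computed and stored
get_product_price(42)  # hit: returned from the local cache
print(lookups)                         # 1
print(get_product_price.cache_info())  # CacheInfo(hits=1, misses=1, maxsize=1024, currsize=1)
```

Because this cache lives in a single process's memory, each application server keeps its own copy; `get_product_price.cache_clear()` empties it when the underlying data changes.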

• Database cache

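A database cache reduces query latency by keeping frequently accessed data in memory, either inside the database itself (database buffers) or in an external key/value data store placed in front of it.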
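The sketch below caches query results in a key/value map placed in front of the database, keyed by the SQL text and its parameters. It uses an in-memory SQLite database for illustration; `QueryCache` and its `invalidate` method are hypothetical names, and a production setup would more likely use a shared key/value store so every application server sees the same cache.

```python
import sqlite3


class QueryCache:
    """Caches SELECT results keyed by (sql, params) in front of a DB connection."""

    def __init__(self, conn):
        self.conn = conn
        self.results = {}    # (sql, params) -> list of rows
        self.db_queries = 0  # how many queries actually reached the database

    def query(self, sql, params=()):
        key = (sql, tuple(params))
        if key not in self.results:  # miss: run the query and remember the rows
            self.db_queries += 1
            self.results[key] = self.conn.execute(sql, params).fetchall()
        return self.results[key]

    def invalidate(self):
        self.results.clear()  # call after writes so readers don't see stale rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('Alice')")

cache = QueryCache(conn)
cache.query("SELECT name FROM users WHERE id = ?", (1,))
rows = cache.query("SELECT name FROM users WHERE id = ?", (1,))  # served from the cache
print(rows, cache.db_queries)  # [('Alice',)] 1
```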

• CDN (Content Delivery Network) cache

Static media (images, videos, audio, etc.) is usually not served directly from the main server, to avoid overloading it. Instead, copies of this media are cached on CDN servers located closer to users, so the main application doesn't have to handle all of these requests and users receive the content with lower latency.

Cache Invalidation

Cache invalidation means removing or updating cached data when the underlying data in the database changes. Otherwise, users receive outdated (stale) data, which can cause the application to behave incorrectly.

There are three common write strategies that determine how the cache is kept in sync with the database:

• Write through cache

• Write around cache

• Write back cache

Let's see how they work:

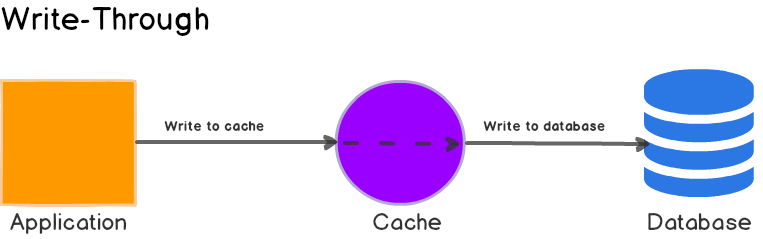

• Write through cache

In this method, changes are written to both the cache and the database. The write is considered successful only when both have been updated.

Pros: High consistency, robustness to system disruptions, fast reads.

Cons: High write latency, since every write has to update both the cache and the database.
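A minimal write-through sketch, assuming a plain dict stands in for the database; `WriteThroughCache` is a hypothetical name. The write returns only after both stores are updated, which is where the extra write latency comes from.

```python
class WriteThroughCache:
    """Every write updates the database and the cache before it is acknowledged."""

    def __init__(self, db):
        self.db = db     # any dict-like store standing in for the database
        self.cache = {}

    def write(self, key, value):
        self.db[key] = value     # 1. persist to the database
        self.cache[key] = value  # 2. update the cache
        # Only now is the write considered successful.

    def read(self, key):
        if key in self.cache:
            return self.cache[key]  # hit: recently written data is already cached
        value = self.db.get(key)    # miss: fall back to the database
        if value is not None:
            self.cache[key] = value
        return value


db = {}
store = WriteThroughCache(db)
store.write("user:1", "Alice")
print(db["user:1"], store.cache["user:1"])  # Alice Alice
```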

• Write around cache

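Write requests bypass the cache and go straight to the database. The cache is only filled when the data is later read.

Pros: Reduced write latency.

Cons: Increased read latency for recently written data, since the first read after a write is a cache miss; lower consistency if stale cached entries are not invalidated.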
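A minimal write-around sketch, again assuming a plain dict stands in for the database (`WriteAroundCache` is a hypothetical name). Writes skip the cache; this version also drops any existing cached copy of the key, because leaving it in place is what leads to stale reads and the consistency concern listed above.

```python
class WriteAroundCache:
    """Writes go straight to the database; the cache is filled only on reads."""

    def __init__(self, db):
        self.db = db     # any dict-like store standing in for the database
        self.cache = {}

    def write(self, key, value):
        self.db[key] = value       # bypass the cache entirely
        self.cache.pop(key, None)  # invalidate a stale copy, if there is one

    def read(self, key):
        if key in self.cache:
            return self.cache[key]  # hit
        value = self.db.get(key)    # miss: slower database read
        if value is not None:
            self.cache[key] = value  # populate the cache for later reads
        return value


db = {}
store = WriteAroundCache(db)
store.write("user:1", "Alice")
print("user:1" in store.cache)  # False: the write did not touch the cache
print(store.read("user:1"))     # Alice (a miss that fills the cache)
```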

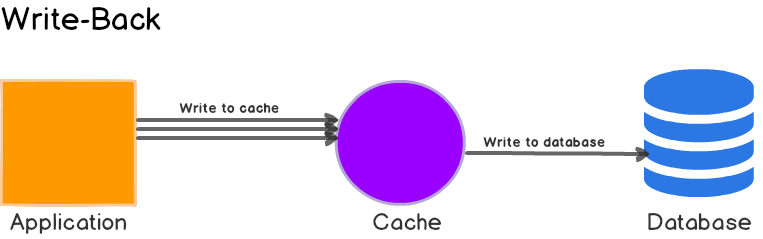

• Write back cache

In this method, a write is considered successful as soon as the cache layer is updated. The cache layer then updates the database asynchronously.

Pros: High write throughput, lower write latency.

Cons: Data can be lost if the cache layer fails before it has written the changes to the database, because until then the cache holds the only copy of the data.
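A minimal write-back sketch, assuming a dict stands in for the database; `WriteBackCache` and `flush` are hypothetical names. To keep it deterministic, `flush` is called explicitly here, whereas a real system would run it asynchronously (for example on a timer or background worker). Until `flush` runs, the changed values exist only in the cache, which is the data-loss risk described above.

```python
class WriteBackCache:
    """Writes are acknowledged once the cache is updated; the database is updated later."""

    def __init__(self, db):
        self.db = db        # any dict-like store standing in for the database
        self.cache = {}
        self.dirty = set()  # keys changed in the cache but not yet persisted

    def write(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)  # "successful" already, before the database sees it

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        return self.db.get(key)

    def flush(self):
        """Persist dirty entries; in practice this runs in the background."""
        for key in self.dirty:
            self.db[key] = self.cache[key]
        self.dirty.clear()


db = {}
store = WriteBackCache(db)
store.write("user:1", "Alice")
print("user:1" in db)  # False: only the cache holds the new value so far
store.flush()
print(db["user:1"])    # Alice
```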

Cache eviction

Cache eviction is the process of freeing up space in the cache by removing entries that are less likely to be needed. The following are common cache eviction methods:

• First In First Out (FIFO): The cache evicts the block that was added first, without any regard to how often or how many times it was accessed.

• Random Replacement (RR): Randomly selects a candidate item and discards it to make space when necessary.

• Least Frequently Used (LFU): Counts how often each item is accessed. Those that are used least often are discarded first.

• Last In First Out (LIFO): The cache evicts the block that was added most recently first, without any regard to how often or how many times it was accessed.

• Least Recently Used (LRU): Discards the least recently used items first.

• Most Recently Used (MRU): Discards the most recently used items first.

The most commonly used method is Least Recently Used (LRU).
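To make one of these policies concrete, here is a minimal LRU sketch built on `collections.OrderedDict` from the standard library. `LRUCache` is a hypothetical name; the dict's order tracks recency, so the first entry is always the least recently used one.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()  # ordered from least to most recently used

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used entry


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" becomes the most recently used
cache.put("c", 3)  # over capacity: evicts "b"
print(list(cache.items))  # ['a', 'c']
```

Removing both `move_to_end` calls turns this into FIFO eviction, since entries would then stay in insertion order.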

Cache hit & miss

When requested data is found in the cache, it is called a cache hit; when it is not found, it is called a cache miss.

When designing a cache for a system, it's important to analyze the ratio of cache hits to misses (the hit ratio) so that cache resources are used effectively.
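The hit ratio is hits / (hits + misses). A minimal way to measure it is to count both outcomes in the lookup path; `InstrumentedCache` and its `loader` callback below are hypothetical, with the loader standing in for the slower data source.

```python
class InstrumentedCache:
    """A dict-backed cache that records hits and misses."""

    def __init__(self, loader):
        self.loader = loader  # hypothetical: fetches a value on a miss
        self.data = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.data:
            self.hits += 1
        else:
            self.misses += 1
            self.data[key] = self.loader(key)
        return self.data[key]

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = InstrumentedCache(loader=lambda key: key.upper())
for key in ["a", "b", "a", "a", "c", "b"]:
    cache.get(key)
print(cache.hits, cache.misses, cache.hit_ratio())  # 3 3 0.5
```

A low hit ratio suggests the cache is too small, the eviction policy doesn't fit the access pattern, or the data isn't a good candidate for caching.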

