Beat Overfitting: Essential Regularization Techniques for Machine Learning

Bitingo Josaphat

In machine learning, overfitting is a common problem where models perform well on training data but fail to generalize to unseen data. This article explores essential regularization techniques that help combat overfitting and improve model performance.

I. Introduction

The Problem of Overfitting

Overfitting occurs when a model captures noise and incidental details in the training data, which hampers its ability to perform well on new data. This leads to poor generalization and unreliable predictions.

Regularization techniques mitigate overfitting by introducing constraints or penalties on model parameters. This helps in maintaining a balance between model complexity and generalization, ensuring better performance on unseen data.
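To make the gap between training and test performance concrete, here is a minimal sketch on a made-up toy problem (noisy samples of a sine curve, fitted with NumPy polynomials). The data, the degrees 3 and 9, and the helper names are all assumptions chosen for illustration. On a small training set the higher-degree fit always matches the training points at least as well, and it will typically do worse on fresh samples.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def make_data(n):
    """Noisy samples of a sine curve (a hypothetical toy problem)."""
    x = rng.uniform(-1.0, 1.0, size=n)
    y = np.sin(np.pi * x) + rng.normal(scale=0.3, size=n)
    return x, y

def mse(model, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((model(x) - y) ** 2))

x_small, y_small = make_data(12)     # small training set
x_fresh, y_fresh = make_data(200)    # unseen data from the same process

results = {}
for degree in (3, 9):
    model = Polynomial.fit(x_small, y_small, deg=degree)
    results[degree] = (mse(model, x_small, y_small), mse(model, x_fresh, y_fresh))
    print(f"degree={degree}: train MSE={results[degree][0]:.3f}, "
          f"test MSE={results[degree][1]:.3f}")
```

A large difference between the two numbers for the same model is the usual symptom that regularization is meant to reduce.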

II. Types of Regularization

L1 (Lasso) Regularization

L1 regularization (Lasso) adds a penalty proportional to the absolute values of the model parameters. This encourages sparsity: some feature weights are driven exactly to zero, which effectively performs feature selection.

Real-World Use Case: L1 regularization is valuable in scenarios where feature selection is crucial, such as in high-dimensional datasets.

from sklearn.linear_model import Lasso
from sklearn.datasets import load_diabetes
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error

# Load dataset (load_boston was removed in scikit-learn 1.2, so this
# example uses the bundled diabetes regression dataset instead)
X, y = load_diabetes(return_X_y=True)

# Split into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Apply Lasso Regularization (alpha sets the strength of the L1 penalty)
lasso = Lasso(alpha=0.1)
lasso.fit(X_train, y_train)

# Predict and evaluate
y_pred = lasso.predict(X_test)
mse = mean_squared_error(y_test, y_pred)
print(f'Mean Squared Error with Lasso: {mse}')
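The sparsity effect described above can be checked directly by counting zero coefficients. The sketch below is only an illustration: it uses a synthetic dataset from make_regression in which 5 of 20 features are informative, and the names X_demo, y_demo, plain_model, and sparse_model as well as alpha=5.0 are assumptions, not part of the example above.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, LinearRegression

# Synthetic data: 20 features, only 5 of which actually influence the target
X_demo, y_demo = make_regression(
    n_samples=200, n_features=20, n_informative=5, noise=10.0, random_state=0
)

plain_model = LinearRegression().fit(X_demo, y_demo)   # no penalty
sparse_model = Lasso(alpha=5.0).fit(X_demo, y_demo)    # L1 penalty

# Count coefficients that are exactly zero in each model
n_zero_plain = int(np.sum(plain_model.coef_ == 0))
n_zero_sparse = int(np.sum(sparse_model.coef_ == 0))

print(f"Zero coefficients without penalty: {n_zero_plain}")
print(f"Zero coefficients with Lasso:      {n_zero_sparse}")
```

The features whose coefficients remain non-zero are the ones the Lasso model has effectively selected.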

L2 (Ridge) Regularization

L2 regularization (Ridge) adds a penalty proportional to the square of the model parameters. This prevents overfitting by shrinking coefficients, thus reducing model complexity without eliminating any features completely.

Real-World Use Case: L2 regularization is often used in regression problems where multicollinearity is a concern.

from sklearn.linear_model import Ridge

# Apply Ridge Regularization (reuses the train/test split from the Lasso example)
ridge = Ridge(alpha=0.1)
ridge.fit(X_train, y_train)

# Predict and evaluate
y_pred = ridge.predict(X_test)
mse = mean_squared_error(y_test, y_pred)
print(f'Mean Squared Error with Ridge: {mse}')
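To see why Ridge helps when features are strongly correlated, the following sketch fits two nearly identical features with and without an L2 penalty, using the closed-form ridge solution in NumPy. The data and the helper ridge_fit are hypothetical, and the intercept is left out to keep the formula short.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100

# Two almost identical features (strong multicollinearity)
x1 = rng.normal(size=n)
x2 = x1 + 0.01 * rng.normal(size=n)
X_collinear = np.column_stack([x1, x2])
y_collinear = 3.0 * x1 + rng.normal(scale=0.5, size=n)

def ridge_fit(X, y, alpha):
    """Closed-form ridge weights without intercept: (X^T X + alpha*I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

w_unpenalized = ridge_fit(X_collinear, y_collinear, alpha=0.0)  # ordinary least squares
w_ridge = ridge_fit(X_collinear, y_collinear, alpha=1.0)

print("Unpenalized weights:", w_unpenalized)
print("Ridge weights:      ", w_ridge)
```

Without the penalty, the two weights can take large offsetting values because the data barely distinguishes the features. The penalty shrinks the weight vector and tends to spread the effect across both features.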

Dropout Regularization

Dropout randomly sets a fraction of a layer's input units to zero during each training step. This prevents neurons from co-adapting too much, reducing overfitting and making the network more robust.

Real-World Use Case: Dropout is widely used in deep learning models for tasks like image classification and speech recognition to prevent over-reliance on specific neurons.

import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout
from tensorflow.keras.datasets import mnist
from tensorflow.keras.utils import to_categorical

# Load dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Flatten each 28x28 image into a 784-dimensional vector and scale to [0, 1]
x_train = x_train.reshape(-1, 784) / 255.0
x_test = x_test.reshape(-1, 784) / 255.0

# One-hot encode the labels
y_train, y_test = to_categorical(y_train), to_categorical(y_test)

# Define model: Dropout(0.5) zeroes half of the hidden activations during training
model = Sequential([
    Dense(512, activation='relu', input_shape=(784,)),
    Dropout(0.5),
    Dense(10, activation='softmax')
])

# Compile model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Train model
model.fit(x_train, y_train, epochs=10, batch_size=128, validation_split=0.2)
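What a dropout layer does to its inputs can be sketched in a few lines of NumPy. This is a simplified illustration of "inverted" dropout, where the surviving activations are scaled by 1 / (1 - rate) so their expected sum is unchanged. The function name inverted_dropout and its arguments are assumptions, not the Keras implementation.

```python
import numpy as np

def inverted_dropout(activations, rate, training, rng):
    """Zero a random fraction `rate` of activations and rescale the rest."""
    if not training or rate == 0.0:
        # At inference time the layer passes its input through unchanged
        return activations
    keep_mask = rng.random(activations.shape) >= rate
    return activations * keep_mask / (1.0 - rate)

rng = np.random.default_rng(0)
acts = np.ones((4, 1000))

dropped = inverted_dropout(acts, rate=0.5, training=True, rng=rng)
unchanged = inverted_dropout(acts, rate=0.5, training=False, rng=rng)

print("Fraction zeroed during training:", float(np.mean(dropped == 0)))
print("Mean activation during training:", float(dropped.mean()))
```

Because a different mask is drawn at every step, no single unit can be relied on, which is the co-adaptation argument made above.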

Early Stopping Regularization

Early stopping monitors the model's performance on a validation set and halts training when that performance stops improving or starts to deteriorate. This helps in finding the point where the model is trained enough to capture patterns without overfitting.

Real-World Use Case: Early stopping is useful in training neural networks and other iterative algorithms where overfitting can happen quickly.

from tensorflow.keras.callbacks import EarlyStopping

# Define early stopping callback: stop once val_loss has not improved for 3 epochs
early_stopping = EarlyStopping(monitor='val_loss', patience=3)

# Train model with early stopping
model.fit(x_train, y_train, epochs=50, batch_size=128, validation_split=0.2, callbacks=[early_stopping])
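The patience logic behind such a callback can be sketched in plain Python. The function early_stopping_epoch and the list of validation losses below are hypothetical. The sketch only mirrors the basic bookkeeping (track the best loss, count epochs without improvement) and is not the Keras source.

```python
def early_stopping_epoch(val_losses, patience):
    """Return (epoch at which training stops, epoch with the best loss)."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best validation loss: remember it and reset the counter
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience was never exhausted, so training ran to the last epoch
    return len(val_losses) - 1, best_epoch

# Made-up validation losses: improvement stalls after epoch 3
history = [0.90, 0.70, 0.60, 0.58, 0.59, 0.61, 0.60, 0.65, 0.70]
stopped_at, best_at = early_stopping_epoch(history, patience=3)
print(f"Stopped at epoch {stopped_at}, best epoch was {best_at}")
# prints: Stopped at epoch 6, best epoch was 3
```

Note that the model at the stopping epoch is not the best one seen. Keras exposes a restore_best_weights option on EarlyStopping for rolling back to the best epoch.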

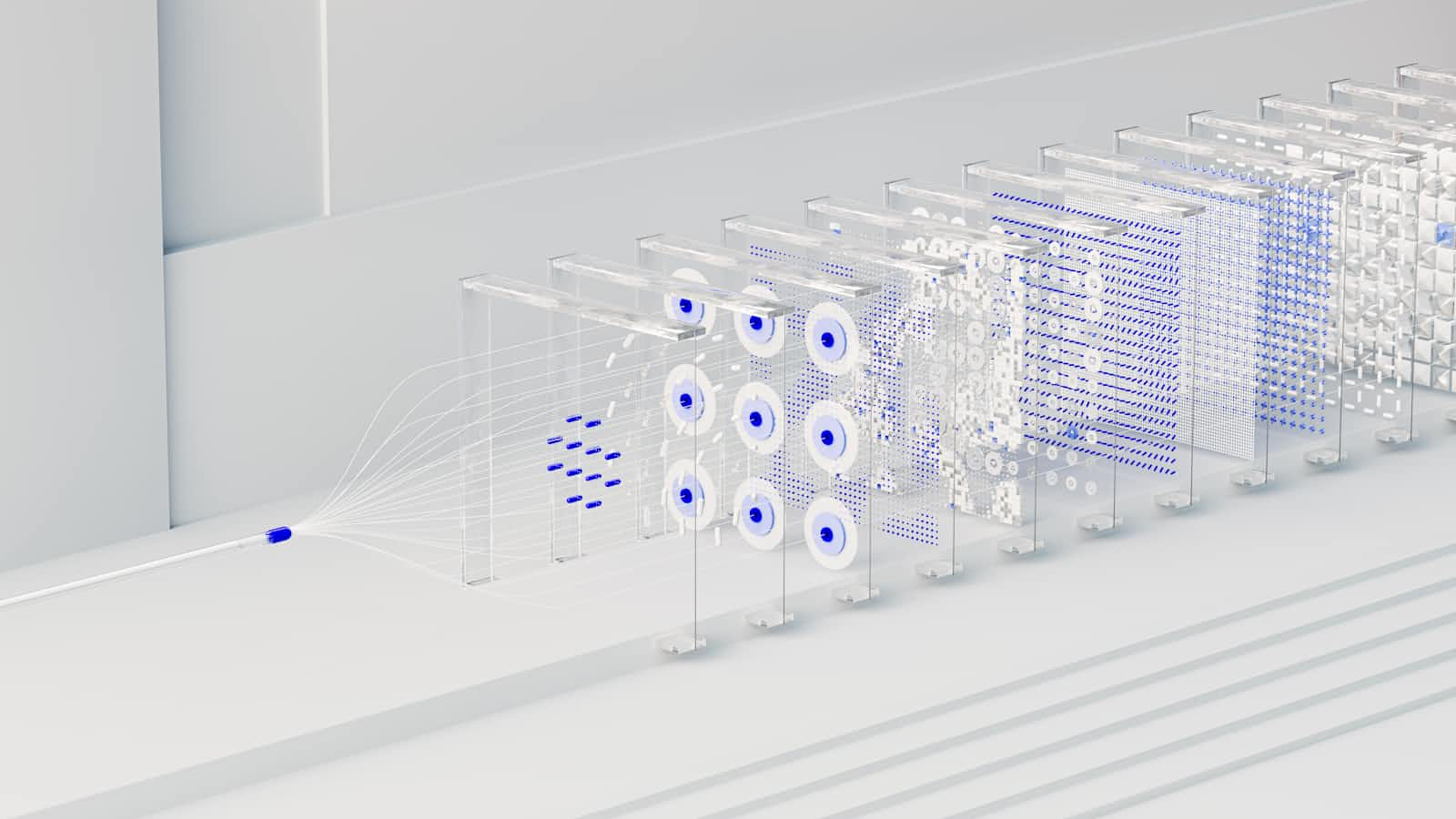

III. How Regularization Works

Adding Penalty Terms to the Loss Function

Regularization methods work by augmenting the loss function with additional terms that penalize large weights. This discourages complex models and promotes simplicity.

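L1 Regularization: Adds $\lambda \sum_i |w_i|$ to the loss function.

L2 Regularization: Adds $\lambda \sum_i w_i^2$ to the loss function.

Here $w_i$ are the model weights and $\lambda$ is a hyperparameter that sets the strength of the penalty. It plays the role of alpha in the scikit-learn examples above.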
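As a small worked example of these penalty terms, the sketch below adds each penalty to a made-up data loss. The weight vector, the data loss of 1.2, the strength lam, and the function penalized_loss are all illustrative assumptions.

```python
import numpy as np

def penalized_loss(data_loss, weights, lam, kind):
    """Add an L1 or L2 penalty, scaled by lam, to an existing data loss."""
    if kind == "l1":
        penalty = lam * np.sum(np.abs(weights))   # sum of absolute values
    elif kind == "l2":
        penalty = lam * np.sum(weights ** 2)      # sum of squares
    else:
        raise ValueError(f"unknown penalty kind: {kind}")
    return float(data_loss + penalty)

w = np.array([0.5, -2.0, 0.0, 1.5])
data_loss = 1.2   # e.g. the mean squared error on the training set
lam = 0.1

loss_l1 = penalized_loss(data_loss, w, lam, "l1")   # 1.2 + 0.1 * 4.0
loss_l2 = penalized_loss(data_loss, w, lam, "l2")   # 1.2 + 0.1 * 6.5
print(f"L1-penalized loss: {loss_l1:.2f}")   # prints 1.60
print(f"L2-penalized loss: {loss_l2:.2f}")   # prints 1.85
```

The squared penalty grows faster for large weights (the weight -2.0 contributes 4.0 of the 6.5), while the absolute-value penalty applies the same pressure to every non-zero weight, which is why L1 pushes small weights all the way to zero.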

Reducing Model Complexity

Regularization reduces the effective complexity of the model by constraining the weights. This leads to simpler, more generalizable models that avoid overfitting.

IV. Benefits of Regularization

Improved Generalization

Regularization enhances the model's ability to perform well on unseen data by preventing it from fitting noise in the training data.

Reduced Overfitting

By penalizing overly complex models, regularization ensures that the model captures only the essential patterns, thus reducing the risk of overfitting.

Enhanced Model Interpretability

Techniques like L1 regularization simplify models by setting some coefficients to zero, making it easier to interpret the contributions of different features.

V. Real-World Applications

Image Classification

In tasks like image classification, regularization techniques such as dropout prevent deep neural networks from memorizing the training images, thus improving their ability to generalize.

# Define a simple CNN model with dropout
model = Sequential([
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    tf.keras.layers.MaxPooling2D((2, 2)),
    Dropout(0.25),
    tf.keras.layers.Flatten(),
    Dense(128, activation='relu'),
    Dropout(0.5),
    Dense(10, activation='softmax')
])

# Conv2D expects a channel axis, so reshape the MNIST images to (28, 28, 1)
x_train_cnn = x_train.reshape(-1, 28, 28, 1)

# Compile and train model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.fit(x_train_cnn, y_train, epochs=10, batch_size=128, validation_split=0.2)

Natural Language Processing

In NLP, regularization helps in preventing models from overfitting to specific patterns in the training text, making them more robust and adaptable to new text data.

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression

# Example text data
texts = ["I love machine learning", "Machine learning is amazing", "I dislike boring lectures"]
labels = [1, 1, 0]

# Convert text to TF-IDF features
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(texts)

# Apply L2 Regularization using Logistic Regression
# (C is the inverse of the regularization strength: smaller C means a stronger penalty)
log_reg = LogisticRegression(penalty='l2', C=1.0)
log_reg.fit(X, labels)
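In scikit-learn's LogisticRegression, C is the inverse of the regularization strength, so smaller values penalize the weights more heavily. The sketch below illustrates this on a synthetic classification dataset. The data from make_classification and the names X_clf, y_clf, and coef_norms are assumptions used only for the demonstration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic binary classification data (stands in for TF-IDF features)
X_clf, y_clf = make_classification(n_samples=200, n_features=10, random_state=0)

coef_norms = {}
for C in (0.01, 1.0, 100.0):
    # The default penalty is L2; only its strength changes between runs
    clf = LogisticRegression(C=C, max_iter=1000).fit(X_clf, y_clf)
    coef_norms[C] = float(np.linalg.norm(clf.coef_))
    print(f"C={C:>6}: L2 norm of coefficients = {coef_norms[C]:.3f}")
```

The coefficient norm shrinks as C decreases. In practice C is usually chosen by cross-validation rather than fixed by hand.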

Recommender Systems

Regularization in recommender systems helps to generalize recommendations by preventing overfitting on user-item interaction data, leading to better recommendations.

from sklearn.decomposition import NMF
from sklearn.preprocessing import normalize
import numpy as np
import pandas as pd

# Example user-item interaction matrix
# Rows: Users, Columns: Items, Values: Ratings (0 = no rating)
R = np.array([
    [5, 3, 0, 1],
    [4, 0, 0, 1],
    [1, 1, 0, 5],
    [1, 0, 0, 4],
    [0, 1, 5, 4],
])

# Define the number of latent features
n_components = 2

# Apply Non-negative Matrix Factorization with regularization.
# The single alpha argument was removed in scikit-learn 1.2; alpha_W regularizes W,
# and by default the same value is applied to H. l1_ratio mixes L1 and L2 penalties.
nmf_model = NMF(n_components=n_components, alpha_W=0.1, l1_ratio=0.5, random_state=42)
W = nmf_model.fit_transform(R)   # user factors
H = nmf_model.components_        # item factors

# Reconstruct the user-item matrix
R_hat = np.dot(W, H)

# Normalize the reconstructed matrix for recommendations (each row sums to 1)
R_hat_normalized = normalize(R_hat, axis=1, norm='l1')

# Convert the matrices to DataFrames for better readability
users = ['User1', 'User2', 'User3', 'User4', 'User5']
items = ['Item1', 'Item2', 'Item3', 'Item4']
R_df = pd.DataFrame(R, index=users, columns=items)
R_hat_df = pd.DataFrame(R_hat, index=users, columns=items)
R_hat_norm_df = pd.DataFrame(R_hat_normalized, index=users, columns=items)

print("Original User-Item Interaction Matrix:")
print(R_df)
print("\nReconstructed User-Item Matrix:")
print(R_hat_df)
print("\nNormalized Reconstructed User-Item Matrix (for recommendations):")
print(R_hat_norm_df)

VI. Conclusion

Regularization techniques are indispensable tools for preventing overfitting in machine learning models. By adding constraints to the loss function and reducing model complexity, regularization improves the generalization ability of models, making them more robust and reliable.
