Understanding Docker

Haaris Sayyed

Introduction

Docker has emerged as a pivotal tool in modern software development, addressing the growing need for consistent and efficient application deployment across diverse environments. As applications have become more complex and reliant on various dependencies, traditional methods of deployment often led to "it works on my machine" issues, where software would function differently in development and production environments. Docker revolutionizes this by encapsulating applications and their dependencies into lightweight, portable containers that run uniformly regardless of the underlying infrastructure. This containerization ensures reliability, scalability, and speed, making it indispensable for developers and organizations aiming to streamline their workflows and enhance their DevOps practices.

What is Docker?

Docker is a software platform that allows you to build, test, and deploy applications quickly. Docker packages software into standardized units called containers that have everything the software needs to run, including libraries, system tools, code, and runtime. Using Docker, you can quickly deploy and scale applications into any environment that runs a compatible Docker Engine and know your code will run.

Why use Docker?

Using Docker lets you ship code faster, standardize application operations, seamlessly move code, and save money by improving resource utilization. With Docker, you get a single object that can reliably run anywhere. Docker's simple and straightforward syntax gives you full control. Wide adoption means there's a robust ecosystem of tools and off-the-shelf applications that are ready to use with Docker.

1. SHIP MORE SOFTWARE FASTER

According to a widely cited figure, Docker users on average ship software 7x more frequently than non-Docker users. Docker enables you to ship isolated services as often as needed.

2. STANDARDIZE OPERATIONS

Small containerized applications make it easy to deploy, identify issues, and roll back for remediation.

3. SEAMLESSLY MOVE

Docker-based applications can be seamlessly moved from local development machines to production deployments, for example on AWS.

4. SAVE MONEY

Docker containers make it easier to run more code on each server, improving your utilization and saving you money.

When to use Docker?

You can use Docker containers as a core building block for creating modern applications and platforms. Docker makes it easy to build and run distributed microservices architectures, deploy your code with standardized continuous integration and delivery pipelines, build highly scalable data processing systems, and create fully managed platforms for your developers.

MICROSERVICES

Build and scale distributed application architectures by taking advantage of standardized code deployments using Docker containers.

CONTINUOUS INTEGRATION & DELIVERY

Accelerate application delivery by standardizing environments and removing conflicts between language stacks and versions.

CONTAINERS AS A SERVICE

Build and ship distributed applications with content and infrastructure that is IT-managed and secured.

How does Docker work?

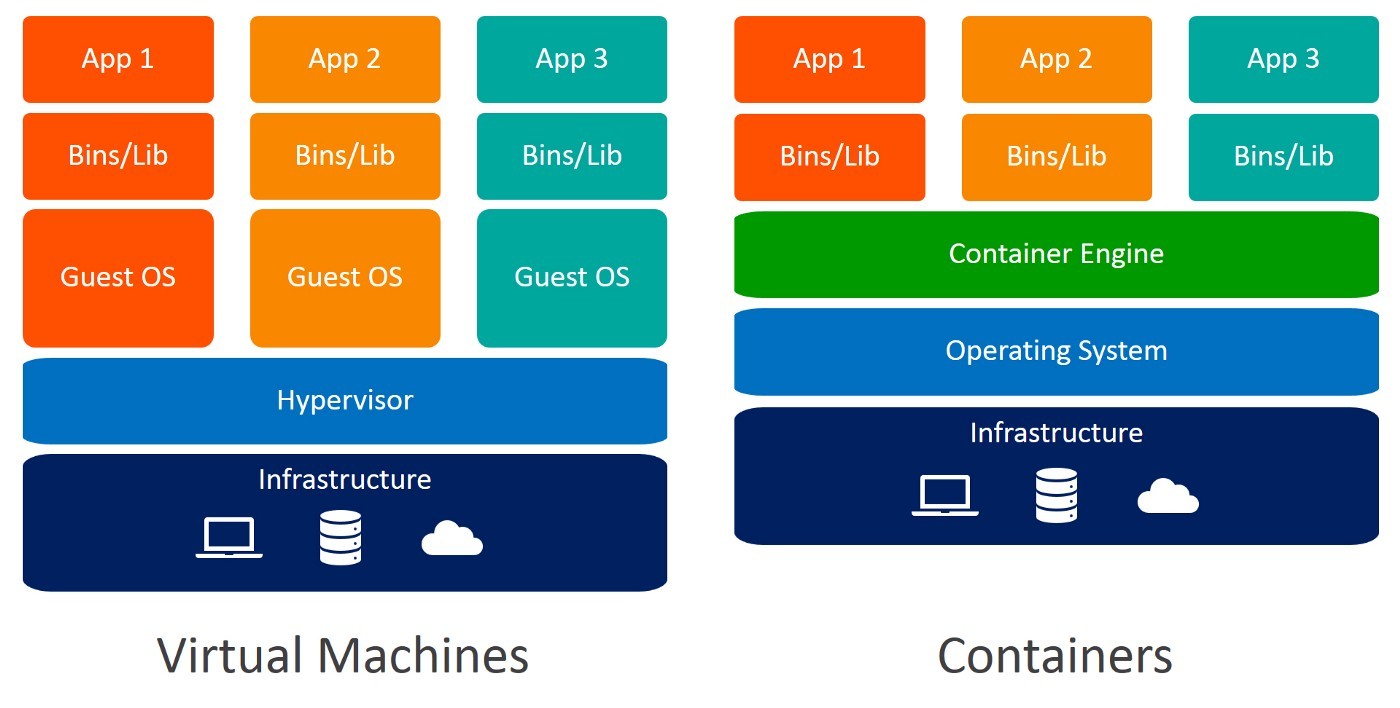

Docker works by providing a standard way to run your code. Similar to how a virtual machine virtualizes (removes the need to directly manage) server hardware, containers virtualize the operating system of a server: every container on a host shares that host's kernel but gets its own isolated view of the filesystem, processes, and network. Docker is installed on each server and provides simple commands you can use to build, start, or stop containers.

Docker Architecture

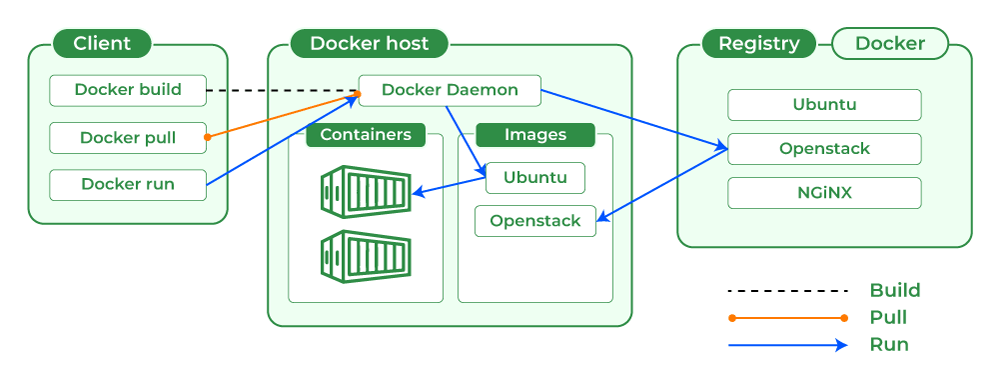

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers.

For download and installation instructions, refer to https://docs.docker.com.

Docker Client

The Docker client (docker) is the primary way that many Docker users interact with Docker. When you use commands such as docker run, the client sends these commands to dockerd, which carries them out. The docker command uses the Docker API. The Docker client can communicate with more than one daemon.

Docker Daemon

The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

Docker Desktop

Docker Desktop is an easy-to-install application for your Mac, Windows or Linux environment that enables you to build and share containerized applications and microservices. Docker Desktop includes the Docker daemon (dockerd), the Docker client (docker), Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper.

Docker Registries

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and Docker looks for images on Docker Hub by default. You can even run your own private registry.

When you use the docker pull or docker run commands, Docker pulls the required images from your configured registry. When you use the docker push command, Docker pushes your image to your configured registry.

Docker objects

When you use Docker, you are creating and using images, containers, networks, volumes, plugins, and other objects. This section is a brief overview of some of those objects.

Docker Images

Docker images are lightweight, stand-alone, executable software packages that include everything needed to run a piece of software: the code, runtime, libraries, and system tools. Internally, a Docker image is built in layers, each layer representing a change or addition to the image, such as a new file or an updated configuration. These layers are stacked to form a single unified filesystem. Because common layers can be shared across multiple images, this layered approach saves disk space and download time, which in turn enables rapid deployment and scaling. Docker uses a union file system to present the stacked, read-only layers as one filesystem; when a container starts, Docker adds a thin writable layer on top, so the container can modify files without altering the image.

$ docker pull hello-world

Using default tag: latest

latest: Pulling from library/hello-world

c1ec31eb5944: Pull complete

Digest: sha256:266b191e926f65542fa8daaec01a192c4d292bff79426f47300a046e1bc576fd

Status: Downloaded newer image for hello-world:latest

docker.io/library/hello-world:latest

Docker images are composed of multiple layers, each identified by the SHA-256 hash of its content (often simply called its "SHA"). This hash acts as a digital fingerprint for the content of each layer. Understanding these SHA layers explains how Docker images are constructed, distributed, and managed efficiently.

How SHA Layers Work in Docker Images

Layer Creation:

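Each Dockerfile instruction that changes the filesystem (RUN, COPY, and ADD) creates a new layer on top of the layers inherited from the base image named in FROM; instructions such as ENV, CMD, or LABEL only change the image's metadata. After executing an instruction, Docker calculates a SHA-256 hash of the resulting layer, that is, of the archive of filesystem changes it introduced. This hash is a unique identifier based on the content of that layer.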

Immutable Layers:

Layers are immutable, meaning once a layer is created and assigned a SHA, it cannot be altered. If the content changes, a new layer with a new SHA is created.

This immutability ensures consistency and reliability across different environments.
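The content addressing behind this can be reproduced with ordinary tools. A layer is stored as a tar archive of filesystem changes, and the layer digests reported by `docker inspect` are SHA-256 hashes of the uncompressed archive. The sketch below does not reproduce Docker's exact archives; it only shows the principle, assuming a Linux machine with GNU tar (1.28 or newer, for `--sort`) and coreutils `sha256sum`:

```shell
# Treat three small directories as "layers", archive each one as a tar file,
# and address each archive by its SHA-256 digest.
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
cd "$work"

# Reproducible archive: fixed format, file order, timestamps and ownership,
# so the digest depends only on file names, modes and contents.
make_layer() {
  tar --format=ustar --sort=name --mtime='@0' \
      --owner=0 --group=0 --numeric-owner \
      -cf "$2" -C "$1" .
}
digest() {
  sha256sum "$1" | cut -d' ' -f1
}

mkdir a b c
printf 'version=1\n' > a/app.conf   # a and b hold identical content
printf 'version=1\n' > b/app.conf
printf 'version=2\n' > c/app.conf   # c differs by a single byte

for d in a b c; do make_layer "$d" "$d.tar"; done

digest_a=$(digest a.tar)
digest_b=$(digest b.tar)
digest_c=$(digest c.tar)
echo "a: sha256:$digest_a"
echo "b: sha256:$digest_b"   # same as a: same content, same identifier
echo "c: sha256:$digest_c"   # different: changed content, new identifier
```

Because the identifier is derived from the bytes, nobody has to assign it, and any change to the content necessarily produces a new identifier.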

Layer Caching:

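Docker's build cache reuses layers from earlier builds. For each instruction, Docker checks whether it has already built a layer from the same instruction on top of the same parent layer; for COPY and ADD, it also compares checksums of the copied files. If it finds a match, it reuses that layer instead of creating it anew. Once one instruction misses the cache, every instruction after it is rebuilt.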

This significantly speeds up the build process, as unchanged layers don't need to be rebuilt.
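Because a cache miss forces every later step to be rebuilt, instruction order matters. A common pattern is to copy rarely changing files, such as a dependency list, before frequently changing source code. The sketch below uses a hypothetical Python app (`requirements.txt`, `app.py` and the tag `cache-demo` are made up for illustration):

```shell
# Work in a fresh temporary directory (the build context).
cd "$(mktemp -d)"
printf 'requests==2.32.3\n' > requirements.txt
printf 'print("hello")\n' > app.py

cat > Dockerfile <<'EOF'
FROM python:3.12-slim
WORKDIR /app
# Copy only the dependency list first: this layer and the install below
# stay cached until requirements.txt itself changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Source code changes often, so copy it last.
COPY app.py .
CMD ["python", "app.py"]
EOF

docker build -t cache-demo .

# Edit only the source file and rebuild: the COPY requirements.txt and
# RUN pip install steps should now be reported as cached.
printf 'print("hello again")\n' > app.py
docker build -t cache-demo .
```

Had `COPY . .` come before `RUN pip install`, every source edit would invalidate the install step and reinstall all dependencies.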

Layer Storage and Distribution:

Layers are stored in the Docker registry and on the local filesystem using their SHA as the identifier.

When pulling an image, Docker checks which layers (by SHA) are already present locally and only downloads the missing ones, optimizing network usage and storage.

Viewing SHAs

You can view the SHAs of the layers in a Docker image using the docker inspect command:

$ docker inspect --format='{{.RootFS.Layers}}' <image_name>

This command will list the SHA-256 hashes of all the layers in the specified image.

$ docker inspect --format='{{.RootFS.Layers}}' hello-world:latest

'[sha256:ac28800ec8bb38d5c35b49d45a6ac4777544941199075dff8c4eb63e093aa81e]'

Containers

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.

By default, a container is relatively well isolated from other containers and its host machine. You can control how isolated a container's network, storage, or other underlying subsystems are from other containers or from the host machine.

A container is defined by its image as well as any configuration options you provide to it when you create or start it. When a container is removed, any changes to its state that aren't stored in persistent storage disappear.

The following command runs an ubuntu container, attaches interactively to your local command-line session, and runs /bin/bash.

$ docker run -it ubuntu /bin/bash

Unable to find image 'ubuntu:latest' locally

latest: Pulling from library/ubuntu

00d679a470c4: Pull complete

Digest: sha256:e3f92abc0967a6c19d0dfa2d55838833e947b9d74edbcb0113e48535ad4be12a

Status: Downloaded newer image for ubuntu:latest

root@ab895cb05f1d:/#

Conclusion

Docker revolutionizes the way we develop, deploy, and manage applications by encapsulating them in containers, ensuring consistency across various environments. At the core of Docker's efficiency and reliability are its image layers, uniquely identified by SHA-256 hashes. These layers allow for incremental builds, caching, and reuse, significantly speeding up the development process and optimizing resource usage.

Understanding the structure and internals of Docker images, especially the role of SHA layers, empowers developers to create more efficient, scalable, and secure applications. By leveraging Docker's layered approach, teams can ensure consistent environments from development to production, streamline CI/CD pipelines, and ultimately, deliver high-quality software faster.

Docker not only simplifies the complexity of application deployment but also enhances collaboration and agility within development teams, making it an indispensable tool in modern DevOps practices. As you delve deeper into Docker, harness the power of its image layers to maximize efficiency and reliability in your software projects.
