Guide to LangSmith

Venkata Vinay Vijjapu


Introduction

Large language models (LLMs) are the talk of the town, with their potential applications seemingly limitless. But for developers, translating LLM potential into real-world applications can be a bumpy ride. Debugging complex workflows, ensuring reliability, and maintaining smooth operation are just a few hurdles on the path to production.

Introducing LangSmith, your one-stop shop for building and deploying robust LLM applications. LangSmith is a comprehensive DevOps platform designed to streamline the entire LLM development process, from initial concept to real-world impact.

With LangSmith, you can:

Build LLM Applications with Confidence: Develop applications through a user-friendly interface that makes even intricate workflows easier to understand.

Test Like a Pro: Catch and fix errors and regressions before deployment with LangSmith's testing tools.

Gain Deep Insights: Evaluate your application's performance with LangSmith's in-depth tools, ensuring optimal functionality.

Monitor with Peace of Mind: Maintain application stability with LangSmith's real-time monitoring features.

But LangSmith goes beyond just development. It equips you with the power to delve into your LLM application's inner workings. This allows you to:

Debug with Precision: Troubleshoot complex issues efficiently with LangSmith's debugging tools.

Fine-tune Performance: Use the latency and token usage recorded for each step to find and optimize slow or costly parts of your application.

Whether you're a seasoned developer pushing the boundaries of LLMs or just starting your journey, LangSmith empowers you to unlock the full potential of these powerful tools.

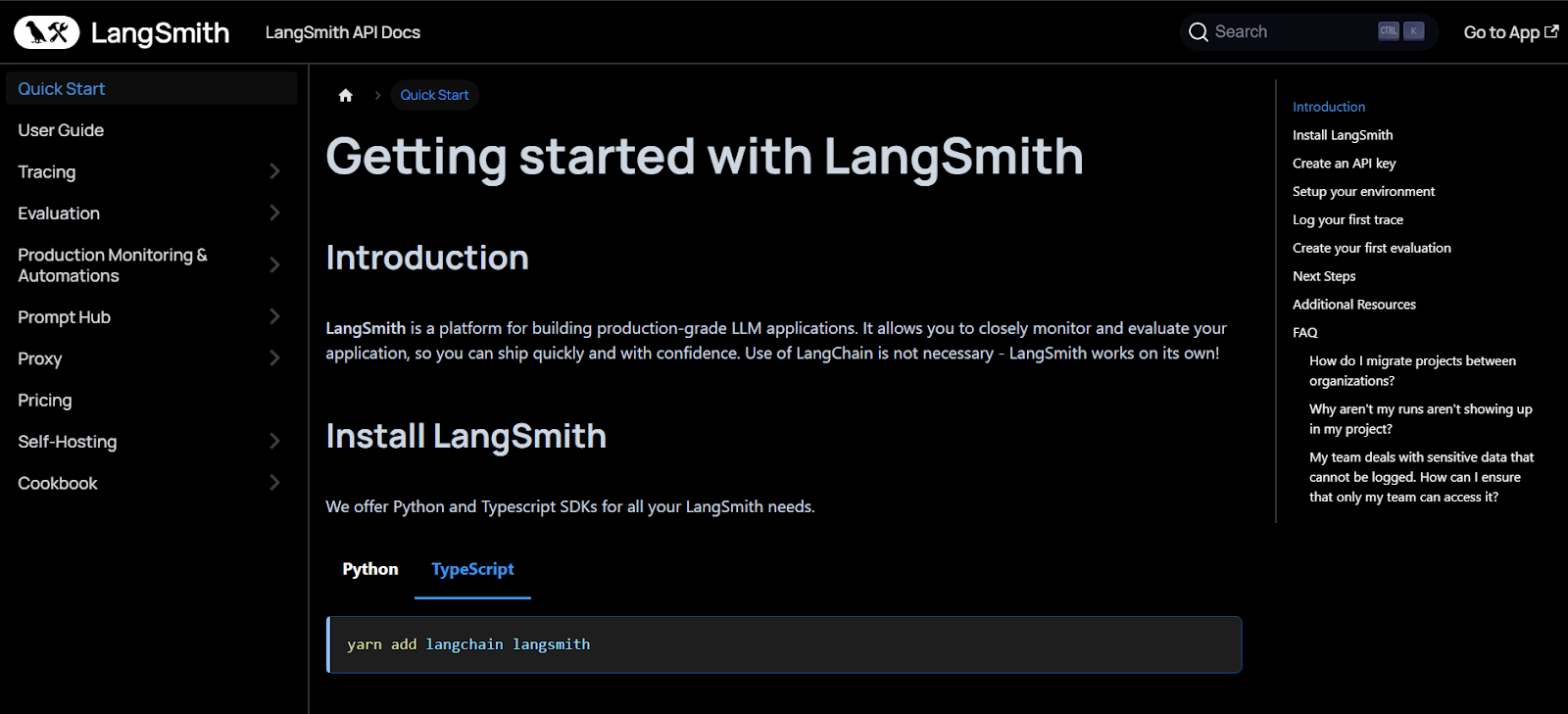

Installation

Python:

Open a terminal or command prompt.

Type the following command and press Enter:

```bash
pip install -U langsmith
```

This downloads and installs the LangSmith SDK for Python. If the SDK is already installed, the -U flag upgrades it.

TypeScript:

Open a terminal or command prompt and navigate to your project directory.

Type the following command and press Enter:

```bash
yarn add langchain langsmith
```

This installs the necessary packages using yarn. If you prefer npm, use npm install langchain langsmith instead.

Setting Up Your Environment

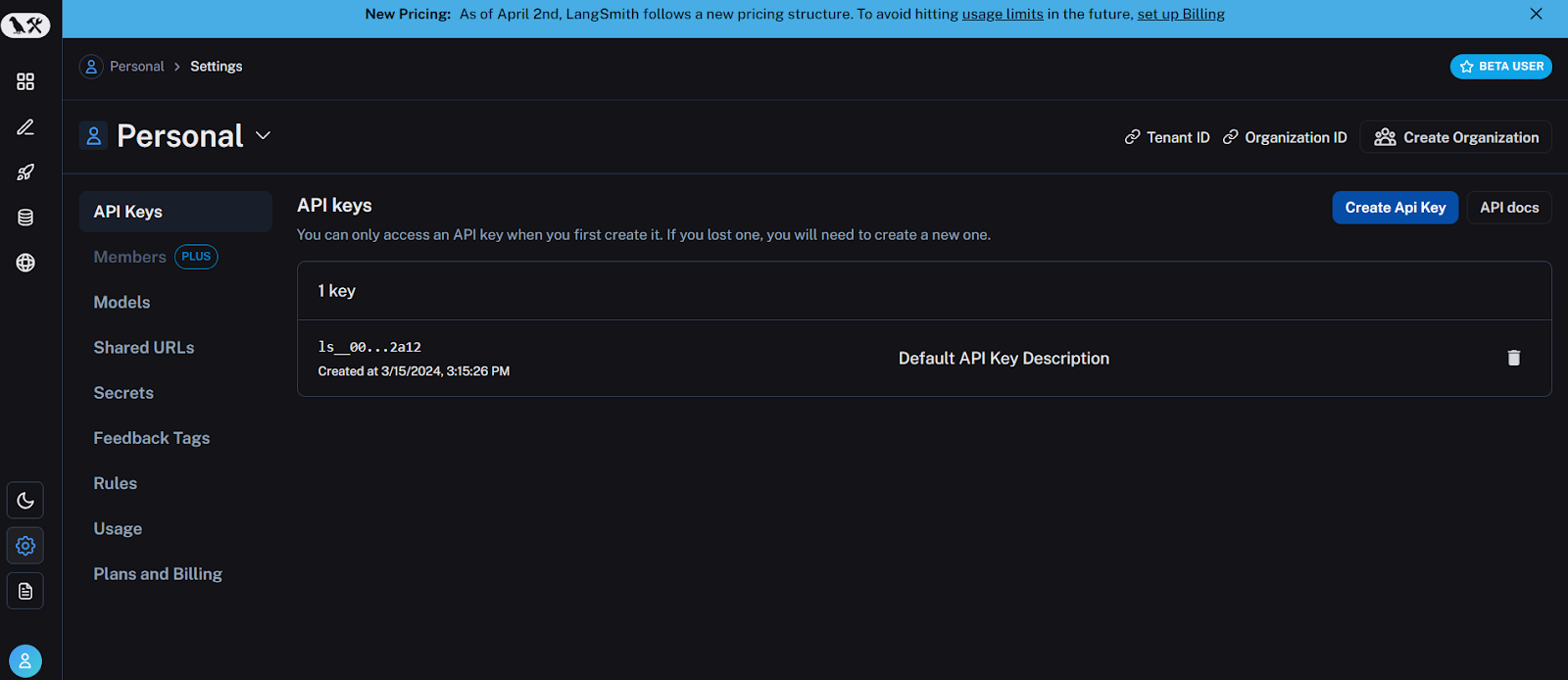

Create an API Key:

Head to the LangSmith website (Link).

Register if you're new, or sign in if you already have an account.

Go to the Settings page.

Click Create API Key and copy the generated key.

Set Environment Variables:

Open your terminal or command prompt.

For both Python and TypeScript, set LANGCHAIN_TRACING_V2 to true and LANGCHAIN_API_KEY to the key you just copied:

```bash
export LANGCHAIN_TRACING_V2=true
export LANGCHAIN_API_KEY=<your_api_key>
```

On the Windows Command Prompt, use set instead of export (for example, set LANGCHAIN_TRACING_V2=true).
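Before you start an app, you can confirm that Python actually sees both variables. A common mistake is setting them in one terminal and running the app from another. The helper below is a minimal, hypothetical sketch and is not part of the LangSmith SDK. It checks for the exact values used in this guide.

```python
import os
from typing import List, Mapping


# Hypothetical helper (not part of the LangSmith SDK): report missing tracing settings
def missing_langsmith_settings(env: Mapping[str, str] = os.environ) -> List[str]:
    problems = []
    # This guide sets tracing with the literal value "true"
    if env.get("LANGCHAIN_TRACING_V2") != "true":
        problems.append("LANGCHAIN_TRACING_V2 is not set to 'true'")
    # An empty or missing key means traces cannot be sent to LangSmith
    if not env.get("LANGCHAIN_API_KEY"):
        problems.append("LANGCHAIN_API_KEY is empty or missing")
    return problems


if __name__ == "__main__":
    problems = missing_langsmith_settings()
    print("\n".join(problems) if problems else "LangSmith tracing variables look set.")
```

Run it from the same terminal session you will use to launch the app. An empty report means the SDK can read both settings.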

Sample Project

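The sample app below is a small Streamlit blog generator. Besides langsmith, it needs Streamlit, LangChain's OpenAI integration, and python-dotenv (pip install streamlit langchain-openai python-dotenv).

```python
# Importing necessary modules
import streamlit as st
from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser
import os
from dotenv import load_dotenv
```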

streamlit: Builds the web interface where users type a topic and read the generated blog post.

langchain_openai: Provides ChatOpenAI, LangChain's wrapper around OpenAI's chat models (such as GPT-3.5 Turbo).

ChatPromptTemplate & StrOutputParser: Standard LangChain components from langchain_core, not custom code. ChatPromptTemplate builds the messages sent to the model, and StrOutputParser turns the model's reply into a plain string.

os: Used here to read and set environment variables.

dotenv: Loads variables from a .env file into the environment, so secrets such as API keys stay out of your source code.

```python
# Load OPENAI_API_KEY and LANGCHAIN_API_KEY from a .env file into os.environ.
# load_dotenv() already sets them, so they don't need to be re-assigned.
load_dotenv()

# Enable LangSmith tracing for every LangChain call in this script
os.environ["LANGCHAIN_TRACING_V2"] = "true"
```

OPENAI_API_KEY: Authenticates your requests to OpenAI's GPT models.

LANGCHAIN_TRACING_V2: Setting this to "true" enables tracing. Every run of the chain is then logged to your LangSmith account, where you can visualize the execution flow and identify potential issues.

LANGCHAIN_API_KEY: Your LangSmith API key. It authenticates the app when traces are sent to LangSmith.

```python
# Define the prompt template
prompt = ChatPromptTemplate.from_messages(
    [
        ("system", "You are my blog generator. You are required to create a blog on the topic {question}."),
        ("user", "Question: {question}"),
    ]
)
```

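A prompt, in the context of interacting with large language models like GPT, is a piece of text that instructs the model on what kind of response to generate. It sets the stage for the conversation and provides context for the user's input. Here, from_messages takes a list of (role, template) pairs. The system message sets the model's role, the user message carries the topic, and {question} is a placeholder that is filled in when the chain is invoked.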

```python
# Initialize the model and output parser
model = ChatOpenAI(model="gpt-3.5-turbo")
output_parser = StrOutputParser()

# Chain the prompt, model, and output parser
chain = prompt | model | output_parser
```

Setting Up the Chatbot Engine (model): We create a connection to OpenAI's GPT-3.5 Turbo model. Think of it as the brain of the chatbot, responsible for generating responses.

Cleaning Up the Response (output_parser): The model returns a chat message object, not a plain string. StrOutputParser extracts the message's text so it can be displayed directly.

Connecting the Pieces (chain): The | operator joins the prompt (what you tell the chatbot), the model (the GPT-3.5 brain), and the output parser into one pipeline. Each step receives the previous step's output.

```python
# Streamlit interface
st.title("LangChain OpenAI Streamlit App")
question = st.text_input("Enter your topic:")

if st.button("Generate blog..."):
    if not question:
        st.write("Please provide your topic.")
    else:
        result = chain.invoke({"question": question})
        st.write("Blog:")
        st.write(result)
```

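This is the interface code. It shows a text box and a button. When a topic is entered and the button is clicked, the app passes the topic to the chain and displays the blog post that GPT generates. If the box is empty, it asks for a topic instead. Save the script (for example, as app.py) and start it with streamlit run app.py.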

To see the full code, follow the link below.

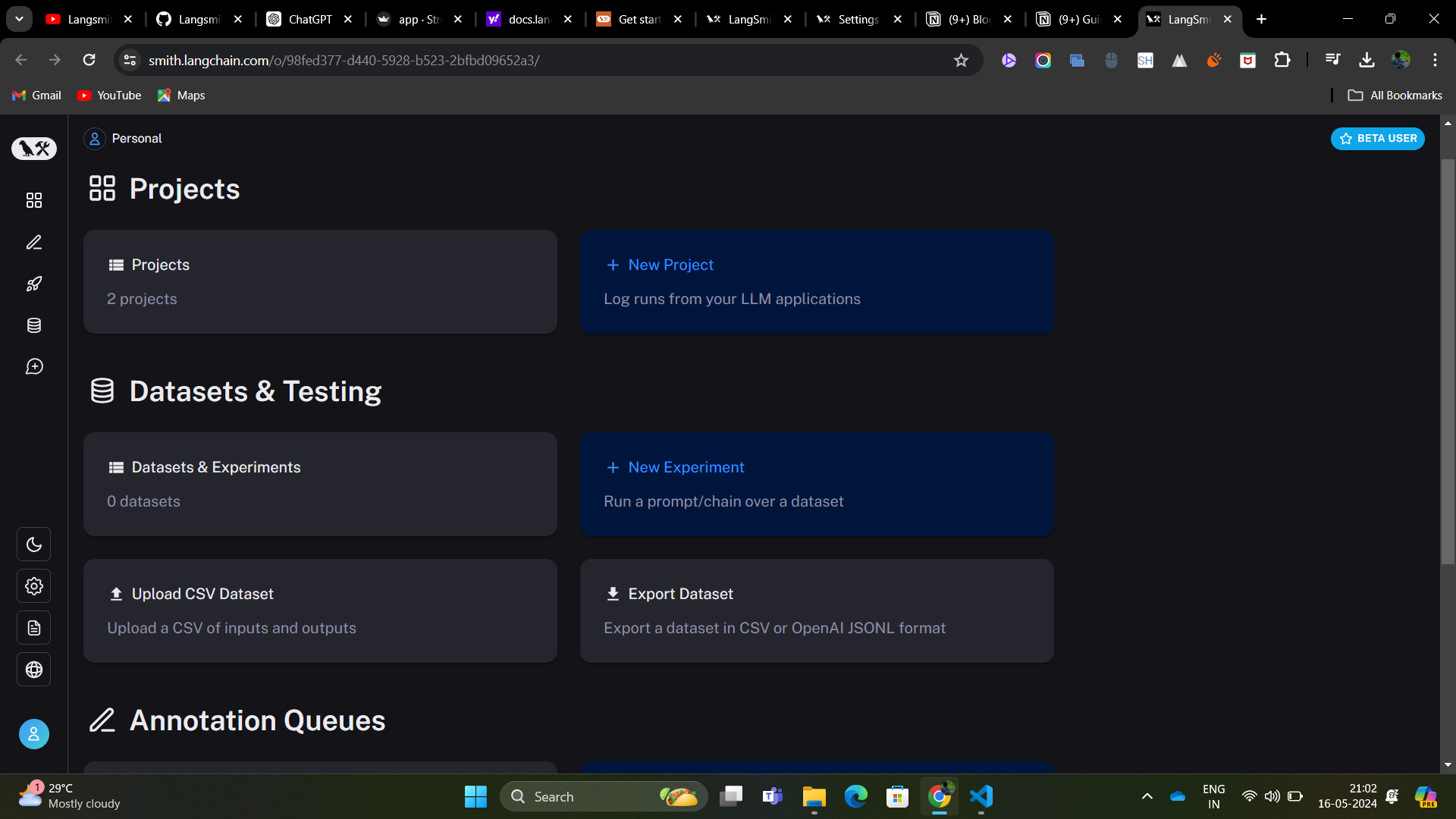

Testing and Debugging LLM Applications with LangSmith

This section outlines a streamlined approach for testing and debugging LLM applications using LangSmith, a dedicated platform designed to facilitate this process.

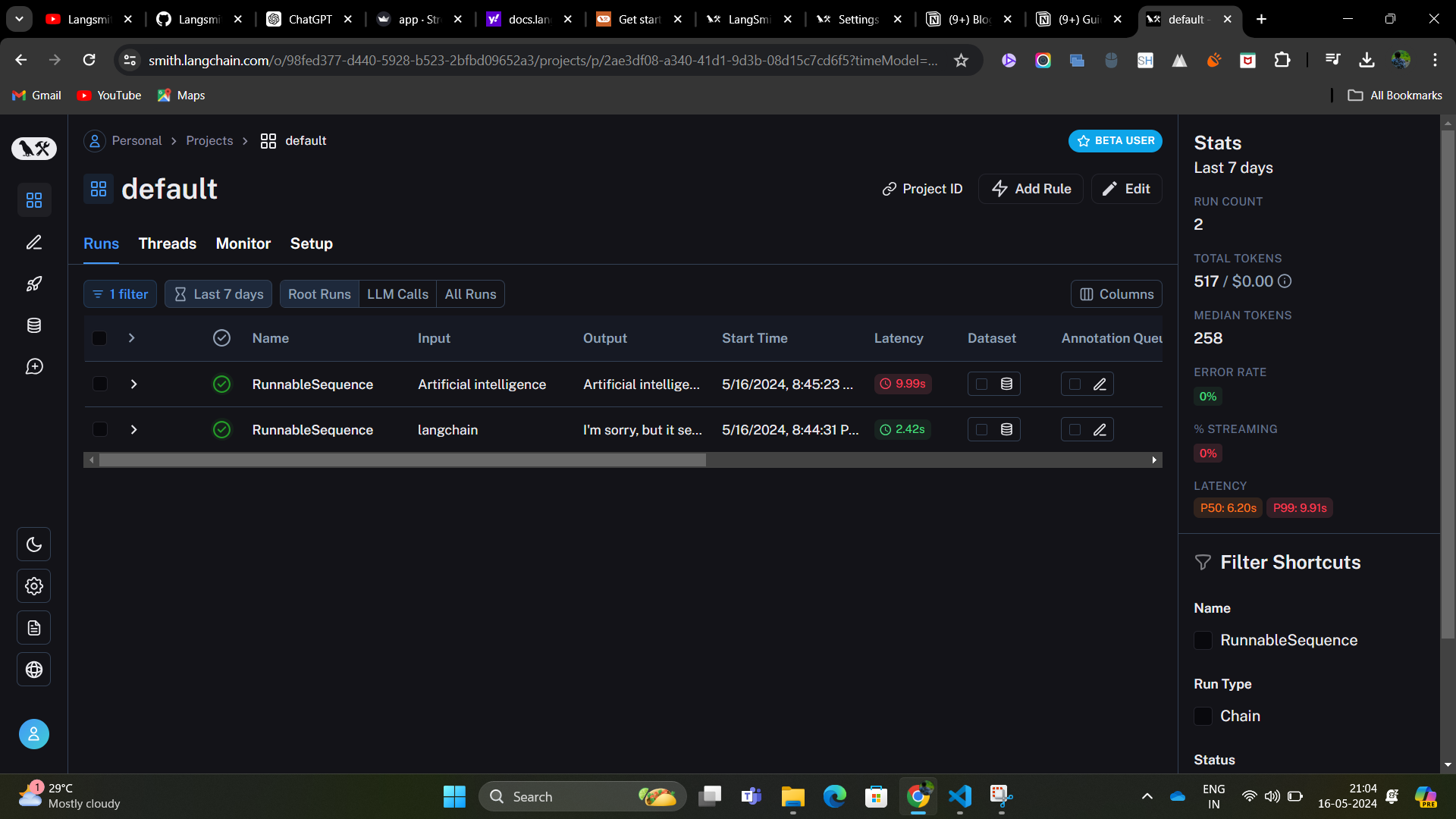

Access LangSmith: Navigate to the LangSmith website and log in to your account.

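Select Project: Within the LangSmith interface, open the project your application logs its traces to. You can set the project name with the LANGCHAIN_PROJECT environment variable; if it is unset, traces go to the default project.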

Review Past Interactions: LangSmith displays a list of traced runs, one for each query sent through your application.

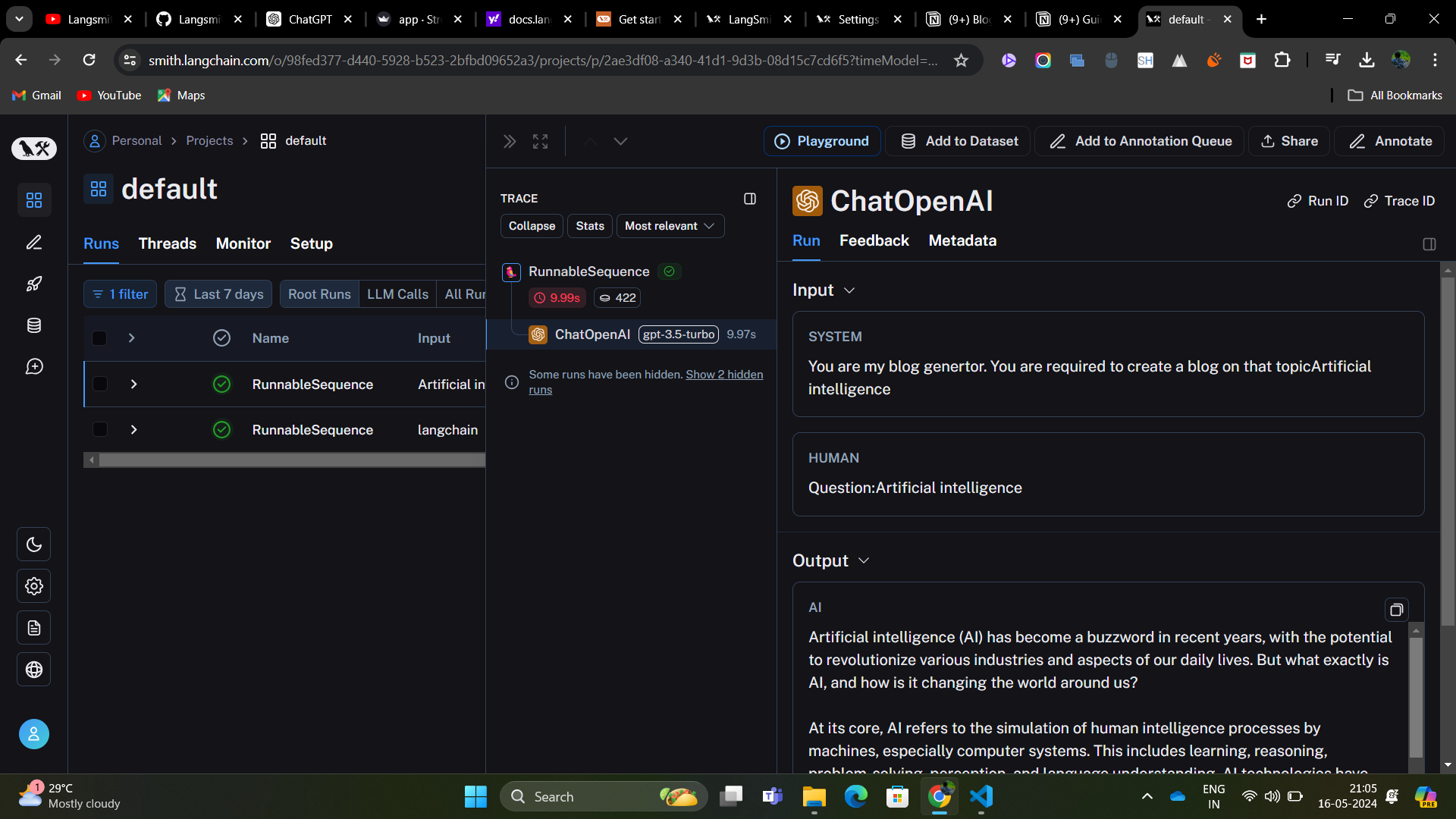

Visualize Execution Chain: When you select a specific query, LangSmith shows a visual representation of the processing steps (the chain) your application ran to generate the response, such as the prompt, the model call, and the output parser.

Deep Dive into Sub-processes: Each step within the chain is readily accessible for examination. You can analyze:

Token Count: The number of tokens processed at each stage.

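Execution Time: The time taken for each sub-process to complete.

Inputs and Outputs: The exact data each step received and returned, such as the fully formatted prompt sent to the model.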

Targeted Testing and Debugging: Analyzing the chain and its individual components lets you pinpoint which step causes a problem, whether that is the prompt, the model's output, or a processing step. You can then focus your debugging on that step instead of the whole application.

Use Cases

1. Optimizing LLM Performance:

Imagine you're developing a chatbot powered by an LLM. The chatbot sometimes provides clunky or irrelevant responses. LangSmith lets you examine the processing chain behind each response. You can see which steps take the most time or tokens, indicating areas for improvement. By analyzing these bottlenecks, you can refine prompts or fine-tune the LLM for better chatbot performance.
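To find bottlenecks across many traces rather than one at a time, you could pull runs with the SDK and total them per step. This minimal sketch assumes run objects that expose name, start_time, end_time, and total_tokens, which are fields of the LangSmith SDK's Run schema. The function name summarize_steps is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


# Hypothetical helper: total seconds and tokens per step name, slowest first
def summarize_steps(runs: Iterable) -> List[Tuple[str, float, int]]:
    seconds: Dict[str, float] = defaultdict(float)
    tokens: Dict[str, int] = defaultdict(int)
    for run in runs:
        # Runs still in progress have no end_time yet
        if run.end_time is not None:
            seconds[run.name] += (run.end_time - run.start_time).total_seconds()
        # total_tokens is None for steps that don't call a model
        tokens[run.name] += run.total_tokens or 0
    rows = [(name, seconds[name], tokens[name]) for name in tokens]
    return sorted(rows, key=lambda row: row[1], reverse=True)
```

With the SDK, you might call runs = Client().list_runs(project_name="<your-project>") (Client from the langsmith package) and then summarize_steps(runs) to list the slowest steps first. On large projects, you may want to narrow the query first.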

2. Identifying Biases:

Let's say you're concerned about potential biases in your LLM's outputs. LangSmith lets you attach scores to runs and compare them across many queries. The scores can come from user feedback or from evaluators you define, for example one that rates the sentiment of each response. This can reveal whether the LLM leans towards certain viewpoints or phrasings. You can then adjust prompts, or the training data if you fine-tune your own model, to mitigate these biases.

3. Debugging LLM Reasoning:

You've built an LLM application for question answering, but it sometimes provides incorrect or nonsensical answers. LangSmith's trace view shows the inputs and outputs of every step behind each answer. By finding the step where things first went wrong (for example, a misleading prompt or irrelevant context), you can identify flaws in your application's logic or data and address them.

4. Building Self-Improving LLMs:

LangSmith can support a feedback loop that helps your application improve over time. You can log user feedback, such as a thumbs up or down, against individual traced runs. Runs with positive feedback can be collected into datasets for evaluation or further fine-tuning. Conversely, negative feedback highlights areas needing improvement, which you can target for prompt changes or retraining.
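Feedback is attached to individual traced runs. This minimal sketch assumes a thumbs-up/down widget in your app and forwards the rating to the LangSmith client's create_feedback method. The function name and the feedback key "user-score" are hypothetical, and client is expected to be a langsmith.Client.

```python
from typing import Any, Optional
from uuid import UUID


# Hypothetical helper: store a thumbs-up/down rating as feedback on a run
def record_user_feedback(
    client: Any,  # expected: langsmith.Client (anything with create_feedback)
    run_id: UUID,
    thumbs_up: bool,
    comment: Optional[str] = None,
) -> None:
    client.create_feedback(
        run_id,
        key="user-score",  # hypothetical feedback key name
        score=1.0 if thumbs_up else 0.0,
        comment=comment,
    )
```

One way to get run_id in the Streamlit app is to invoke the chain inside langchain_core's collect_runs context manager (from langchain_core.tracers.context import collect_runs) and read cb.traced_runs[0].id afterwards. You can then filter runs by this feedback key in LangSmith when you assemble datasets.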

Conclusion

LangSmith is a platform designed to streamline the development, deployment, and monitoring of applications built around large language models (LLMs). By providing tools for debugging, testing, evaluation, and usage metrics, LangSmith aims to bridge the gap between LLM prototypes and production-grade applications. It offers benefits for both individual developers and organizations working with LLMs.

Resources and Further Reading

For additional information and resources on LangSmith and its LangChain integration, check out the following:

Want to look at the code? GitHub Link
