Introduction to Supervised Learning Linear Regression

Retzam Tarle

print("Introduction to Linear Regression")

Hola 🤗,

Linear Regression is a supervised learning model used for regression tasks. Regression tasks try to predict continuous values, as we explained in our previous chapter, which you can revisit here.

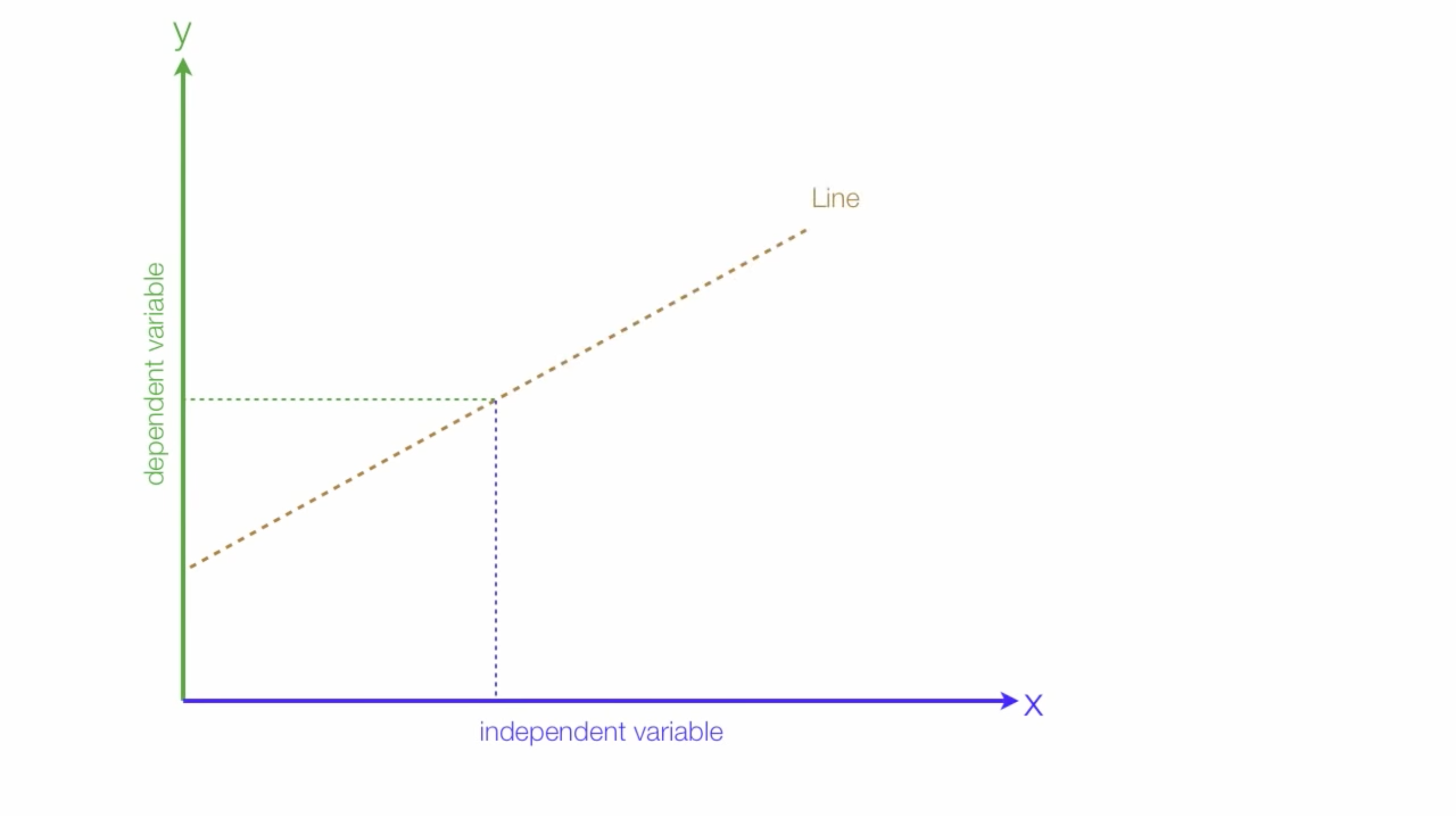

The basic idea behind linear regression is to find a relationship between a dependent variable (the outcome) and one or more independent variables (the features) by drawing a line through the data. Something like the 2D plane below:

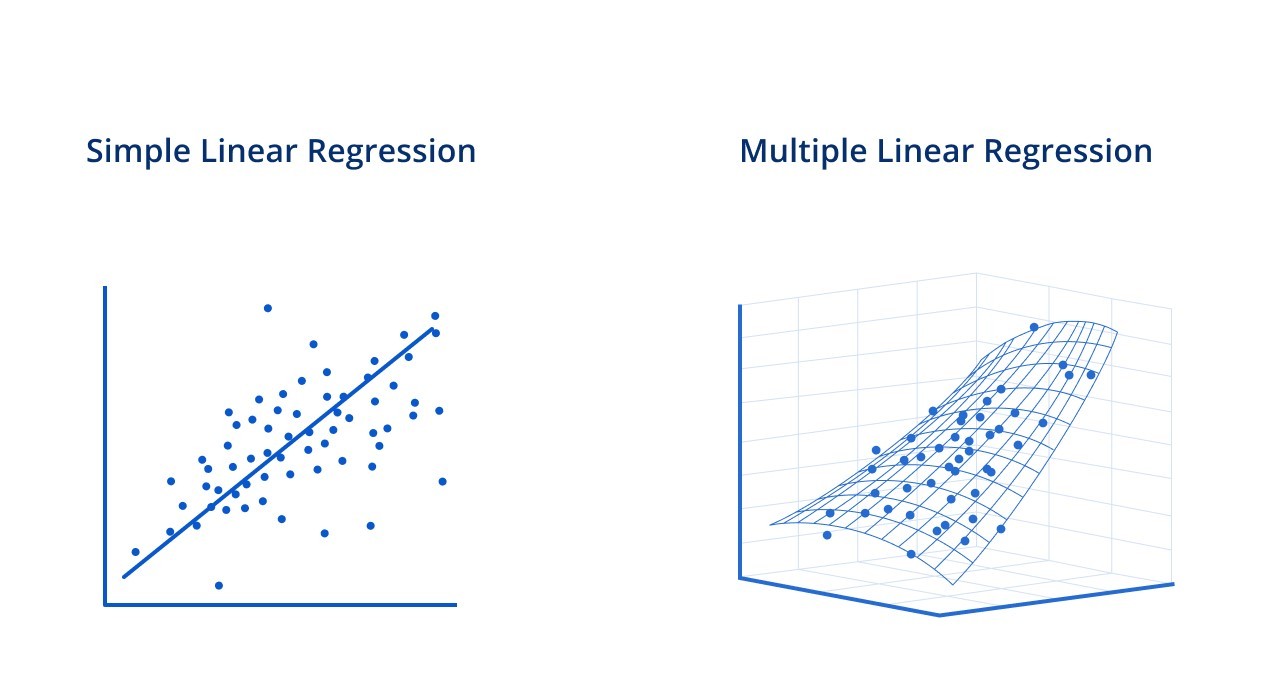

How we find that line, also known as the line of best fit, depends on the type of regression. There are two types of linear regression:

Simple linear regression: This type of regression deals with one dependent variable and one independent variable. Say you want to predict how much weight a person can lift (dependent variable) using only the person's gender (independent variable).

The formula:

y = b0 + b1x

y: Dependent variable (the variable we want to predict)

b0: Y-intercept (the starting point of the line where it crosses the y-axis)

b1: Slope of the line (the change in y for a unit change in x)

x: Independent variable (the predictor)
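To make the formula concrete, here is a minimal sketch of how b0 and b1 could be computed from data using the standard least-squares formulas. The function name and the data are made up for illustration; this is just one common way to fit the line, not necessarily the method we'll use in later chapters.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (b0, b1) for the least-squares line y = b0 + b1 * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how x and y vary together, divided by how much x varies.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b1 = sxy / sxx
    # Intercept: the fitted line passes through (mean_x, mean_y).
    b0 = mean_y - b1 * mean_x
    return b0, b1


# Made-up data lying exactly on the line y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

b0, b1 = fit_simple_linear_regression(xs, ys)
print(b0, b1)  # 1.0 2.0
```

Because the sample points sit exactly on a line, the sketch recovers the intercept and slope of that line. With noisy real data, the same formulas give the line that minimizes the squared error.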

Multiple linear regression: Here the linear regression deals with one dependent variable and multiple independent variables. Say you want to predict how much weight a person can lift (dependent variable) by considering their gender, height, and BMI (independent variables).

The formula:

y = b0 + b1x1 + b2x2 + b3x3 + ... + bnxn (There's an artist called bnxn lol)

Here y and b0 are the same as in simple linear regression above. Each term b1x1, b2x2, ..., bnxn pairs one independent variable (x1 to xn) with its own coefficient (b1 to bn), where n is the number of independent variables.
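As a rough sketch, the multiple linear regression formula is just the intercept plus a sum of coefficient-times-feature terms. The `predict` function and all the numbers below are hypothetical, picked only to show the arithmetic.

```python
def predict(b0, coefficients, features):
    """Compute y = b0 + b1*x1 + b2*x2 + ... + bn*xn for one sample."""
    if len(coefficients) != len(features):
        raise ValueError("need exactly one coefficient per feature")
    return b0 + sum(b * x for b, x in zip(coefficients, features))


# Hypothetical values for illustration only (n = 3 features).
b0 = 10.0
coefficients = [2.0, 0.5, -1.0]  # b1, b2, b3
features = [3.0, 4.0, 5.0]       # x1, x2, x3

y = predict(b0, coefficients, features)
print(y)  # 10 + 6 + 2 - 5 = 13.0
```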

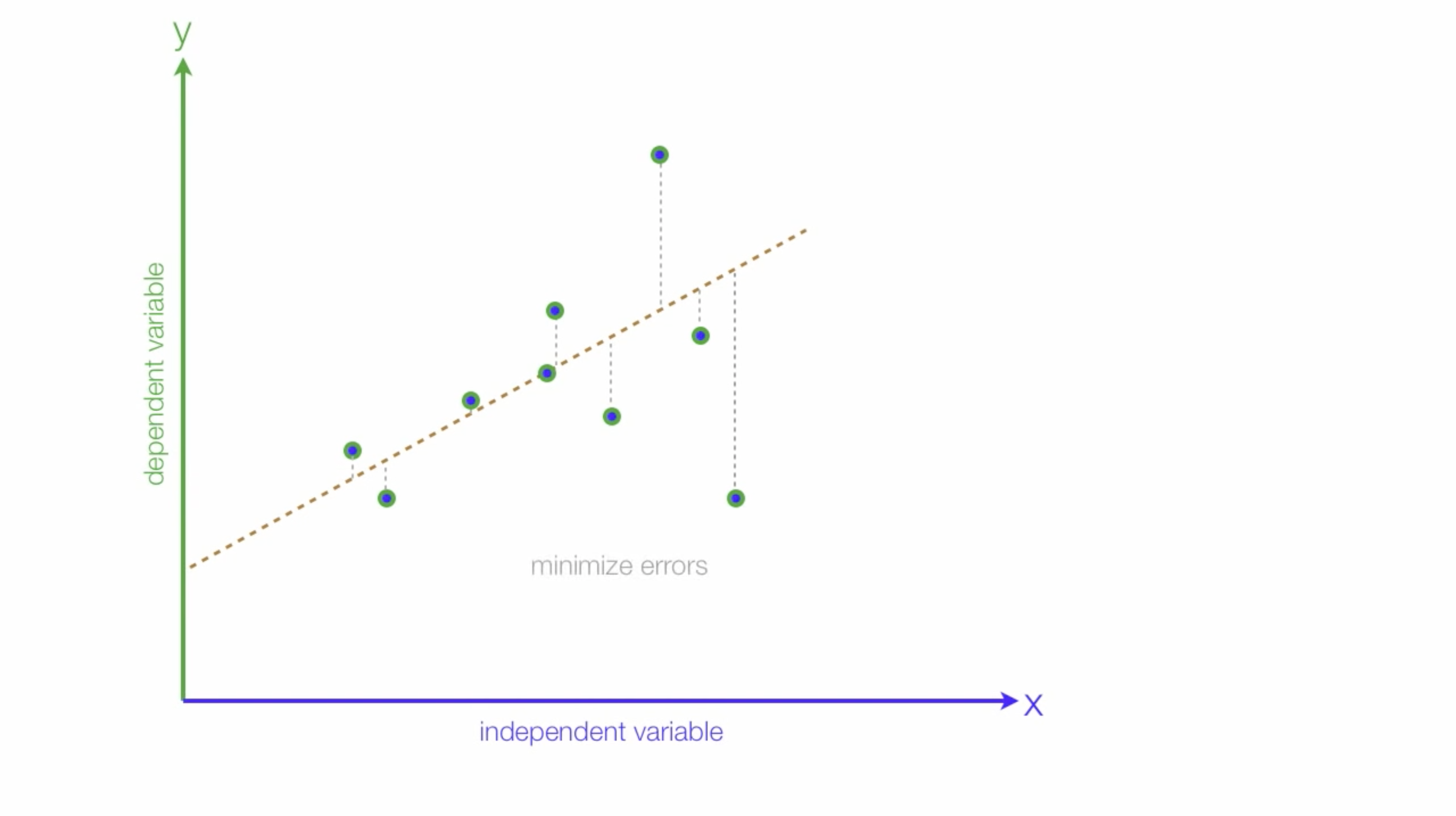

The whole idea behind linear regression is to find the line with the least possible error, using the formulas above. Consider the 2D plane illustrated below:

There is a YouTube playlist here that explains this in more detail.
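To get a feel for what "least error" means, the sketch below compares two candidate lines on the same made-up data. It assumes the error is measured as the sum of squared differences between actual and predicted values, which is the usual choice for ordinary least squares; the function name and the numbers are for illustration only.

```python
def sum_squared_error(b0, b1, xs, ys):
    """Total squared distance between the data and the line y = b0 + b1 * x."""
    return sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))


# Made-up data points.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 5.0, 8.0]

error_a = sum_squared_error(0.0, 2.0, xs, ys)  # candidate line y = 2x
error_b = sum_squared_error(1.0, 1.0, xs, ys)  # candidate line y = 1 + x

print(error_a, error_b)  # 1.0 11.0
```

Of the two candidates, y = 2x has the smaller error, so it is the better fit for this data. Fitting a linear regression amounts to searching for the line whose error is smallest among all possible lines.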

In linear regression for supervised learning tasks, four assumptions are made. We won't explain them in detail here, but they are listed below.

Linearity - The relationship between the independent variables and the dependent variable should be linear (a straight line).

Independence - Samples should not depend on or affect one another.

Normality - Residuals (the differences between actual and predicted values) should be normally distributed.

Homoskedasticity - Residuals should have constant variance.
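Since two of these assumptions are about residuals, here is a small sketch of how residuals could be computed and given a very rough constant-variance check. The line y = 1 + 2x, the data, and the split-in-half comparison are all assumptions made for illustration; this is a quick sanity check, not a formal statistical test.

```python
def residuals(b0, b1, xs, ys):
    """Residual = actual value minus the value predicted by y = b0 + b1 * x."""
    return [y - (b0 + b1 * x) for x, y in zip(xs, ys)]


def variance(values):
    """Population variance of a list of numbers."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


# Made-up data scattered around the hypothetical line y = 1 + 2x, sorted by x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [3.5, 4.5, 7.5, 8.5, 11.5, 12.5]

res = residuals(1.0, 2.0, xs, ys)

# Rough check: compare the residual spread for small x against large x.
half = len(res) // 2
var_low = variance(res[:half])
var_high = variance(res[half:])
print(res)
print(var_low, var_high)
```

If the two variances were very different, that would hint that the spread of the residuals changes with x, which goes against the homoskedasticity assumption.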

We talked a little about how we evaluate regression models in the last chapter. I would like to mention a few more metrics here for linear regression models:

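Here, $y_i$ is the actual value, $\hat{y}_i$ is the predicted value, $\bar{y}$ is the mean of the actual values, and $n$ is the number of samples.

Mean absolute error (MAE): $\text{MAE} = \frac{1}{n} \sum_{i=1}^{n} |y_i - \hat{y}_i|$

Mean squared error (MSE): $\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$

Root mean squared error (RMSE): $\text{RMSE} = \sqrt{\text{MSE}}$

R^2 - Coefficient of determination:

$R^2 = 1 - \frac{\text{RSS}}{\text{TSS}}$

RSS - sum of squared residuals: $\text{RSS} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$

TSS - total sum of squares: $\text{TSS} = \sum_{i=1}^{n} (y_i - \bar{y})^2$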
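The metrics above (MAE, MSE, RMSE, and R^2) can be sketched in a few lines of plain Python. The function name and the sample values are made up for illustration; in practice a library would normally provide these for you.

```python
import math


def regression_metrics(actual, predicted):
    """Compute MAE, MSE, RMSE and R^2 for paired actual/predicted values."""
    n = len(actual)
    errors = [y - y_hat for y, y_hat in zip(actual, predicted)]

    mae = sum(abs(e) for e in errors) / n
    mse = sum(e ** 2 for e in errors) / n
    rmse = math.sqrt(mse)

    mean_actual = sum(actual) / n
    rss = sum(e ** 2 for e in errors)                  # sum of squared residuals
    tss = sum((y - mean_actual) ** 2 for y in actual)  # total sum of squares
    r2 = 1 - rss / tss

    return {"mae": mae, "mse": mse, "rmse": rmse, "r2": r2}


# Made-up actual and predicted values.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.0, 5.0, 8.0, 9.0]

metrics = regression_metrics(actual, predicted)
print(metrics)
```

For this sample, MAE and MSE both come out to 0.5, RMSE is about 0.707, and R^2 is about 0.9. An R^2 close to 1 means the predictions explain most of the variation in the actual values.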

We'll end it here for Introduction to Linear Regression. Next week we'll delve deeper and build our regression models to make predictions 🤗.

Be safe, till next time.

Aloha 👽

Written by

Retzam Tarle

I am a software engineer.