HLS Adaptive Bitrate Streaming

Pradeep Pawar


Building an HLS Adaptive Bitrate Streaming Service with Node.js, AWS S3, Docker, and ECS Fargate

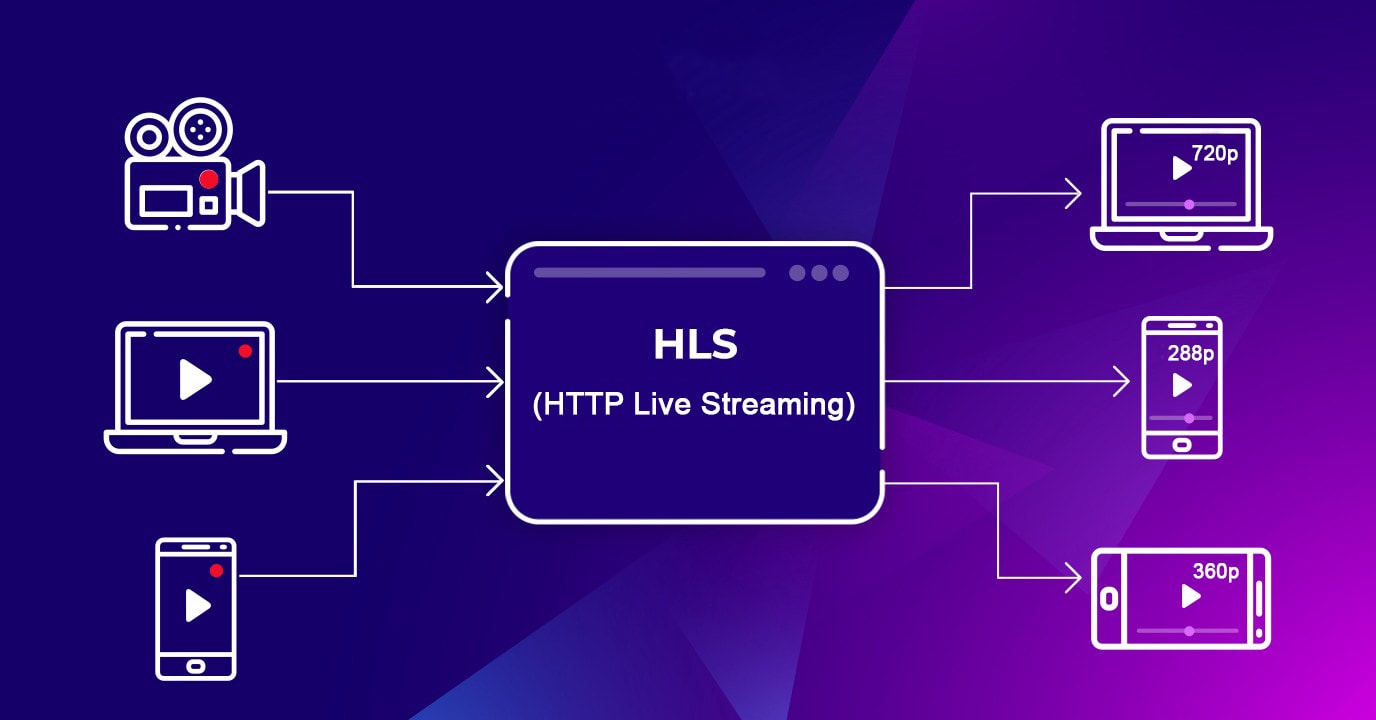

In today's world, delivering high-quality video content is essential. Adaptive Bitrate Streaming (ABR) is a technique used to improve the viewer experience by dynamically adjusting the video quality based on the user's network conditions. In this blog post, we'll explore how to build an HLS (HTTP Live Streaming) Adaptive Bitrate Streaming service using Node.js, AWS S3, Docker, and ECS Fargate.


Introduction

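Adaptive Bitrate Streaming keeps playback smooth by adjusting video quality in real time to match the viewer's available bandwidth. With HLS, the source video is encoded into several renditions (for example 1080p, 720p, and 480p). Each rendition is cut into short segments and described by its own playlist, and a master playlist lists every rendition with its bitrate. The player switches between renditions at segment boundaries as its measured throughput changes. This guide walks you through setting up such a service using AWS services and Node.js.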
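The player side of that decision can be sketched in a few lines. This is a simplified illustration, not how any particular player works. It parses the #EXT-X-STREAM-INF entries of a small hand-written master playlist, where the file names and bitrates are made up. It then picks the highest-bandwidth rendition that fits within 80% of a measured throughput. The function names and the 0.8 safety factor are assumptions. Production players such as hls.js use more sophisticated bandwidth estimation.

```javascript
// Parse the variant entries of an HLS master playlist.
// Each #EXT-X-STREAM-INF tag is followed by the URI of that rendition's playlist.
function parseMasterPlaylist(text) {
  const lines = text.split(/\r?\n/).map((l) => l.trim()).filter(Boolean);
  const variants = [];
  for (let i = 0; i < lines.length; i++) {
    if (!lines[i].startsWith('#EXT-X-STREAM-INF:')) continue;
    const attrs = lines[i].slice('#EXT-X-STREAM-INF:'.length);
    // (?:^|,) avoids matching AVERAGE-BANDWIDTH
    const bandwidth = Number(/(?:^|,)BANDWIDTH=(\d+)/.exec(attrs)?.[1]);
    const resolution = /RESOLUTION=(\d+x\d+)/.exec(attrs)?.[1] ?? null;
    variants.push({ bandwidth, resolution, uri: lines[i + 1] });
  }
  return variants;
}

// Pick the highest rendition whose bandwidth fits within safetyFactor * throughput (bits/s).
// Falls back to the lowest-bandwidth rendition when nothing fits.
function chooseVariant(variants, throughputBps, safetyFactor = 0.8) {
  const sorted = [...variants].sort((a, b) => a.bandwidth - b.bandwidth);
  const budget = throughputBps * safetyFactor;
  let choice = sorted[0];
  for (const v of sorted) {
    if (v.bandwidth <= budget) choice = v;
  }
  return choice;
}

const master = `#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
1080p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720
720p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=854x480
480p.m3u8`;

const variants = parseMasterPlaylist(master);
console.log(chooseVariant(variants, 4_000_000).uri); // 720p.m3u8
console.log(chooseVariant(variants, 1_000_000).uri); // 480p.m3u8
```

Falling back to the lowest rendition when nothing fits keeps playback going on very slow connections, at the cost of picture quality.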

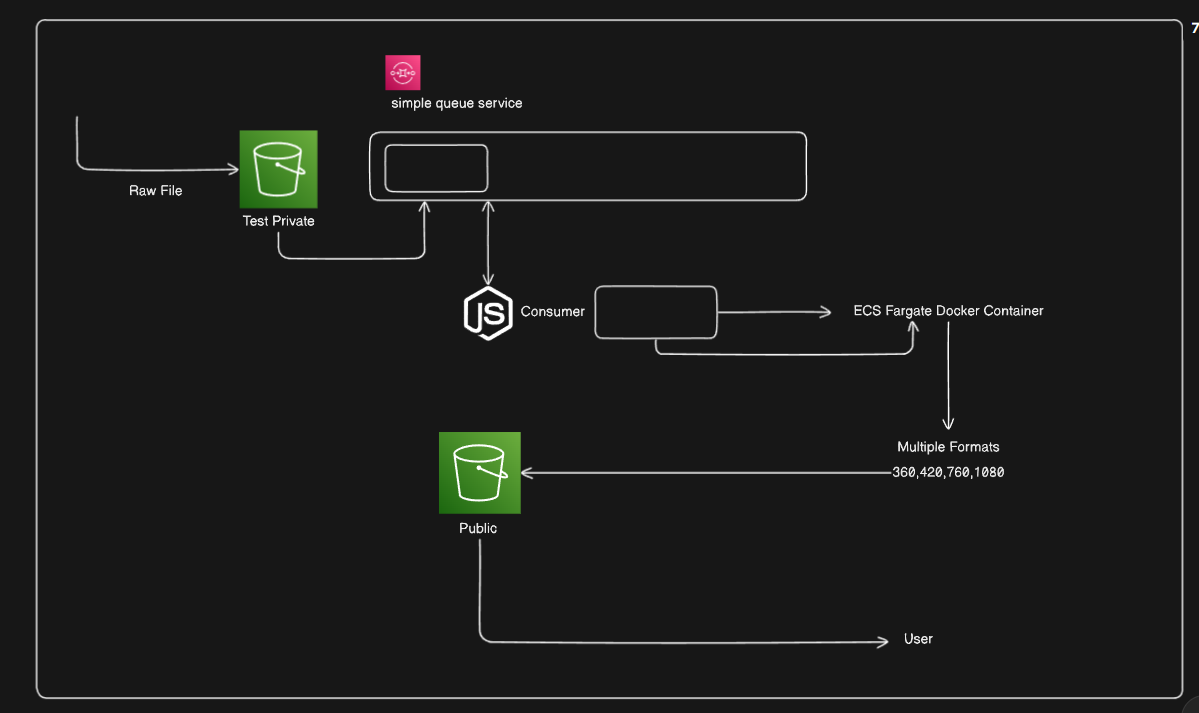

Architecture Overview

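The system architecture includes:

- AWS S3 buckets: one stores raw uploaded media files, the other stores the processed HLS output.
- SQS (Simple Queue Service): holds a message for each file that needs processing.
- Node.js consumer: receives SQS messages and starts an encoding task for each one.
- ECS Fargate Docker container: runs the media encoding in a scalable, serverless way.
- User access: viewers stream the HLS playlists and segments from the processed bucket.

In short, each new upload is announced on the queue, the consumer turns the message into a Fargate task, and the task writes HLS output that viewers can play.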
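One way to keep raw and processed files apart is a fixed key convention between the two buckets. The sketch below assumes, purely for illustration, that uploads land under uploads/ in test-private. It also assumes each video's HLS output goes under hls/<video name>/ in public, with master.m3u8 as the entry point. The prefixes, the file name, and the helper names are hypothetical choices, not something S3 or HLS requires.

```javascript
const path = require('path');

// Hypothetical key convention: uploads/<name>.<ext>  ->  hls/<name>/
function hlsOutputPrefix(rawKey) {
  const base = path.posix.basename(rawKey, path.posix.extname(rawKey));
  if (!base) throw new Error(`Cannot derive a video name from key: ${rawKey}`);
  return `hls/${base}/`;
}

// Key of the master playlist that players should load for a given upload
function masterPlaylistKey(rawKey) {
  return `${hlsOutputPrefix(rawKey)}master.m3u8`;
}

console.log(masterPlaylistKey('uploads/trailer.mp4')); // hls/trailer/master.m3u8
```

This keeps only the file name, so two uploads with the same name in different folders would overwrite each other's output. Adding a unique ID to the prefix avoids that.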

Setting Up AWS S3 Buckets

Create S3 Buckets:

- test-private: stores raw media files.
- public: stores processed media files.

```bash
# Bucket names are global across all AWS accounts, so substitute your own unique names
aws s3 mb s3://test-private
aws s3 mb s3://public
```

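- Set Permissions: Keep test-private private. The encoding task needs read access to test-private and write access to public, which is best granted through its IAM task role. Viewers (or the server that streams to them) need read access to public. Because new buckets block public access by default, anonymous reads also require relaxing Block Public Access on that bucket.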
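If viewers fetch the processed files straight from S3, the public bucket needs a policy that allows anonymous s3:GetObject. A browser player served from another origin also needs CORS rules. The sketch below only builds the two JSON documents. It assumes a placeholder bucket name and a hypothetical player origin (https://player.example.com). The output could be passed to aws s3api put-bucket-policy --policy and aws s3api put-bucket-cors --cors-configuration. The raw bucket gets no such policy.

```javascript
// Bucket policy letting anyone read (but not list or write) objects in one bucket.
function publicReadPolicy(bucketName) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Sid: 'PublicReadGetObject',
        Effect: 'Allow',
        Principal: '*',
        Action: 's3:GetObject',
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  };
}

// CORS rules so a browser player on `origin` can fetch playlists and segments.
function hlsCorsConfig(origin) {
  return {
    CORSRules: [
      {
        AllowedOrigins: [origin],
        AllowedMethods: ['GET', 'HEAD'],
        AllowedHeaders: ['*'],
        MaxAgeSeconds: 3000,
      },
    ],
  };
}

console.log(JSON.stringify(publicReadPolicy('public')));
console.log(JSON.stringify(hlsCorsConfig('https://player.example.com')));
```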

Creating an SQS Queue

- Create SQS Queue: Create a new SQS queue to handle the processing tasks.

```bash
aws sqs create-queue --queue-name media-processing-queue
```

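- Configure Permissions: The Node.js consumer's IAM identity needs sqs:ReceiveMessage and sqs:DeleteMessage on the queue. To start encoding tasks it also needs ecs:RunTask, plus iam:PassRole for the task's roles. If S3 event notifications feed the queue, the queue's access policy must also allow s3.amazonaws.com to call sqs:SendMessage.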
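The post doesn't show how messages reach the queue. One common option is an S3 event notification on test-private. The sketch below builds the two documents involved. The first is a queue policy that lets S3 send messages, scoped to the source bucket with aws:SourceArn; it is set as the queue's Policy attribute. The second is a notification configuration for aws s3api put-bucket-notification-configuration. The account ID is AWS's documentation placeholder, and the .mp4 suffix filter is an assumption. With this setup, S3 sends its own event format rather than a {Bucket, Key} body.

```javascript
// Queue policy allowing S3, for one source bucket only, to send messages to the queue.
function s3ToSqsQueuePolicy(queueArn, bucketName) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Principal: { Service: 's3.amazonaws.com' },
        Action: 'sqs:SendMessage',
        Resource: queueArn,
        Condition: { ArnLike: { 'aws:SourceArn': `arn:aws:s3:::${bucketName}` } },
      },
    ],
  };
}

// Notification configuration: send ObjectCreated events for .mp4 uploads to the queue.
function uploadNotificationConfig(queueArn) {
  return {
    QueueConfigurations: [
      {
        QueueArn: queueArn,
        Events: ['s3:ObjectCreated:*'],
        Filter: { Key: { FilterRules: [{ Name: 'suffix', Value: '.mp4' }] } },
      },
    ],
  };
}

const queueArn = 'arn:aws:sqs:us-east-1:123456789012:media-processing-queue';
console.log(JSON.stringify(s3ToSqsQueuePolicy(queueArn, 'test-private')));
console.log(JSON.stringify(uploadNotificationConfig(queueArn)));
```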

Implementing the Node.js Consumer

Set Up the Node.js Project:

- Initialize a new Node.js project and install required dependencies.

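```bash
mkdir media-processor
cd media-processor
npm init -y
# aws-sdk is v2 of the AWS SDK for JavaScript (now in maintenance mode); the code in this post uses its API
npm install aws-sdk
```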

Implement SQS Message Processing:

- Create a script to process SQS messages and trigger the encoding task.

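```javascript
const AWS = require('aws-sdk');

const sqs = new AWS.SQS();
const QUEUE_URL = process.env.QUEUE_URL || 'YOUR_SQS_QUEUE_URL';

async function processMessage(message) {
  // Assumes the producer sends a body like {"Bucket": "...", "Key": "..."};
  // raw S3 event notifications use a different shape (Records[].s3...)
  const { Bucket, Key } = JSON.parse(message.Body);
  console.log(`Processing file: ${Bucket}/${Key}`);
  // Logic to process the file, e.g. start the Fargate encoding task...
}

// Poll in a loop instead of setInterval, so a slow batch never overlaps the next poll
async function pollQueue() {
  for (;;) {
    try {
      // Long polling: wait up to 20 s and fetch up to 10 messages per call
      const data = await sqs.receiveMessage({
        QueueUrl: QUEUE_URL,
        MaxNumberOfMessages: 10,
        WaitTimeSeconds: 20,
      }).promise();
      for (const message of data.Messages || []) {
        await processMessage(message);
        // Delete only after processing succeeds; otherwise the message reappears after its visibility timeout
        await sqs.deleteMessage({ QueueUrl: QUEUE_URL, ReceiptHandle: message.ReceiptHandle }).promise();
      }
    } catch (err) {
      console.error('Polling error:', err);
      await new Promise((resolve) => setTimeout(resolve, 5000)); // back off before retrying
    }
  }
}

pollQueue();
```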
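If the queue is fed by S3 event notifications rather than a custom producer, the message body is an S3 event with a Records array. Object keys in it arrive URL-encoded, with spaces as "+". Below is a minimal sketch of a parser that processMessage could use instead of destructuring {Bucket, Key}; the function name is hypothetical. It also returns nothing for the s3:TestEvent message S3 sends when a notification is first configured, since that message has no Records.

```javascript
// Extract {bucket, key} pairs from an SQS message body containing an S3 event notification.
function objectsFromS3Event(body) {
  const event = JSON.parse(body);
  // s3:TestEvent messages have no Records array
  if (!Array.isArray(event.Records)) return [];
  return event.Records
    .filter((r) => typeof r.eventName === 'string' && r.eventName.startsWith('ObjectCreated:'))
    .map((r) => ({
      bucket: r.s3.bucket.name,
      // Keys are URL-encoded, with spaces encoded as '+'
      key: decodeURIComponent(r.s3.object.key.replace(/\+/g, ' ')),
    }));
}

const sampleBody = JSON.stringify({
  Records: [{
    eventName: 'ObjectCreated:Put',
    s3: { bucket: { name: 'test-private' }, object: { key: 'uploads/my+clip%281%29.mp4' } },
  }],
});
console.log(objectsFromS3Event(sampleBody)[0].key); // uploads/my clip(1).mp4
```

Returning an array lets one message carry several records, even though S3 usually sends one record per event.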

Dockerizing the Encoding Process

Create Dockerfile:

- Set up a Dockerfile to build an image with FFmpeg for media encoding.

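```dockerfile
# node:14 is end-of-life; use a maintained LTS image
FROM node:22

# FFmpeg does the actual encoding
RUN apt-get update && apt-get install -y --no-install-recommends ffmpeg \
    && rm -rf /var/lib/apt/lists/*

WORKDIR /app

# Install dependencies before copying the code so this layer is cached between code changes
COPY package*.json ./
RUN npm ci --omit=dev

COPY . .

CMD ["node", "processMedia.js"]
```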
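The Dockerfile runs processMedia.js, which the post doesn't show. The core of such a script is an FFmpeg invocation that produces several renditions plus a master playlist. The sketch below only builds the argument list and does not run FFmpeg. It assumes a three-rung ladder, 6-second segments, H.264/AAC output, an input with one audio track, and an existing output directory. The ladder values, output file names, and the function name buildHlsArgs are illustrative. It uses the hls muxer's -var_stream_map and -master_pl_name options. It also forces a keyframe at every segment boundary, so renditions stay switchable between segments.

```javascript
// Illustrative ladder; tune heights and bitrates for your content
const LADDER = [
  { height: 1080, videoBitrate: '5000k' },
  { height: 720, videoBitrate: '2800k' },
  { height: 480, videoBitrate: '1400k' },
];

// Build FFmpeg arguments that encode `input` into one HLS rendition per ladder rung,
// writing stream_<n>.m3u8 playlists, their .ts segments, and master.m3u8 into outDir.
function buildHlsArgs(input, outDir, ladder = LADDER, segmentSeconds = 6) {
  const args = ['-y', '-i', input];
  // One video + one audio output stream per rung: v:0,a:0, v:1,a:1, ...
  ladder.forEach(() => args.push('-map', '0:v:0', '-map', '0:a:0'));
  ladder.forEach((rung, i) => {
    args.push(
      `-filter:v:${i}`, `scale=-2:${rung.height}`, // keep aspect ratio with an even width
      `-b:v:${i}`, rung.videoBitrate,
    );
  });
  args.push(
    '-c:v', 'libx264', '-preset', 'veryfast',
    // Keyframe at every segment boundary, and no extra scene-cut keyframes
    '-force_key_frames', `expr:gte(t,n_forced*${segmentSeconds})`, '-sc_threshold', '0',
    '-c:a', 'aac', '-b:a', '128k', '-ac', '2',
    '-f', 'hls',
    '-hls_time', String(segmentSeconds),
    '-hls_playlist_type', 'vod',
    '-hls_segment_filename', `${outDir}/stream_%v_%03d.ts`,
    '-master_pl_name', 'master.m3u8',
    // Pair video i with audio i; %v in the file names becomes the variant index
    '-var_stream_map', ladder.map((_, i) => `v:${i},a:${i}`).join(' '),
    `${outDir}/stream_%v.m3u8`,
  );
  return args;
}

console.log(['ffmpeg', ...buildHlsArgs('input.mp4', 'out')].join(' '));
```

The array could be passed to child_process.spawn('ffmpeg', args). Passing arguments as an array rather than one shell string avoids having to quote the filter and expression values.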

Build Docker Image:

- Build and push the Docker image to a container registry.

```bash
# Fargate runs x86_64 images by default; on an ARM machine, add --platform linux/amd64
docker build -t your-docker-repo/media-processor .
docker push your-docker-repo/media-processor
```

Deploying on AWS ECS Fargate

Create Task Definition:

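- Define an ECS task for the Docker container. Fargate also requires the FARGATE compatibility, awsvpc networking, and task-level cpu and memory. The execution role lets ECS pull the image and write logs, and the task role grants the container its S3 access. Both role ARNs are placeholders. 0.25 vCPU with 512 MB is the smallest Fargate size; real encoding jobs will likely need more.

```json
{
  "family": "media-processor-task",
  "requiresCompatibilities": ["FARGATE"],
  "networkMode": "awsvpc",
  "cpu": "256",
  "memory": "512",
  "executionRoleArn": "arn:aws:iam::ACCOUNT_ID:role/ecsTaskExecutionRole",
  "taskRoleArn": "arn:aws:iam::ACCOUNT_ID:role/media-processor-task-role",
  "containerDefinitions": [
    {
      "name": "media-processor",
      "image": "your-docker-repo/media-processor",
      "essential": true,
      "logConfiguration": {
        "logDriver": "awslogs",
        "options": {
          "awslogs-group": "media-processor-logs",
          "awslogs-region": "us-east-1",
          "awslogs-stream-prefix": "ecs"
        }
      }
    }
  ]
}
```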
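Fargate accepts only certain task-level CPU and memory pairings. That matters here because FFmpeg encoding is CPU-bound and the 256/512 values above are the minimum. The small check below covers the 0.25 to 4 vCPU sizes as listed in the ECS documentation. Larger 8 and 16 vCPU sizes exist with their own memory ranges and are not modeled. AWS has added sizes over time, so verify the table against the current docs. The function and table names are hypothetical.

```javascript
// Inclusive numeric range helper
function range(from, to, step) {
  const out = [];
  for (let v = from; v <= to; v += step) out.push(v);
  return out;
}

// Task-level memory (MiB) allowed for each Fargate CPU size (CPU units; 1024 = 1 vCPU).
// Covers 0.25 to 4 vCPU only.
const FARGATE_MEMORY_BY_CPU = {
  256: [512, 1024, 2048],
  512: range(1024, 4096, 1024),
  1024: range(2048, 8192, 1024),
  2048: range(4096, 16384, 1024),
  4096: range(8192, 30720, 1024),
};

// Accepts numbers or the string values used in task definitions
function isValidFargateSize(cpu, memory) {
  const allowed = FARGATE_MEMORY_BY_CPU[Number(cpu)];
  return Boolean(allowed && allowed.includes(Number(memory)));
}

console.log(isValidFargateSize('256', '512'));   // true
console.log(isValidFargateSize('1024', '1024')); // false: 1 vCPU needs at least 2 GB
console.log(isValidFargateSize('2048', '8192')); // true
```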

Run Task on Fargate:

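- Trigger the ECS task from the Node.js consumer, passing the object to encode through container overrides. For example, processMessage could call startEncodingTask(Bucket, Key). The INPUT_BUCKET and INPUT_KEY variable names are this example's own convention; processMedia.js would read them from its environment.

```javascript
const AWS = require('aws-sdk');

const ecs = new AWS.ECS();

// Start one Fargate encoding task for an uploaded object
async function startEncodingTask(bucket, key) {
  const params = {
    taskDefinition: 'media-processor-task',
    launchType: 'FARGATE',
    cluster: 'your-cluster',
    networkConfiguration: {
      awsvpcConfiguration: {
        subnets: ['subnet-xxxxxxx'],
        // Lets a task in a public subnet pull the image and reach S3 without a NAT gateway
        assignPublicIp: 'ENABLED',
      },
    },
    // Tell the container which file to encode
    overrides: {
      containerOverrides: [{
        name: 'media-processor',
        environment: [
          { name: 'INPUT_BUCKET', value: bucket },
          { name: 'INPUT_KEY', value: key },
        ],
      }],
    },
  };
  const data = await ecs.runTask(params).promise();
  console.log(data);
  return data;
}
```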
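RunTask can return without an error and still start nothing. Some launch problems are reported in the response's failures array instead, where each entry has arn, reason, and detail fields. Below is a sketch of a check the consumer could run on the response before deleting the SQS message, so a failed launch leaves the message on the queue for a retry. The function name is hypothetical, and the sample responses are made up for illustration.

```javascript
// Return the ARNs of started tasks, or throw if RunTask reported failures or started nothing.
function startedTaskArns(runTaskResponse) {
  const failures = runTaskResponse.failures || [];
  if (failures.length > 0) {
    const reasons = failures.map((f) => `${f.arn || 'unknown'}: ${f.reason}`).join('; ');
    throw new Error(`RunTask failed: ${reasons}`);
  }
  const arns = (runTaskResponse.tasks || []).map((t) => t.taskArn);
  if (arns.length === 0) throw new Error('RunTask started no tasks');
  return arns;
}

// Made-up response shapes for illustration
const ok = { tasks: [{ taskArn: 'arn:aws:ecs:us-east-1:123456789012:task/your-cluster/abc123' }], failures: [] };
const failed = { tasks: [], failures: [{ arn: 'arn:aws:ecs:us-east-1:123456789012:cluster/your-cluster', reason: 'EXAMPLE_REASON' }] };

console.log(startedTaskArns(ok));
try {
  startedTaskArns(failed);
} catch (err) {
  console.log(err.message); // RunTask failed: arn:aws:ecs:us-east-1:123456789012:cluster/your-cluster: EXAMPLE_REASON
}
```

Throwing inside processMessage means the delete call never runs, so SQS redelivers the message after its visibility timeout.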

Serving Adaptive Bitrate Streams

Serve Files Using Express:

- Use Express to serve the media files from the public S3 bucket.

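```javascript
const express = require('express');
const path = require('path');
const AWS = require('aws-sdk');

const app = express();
const s3 = new AWS.S3();
// express.static only serves local files, so stream each object from the processed bucket instead
const BUCKET = process.env.PUBLIC_BUCKET || 'public';
const TYPES = { '.m3u8': 'application/vnd.apple.mpegurl', '.ts': 'video/mp2t' };

app.use('/media', (req, res) => {
  const key = decodeURIComponent(req.path.slice(1)); // e.g. /media/hls/x/master.m3u8 -> hls/x/master.m3u8
  res.type(TYPES[path.extname(key)] || 'application/octet-stream');
  s3.getObject({ Bucket: BUCKET, Key: key }).createReadStream()
    .on('error', (err) => (res.headersSent ? res.destroy() : res.sendStatus(err.statusCode || 500)))
    .pipe(res);
});

app.listen(3000, () => {
  console.log('Server started on port 3000');
});
```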
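Whether the files are streamed through Express or fetched directly from S3, each object should carry the right MIME type. RFC 8216 identifies a playlist by its .m3u8 extension or by the application/vnd.apple.mpegurl Content-Type. Setting the type explicitly on upload means neither S3 nor the player has to guess. Below is a sketch of per-file upload parameters the encoder could use when writing its output to the public bucket. The function name, key prefix, and Cache-Control values are illustrative assumptions. The common VOD pattern it follows is short caching for playlists and long caching for segments.

```javascript
const path = require('path');

// MIME types for HLS output files
const HLS_CONTENT_TYPES = {
  '.m3u8': 'application/vnd.apple.mpegurl',
  '.ts': 'video/mp2t',
};

// Build S3 upload parameters (everything except Body) for one file produced by the encoder.
function uploadParamsFor(bucket, keyPrefix, fileName) {
  const ext = path.extname(fileName).toLowerCase();
  const contentType = HLS_CONTENT_TYPES[ext];
  if (!contentType) throw new Error(`Unexpected HLS output file: ${fileName}`);
  return {
    Bucket: bucket,
    Key: `${keyPrefix}${fileName}`,
    ContentType: contentType,
    // Segments: long-lived; playlists: short max-age (illustrative values)
    CacheControl: ext === '.ts' ? 'public, max-age=31536000, immutable' : 'public, max-age=60',
  };
}

console.log(uploadParamsFor('public', 'hls/trailer/', 'master.m3u8'));
console.log(uploadParamsFor('public', 'hls/trailer/', 'stream_0_000.ts').ContentType); // video/mp2t
```

With aws-sdk v2, these parameters could be spread into s3.upload({ ...params, Body: fs.createReadStream(filePath) }) for each file in the output directory.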

Conclusion

By following these steps, you can build an HLS Adaptive Bitrate Streaming service that scales with your needs. Leveraging AWS services like S3, SQS, and ECS Fargate lets storage, queuing, and encoding scale independently of each other. Dockerizing the encoding process with FFmpeg keeps media processing portable and reproducible. Happy streaming!


Written by

Pradeep Pawar


I am a dedicated and skilled frontend developer with experience in developing user-friendly applications using ReactJS, NextJS, and NodeJS. I have a strong foundation in building RESTful APIs and managing databases like MongoDB.