Copy data from Azure Blob Storage to a SharePoint folder with Azure Data Factory through an Azure Logic App

Massimiliano Figini

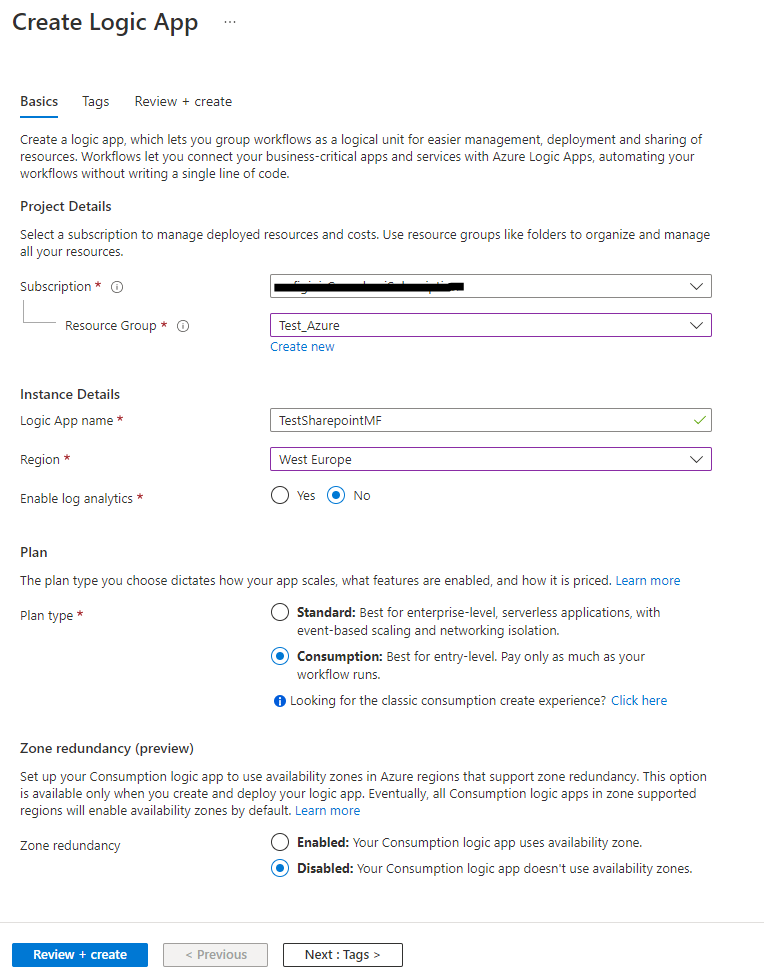

1. Create the Logic App

Choose the "Consumption" plan type.

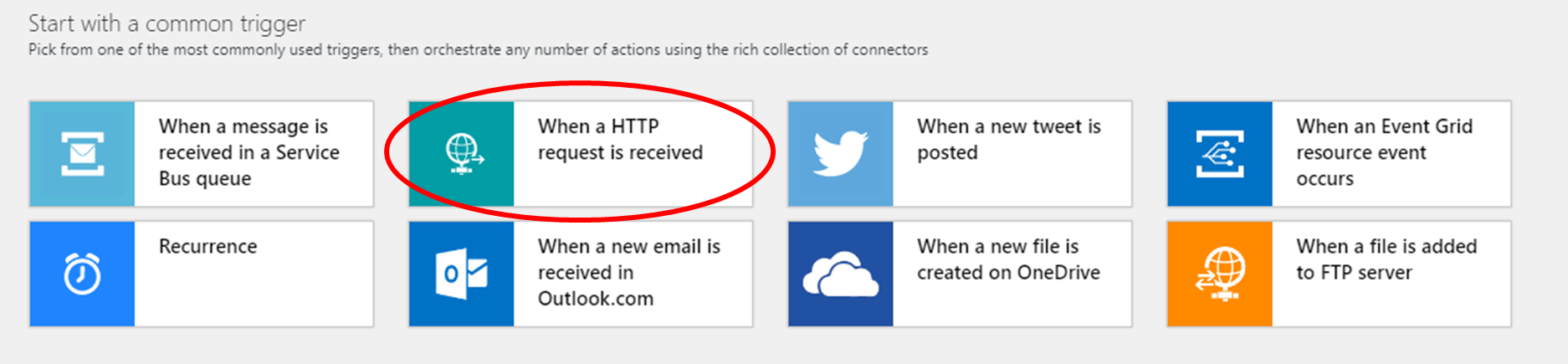

2. Design the Logic App

Start with the common trigger "When a HTTP request is received".

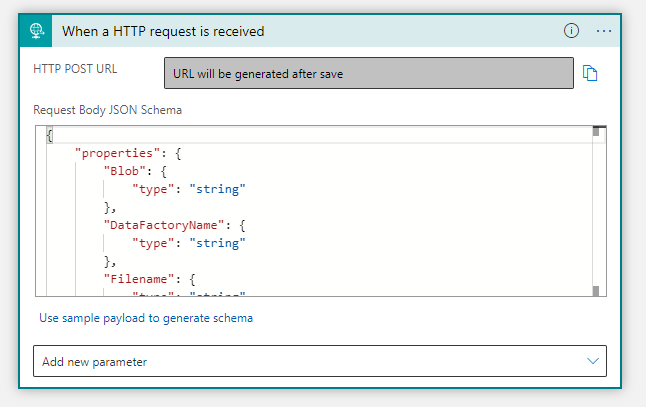

In the "Request Body JSON Schema" field, add all the parameters you need:

    {
      "properties": {
        "Blob": {
          "type": "string"
        },
        "DataFactoryName": {
          "type": "string"
        },
        "Filename": {
          "type": "string"
        },
        "Folder": {
          "type": "string"
        }
      },
      "type": "object"
    }
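The schema above only declares four string properties. Because it has no `required` list, JSON Schema treats each of them as optional; its main effect in the designer is that Blob, DataFactoryName, Filename and Folder become selectable dynamic content in later steps. As a minimal sketch of what the schema declares, here is a hand-rolled Python check (not how Logic Apps itself processes requests) with hypothetical sample values:

```python
# The trigger schema above, as a Python dict.
TRIGGER_SCHEMA = {
    "type": "object",
    "properties": {
        "Blob": {"type": "string"},
        "DataFactoryName": {"type": "string"},
        "Filename": {"type": "string"},
        "Folder": {"type": "string"},
    },
}


def matches_schema(payload: object, schema: dict = TRIGGER_SCHEMA) -> bool:
    """Return True if payload is an object whose declared properties are strings.

    The schema has no "required" list, so a missing property is accepted;
    a present property with a non-string value is rejected.
    """
    if not isinstance(payload, dict):
        return False
    for name, spec in schema["properties"].items():
        if name in payload and spec["type"] == "string" and not isinstance(payload[name], str):
            return False
    return True


# Hypothetical sample payload, shaped like the one Data Factory will send.
sample = {
    "DataFactoryName": "my-data-factory",
    "Filename": "report.csv",
    "Folder": "Shared Documents/Exports",
    "Blob": "/exports/report.csv",
}
print(matches_schema(sample))            # True
print(matches_schema({"Filename": 42}))  # False
```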

When you save the Logic App, the HTTP POST URL appears in the trigger. Copy it: you will need it in the Data Factory pipeline.
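Before wiring up Data Factory, it can help to test the trigger on its own. The following is a minimal standard-library Python sketch that builds the POST request; `LOGIC_APP_URL` is a hypothetical placeholder for the URL you copied, and the payload values are made up. The `Content-Type: application/json` header is set so that the trigger parses the body as JSON.

```python
import json
import urllib.request

# Hypothetical placeholder: paste the HTTP POST URL shown by your trigger.
LOGIC_APP_URL = "https://example.invalid/workflows/your-trigger-url"


def build_trigger_request(url: str, payload: dict) -> urllib.request.Request:
    """Build a POST request with a JSON body for the HTTP trigger."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

To call the workflow, run `send(build_trigger_request(LOGIC_APP_URL, payload))` with the real URL and a payload such as `{"Filename": "report.csv", "Folder": "Shared Documents/Exports", "Blob": "/exports/report.csv", "DataFactoryName": "manual-test"}`. A 2xx status only means the run was accepted (without a Response action the trigger usually answers 202 Accepted); check the run history in the portal to see whether the file was created.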

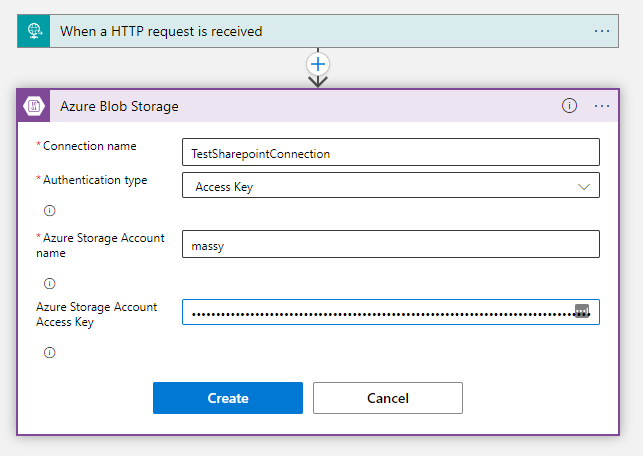

Add a new action step: "Azure Blob Storage - Get blob content (V2)".

Choose how you want to connect to the storage account. I used the Access Key method: give the connection a name, enter the storage account name and its access key, then click "Create".

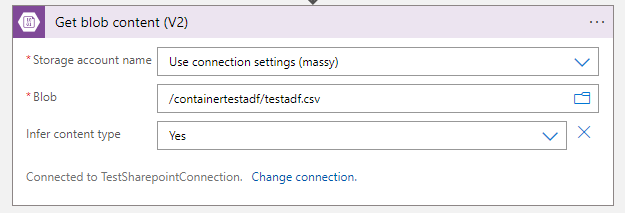

In the box that appears next, choose "Use connection settings" for the storage account name, then specify the blob you want to upload to SharePoint.

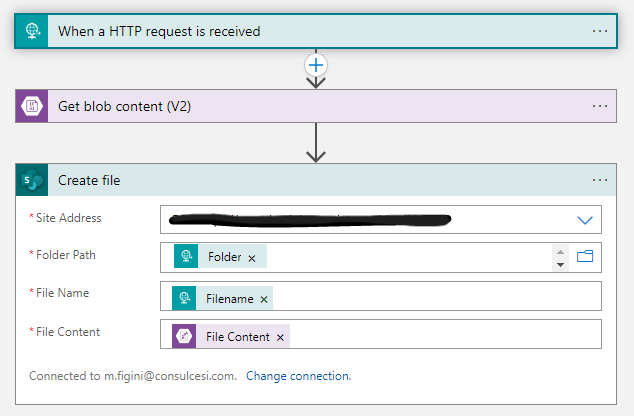

The last step is the SharePoint action "Create file". As before, choose a connection method. Then enter the SharePoint site address, and fill the "Folder Path" and "File Name" fields with the Folder and Filename parameters defined in the trigger, using the "Add dynamic content" link.

In the "File Content" field, use "Add dynamic content" again and select the File Content output of the Get blob content (V2) action.

The Logic App is now ready.
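To make the data flow between the two actions explicit, here is a local Python model of the workflow. It is not Logic App code: both connectors are replaced by in-memory stand-ins, and it assumes that the `Blob` property identifies the source blob while `Folder` and `Filename` name the SharePoint destination. All sample paths and content are hypothetical.

```python
import posixpath
from typing import Callable, Dict


def run_copy_flow(
    payload: Dict[str, str],
    read_blob: Callable[[str], bytes],
    create_file: Callable[[str, str, bytes], None],
) -> str:
    """Mimic the workflow: read the blob named in payload["Blob"], then create
    payload["Filename"] inside payload["Folder"] with that content.

    Returns the destination path, for logging.
    """
    content = read_blob(payload["Blob"])                          # "Get blob content (V2)"
    create_file(payload["Folder"], payload["Filename"], content)  # SharePoint "Create file"
    return posixpath.join(payload["Folder"], payload["Filename"])


# In-memory stand-ins for the two connectors (hypothetical data).
blobs = {"/exports/report.csv": b"id,value\n1,42\n"}
sharepoint: Dict[str, bytes] = {}


def fake_create_file(folder: str, name: str, content: bytes) -> None:
    sharepoint[posixpath.join(folder, name)] = content


dest = run_copy_flow(
    {
        "Blob": "/exports/report.csv",
        "Folder": "/Shared Documents/Exports",
        "Filename": "report.csv",
        "DataFactoryName": "my-data-factory",
    },
    read_blob=lambda path: blobs[path],
    create_file=fake_create_file,
)
print(dest)  # /Shared Documents/Exports/report.csv
```

DataFactoryName travels in the payload, but neither of the two actions described above consumes it.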

3. Add the new step to your ADF pipeline

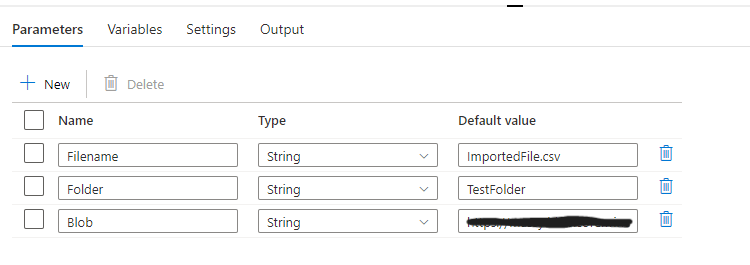

In Azure Data Factory Studio, add three string parameters to your pipeline: Filename, Folder and Blob. Set Filename to the name the file should have in SharePoint (including the correct extension), Folder to the SharePoint folder name, and Blob to the path and file name of the blob in your storage account.

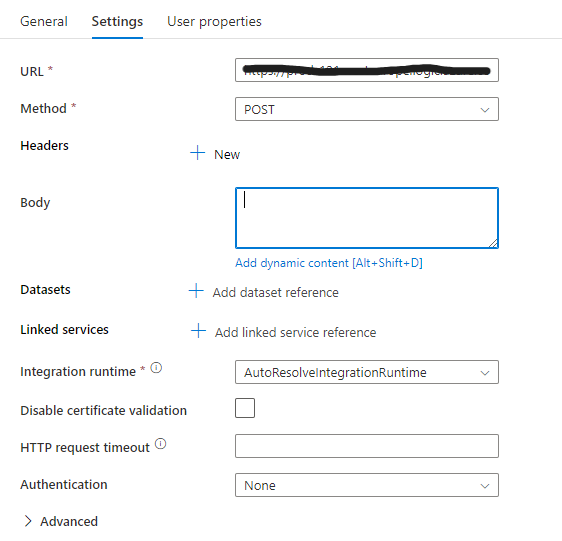

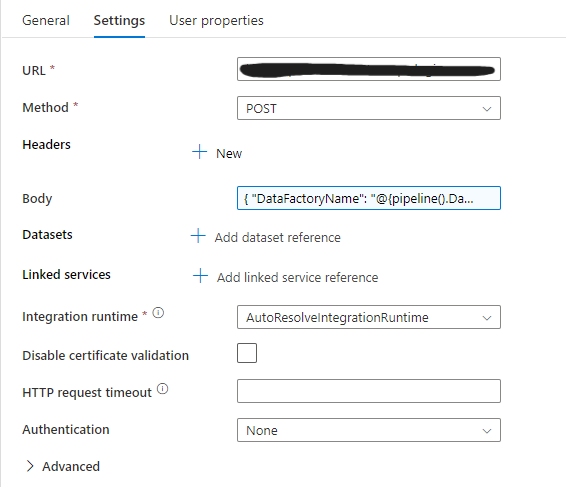

In your Azure Data Factory pipeline, add a new Web activity.

In its Settings tab, paste the URL you copied from the trigger in step 2 into the URL field and choose POST as the method. Then click the Body field and select "Add dynamic content".

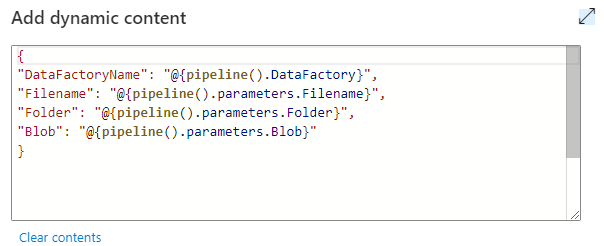

Enter the body in this format, mapping the data factory name and the pipeline parameters to the properties of the trigger schema:

    {
      "DataFactoryName": "@{pipeline().DataFactory}",
      "Filename": "@{pipeline().parameters.Filename}",
      "Folder": "@{pipeline().parameters.Folder}",
      "Blob": "@{pipeline().parameters.Blob}"
    }
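The `@{...}` syntax is ADF string interpolation: each expression is evaluated and its text is spliced into the surrounding string, so the Logic App receives plain JSON. The toy Python model below handles only the two expression forms used in this body, to show the request the Logic App ends up receiving; it is not ADF's expression evaluator, and the factory name and parameter values are hypothetical.

```python
import json
import re

# The Web activity body above, as a string with @{...} interpolations.
BODY_TEMPLATE = """{
  "DataFactoryName": "@{pipeline().DataFactory}",
  "Filename": "@{pipeline().parameters.Filename}",
  "Folder": "@{pipeline().parameters.Folder}",
  "Blob": "@{pipeline().parameters.Blob}"
}"""

# Matches @{pipeline().DataFactory} and @{pipeline().parameters.<name>}.
_EXPRESSION = re.compile(r"@\{pipeline\(\)\.(?:DataFactory|parameters\.(\w+))\}")


def resolve_body(template: str, factory_name: str, parameters: dict) -> dict:
    """Replace each supported @{...} with its value as raw text, then parse
    the result as JSON, as the Logic App trigger would receive it."""

    def substitute(match) -> str:
        name = match.group(1)  # None for pipeline().DataFactory
        return factory_name if name is None else parameters[name]

    return json.loads(_EXPRESSION.sub(substitute, template))


body = resolve_body(
    BODY_TEMPLATE,
    factory_name="my-data-factory",
    parameters={
        "Filename": "report.csv",
        "Folder": "Shared Documents/Exports",
        "Blob": "/exports/report.csv",
    },
)
print(body["DataFactoryName"])  # my-data-factory
```

Because values are spliced in as raw text, in this model a parameter containing a double quote or a backslash would produce invalid JSON.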

Choose your integration runtime, then click "Publish all".
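For reference, the settings from this section end up in the pipeline's JSON definition, which ADF Studio shows in its code view. The Python sketch below builds roughly that shape: three string parameters and a `WebActivity` with the POST URL, the expression body, and a `connectVia` reference to the integration runtime. Studio adds further fields (such as `policy`) that are omitted here; the pipeline name, activity name and URL are hypothetical, and `AutoResolveIntegrationRuntime` is assumed as the default Azure integration runtime.

```python
import json

# Hypothetical names; replace with your own.
PIPELINE_NAME = "CopyBlobToSharePoint"
LOGIC_APP_URL = "https://example.invalid/workflows/your-trigger-url"
INTEGRATION_RUNTIME = "AutoResolveIntegrationRuntime"

# The body entered via "Add dynamic content", stored as an expression string.
BODY_EXPRESSION = (
    '{"DataFactoryName": "@{pipeline().DataFactory}", '
    '"Filename": "@{pipeline().parameters.Filename}", '
    '"Folder": "@{pipeline().parameters.Folder}", '
    '"Blob": "@{pipeline().parameters.Blob}"}'
)

pipeline = {
    "name": PIPELINE_NAME,
    "properties": {
        # The three string parameters: Filename, Folder and Blob.
        "parameters": {name: {"type": "string"} for name in ("Filename", "Folder", "Blob")},
        "activities": [
            {
                "name": "CallLogicApp",
                "type": "WebActivity",
                "typeProperties": {
                    "url": LOGIC_APP_URL,
                    "method": "POST",
                    "body": {"value": BODY_EXPRESSION, "type": "Expression"},
                },
                # The integration runtime chosen in the activity settings.
                "connectVia": {
                    "referenceName": INTEGRATION_RUNTIME,
                    "type": "IntegrationRuntimeReference",
                },
            }
        ],
    },
}

print(json.dumps(pipeline, indent=2))
```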

You can now trigger the pipeline.
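Besides triggering it from ADF Studio, a run can also be started with the Data Factory REST API's `createRun` operation, passing the three pipeline parameters as the request body. Below is a minimal standard-library sketch that only builds the request; the subscription ID, resource group, factory and pipeline names are hypothetical, and the bearer token is assumed to come from something like `az account get-access-token`.

```python
import json
import urllib.parse
import urllib.request

API_VERSION = "2018-06-01"  # Data Factory REST API version


def build_create_run_request(
    subscription_id: str,
    resource_group: str,
    factory_name: str,
    pipeline_name: str,
    parameters: dict,
    access_token: str,
) -> urllib.request.Request:
    """Build (but do not send) a createRun call for the given pipeline."""
    path = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory_name}"
        f"/pipelines/{pipeline_name}/createRun"
    )
    url = f"https://management.azure.com{urllib.parse.quote(path)}?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(parameters).encode("utf-8"),  # body = pipeline parameters
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_run_request(
    subscription_id="00000000-0000-0000-0000-000000000000",  # hypothetical
    resource_group="my-resource-group",
    factory_name="my-data-factory",
    pipeline_name="CopyBlobToSharePoint",
    parameters={
        "Filename": "report.csv",
        "Folder": "Shared Documents/Exports",
        "Blob": "/exports/report.csv",
    },
    access_token="<token>",
)
print(request.full_url)
```

Passing the request to `urllib.request.urlopen` sends it; a successful call returns a JSON body containing the `runId` of the new pipeline run.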

