An Introduction to Convolutional Neural Networks (CNNs)

Osen Muntu


Convolutional Neural Networks (CNNs) are a specialized family of deep learning models that have revolutionized computer vision. Unlike traditional fully connected neural networks, CNNs are designed specifically for grid-like data such as images. They are the backbone of many applications, including image recognition, medical image analysis, and object detection.

What are Convolutional Neural Networks?

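CNNs are inspired by the animal visual cortex and are designed to automatically and adaptively learn spatial hierarchies of features from input images, from simple edges in early layers to more complex shapes and object parts in deeper ones. Their key advantage is a large reduction in the number of parameters: each filter connects only to a small local region of its input (local connectivity), and the same filter weights are reused at every position (weight sharing). On image tasks this usually costs little or no accuracy, and it makes deeper networks computationally feasible.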
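A rough worked comparison makes the parameter savings concrete. The sketch below assumes a 28×28 grayscale input (MNIST-sized), a hypothetical dense layer of 128 units, and a convolutional layer of 32 filters of size 3×3; these sizes are illustrative, not taken from any particular model.

```python
# Hypothetical comparison: a 28x28 grayscale image (MNIST-sized) feeding either
# a fully connected layer or a convolutional layer.
height, width, channels = 28, 28, 1

# Fully connected: each of the 784 input pixels connects to each of 128 hidden
# units (128 is an arbitrary, illustrative choice), plus one bias per unit.
hidden_units = 128
dense_params = (height * width * channels) * hidden_units + hidden_units

# Convolutional: 32 filters of size 3x3. Each filter's weights are shared across
# every position in the image, so the count does not depend on the image size.
num_filters, kernel_h, kernel_w = 32, 3, 3
conv_params = (kernel_h * kernel_w * channels) * num_filters + num_filters

print(f"dense layer: {dense_params:,} parameters")  # dense layer: 100,480 parameters
print(f"conv layer:  {conv_params:,} parameters")   # conv layer:  320 parameters
```

The convolutional layer still produces a 26×26×32 feature map, far more outputs than the dense layer, from only 320 learned values, because the same small filters are reused across the whole image.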

Detailed Explanation of CNNs and How They Operate

Key Components of CNNs

Convolutional Layers:

Purpose: To detect local features from the input image.

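Operation: Applies a set of filters (also called kernels) to the input image. Each filter slides (or convolves) across the image; at each position it computes the dot product between its weights and the local patch of the image beneath it (plus a bias), producing a feature map. During training, each filter learns to respond to a particular pattern, such as an edge or a texture.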
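The sliding-window operation can be written out directly. This is a minimal NumPy sketch assuming a single-channel image, a single filter, stride 1 and no padding; the function name `conv2d_valid` and the hand-picked edge filter are illustrative. Libraries such as TensorFlow compute the same thing far more efficiently, across many channels and filters at once.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray, bias: float = 0.0) -> np.ndarray:
    """Slide `kernel` over a 2D `image` with stride 1 and no padding ("valid").

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel) + bias  # dot product of patch and filter
    return out


# Toy 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-picked vertical-edge filter; in a CNN these weights would be learned.
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)

feature_map = conv2d_valid(image, kernel)
print(feature_map.shape)  # (3, 3)
print(feature_map)        # every row is [3. 3. 0.]: strong response where the window spans the edge
```

A 5×5 input and a 3×3 filter give a 3×3 feature map; the filter responds strongly where its window straddles the dark-to-bright transition and not at all over the uniform bright region.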

Activation Functions:

Purpose: To introduce non-linearity into the model, allowing it to learn complex patterns.

Common Functions: ReLU (Rectified Linear Unit), Sigmoid, Tanh.
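For reference, the three activation functions named above are simple element-wise operations. A minimal NumPy sketch (the helper names are illustrative):

```python
import numpy as np


def relu(x):
    return np.maximum(0.0, x)         # zero for negative inputs, identity for positive ones


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))   # squashes any input into (0, 1)


def tanh(x):
    return np.tanh(x)                 # squashes into (-1, 1), centred on zero


z = np.array([-2.0, 0.0, 3.0])
print(relu(z))     # [0. 0. 3.]
print(sigmoid(z))  # sigmoid(0) is exactly 0.5
print(tanh(z))
```

ReLU is the usual choice inside CNNs because it is cheap to compute and does not saturate for positive inputs, whereas sigmoid and tanh flatten out at both ends.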

Pooling Layers:

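Purpose: To reduce the spatial dimensions of the feature maps, which reduces computation and can help control overfitting. Pooling also makes the representation somewhat robust to small shifts of features within the image.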

Types:

Max Pooling: Selects the maximum value from each region of the feature map.

Average Pooling: Calculates the average value from each region of the feature map.
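Both pooling types can be sketched in a few lines of NumPy. This assumes a single 2D feature map and non-overlapping 2×2 windows (stride equal to the window size), the most common setting; `pool2d` is a hypothetical helper, not a library function.

```python
import numpy as np


def pool2d(feature_map: np.ndarray, size: int = 2, mode: str = "max") -> np.ndarray:
    """Non-overlapping pooling (window = stride = `size`) over a 2D feature map.

    Rows/columns that do not fill a whole window are dropped ("valid" padding).
    """
    h, w = feature_map.shape
    h_out, w_out = h // size, w // size
    # Crop to a multiple of `size`, then view as (h_out, size, w_out, size) blocks.
    blocks = feature_map[:h_out * size, :w_out * size].reshape(h_out, size, w_out, size)
    if mode == "max":
        return blocks.max(axis=(1, 3))   # largest value in each window
    if mode == "avg":
        return blocks.mean(axis=(1, 3))  # mean value of each window
    raise ValueError(f"unknown mode: {mode}")


fm = np.array([[1, 3, 2, 0],
               [4, 2, 1, 5],
               [0, 1, 3, 2],
               [2, 2, 4, 6]], dtype=float)
print(pool2d(fm, mode="max"))  # values [[4, 5], [2, 6]]
print(pool2d(fm, mode="avg"))  # values [[2.5, 2.0], [1.25, 3.75]]
```

Either way, the 4×4 map shrinks to 2×2: a 75% reduction in the values passed to the next layer.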

Fully Connected Layers:

Purpose: To combine the features learned by convolutional and pooling layers and perform the final classification.

Operation: Flattens the feature maps into a single vector and passes it through one or more dense (fully connected) layers, the last of which typically uses softmax to output class probabilities.
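The flatten-then-dense step can be sketched in NumPy. The sketch assumes the last feature maps have shape 3×3×64 (what the final convolution in the TensorFlow example below produces for 28×28 inputs) and 10 output classes; the weights are random stand-ins for learned ones.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the output of the last conv/pool stage: 3x3 spatial, 64 channels.
feature_maps = rng.standard_normal((3, 3, 64))

# Flatten: 3 * 3 * 64 = 576 values in a single vector.
x = feature_maps.reshape(-1)

# Dense layer for 10 classes: weights (576, 10) and biases (10,).
# Random here; in a trained network they are learned.
W = rng.standard_normal((x.size, 10)) * 0.01
b = np.zeros(10)
logits = x @ W + b

# Softmax turns the logits into class probabilities that sum to 1.
probs = np.exp(logits - logits.max())   # subtracting the max avoids overflow
probs /= probs.sum()

print(x.shape, probs.shape)   # (576,) (10,)
print(int(np.argmax(probs)))  # index of the most probable class
```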

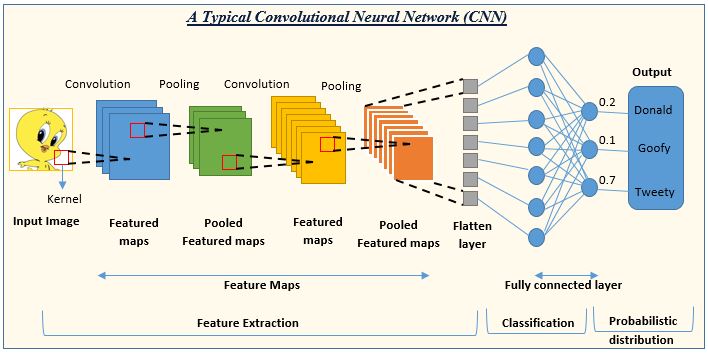

Example Architecture

A typical CNN architecture might look like this:

Input Layer: Takes in the raw image data.

Convolutional Layer: Applies multiple filters to the input image to produce feature maps.

Activation Layer: Applies a non-linear activation function like ReLU.

Pooling Layer: Reduces the spatial dimensions of the feature maps.

Convolutional Layer: Further extracts features from the reduced feature maps.

Activation Layer: Applies the non-linear activation function again.

Pooling Layer: Further reduces the dimensions.

Fully Connected Layer: Flattens the feature maps and processes them through a dense network.

Output Layer: Produces the final classification or prediction.
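Tracing tensor shapes through this architecture shows how each stage changes the data. The plain-Python sketch below assumes a 28×28×1 input, 3×3 convolutions with stride 1 and no padding, non-overlapping 2×2 pooling, and 32 then 64 filters (mirroring the TensorFlow example that follows); the helper names are hypothetical. Each spatial size follows the standard formula floor((n + 2·padding − kernel) / stride) + 1.

```python
def conv_out(n: int, k: int, stride: int = 1, pad: int = 0) -> int:
    """Output size along one spatial axis: floor((n + 2*pad - k) / stride) + 1."""
    return (n + 2 * pad - k) // stride + 1


def trace_shapes(h: int, w: int, c: int, stages):
    """Return the (height, width, channels) shape after each stage.

    `stages` holds ("conv", num_filters) or ("pool", window) tuples. Convolutions
    are assumed to be 3x3, stride 1, no padding; pooling is non-overlapping.
    Activation layers are skipped because they do not change the shape.
    """
    shapes = [("input", (h, w, c))]
    for kind, value in stages:
        if kind == "conv":
            h, w, c = conv_out(h, 3), conv_out(w, 3), value
        elif kind == "pool":
            h, w = conv_out(h, value, stride=value), conv_out(w, value, stride=value)
        else:
            raise ValueError(f"unknown stage: {kind}")
        shapes.append((kind, (h, w, c)))
    return shapes


stages = [("conv", 32), ("pool", 2), ("conv", 64), ("pool", 2)]
shapes = trace_shapes(28, 28, 1, stages)
for name, shape in shapes:
    print(f"{name:>5}: {shape}")
h, w, c = shapes[-1][1]
print(f"flatten: {h * w * c} values")  # flatten: 1600 values
```

The spatial size shrinks 28 → 26 → 13 → 11 → 5 while the channel count grows, so the fully connected layer receives a 1,600-value vector.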

Implementing a CNN in TensorFlow

Here is an example implementation of a basic CNN using TensorFlow:

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def create_cnn(input_shape, num_classes):
    """Build and compile a small CNN classifier."""
    model = models.Sequential()
    model.add(layers.Input(shape=input_shape))
    # Feature extractor: three 3x3 convolutions; the first two are followed by 2x2 max pooling
    model.add(layers.Conv2D(32, (3, 3), activation='relu'))
    model.add(layers.MaxPooling2D((2, 2)))
    model.add(layers.Conv2D(64, (3, 3), activation='relu'))
    model.add(layers.MaxPooling2D((2, 2)))
    model.add(layers.Conv2D(64, (3, 3), activation='relu'))
    # Classifier: flatten the feature maps, then two dense layers
    model.add(layers.Flatten())
    model.add(layers.Dense(64, activation='relu'))
    model.add(layers.Dense(num_classes, activation='softmax'))

    # Integer labels (0-9 for MNIST) pair with sparse categorical cross-entropy
    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])
    return model


# Example usage with the MNIST dataset
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

# Add a channel dimension (28x28 -> 28x28x1) and scale pixel values to [0, 1]
x_train = x_train.reshape((x_train.shape[0], 28, 28, 1)).astype('float32') / 255
x_test = x_test.reshape((x_test.shape[0], 28, 28, 1)).astype('float32') / 255

cnn_model = create_cnn((28, 28, 1), 10)
cnn_model.fit(x_train, y_train, epochs=5, batch_size=64,
              validation_data=(x_test, y_test))
```

In this implementation:

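A sequential model is created with three convolutional layers, the first two of which are each followed by a max-pooling layer; a flatten step and two dense layers then perform the classification.

The model is compiled with the Adam optimizer and sparse categorical cross-entropy loss, which expects integer class labels.

The MNIST dataset is used for training, and its test split is passed as validation data, so accuracy on unseen digits is reported after each epoch.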
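As a sanity check on the TensorFlow model above, its trainable parameters can be counted by hand. This plain-Python sketch assumes the same configuration (28×28×1 input, 3×3 kernels, no padding, 10 classes); the helper names are illustrative. If the arithmetic is right, the total should match what `cnn_model.summary()` reports.

```python
def conv_params(kernel: int, in_channels: int, filters: int) -> int:
    # kernel x kernel x in_channels weights per filter, plus one bias per filter
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(inputs: int, units: int) -> int:
    # one weight per input-unit pair, plus one bias per unit
    return (inputs + 1) * units


# Pooling and Flatten layers have no trainable parameters.
params = {
    "Conv2D 32 (input 28x28x1)": conv_params(3, 1, 32),
    "Conv2D 64 (input 13x13x32)": conv_params(3, 32, 64),
    "Conv2D 64 (input 5x5x64)": conv_params(3, 64, 64),
    "Dense 64 (input 3*3*64 = 576)": dense_params(3 * 3 * 64, 64),
    "Dense 10 (input 64)": dense_params(64, 10),
}
for name, count in params.items():
    print(f"{name:<30} {count:>7,}")
total = sum(params.values())
print(f"{'total':<30} {total:>7,}")  # the total comes to 93,322
```

Most of the parameters sit in the last convolution and the first dense layer; the first convolution needs only 320.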

Use Cases of CNNs

CNNs have a wide range of applications, including but not limited to:

Image Classification: Assigning a label to an entire image, e.g., recognizing handwritten digits.

Object Detection: Detecting and localizing objects within an image, e.g., face detection.

Segmentation: Partitioning an image into segments, e.g., medical image segmentation.

Video Analysis: Understanding and processing video data, e.g., action recognition.

Natural Language Processing: Text classification, sentiment analysis, and more, typically by applying one-dimensional convolutions over sequences of word embeddings.

Notable CNN Architectures

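LeNet-5: Developed by Yann LeCun and colleagues for handwritten digit recognition; it has 7 layers, not counting the input.

AlexNet: Introduced by Krizhevsky et al., with 5 convolutional and 3 fully connected layers, using ReLU activations.

VGG: A series of models (VGG-11, VGG-13, VGG-16, VGG-19) that study the effect of depth on performance, built from stacks of small 3×3 convolutions.

GoogLeNet (Inception): Introduced Inception modules, which apply convolutions with different filter sizes (1×1, 3×3, 5×5) and pooling in parallel and concatenate the results.

ResNet: Uses skip (residual) connections that add a block's input to its output, which eases gradient flow and makes very deep networks (over 100 layers) trainable.

DenseNet: Uses dense blocks, in which each layer receives the feature maps of all preceding layers in the block, and transition layers between blocks, improving information flow and feature reuse.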
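The connection patterns behind ResNet and DenseNet can be contrasted in a toy NumPy sketch. Here plain matrix multiplications on feature vectors stand in for convolutions, and batch normalization and downsampling are omitted; the function names, sizes and growth rate are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def relu(x):
    return np.maximum(0.0, x)


def residual_block(x, W1, W2):
    """ResNet-style block: relu(F(x) + x), where F(x) = W2 @ relu(W1 @ x)."""
    f = W2 @ relu(W1 @ x)   # residual branch; must keep x's shape
    return relu(f + x)      # skip connection adds the input back


def dense_block(x, weights):
    """DenseNet-style block: each layer sees all earlier outputs concatenated."""
    features = [x]
    for W in weights:
        new = relu(W @ np.concatenate(features))
        features.append(new)
    return np.concatenate(features)   # the block output keeps every feature


x = rng.standard_normal(8)

W1 = rng.standard_normal((8, 8)) * 0.1
W2 = rng.standard_normal((8, 8)) * 0.1
y = residual_block(x, W1, W2)
print(y.shape)   # (8,): same size as the input

growth_rate = 4   # new features added by each layer (illustrative value)
weights = [rng.standard_normal((growth_rate, 8 + i * growth_rate)) * 0.1 for i in range(3)]
z = dense_block(x, weights)
print(z.shape)   # (20,): 8 inputs + 3 layers x 4 new features
```

With the residual branch's last weights set to zero, the block passes non-negative inputs through unchanged, one intuition for why residual networks can be made very deep: each block only has to learn a correction to the identity. In the dense block, the feature count grows by the growth rate with every layer.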

Advances Beyond CNNs

Despite their success, CNNs have limitations: they do not explicitly model the spatial relationships between features or their pose (size, perspective, and orientation), and pooling discards some precise positional information. Capsule Networks (CapsNet) were proposed to address this. They use capsules, groups of neurons whose output vectors encode both whether a feature is present and its pose, to preserve these relationships.
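One concrete ingredient of capsule networks is the "squash" nonlinearity from Sabour, Frosst and Hinton's dynamic-routing paper (2017): v = (‖s‖² / (1 + ‖s‖²)) · (s / ‖s‖). It keeps the direction of a capsule's output vector, which encodes the entity's properties, while mapping its length into [0, 1) so the length can act as the probability that the entity is present. A minimal NumPy sketch, assuming a small `eps` added for numerical stability (not part of the published formula):

```python
import numpy as np


def squash(s: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """Capsule 'squash' nonlinearity: keeps the vector's direction and
    maps its length into [0, 1). `eps` avoids division by zero."""
    norm_sq = np.sum(s * s, axis=-1, keepdims=True)
    norm = np.sqrt(norm_sq + eps)
    return (norm_sq / (1.0 + norm_sq)) * (s / norm)


short_vec = squash(np.array([0.1, 0.0]))
long_vec = squash(np.array([10.0, 0.0]))
print(np.linalg.norm(short_vec))  # about 0.0099: weak evidence the entity is present
print(np.linalg.norm(long_vec))   # about 0.990: strong evidence the entity is present
```

Short vectors are shrunk almost to zero and long vectors are pushed just below length 1, while both keep pointing in their original direction.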

In summary, CNNs are a cornerstone of modern computer vision, providing powerful tools for various image-related tasks. Their design principles and architectural innovations continue to inspire new research and applications in deep learning.


Written by

Osen Muntu


Osen Muntu, AI Engineer and Flutter Developer, passionate about artificial intelligence and mobile app development. With expertise in machine learning, deep learning, natural language processing, computer vision, and Flutter, I dedicate myself to building intelligent systems and creating beautiful, functional apps. Through my blog, I share my knowledge and experiences, offering insights into AI trends, development tips, and tutorials to inspire and educate fellow tech enthusiasts. Let's connect and drive innovation together!